Loop Optimizations in LLVM: The Good, The Bad, and The Ugly

Michael Kruse, Hal Finkel
Argonne Leadership Computing Facility, Argonne National Laboratory
18th October 2018

Acknowledgments
This research was supported by the Exascale Computing Project (17-SC-20-SC), a collaborative effort of two U.S. Department of Energy organizations (Office of Science and the National Nuclear Security Administration) responsible for the planning and preparation of a capable exascale ecosystem, including software, applications, hardware, advanced system engineering, and early testbed platforms, in support of the nation’s exascale computing imperative. This research used resources of the Argonne Leadership Computing Facility, which is a DOE Office of Science User Facility supported under Contract DE-AC02-06CH11357.

Table of Contents
1. Why Loop Optimizations in the Compiler?
2. The Good
3. The Bad
4. The Ugly
5. The Solution (?)

1. Why Loop Optimizations in the Compiler?

Loop Transformations in the Compiler?
Approaches:
- Compiler-based
  - Automatic (Polly, …)
  - Language extensions (OpenMP, OpenACC, …): prescriptive or descriptive
- New languages (Chapel, X10, Fortress, UPC, …)
- Library-based
  - Hand-optimized (MKL, OpenBLAS, …)
  - Templates (RAJA, Kokkos, HPX, Halide, …)
  - Embedded DSL (Tensor Comprehensions, …)
- Domain-Specific Languages and Compilers (QIRAL, SPIRAL, LIFT, SQL, ...)
- Source-to-Source (PLuTo, ROSE, PPCG, …)

Partial Unrolling
Why?
- Optimization heuristics
- Compiler pragmas (https://arxiv.org/abs/1805.03374)
- Loop autotuning (https://github.com/kavon/atJIT)

Source:

    #pragma unroll 4
    for (int i = 0; i < n; i += 1)
      Stmt(i);

After partial unrolling by 4:

    if (n > 0) {
      for (int i = 0; i+3 < n; i += 4) {
        Stmt(i);
        Stmt(i + 1);
        Stmt(i + 2);
        Stmt(i + 3);
      }
      switch (n % 4) {
      case 3:
        Stmt(n - 3);
      case 2:
        Stmt(n - 2);
      case 1:
        Stmt(n - 1);
      }
    }
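The unrolled form on this slide relies on the `switch` falling through from `case 3` to `case 1` to run the remainder iterations. A minimal sketch for checking that claim follows; the trace buffer, `MAX_CALLS`, and the helper names `run_rolled`, `run_unrolled4`, and `same_trace` are assumptions made for illustration and are not part of the talk.

```c
#include <stddef.h>

#define MAX_CALLS 64

/* Hypothetical trace buffer standing in for the side effects of Stmt(i). */
static int trace[MAX_CALLS];
static size_t trace_len;

static void Stmt(int i) {
    if (trace_len < MAX_CALLS)
        trace[trace_len++] = i;
}

/* Reference loop: the loop as written in the source. */
static void run_rolled(int n) {
    trace_len = 0;
    for (int i = 0; i < n; i += 1)
        Stmt(i);
}

/* Partially unrolled by 4; the switch handles the n % 4 leftover iterations. */
static void run_unrolled4(int n) {
    trace_len = 0;
    if (n > 0) {
        for (int i = 0; i + 3 < n; i += 4) {
            Stmt(i);
            Stmt(i + 1);
            Stmt(i + 2);
            Stmt(i + 3);
        }
        switch (n % 4) {
        case 3: Stmt(n - 3); /* fall through */
        case 2: Stmt(n - 2); /* fall through */
        case 1: Stmt(n - 1);
        }
    }
}

/* Returns 1 if both variants call Stmt with the same arguments in the same order. */
static int same_trace(int n) {
    int expected[MAX_CALLS];
    run_rolled(n);
    size_t len = trace_len;
    for (size_t k = 0; k < len; k++)
        expected[k] = trace[k];
    run_unrolled4(n);
    if (trace_len != len)
        return 0;
    for (size_t k = 0; k < len; k++)
        if (trace[k] != expected[k])
            return 0;
    return 1;
}
```

Calling `same_trace(n)` for a range of small `n` (including values that are not multiples of 4) compares the two call sequences element by element.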

Compiler-Supported Pragmas
- Clang: #pragma unroll, #pragma clang loop unroll(enable), #pragma unroll_and_jam, #pragma clang loop distribute(enable), #pragma clang loop vectorize(enable), #pragma clang loop interleave(enable)
- gcc: #pragma GCC unroll, #pragma GCC ivdep
- msvc: #pragma loop(hint_parallel(0)), #pragma loop(no_vector), #pragma loop(ivdep)
- Cray: #pragma _CRI unroll, #pragma _CRI collapse, #pragma _CRI interchange, #pragma _CRI blockingsize, #pragma _CRI nofission, #pragma _CRI fusion
- OpenMP: #pragma omp for, #pragma omp target, #pragma omp simd
- OpenACC: #pragma acc kernels
- icc, xlc, PGI, HP, Oracle Developer Studio, SGI/Open64: #pragma offload, #pragma loopid, #pragma fuse, #pragma fission, #pragma blocking size, #pragma altcode, #pragma noinvarif, #pragma mem prefetch, #pragma interchange, #pragma ivdep, #pragma parallel, #pragma unroll_and_jam, #pragma stream_unroll, #pragma pipeloop, #pragma NODEPCHK, #pragma IVDEP, #pragma IF_CONVERT, #pragma UNROLL_FACTOR, #pragma nomemorydepend, #pragma nofusion, #pragma loop_count(n), #pragma swp, #pragma vector, #pragma simd, #pragma distribute_point, #pragma block_loop, #pragma unrollandfuse, #pragma nodepchk, #pragma concur

Compiler Loop Transformations are Here to Stay

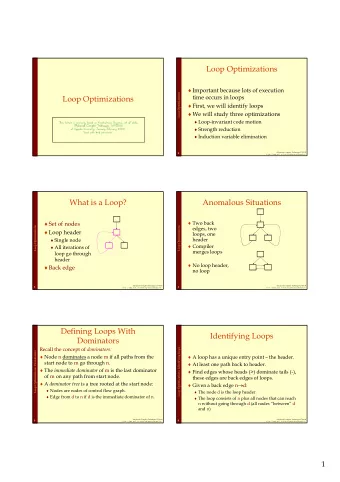

2. The Good
- Available Loop Transformations
- Available Pragmas
- Available Infrastructure

Supported Loop Transformations
Available passes:
- Loop Unroll (-and-Jam)
- Loop Unswitching
- Loop Interchange
- Detection of memcpy, memset idioms
- Delete side-effect free loops
- Loop Distribution
- Loop Vectorization
Modular: passes can be switched on and off independently
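As an illustration of the "memcpy, memset idioms" item, the two loops below have the shape that idiom recognition typically targets. This is a sketch only: the function names are hypothetical, and whether a given compiler version and optimization level actually replaces the loops with library calls is not something the slide states.

```c
#include <stddef.h>

/* A byte-wise fill loop: a candidate for being recognized as a memset. */
void zero_fill(unsigned char *dst, size_t n) {
    for (size_t i = 0; i < n; i += 1)
        dst[i] = 0;
}

/* A byte-wise copy loop: a candidate for being recognized as a memcpy.
   restrict promises the compiler that dst and src do not overlap. */
void copy_bytes(unsigned char *restrict dst,
                const unsigned char *restrict src, size_t n) {
    for (size_t i = 0; i < n; i += 1)
        dst[i] = src[i];
}
```

Inspecting the optimized IR or assembly for these functions is one way to see whether the idiom was detected.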

Supported Pragmas
- #pragma clang loop unroll / #pragma unroll
- #pragma unroll_and_jam
- #pragma clang loop vectorize(enable) / #pragma omp simd
- #pragma clang loop interleave(enable)
- #pragma clang loop distribute(enable)
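A short sketch of how these pragmas are attached to loops in C source. The functions `saxpy`, `sum`, and `two_streams` are illustrative names, not from the talk. The pragmas are hints: a compiler that does not know them will usually just warn and ignore them, so the code computes the same result either way.

```c
#include <stddef.h>

/* Request vectorization and interleaving of an element-wise loop. */
void saxpy(size_t n, float a, const float *restrict x, float *restrict y) {
#pragma clang loop vectorize(enable) interleave(enable)
    for (size_t i = 0; i < n; i += 1)
        y[i] += a * x[i];
}

/* Request partial unrolling by a factor of 4. */
long sum(size_t n, const int *v) {
    long total = 0;
#pragma unroll 4
    for (size_t i = 0; i < n; i += 1)
        total += v[i];
    return total;
}

/* Request loop distribution: the two statements are independent of each
   other, so they could be split into two separate loops. */
void two_streams(size_t n, int *restrict a, int *restrict b) {
#pragma clang loop distribute(enable)
    for (size_t i = 1; i < n; i += 1) {
        a[i] = a[i - 1] + 1; /* loop-carried dependence */
        b[i] = 2 * b[i];     /* no loop-carried dependence */
    }
}
```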

Canonical Loop Form
- Loop-rotated form (at least one iteration)
  - Can hoist invariant loads
- Loop-Closed SSA
[Figure: loop control-flow graph with Pre-Header, Header, Exiting block, Latch, and Backedge]
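The link between loop rotation and hoisting invariant loads can be sketched at the source level. In the rotated form a guard ensures the body runs at least once, so a load that the body always performs can be moved in front of the loop without introducing a load that the original program would never have executed. The function names and the `scale` parameter are assumptions for illustration; the actual passes work on LLVM IR, not on C.

```c
/* Before rotation: the exit test sits in the header, so the body may run
   zero times and *scale may never be read. */
long sum_scaled_while(const int *a, int n, const int *scale) {
    long sum = 0;
    int i = 0;
    while (i < n) {
        sum += (long)a[i] * *scale; /* invariant load inside the loop */
        i += 1;
    }
    return sum;
}

/* After rotation: a guard plus a do-while loop. */
long sum_scaled_rotated(const int *a, int n, const int *scale) {
    long sum = 0;
    if (0 < n) {        /* guard: the loop is entered only if it iterates */
        int s = *scale; /* hoisted load, placed where a pre-header would be */
        int i = 0;
        do {
            sum += (long)a[i] * s;
            i += 1;
        } while (i < n); /* latch: exit test and backedge */
    }
    return sum;
}
```

With `n == 0` the rotated version never dereferences `scale`, which matches the behavior of the original loop.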

Available Infrastructure
- Analysis passes:
  - LoopInfo
  - ScalarEvolution / PredicatedScalarEvolution
- Preparation passes:
  - LoopRotate
  - LoopSimplify
  - IndVarSimplify
- Transformations:
  - LoopVersioning

3. The Bad
- Disabled Loop Passes
- Pipeline Inflexibility
- Loop Structure Preservation
- Scalar Code Movement
- Writing a Loop Pass is Hard

Clang/LLVM/Polly Compiler Pipeline
[Figure: source.c (void f() { for (int i=...) ) → Clang (Preprocessor, Lexer, Parser, Semantic Analyzer, IR Generation) → IR with loop metadata → LLVM (Canonicalization passes, Loop optimization passes, Polly, LoopVectorize, …, Late Mid-End passes, Target Backend) → Assembly]

Unavailable Loop Passes
- Many transformations disabled by default
- Experimental / not yet matured
[Figure: Clang (CGOpenMPRuntime) → IR → loop passes: (Simple-)LoopUnswitch, LoopDeletion, LoopIdiom, LoopInterchange, LoopFullUnroll, LoopReroll, LoopVersioningLICM, LoopDistribute, LoopVectorize, LoopLoadElimination, LoopUnrollAndJam, LoopUnroll, …]

Static Loop Pipeline
- Fixed transformation order
- OpenMP outlining happens first
  - Difficult to optimize afterwards
- May conflict with source directives:

    #pragma distribute
    #pragma interchange
    for (int i = 1; i < n; i+=1)
      for (int j = 0; j < m; j+=1) {
        A[i][j] = i + j;
        B[i][j] = A[i-1][j];
      }

OpenMP proposal: https://arxiv.org/abs/1805.03374
[Figure: Clang (CGOpenMPRuntime) → IR → loop passes: (Simple-)LoopUnswitch, LoopDeletion, LoopIdiom, LoopInterchange, LoopFullUnroll, LoopReroll, LoopVersioningLICM, LoopDistribute, LoopVectorize, LoopLoadElimination, LoopUnrollAndJam, LoopUnroll, …]

Composition of Transformations

Reverse first, then unroll:

    #pragma unroll 2
    #pragma reverse
    for (int i = 0; i < 128; i+=1)
      Stmt(i);

after reversal:

    #pragma unroll 2
    for (int i = 127; i >= 0; i-=1)
      Stmt(i);

after unrolling:

    for (int i = 127; i >= 0; i-=2) {
      Stmt(i);
      Stmt(i-1);
    }

Unroll first, then reverse:

    #pragma reverse
    #pragma unroll 2
    for (int i = 0; i < 128; i+=1)
      Stmt(i);

after unrolling:

    #pragma reverse
    for (int i = 0; i < 128; i+=2) {
      Stmt(i);
      Stmt(i+1);
    }

after reversal:

    for (int i = 126; i >= 0; i-=2) {
      Stmt(i);
      Stmt(i+1);
    }

The two orders execute the statements in different sequences, so the order in which transformations are applied matters.
https://reviews.llvm.org/D49281
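The difference between the two compositions can be made concrete by recording the order in which `Stmt` is called. This is a sketch under assumptions: `Trace` and the two function names are hypothetical, and `Stmt` takes an extra trace argument so that the example is self-contained.

```c
enum { N = 128 };

/* Hypothetical recorder standing in for Stmt(i): appends i to a trace. */
typedef struct {
    int calls[N];
    int len;
} Trace;

static void Stmt(Trace *t, int i) {
    t->calls[t->len++] = i;
}

/* Result of applying "reverse", then "unroll 2". */
void reverse_then_unroll(Trace *t) {
    t->len = 0;
    for (int i = N - 1; i >= 0; i -= 2) {
        Stmt(t, i);
        Stmt(t, i - 1);
    }
}

/* Result of applying "unroll 2", then "reverse". */
void unroll_then_reverse(Trace *t) {
    t->len = 0;
    for (int i = N - 2; i >= 0; i -= 2) {
        Stmt(t, i);
        Stmt(t, i + 1);
    }
}
```

The first variant visits 127, 126, 125, ... while the second visits 126, 127, 124, 125, ..., so both cover every iteration exactly once but in different orders.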

Non-Loop Passes Between Loop Passes
- Non-loop passes may destroy canonical loop structure
  - SimplifyCFG removes empty loop headers
    - keeps a list of loop headers
    - LoopSimplifyCFG only merges blocks within loop
    - Fixed in r343816
  - JumpThreading skips exiting blocks
    - has an integrated loop header detection
    - makes ScalarEvolution not recognize the loop
    - Fixed in r312664(?)
  - Bit-operations created by InstCombine must be understood by ScalarEvolution
- Analysis invalidation / Extra work in non-loop passes
[Figure: excerpt of the pass pipeline: …, SimplifyCFG, Reassociate, LoopInfo, LoopSimplify, LCSSA, LoopRotate, LICM, LoopUnswitch, SimplifyCFG, InstCombine, LoopInfo, LoopSimplify, LCSSA, IndVarSimplify, LoopIdiom, LoopDeletion, …]

Instruction Movement vs. Loop Transformations
Scalar transformations making loop optimizations harder:
- Loop-Invariant Code Motion
- Global Value Numbering
- Loop-Closed SSA
- InstCombine
[Figure: excerpt of the pass pipeline: …, SimplifyCFG, Reassociate, LoopInfo, LoopSimplify, LCSSA, LoopRotate, LICM, LoopUnswitch, SimplifyCFG, InstCombine, LoopInfo, LoopSimplify, LCSSA, IndVarSimplify, LoopIdiom, LoopDeletion, …]

Scalar/Loop Pass Interaction: Loop Nest Baking-In

Original loop nest:

    for (int i=0; i<n; i+=1)
      for (int j=0; j<m; j+=1)
        A[i] += i*B[j];

After LICM (Register Promotion):

    for (int i=0; i<n; i+=1) {
      tmp = A[i];
      for (int j=0; j<m; j+=1)
        tmp += i*B[j];
      A[i] = tmp;
    }

Original loop nest after Loop Interchange:

    for (int j=0; j<m; j+=1)
      for (int i=0; i<n; i+=1)
        A[i] += i*B[j];

Followed by GVN (LoadPRE):

    for (int j=0; j<m; j+=1) {
      tmp = B[j];
      for (int i=0; i<n; i+=1)
        A[i] += i*tmp;
    }
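The variants on this slide all compute the same values; what changes is the shape of the nest. Once a scalar pass has placed `tmp` between the two loop headers, the nest is no longer perfectly nested, which is presumably what "baking in" the loop order refers to. The sketch below writes the variants as C functions so they can be compared. The function names are hypothetical, and `restrict` encodes the assumption that `A` and `B` do not overlap.

```c
/* The loop nest as written. */
void nest_original(int n, int m, int *restrict A, const int *restrict B) {
    for (int i = 0; i < n; i += 1)
        for (int j = 0; j < m; j += 1)
            A[i] += i * B[j];
}

/* After LICM register promotion: A[i] lives in tmp across the inner loop,
   so statements now sit between the two loop headers. */
void nest_after_licm(int n, int m, int *restrict A, const int *restrict B) {
    for (int i = 0; i < n; i += 1) {
        int tmp = A[i];
        for (int j = 0; j < m; j += 1)
            tmp += i * B[j];
        A[i] = tmp;
    }
}

/* After interchange followed by GVN load PRE: B[j] is loaded once per
   outer iteration of the interchanged nest. */
void nest_after_interchange_gvn(int n, int m, int *restrict A,
                                const int *restrict B) {
    for (int j = 0; j < m; j += 1) {
        int tmp = B[j];
        for (int i = 0; i < n; i += 1)
            A[i] += i * tmp;
    }
}
```

Running all three on the same input and comparing `A` shows that each scalar optimization is correct on its own, while each result suits only one of the two loop orders.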

Non-Shared Infrastructure
- Dependence analysis (not passes that can be preserved!):
  - LoopAccessInfo (LoopDistribute, LoopVectorize, LoopLoadElimination)
  - LoopInterchangeLegality (LoopInterchange)
  - MemoryDependenceAnalysis (LoopIdiom)
  - MemorySSA (LICM, LoopInstSimplify)
  - PolyhedralInfo
- Profitability:
  - LoopInterchangeProfitability
  - LoopVectorizationCostModel
  - UnrolledInstAnalyzer
- Code transformation
[Figure: Clang (CGOpenMPRuntime) → IR → loop passes: (Simple-)LoopUnswitch, LoopDeletion, LoopIdiom, LoopInterchange, LoopFullUnroll, LoopReroll, LoopVersioningLICM, LoopDistribute, LoopVectorize, LoopLoadElimination, LoopUnrollAndJam, LoopUnroll, …]

Loop-Closed SSA Form

Before:

    for (int i = 0; i < n; i+=1)
      for (int j = 0; j < m; j+=1)
        sum += i*j;
    use(sum);

After LCSSA:

    for (int i = 0; i < n; i+=1) {
      for (int j = 0; j < m; j+=1) {
        sum += i*j;
      }
      sumj = sum;
    }
    sumi = sumj;
    use(sumi);

- Allows referencing the loop’s exit value
  - Otherwise need to pass the loop every time
- Adds spurious dependencies
- Makes some (non-innermost) loop transformations more complicated
