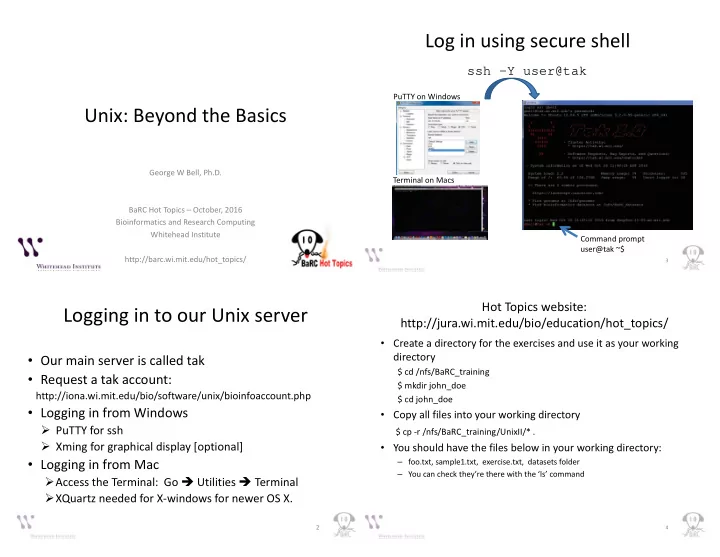

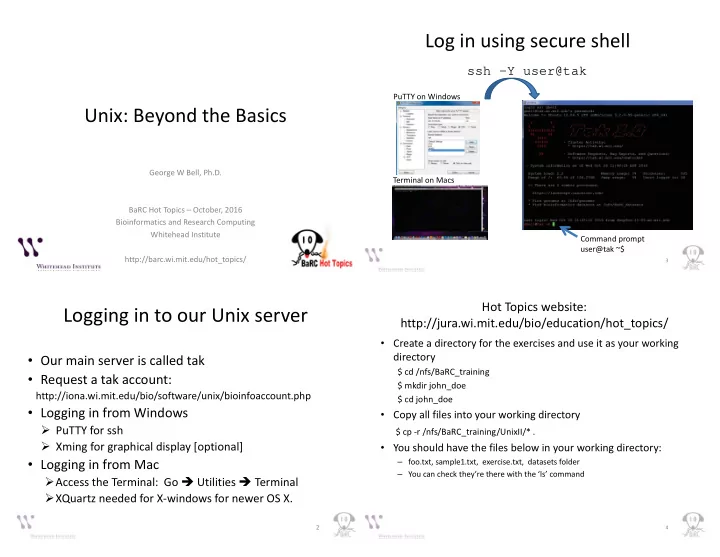

**Unix: Beyond the Basics**

George W Bell, Ph.D.

BaRC Hot Topics, October 2016

Bioinformatics and Research Computing, Whitehead Institute

http://barc.wi.mit.edu/hot_topics/

**Logging in to our Unix server**

Hot Topics website: http://jura.wi.mit.edu/bio/education/hot_topics/

- Our main server is called tak
- Request a tak account: http://iona.wi.mit.edu/bio/software/unix/bioinfoaccount.php
- Logging in from Windows
  - PuTTY for ssh
  - Xming for graphical display [optional]
- Logging in from Mac
  - Access the Terminal: Go → Utilities → Terminal
  - XQuartz is needed for X-windows on newer versions of OS X

**Log in using secure shell**

- `ssh -Y user@tak` (PuTTY on Windows, Terminal on Macs); `-Y` enables X11 forwarding for graphical programs
- Command prompt: `user@tak ~$`

- Create a directory for the exercises and use it as your working directory:
  - `$ cd /nfs/BaRC_training`
  - `$ mkdir john_doe`
  - `$ cd john_doe`
- Copy all files into your working directory:
  - `$ cp -r /nfs/BaRC_training/UnixII/* .`
- You should have the files below in your working directory:
  - foo.txt, sample1.txt, exercise.txt, datasets folder
  - You can check they're there with the `ls` command
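The `ls` check above can also be scripted. The sketch below defines `check_exercise_files`, a hypothetical helper that is not part of the course materials. It assumes only the file and folder names listed on the slide. It prints one line per missing item and returns a non-zero status if anything is absent.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the course materials):
# report which expected exercise files are missing.
# Usage: check_exercise_files [directory]   (default: current directory)
# Returns 0 if everything is present, 1 otherwise.
check_exercise_files() {
    local dir="${1:-.}"
    local missing=0
    local f
    # Regular files listed on the slide
    for f in foo.txt sample1.txt exercise.txt; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing file: $f"
            missing=1
        fi
    done
    # The datasets folder
    if [ ! -d "$dir/datasets" ]; then
        echo "missing folder: datasets"
        missing=1
    fi
    return "$missing"
}
```

After the `cp` step you could run `check_exercise_files && echo "all exercise files present"` from your working directory.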

**Unix Review: Commands**

- General form: `command [arg1 arg2 … ] [input1 input2 … ]`
- Sort numerically in reverse order on a key that starts at field 2 and ends at field 3: `$ sort -k2,3nr foo.tab`
  - `-n` or `-g`: `-n` is recommended; use `-g` for scientific notation or a leading '+'
  - `-r`: reverse order
- Select fields: `$ cut -f1,5 foo.tab` or `$ cut -f1-5 foo.tab`
  - `-f`: select only these fields
  - `-f1,5`: select the 1st and 5th fields
  - `-f1-5`: select the 1st, 2nd, 3rd, 4th, and 5th fields
- How many lines are in this file? `$ wc -l foo.txt`

**Unix Review: Common Mistakes**

- Unix is case sensitive:
  - `cd /nfs/Barc_Public` vs `cd /nfs/BaRC_Public`
  - `-bash: cd: /nfs/Barc_Public: No such file or directory`
- Spaces may matter!
  - `rm -f myFiles*` removes the files whose names start with "myFiles"
  - `rm -f myFiles *` removes a file named "myFiles" and every file in the current directory
- Office applications can convert text to special characters that Unix won't understand
  - Ex: smart quotes, dashes

**Unix Review: Pipes**

- Stream the output of one command/program as input for another
  - Avoid intermediate file(s)
- `$ cut -f 1 myFile.txt | sort | uniq -c > uniqCounts.txt` (each `|` is a pipe)

**What we will discuss today**

- Aliases (to reduce typing)
- sed (for file manipulation)
- awk/bioawk (to filter by column)
- groupBy (bedtools; not typical Unix)
- join (merge files)
- loops (one-line and with shell scripts)
- scripting (to streamline commands)
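To see what the pipe saves, the sketch below runs the `cut | sort | uniq -c` pipeline from the Pipes slide twice on a tiny made-up `myFile.txt`. The tab-delimited sample data is hypothetical. The first version writes intermediate files. The second uses pipes. Both should give identical counts.

```shell
#!/usr/bin/env bash
# Sketch: the Pipes-slide pipeline on hypothetical sample data.
workdir=$(mktemp -d)
cd "$workdir"

# Hypothetical tab-delimited input: chromosome, position
printf 'chr1\t100\nchr2\t200\nchr1\t300\nchr3\t400\nchr1\t500\n' > myFile.txt

# Without pipes: each step writes an intermediate file
cut -f 1 myFile.txt > col1.txt
sort col1.txt > col1.sorted.txt
uniq -c col1.sorted.txt > uniqCounts.noPipe.txt

# With pipes: same result, no intermediate files
cut -f 1 myFile.txt | sort | uniq -c > uniqCounts.txt

# Each line is a count followed by the value (chr1 appears 3 times)
cat uniqCounts.txt
```

`uniq -c` only merges adjacent duplicate lines, which is why `sort` comes before it in the pipeline.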

**Regular Expressions**

- Pattern matching makes searching easier
- Commonly used regular expressions:

| Character | Matches |
|---|---|
| `.` | Any single character |
| `*` | Zero or more of the preceding item (as a shell wildcard: any string) |
| `+` | One or more |
| `?` | Zero or one (as a shell wildcard: exactly one character) |
| `^` | Beginning of a line |
| `$` | End of a line |
| `[ab]` | Any character in brackets |

- Examples
  - List all txt files (shell wildcard): `ls *.txt`
  - Replace CHR with Chr at the beginning of each line: `$ sed 's/^CHR/Chr/' myFile.txt`
  - Delete a dot followed by one or more digits: `$ sed 's/\.[0-9]\+//g' myFile.txt`
- Note: regular expression syntax may differ slightly between sed, awk, the Unix shell, and Perl
  - Ex: `\+` in sed is equivalent to `+` in Perl

**sed: stream editor for filtering and transforming text**

- Print lines 10-15: `$ sed -n '10,15p' bigFile > selectedLines.txt`
- Delete 5 header lines at the beginning of a file: `$ sed '1,5d' file > fileNoHeader`
- Remove all version numbers (e.g., '.1') from the end of a list of sequence accessions, e.g., NM_000035.2: `$ sed 's/\.[0-9]\+//g' accsWithVersion > accsOnly`
  - `s`: substitute
  - `g`: global modifier (change all matches on a line)

**Aliases**

- Add a one-word link to a longer command
- To list current aliases (e.g., those defined in ~/.bashrc): `alias`
- Create a new alias (two examples):
  - `alias sp='cd /lab/solexa_public/Reddien'`
  - `alias CollectRnaSeqMetrics='java -jar /usr/local/share/picard-tools/CollectRnaSeqMetrics.jar'`
- Make an alias permanent:
  - Paste the command(s) in ~/.bashrc

**awk**

- Name comes from the original authors: Alfred V. Aho, Peter J. Weinberger, Brian W. Kernighan
- A simple programming language
- Good for filtering/manipulating multiple-column files
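The sed commands above can be tried on small made-up files before using them on real data. In the sketch below, `accsWithVersion` and `bigFile` are hypothetical samples. One assumption to check on your system: `\+` in a basic regular expression is a GNU sed extension and may not work with BSD/macOS sed. There, the extended-regex form `sed -E 's/\.[0-9]+//g'` is the usual spelling.

```shell
#!/usr/bin/env bash
# Sketch: the sed slide commands on small hypothetical files.
workdir=$(mktemp -d)
cd "$workdir"

# Hypothetical accession list with version suffixes
printf 'NM_000035.2\nNM_001101.5\nNR_046018.2\n' > accsWithVersion

# Delete a literal dot followed by one or more digits (GNU sed BRE syntax)
sed 's/\.[0-9]\+//g' accsWithVersion > accsOnly
# Extended-regex spelling of the same substitution
sed -E 's/\.[0-9]+//g' accsWithVersion
cat accsOnly

# Hypothetical 20-line file: "line 1" .. "line 20"
seq 1 20 | sed 's/^/line /' > bigFile

sed -n '10,15p' bigFile > selectedLines.txt   # keep only lines 10-15
sed '1,5d' bigFile > fileNoHeader            # drop lines 1-5

# ^ anchors the match at the start of the line, so the second CHR is untouched
printf 'CHR1\tCHR1_gene\n' | sed 's/^CHR/Chr/'
```

Without `-n`, sed prints every line. That is why the line-range example needs both `-n` and the `p` command.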

**awk**

- By default, awk splits each line on white space (runs of spaces and tabs)
- Print the 2nd and 1st fields of the file: `$ awk '{ print $2"\t"$1 }' foo.tab`
- Convert sequences from tab-delimited format to FASTA format:
  - `$ head -1 foo.tab` → `Seq1 ACTGCATCAC`
  - `$ awk '{ print ">" $1 "\n" $2 }' foo.tab > foo.fa`
  - `$ head -2 foo.fa` → `>Seq1` / `ACTGCATCAC`

**awk: field separator**

- Issues with the default separator (white space):
  - one field is a gene description with multiple words
  - consecutive empty cells
- To use tab as the separator:
  - `$ awk -F "\t" '{ print NF }' foo.txt`
  - or `$ awk 'BEGIN {FS="\t"} { print NF }' foo.txt`

| Character | Description |
|---|---|
| `\n` | newline |
| `\r` | carriage return |
| `\t` | horizontal tab |

- BEGIN: action before reading input
- END: action after reading input
- NF: number of fields in the current record
- FS: input field separator
- OFS: output field separator

**awk: arithmetic operations**

- Add the average of the 4th and 5th fields to each line of the file: `$ awk '{ print $0 "\t" ($4+$5)/2 }' foo.tab`
  - `$0`: all fields (the whole line)

| Operator | Description |
|---|---|
| `+` | Addition |
| `-` | Subtraction |
| `*` | Multiplication |
| `/` | Division |
| `%` | Modulo |
| `^` | Exponentiation |
| `**` | Exponentiation (not available in every awk version) |

**awk: making comparisons**

- Print records if the value in the 4th or 5th field is above 4: `$ awk '{ if( $4>4 || $5>4 ) print $0 }' foo.tab`

| Sequence | Description |
|---|---|
| `>` | Greater than |
| `<` | Less than |
| `<=` | Less than or equal to |
| `>=` | Greater than or equal to |
| `==` | Equal to |
| `!=` | Not equal to |
| `~` | Matches |
| `!~` | Does not match |
| `\|\|` | Logical OR |
| `&&` | Logical AND |
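The field-separator issues above can be made concrete with one hypothetical tab-delimited record. It has an ID, a multi-word description, an empty cell, and two numeric columns. The sketch counts fields with and without `-F "\t"`. It then reuses the arithmetic and comparison examples with the tab separator set.

```shell
#!/usr/bin/env bash
# Sketch: why the field separator matters (hypothetical sample record).
workdir=$(mktemp -d)
cd "$workdir"
printf 'Seq1\tzinc finger protein\t\t5\t3\n' > demo.tab

# Default separator (runs of white space): the description is split
# into three fields and the empty cell disappears -> 6 fields
awk '{ print NF }' demo.tab

# Tab separator: one field per column, empty cell kept -> 5 fields
awk -F "\t" '{ print NF }' demo.tab

# With FS set to tab, $4 and $5 are the numeric columns.
# OFS is the separator print inserts between comma-separated items.
awk 'BEGIN { FS = "\t"; OFS = "\t" } { print $1, ($4 + $5) / 2 }' demo.tab

# Comparison: print the record only if field 4 or field 5 is above 4
awk -F "\t" '$4 > 4 || $5 > 4' demo.tab
```

With the default separator, `$4` on this record would be the word "protein" rather than a number. This is the kind of silent error the tab separator avoids.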

**awk**

- Conditional statements: display expression levels for the gene NANOG:
  - `$ awk '{ if(/NANOG/) print $0 }' foo.txt`
  - or `$ awk '/NANOG/ { print $0 }' foo.txt`
  - or `$ awk '/NANOG/' foo.txt`
- Add line numbers to the above output: `$ awk '/NANOG/ { print NR"\t"$0 }' foo.txt`
  - NR: line number of the current row
- Looping: calculate the average expression (4th, 5th, and 6th fields in this case) for each transcript:
  - `$ awk '{ total= $4 + $5 + $6; avg=total/3; print $0"\t"avg}' foo.txt`
  - or `$ awk '{ total=0; for (i=4; i<=6; i++) total=total+$i; avg=total/3; print $0"\t"avg }' foo.txt`

**bioawk\***

- Extension of awk for commonly used file formats in bioinformatics
- `$ bioawk -c help` lists the named fields for each input format:

| Format | Fields |
|---|---|
| bed | 1:chrom 2:start 3:end 4:name 5:score 6:strand 7:thickstart 8:thickend 9:rgb 10:blockcount 11:blocksizes 12:blockstarts |
| sam | 1:qname 2:flag 3:rname 4:pos 5:mapq 6:cigar 7:rnext 8:pnext 9:tlen 10:seq 11:qual |
| vcf | 1:chrom 2:pos 3:id 4:ref 5:alt 6:qual 7:filter 8:info |
| gff | 1:seqname 2:source 3:feature 4:start 5:end 6:score 7:filter 8:strand 9:group 10:attribute |
| fastx | 1:name 2:seq 3:qual 4:comment |

\*https://github.com/lh3/bioawk

**bioawk: Examples**

- Print transcript info and chromosome from a GFF/GTF file (2 ways):
  - `$ bioawk -c gff '{print $group "\t" $seqname}' Homo_sapiens.GRCh37.75.canonical.gtf`
  - or `$ bioawk -c gff '{print $9 "\t" $1}' Homo_sapiens.GRCh37.75.canonical.gtf`
  - Sample output:
    - `gene_id "ENSG00000223972"; transcript_id "ENST00000518655"; chr1`
    - `gene_id "ENSG00000223972"; transcript_id "ENST00000515242"; chr1`
- Convert a FASTQ file into FASTA (2 ways):
  - `$ bioawk -c fastx '{print ">" $name "\n" $seq}' sequences.fastq`
  - or `$ bioawk -c fastx '{print ">" $1 "\n" $2}' sequences.fastq`

**Summarize by Columns: groupBy (from bedtools)**

- Input file must be pre-sorted by grouping column(s)!
- `-g grpCols`: column(s) for grouping
- `-c opCols`: column(s) to be summarized
- `-o`: operation(s) applied to opCols: sum, count, min, max, mean, median, stdev, collapse (comma-separated list), distinct (non-redundant comma-separated list)

Example input:

| !Ensembl Gene ID | !Ensembl Transcript ID | !Symbol |
|---|---|---|
| ENSG00000281518 | ENST00000627423 | FOXO6 |
| ENSG00000281518 | ENST00000630406 | FOXO6 |
| ENSG00000280680 | ENST00000625523 | HHAT |
| ENSG00000280680 | ENST00000627903 | HHAT |
| ENSG00000280680 | ENST00000626327 | HHAT |
| ENSG00000281614 | ENST00000629761 | INPP5D |
| ENSG00000281614 | ENST00000630338 | INPP5D |

- Print the gene ID (1st column), the gene symbol (3rd column), and a list of transcript IDs (2nd column):
  - `$ sort -k1,1 Ensembl_info.txt | groupBy -g 1 -c 3,2 -o distinct,collapse`

Partial output:

| !Ensembl Gene ID | !Symbol | !Ensembl Transcript ID |
|---|---|---|
| ENSG00000281518 | FOXO6 | ENST00000627423,ENST00000630406 |
| ENSG00000280680 | HHAT | ENST00000625523,ENST00000626327,ENST00000627903 |
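If bedtools or bioawk is not installed, plain awk can approximate two of the examples above. This sketch swaps in ordinary awk. It is not the code of either tool, and the sample files are hypothetical. The grouping part mimics `groupBy -g 1 -c 3,2 -o distinct,collapse` and, like groupBy, relies on input sorted by column 1. The FASTQ part assumes standard 4-line records with unwrapped sequences. It takes the read name as the first word of the header line without the leading "@".

```shell
#!/usr/bin/env bash
# Sketch: plain-awk stand-ins for groupBy and bioawk (hypothetical data).
workdir=$(mktemp -d)
cd "$workdir"

# Gene ID, transcript ID, symbol (tab-delimited, no header line)
printf 'ENSG00000281518\tENST00000627423\tFOXO6\n' >  ensembl.tab
printf 'ENSG00000280680\tENST00000625523\tHHAT\n'  >> ensembl.tab
printf 'ENSG00000281518\tENST00000630406\tFOXO6\n' >> ensembl.tab
printf 'ENSG00000280680\tENST00000626327\tHHAT\n'  >> ensembl.tab

# Rough equivalent of: groupBy -g 1 -c 3,2 -o distinct,collapse
LC_ALL=C sort -k1,1 ensembl.tab |
awk 'BEGIN { FS = OFS = "\t" }
     # print the finished group, if there is one
     function flush() {
         if (gene != "") print gene, syms, txs
     }
     # first line of a new group: start fresh lists
     $1 != gene {
         flush()
         gene = $1; syms = $3; txs = $2
         split("", seen)          # portable way to empty an array
         seen[$3] = 1
         next
     }
     # same group: collapse every transcript, keep symbols distinct
     {
         txs = txs "," $2
         if (!($3 in seen)) { seen[$3] = 1; syms = syms "," $3 }
     }
     END { flush() }' > grouped.tab
cat grouped.tab

# Rough equivalent of: bioawk -c fastx '{print ">" $name "\n" $seq}'
printf '@read1\nACGT\n+\nIIII\n@read2\nGGCC\n+\nIIII\n' > sequences.fastq
awk 'NR % 4 == 1 { print ">" substr($1, 2) }
     NR % 4 == 2 { print }' sequences.fastq > sequences.fa
cat sequences.fa
```

The dedicated tools handle cases this sketch does not, such as wrapped FASTA input for bioawk or numeric operations like mean and stdev for groupBy. They are the better choice when available.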
