LAMDA (Learning And Mining from DatA), Nanjing University

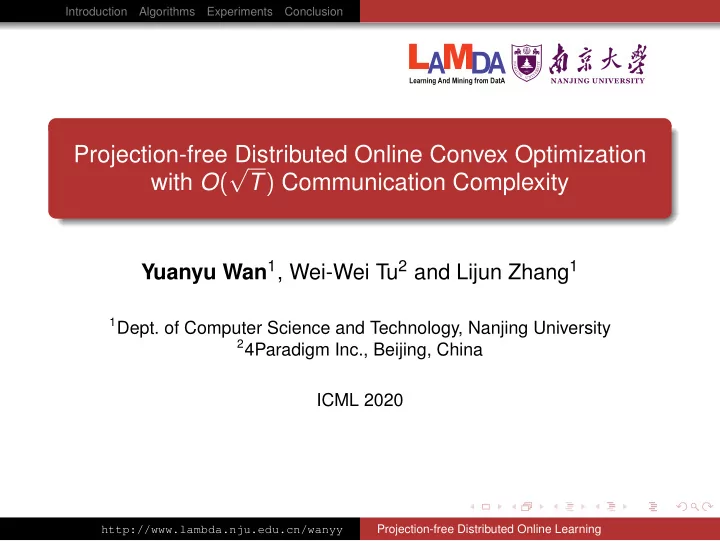


Projection-free Distributed Online Convex Optimization with $O(\sqrt{T})$ Communication Complexity

Yuanyu Wan¹, Wei-Wei Tu² and Lijun Zhang¹
¹ Dept. of Computer Science and Technology, Nanjing University
² 4Paradigm Inc., Beijing, China

ICML 2020, http://www.lambda.nju.edu.cn/wanyy

Outline

1. Introduction
   - Background
   - The Problem and Our Contributions
2. Our Algorithms
   - D-BOCG for Full Information Setting
   - D-BBCG for Bandit Setting
3. Experiments
4. Conclusion



Distributed Online Learning over a Network

Formal definition:

    for t = 1, 2, ..., T do
        for each local learner i ∈ [n] do
            pick a decision x_i(t) ∈ K
            receive a convex loss function f_{t,i}(x): K → R
            communicate with its neighbors and update x_i(t)
        end for
    end for
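A minimal Python sketch of the protocol above, assuming scalar decisions and synchronous rounds: all learners play, then all receive their losses, then all communicate and update at once. The update rule is a hypothetical callback `update`, because the formal definition leaves it abstract; `run_protocol` and `Loss` are illustrative names, not part of the original method.

```python
from typing import Callable, List

Loss = Callable[[float], float]


def run_protocol(
    T: int,
    x_init: List[float],
    get_losses: Callable[[int], List[Loss]],
    update: Callable[[int, List[float], List[Loss]], List[float]],
) -> List[List[float]]:
    """Simulate T synchronous rounds of distributed online learning.

    x_init[i] is learner i's first decision x_i(1). Returns one list of
    decisions [x_1(t), ..., x_n(t)] per round t = 1, ..., T.
    """
    x = list(x_init)
    history: List[List[float]] = []
    for t in range(1, T + 1):
        history.append(list(x))   # every learner i plays x_i(t)
        losses = get_losses(t)    # then each learner i receives f_{t,i}
        x = update(t, x, losses)  # then all communicate and update at once
    return history


if __name__ == "__main__":
    # Hypothetical update on a complete graph: every learner moves to the average decision.
    def average(t: int, x: List[float], losses: List[Loss]) -> List[float]:
        return [sum(x) / len(x)] * len(x)

    print(run_protocol(3, [0.0, 1.0], lambda t: [lambda y: y * y] * 2, average))
    # prints [[0.0, 1.0], [0.5, 0.5], [0.5, 0.5]]
```

Computing the new decisions from the old list in one step, rather than learner by learner, keeps a learner from seeing a neighbor's same-round update, which matches the synchronous reading of the protocol.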

- The network is defined as $G = (V, E)$ with $V = [n]$; each node $i \in [n]$ is a local learner.
- Node $i$ can only communicate with its immediate neighbors $N_i = \{j \in V \mid (i, j) \in E\}$.
- The global loss function is defined as $f_t(x) = \sum_{j=1}^{n} f_{t,j}(x)$.
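The network objects can be written down directly. This sketch assumes an undirected graph (so $(i, j) \in E$ makes $i$ and $j$ mutual neighbors) and uses 0-based node labels instead of $[n] = \{1, \dots, n\}$; `neighbors` and `global_loss` are illustrative names.

```python
from typing import Callable, Dict, Iterable, List, Set, Tuple


def neighbors(n: int, edges: Iterable[Tuple[int, int]]) -> Dict[int, Set[int]]:
    """Return N_i = {j in V | (i, j) in E} for every node of an undirected graph on V = {0, ..., n-1}."""
    nbrs: Dict[int, Set[int]] = {i: set() for i in range(n)}
    for i, j in edges:
        nbrs[i].add(j)
        nbrs[j].add(i)  # undirected (assumption): (i, j) in E means (j, i) in E
    return nbrs


def global_loss(local_losses: List[Callable[[float], float]]) -> Callable[[float], float]:
    """Return f_t(x) = sum_{j=1}^n f_{t,j}(x), the sum of all local losses at round t."""
    return lambda x: sum(f(x) for f in local_losses)


if __name__ == "__main__":
    ring = neighbors(4, [(0, 1), (1, 2), (2, 3), (3, 0)])
    print(ring[0])   # {1, 3}
    f_t = global_loss([lambda x: x, lambda x: 2 * x, lambda x: x * x])
    print(f_t(2.0))  # 2.0 + 4.0 + 4.0 = 10.0
```

No single learner can evaluate `global_loss` itself, since it only ever sees its own $f_{t,i}$; that gap is what the communication step has to bridge.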

Regret of local learner $i$, measured against the global loss $f_t$ rather than only its own local losses:

$$R_{T,i} = \sum_{t=1}^{T} f_t(x_i(t)) - \min_{x \in \mathcal{K}} \sum_{t=1}^{T} f_t(x)$$
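To make the regret definition concrete, here is a sketch for an assumed special case: scalar decisions in $\mathcal{K} = [-1, 1]$ and linear local losses $f_{t,j}(x) = c_{t,j}\,x$. Then $f_t(x) = C_t x$ with $C_t = \sum_j c_{t,j}$, and $\min_{x \in \mathcal{K}} \sum_t f_t(x)$ is attained at an endpoint, so the comparator term is computed exactly. The function name is illustrative.

```python
from typing import List


def regret_linear_1d(
    coeffs: List[List[float]], x_i: List[float], lo: float = -1.0, hi: float = 1.0
) -> float:
    """Regret R_{T,i} of learner i when f_{t,j}(x) = coeffs[t][j] * x on K = [lo, hi].

    coeffs[t][j] is learner j's loss coefficient at round t; x_i[t] is learner i's decision.
    """
    if len(coeffs) != len(x_i):
        raise ValueError("need one decision per round")
    C = [sum(row) for row in coeffs]                   # global loss f_t(x) = C_t * x
    learner_loss = sum(c * x for c, x in zip(C, x_i))  # sum_t f_t(x_i(t))
    S = sum(C)
    best_fixed = min(S * lo, S * hi)                   # a linear function is minimized at an endpoint
    return learner_loss - best_fixed


if __name__ == "__main__":
    # C = [3, 1], so the best fixed decision is x = -1 with total loss -4; the learner's loss is 1.
    print(regret_linear_1d([[1.0, 2.0], [0.5, 0.5]], [0.0, 1.0]))  # 5.0
```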

Applications:
- multi-agent coordination
- distributed tracking in sensor networks

Projection-based Methods

Distributed Online Dual Averaging [Hosseini et al., 2013]:

    for each local learner i ∈ [n] do
        play x_i(t) and compute g_i(t) = ∇f_{t,i}(x_i(t))
        z_i(t+1) = Σ_{j ∈ N_i} P_ij z_j(t) + g_i(t)
        x_i(t+1) = Π^ψ_K(z_i(t+1), α(t))
    end for

- $P_{ij} > 0$ only if $(i, j) \in E$; otherwise $P_{ij} = 0$.
- $\psi(x): \mathcal{K} \to \mathbb{R}$ is a proximal function, e.g., $\psi(x) = \|x\|_2^2$.
- Projection step: $\Pi^{\psi}_{\mathcal{K}}(z, \alpha) = \operatorname{argmin}_{x \in \mathcal{K}} \left\{ z^\top x + \frac{1}{\alpha}\psi(x) \right\}$.
- $\alpha(t) = O(1/\sqrt{t}) \Rightarrow R_{T,i} = O(\sqrt{T})$.
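A one-dimensional sketch of the dual-averaging update, assuming $\mathcal{K} = [-1, 1]$, $\psi(x) = x^2$ and $\alpha(t) = a/\sqrt{t}$ for a hypothetical constant `a`. In this case the projection step has a closed form: $zx + x^2/\alpha$ is minimized at $-\alpha z/2$, clipped to the interval. The mixing matrix `P` is assumed doubly stochastic, with each node counted as its own neighbor, so summing over all $j$ equals summing over $N_i$. Names such as `doda` and `project_1d` are illustrative.

```python
import math
from typing import Callable, List


def project_1d(z: float, alpha: float, lo: float = -1.0, hi: float = 1.0) -> float:
    """argmin over x in [lo, hi] of z*x + x**2/alpha, i.e. the projection step with psi(x) = x**2.

    The unconstrained minimizer is -alpha*z/2; for a 1-D convex quadratic the
    constrained minimizer is that point clipped to the interval.
    """
    return min(hi, max(lo, -alpha * z / 2.0))


def doda(
    P: List[List[float]],
    grad: Callable[[int, int, float], float],
    T: int,
    a: float = 1.0,
) -> List[List[float]]:
    """1-D distributed online dual averaging on K = [-1, 1] with alpha(t) = a / sqrt(t).

    P is a doubly stochastic mixing matrix (P[i][j] > 0 only for neighbors, i counted
    as its own neighbor); grad(t, i, x) is the gradient of f_{t,i} at x.
    """
    n = len(P)
    z = [0.0] * n
    x = [0.0] * n  # x_i(1) = 0, the minimizer of psi over K
    history: List[List[float]] = []
    for t in range(1, T + 1):
        history.append(list(x))
        g = [grad(t, i, x[i]) for i in range(n)]  # play x_i(t), compute g_i(t)
        # z_i(t+1) = sum_j P_ij z_j(t) + g_i(t): every learner mixes the *old* z values
        z = [sum(P[i][j] * z[j] for j in range(n)) + g[i] for i in range(n)]
        alpha = a / math.sqrt(t)
        x = [project_1d(z[i], alpha) for i in range(n)]  # x_i(t+1) = Pi(z_i(t+1), alpha(t))
    return history
```

For example, with three fully connected learners, `P = [[1/3] * 3 for _ in range(3)]` and $f_{t,i}(x) = (x - b_i)^2$, the first update gives $z_i(2) = -2b_i$ and $\alpha(1) = 1$, so learner $i$ moves to $x_i(2) = b_i$ whenever $b_i \in [-1, 1]$.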

Distributed Online Gradient Descent [Ram et al., 2010] also needs a projection step.

Projection-free Methods

Motivation: the projection step can be time-consuming; for example, if $\mathcal{K}$ is a trace-norm ball, the projection requires an SVD of a matrix.

Distributed Online Conditional Gradient (D-OCG) [Zhang et al., 2017]:

    1: for each local learner i ∈ [n] do
    2:     play x_i(t) and compute g_i(t) = ∇f_{t,i}(x_i(t))
    3:     v_i = argmin_{x ∈ K} ∇F_{t,i}(x_i(t))^⊤ x
    4:     x_i(t+1) = x_i(t) + s_t (v_i − x_i(t))
    5:     z_i(t+1) = Σ_{j ∈ N_i} P_ij z_j(t) + g_i(t)
    6: end for

- $F_{t,i}(x) = \eta\, z_i(t)^\top x + \|x - x_1(1)\|_2^2$
- $\eta = O(T^{-3/4})$, $s_t = 1/\sqrt{t} \Rightarrow R_{T,i} = O(T^{3/4})$
- Each iteration needs only a linear optimization step (step 3) and no projection.
- It uses $T$ communication rounds, one per round of the protocol (step 5).
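The same 1-D setting gives a sketch of D-OCG. Over $\mathcal{K} = [-1, 1]$ the linear optimization step just returns an endpoint, $\nabla F_{t,i}(x) = \eta z_i(t) + 2(x - x_1(1))$, and the convex-combination update in step 4 keeps every iterate in $\mathcal{K}$ without any projection. Assumptions not taken from the slide: every learner starts at the same point $x_1(1)$, the constant in $\eta = O(T^{-3/4})$ is 1, and the helper names are hypothetical. The counter makes the $T$ communication rounds explicit.

```python
import math
from typing import Callable, List, Tuple


def lmo_interval(c: float, lo: float = -1.0, hi: float = 1.0) -> float:
    """Linear optimization step: argmin over x in [lo, hi] of c * x (ties broken toward hi)."""
    return lo if c > 0 else hi


def docg(
    P: List[List[float]],
    grad: Callable[[int, int, float], float],
    T: int,
    x0: float = 0.0,
) -> Tuple[List[List[float]], int]:
    """1-D D-OCG sketch on K = [-1, 1]; returns (decision history, communication rounds)."""
    n = len(P)
    eta = T ** -0.75                 # eta = O(T^{-3/4}), constant assumed to be 1
    x = [x0] * n                     # assumed: every learner starts at x_1(1) = x0
    z = [0.0] * n
    history: List[List[float]] = []
    comm_rounds = 0
    for t in range(1, T + 1):
        history.append(list(x))
        g = [grad(t, i, x[i]) for i in range(n)]                  # step 2
        # step 3: gradient of F_{t,i}(x) = eta*z_i(t)*x + (x - x0)**2 at x_i(t), then one LMO call
        v = [lmo_interval(eta * z[i] + 2.0 * (x[i] - x0)) for i in range(n)]
        s = 1.0 / math.sqrt(t)
        x = [x[i] + s * (v[i] - x[i]) for i in range(n)]          # step 4: stays in K
        z = [sum(P[i][j] * z[j] for j in range(n)) + g[i] for i in range(n)]  # step 5
        comm_rounds += 1                                          # one exchange per round
    return history, comm_rounds
```

Note that step 3 reads $z_i(t)$ before step 5 overwrites it, mirroring the order of the pseudocode.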


Question: Can the $O(T)$ communication complexity of distributed online conditional gradient (D-OCG) be reduced?

An affirmative and non-trivial answer: distributed block online conditional gradient (D-BOCG)
- communication complexity: from $O(T)$ to $O(\sqrt{T})$
- regret bound: $O(T^{3/4})$
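These slides do not yet spell out the D-BOCG update. Assuming, as the word "block" suggests, that learners communicate once per block of $B$ consecutive rounds ($B$ is a hypothetical name), the number of communication rounds is $\lceil T/B \rceil$, and choosing $B = \lceil \sqrt{T} \rceil$ gives at most $\lceil \sqrt{T} \rceil = O(\sqrt{T})$ rounds. A small arithmetic check of that count, not a description of the algorithm itself:

```python
import math


def ceil_sqrt(T: int) -> int:
    """Smallest integer B with B * B >= T."""
    r = math.isqrt(T)
    return r if r * r == T else r + 1


def comm_rounds(T: int, block_size: int) -> int:
    """Communication rounds if learners exchange messages once per block (assumed), i.e. ceil(T / B)."""
    return (T + block_size - 1) // block_size


if __name__ == "__main__":
    T = 10_000
    print(comm_rounds(T, 1))             # one exchange per round, as in D-OCG: 10000
    print(comm_rounds(T, ceil_sqrt(T)))  # blocks of size sqrt(T) = 100: 100
```

Integer ceiling division avoids floating-point rounding for large $T$.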

An extension to the bandit setting: distributed block bandit conditional gradient (D-BBCG)
- communication complexity: $O(\sqrt{T})$
- high-probability regret bound: $\tilde{O}(T^{3/4})$
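In the bandit setting a learner observes only loss values, not gradients (the usual meaning of bandit feedback; the slide does not define it). A standard tool in bandit convex optimization is the one-point gradient estimator, sketched below in 1-D, where the random direction $u$ is uniform on $\{-1, +1\}$. This is an illustration of the general technique, not a claim about the exact estimator inside D-BBCG. Averaged over $u$, the estimate equals $(f(x+\delta) - f(x-\delta))/(2\delta)$, the derivative of the smoothed function $\hat f(x) = \mathbb{E}_{v \sim U[-1,1]} f(x + \delta v)$.

```python
import random
from typing import Callable


def one_point_grad(f: Callable[[float], float], x: float, delta: float, rng: random.Random) -> float:
    """One-point estimate from a single loss value: f(x + delta*u) * u / delta with u in {-1, +1}.

    Assumes x +/- delta still lies in the feasible set; bandit methods usually
    query from a slightly shrunk set to guarantee this (not modeled here).
    """
    u = rng.choice((-1.0, 1.0))
    return f(x + delta * u) * u / delta


def expected_one_point_grad(f: Callable[[float], float], x: float, delta: float) -> float:
    """Exact expectation over u: (f(x + delta) - f(x - delta)) / (2 * delta)."""
    return (f(x + delta) - f(x - delta)) / (2.0 * delta)


if __name__ == "__main__":
    rng = random.Random(0)
    f = lambda y: y * y
    estimates = [one_point_grad(f, 0.5, 0.1, rng) for _ in range(20000)]
    print(sum(estimates) / len(estimates), expected_one_point_grad(f, 0.5, 0.1))
```

Each single estimate is noisy (here it is either about 3.6 or about -1.6), which is one reason bandit regret bounds are typically weaker than full-information ones.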

