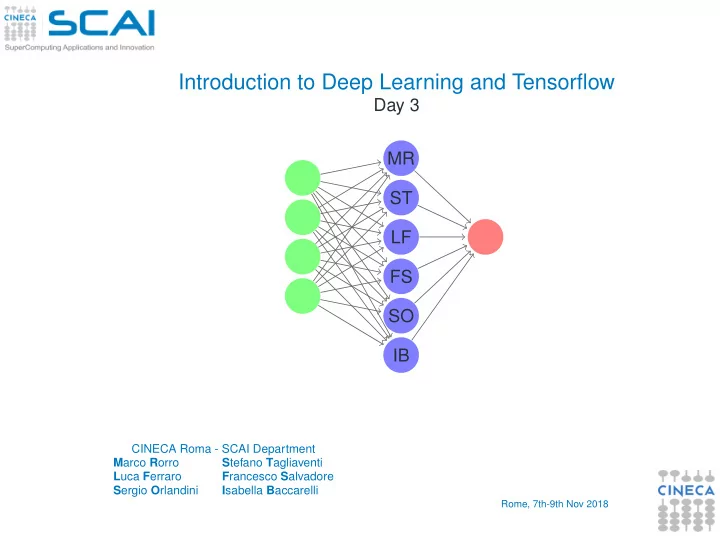

Introduction to Deep Learning and Tensorflow
Day 3

CINECA Roma - SCAI Department

Marco Rorro, Stefano Tagliaventi, Luca Ferraro, Francesco Salvadore, Sergio Orlandini, Isabella Baccarelli

Rome, 7th-9th Nov 2018

Outline

1. Autoencoders
2. Best practices
3. Large-Scale Deep Learning
4. DAVIDE
5. Jupyter on DAVIDE
6. GAN
   • Objective
   • Algorithm
7. References

Autoencoders

• A basic autoencoder is a neural network that is trained to attempt to copy its input to its output.
• As such, it is usually considered an unsupervised learning algorithm.
• Internally, it has a hidden layer $h$ that describes a code (internal representation) used to represent the input.
• The network may be viewed as consisting of two parts:
  • an encoder function $h = f(x)$
  • a decoder that produces a reconstruction $r = g(h)$
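The encoder/decoder split can be illustrated with a minimal NumPy sketch. The layer sizes, the `tanh` activation and the random, untrained weights are assumptions made for illustration only; they are not taken from the slides.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 8 input features, code of dimension 3.
n_input, n_code = 8, 3

# Randomly initialised (untrained) parameters.
W_enc = rng.normal(scale=0.1, size=(n_input, n_code))
b_enc = np.zeros(n_code)
W_dec = rng.normal(scale=0.1, size=(n_code, n_input))
b_dec = np.zeros(n_input)

def f(x):
    """Encoder: maps the input x to the code h = f(x)."""
    return np.tanh(x @ W_enc + b_enc)

def g(h):
    """Decoder: maps the code h to the reconstruction r = g(h)."""
    return h @ W_dec + b_dec

x = rng.normal(size=(5, n_input))  # a batch of 5 inputs
h = f(x)                           # code, shape (5, 3)
r = g(h)                           # reconstruction, shape (5, 8)
```

Training would then adjust the four parameter arrays so that `r` gets close to `x`.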

Autoencoders sketch

[Figure: autoencoders sketch]

AE applications

• If an autoencoder succeeds in simply learning to set $g(f(x)) = x$ everywhere, it does not seem especially useful.
• However, (different types of) autoencoders have several applications:
  • compression
  • dimensionality reduction (to be used to feed other networks)
  • feature extraction (possibly before feeding to other networks)
  • unsupervised pre-training
  • noise reduction
  • anomaly detection

Undercomplete AE

• If the code $h$ is constrained to have a smaller dimension than $x$ (an undercomplete autoencoder), the autoencoder can only produce an approximate reconstruction of $x$.
• Often we are not interested in the output of the decoder but in the code $h$, the output of the encoding part.
• In such a case the code $h$
  • is a compressed version of $x$
  • can capture the most salient features of the training data
• The learning process tries to minimize the loss function $L(x, g(f(x)))$, which penalizes the reconstruction $g(f(x))$ for being dissimilar from the input $x$.
• Autoencoders with nonlinear encoder and decoder functions can learn a nonlinear generalization of PCA.
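As a rough illustration of minimizing $L(x, g(f(x)))$ with a code smaller than the input, the sketch below trains a purely linear undercomplete autoencoder by plain gradient descent on synthetic data. The data, the sizes, the mean squared error loss and the learning rate are all assumptions chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 200 points in 5 dimensions lying close to a 2-D subspace.
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 5))
x = latent @ mixing + 0.01 * rng.normal(size=(200, 5))

n_code = 2  # code dimension smaller than the input dimension (5)
W_enc = rng.normal(scale=0.1, size=(5, n_code))
W_dec = rng.normal(scale=0.1, size=(n_code, 5))

def loss(x, W_enc, W_dec):
    """Mean squared reconstruction error L(x, g(f(x)))."""
    r = (x @ W_enc) @ W_dec
    return np.mean(np.sum((r - x) ** 2, axis=1))

initial_loss = loss(x, W_enc, W_dec)

learning_rate = 0.005
for _ in range(3000):
    h = x @ W_enc                       # code h = f(x)
    r = h @ W_dec                       # reconstruction r = g(h)
    grad_r = 2.0 * (r - x) / len(x)     # dL/dr
    grad_dec = h.T @ grad_r             # dL/dW_dec
    grad_enc = x.T @ (grad_r @ W_dec.T) # dL/dW_enc
    W_dec -= learning_rate * grad_dec
    W_enc -= learning_rate * grad_enc

final_loss = loss(x, W_enc, W_dec)
```

With a linear encoder and decoder the best this model can do is project onto a 2-D subspace of the data, which is the PCA-like behaviour that nonlinear autoencoders generalize.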

Regularized AE

• If the dimensionality of the code is too high, that is equal to the input or larger than the input (overcomplete), the autoencoder can completely store the input information (even using a linear encoder and decoder) but may fail to learn anything useful.
• Rather than limiting the model capacity by keeping the code size small, regularized autoencoders use a loss function that encourages the model to have other properties besides the ability to copy its input to its output:
  • sparsity of the representation
  • smallness of the derivative of the representation
  • robustness to noise
  • robustness to missing inputs
• A regularized autoencoder can be nonlinear and overcomplete but still learn something useful about the data distribution.

Sparse AE

• A sparse autoencoder is simply an autoencoder whose training criterion involves a sparsity penalty $\Omega(h)$ on the code layer $h$, in addition to the reconstruction error:

  $$L(x, g(f(x))) + \Omega(h)$$

  where typically we have $h = f(x)$, the encoder output.
• Sparse autoencoders are typically used to learn features for another task such as classification.
• The penalty $\Omega(h)$ can be seen as a regularizer term added to a feedforward network whose primary task is to copy the input to the output (unsupervised learning objective) and possibly also perform some supervised task (with a supervised learning objective) that depends on these sparse features.
• Unlike other regularizers such as weight decay, there is not a straightforward Bayesian interpretation to this regularizer.
• One way to achieve actual zeros in $h$ for sparse (and denoising) autoencoders is to use rectified linear units to produce the code layer. With a prior that actually pushes the representations to zero (like the absolute value penalty), one can thus indirectly control the average number of zeros in the representation.
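A minimal sketch of the sparse criterion, assuming a ReLU code layer, a squared-error reconstruction term and an absolute value penalty $\Omega(h) = \lambda \sum_i |h_i|$. The weight `lam`, the layer sizes and the random parameters are hypothetical.

```python
import numpy as np

def relu(z):
    """Rectified linear unit: produces exact zeros for negative pre-activations."""
    return np.maximum(z, 0.0)

def sparse_ae_loss(x, W_enc, b_enc, W_dec, b_dec, lam=0.1):
    """Return L(x, g(f(x))) + Omega(h) and the code h for a batch x."""
    h = relu(x @ W_enc + b_enc)                             # code h = f(x)
    r = h @ W_dec + b_dec                                   # reconstruction g(h)
    reconstruction = np.mean(np.sum((r - x) ** 2, axis=1))  # L(x, g(f(x)))
    penalty = lam * np.mean(np.sum(np.abs(h), axis=1))      # Omega(h)
    return reconstruction + penalty, h

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 6))
# Overcomplete code: 10 hidden units for 6 inputs.
W_enc = rng.normal(size=(6, 10))
b_enc = np.zeros(10)
W_dec = rng.normal(size=(10, 6))
b_dec = np.zeros(6)

total, h = sparse_ae_loss(x, W_enc, b_enc, W_dec, b_dec, lam=0.1)
unpenalized, _ = sparse_ae_loss(x, W_enc, b_enc, W_dec, b_dec, lam=0.0)
zero_fraction = np.mean(h == 0.0)  # share of code units that are exactly zero
```

Raising `lam` during training pushes more entries of `h` to zero, which is how the penalty indirectly controls the average number of zeros in the representation.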

Denoising AE

• Rather than adding a penalty $\Omega$ to the cost function, we can obtain an autoencoder that learns something useful by changing the reconstruction error term of the cost function.
• A denoising autoencoder or DAE instead minimizes

  $$L(x, g(f(\tilde{x})))$$

  where $\tilde{x}$ is a copy of $x$ that has been corrupted by some form of noise.
• Denoising autoencoders must therefore undo this corruption rather than simply copying their input.
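The denoising objective can be sketched as follows. The corruption process (additive Gaussian noise followed by random zeroing of entries) and its parameters `noise_std` and `drop_prob` are assumptions; the slides only say "some form of noise". The identity functions stand in for an encoder and decoder that merely copy their input.

```python
import numpy as np

def corrupt(x, rng, noise_std=0.3, drop_prob=0.1):
    """Return a corrupted copy of x: Gaussian noise, then random zeroing of entries."""
    noisy = x + noise_std * rng.normal(size=x.shape)
    keep_mask = rng.random(x.shape) >= drop_prob
    return noisy * keep_mask

def denoising_loss(x, x_tilde, f, g):
    """Squared error between the clean x and the reconstruction of the corrupted x_tilde."""
    r = g(f(x_tilde))
    return np.mean(np.sum((r - x) ** 2, axis=1))

rng = np.random.default_rng(0)
x = rng.normal(size=(100, 4))
x_tilde = corrupt(x, rng)

def identity(v):
    """Stand-in for a network that simply copies its input."""
    return v

copy_loss = denoising_loss(x, x_tilde, identity, identity)  # copying the corrupted input
clean_loss = denoising_loss(x, x, identity, identity)       # ordinary AE objective
```

Copying gives zero loss under the ordinary objective (`clean_loss`) but a strictly positive loss under the denoising one (`copy_loss`), so the identity map is no longer an optimal solution.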

Denoising AE sketch

• Denoising autoencoders are not used only to remove noise: in order to force the hidden layer to discover more robust features and prevent it from simply learning the identity, we train the autoencoder to reconstruct the input from an artificially corrupted version of it.

[Figure: denoising autoencoder sketch]

Deep or shallow?

• Autoencoders are just feedforward networks. The same loss functions and output unit types that can be used for traditional feedforward networks are also used for autoencoders.
• Autoencoders are often trained with only a single layer encoder and a single layer decoder. However, this is not a requirement. In fact, using deep encoders and decoders offers many advantages.
• Depth can exponentially reduce the computational cost of representing some functions.
• Depth can also exponentially decrease the amount of training data needed to learn some functions.
• Experimentally, deep autoencoders yield much better compression than corresponding shallow or linear autoencoders.
• A common strategy for training a deep autoencoder is to greedily pretrain the deep architecture by training a stack of shallow autoencoders, so we often encounter shallow autoencoders, even when the ultimate goal is to train a deep autoencoder.

Anomaly detection using AE - Part I

• Example dataset: 280000 credit card transactions, each labelled as fraudulent or legit.
• The goal: for a new transaction, predict whether it is fraudulent or not.
• Some critical issues:
  • treated as a classification problem, there is no large set of fraudulent examples to train on
  • marking every transaction as non-fraud would lead to > 99% accuracy (we need a different metric)
  • no single-parameter analysis helps in finding the fraudulent transactions
• Idea: use an AE, training the model on the normal (non-fraud) transactions only.
• Once the model is trained, in order to predict whether a new/unseen transaction is normal or fraudulent, we calculate its reconstruction error.

Anomaly detection using AE - Part II

• If the error is larger than a predefined threshold, we mark the transaction as a fraud.
  • depending on the threshold, we will get results of different quality
  • the so-called confusion matrix helps summarize the quality of the results
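The thresholding step can be sketched on synthetic data. To keep the example short, a linear autoencoder obtained from PCA (via SVD) is swapped in for a trained neural autoencoder; the data, the 99th-percentile threshold rule and all sizes are assumptions, not the credit card dataset or the settings used in the course.

```python
import numpy as np

rng = np.random.default_rng(0)
mixing = rng.normal(size=(2, 6))

def make_normal(n):
    """Synthetic 'normal' transactions: 6 features lying near a 2-D subspace."""
    return rng.normal(size=(n, 2)) @ mixing + 0.05 * rng.normal(size=(n, 6))

x_train = make_normal(1000)                          # normal transactions only
x_test_normal = make_normal(200)
x_test_fraud = rng.normal(scale=2.0, size=(20, 6))   # points off the subspace

# Stand-in model: PCA with 2 components, i.e. a linear autoencoder.
mean = x_train.mean(axis=0)
_, _, vt = np.linalg.svd(x_train - mean, full_matrices=False)
components = vt[:2]

def reconstruction_error(x):
    """Squared error between x and its reconstruction from the 2-D code."""
    h = (x - mean) @ components.T   # encode
    r = h @ components + mean       # decode
    return np.sum((r - x) ** 2, axis=1)

# Assumed rule: threshold at the 99th percentile of the training errors.
threshold = np.percentile(reconstruction_error(x_train), 99)

x_test = np.vstack([x_test_normal, x_test_fraud])
y_true = np.array([0] * 200 + [1] * 20)              # 1 = fraud
y_pred = (reconstruction_error(x_test) > threshold).astype(int)

# Confusion matrix entries.
tp = int(np.sum((y_pred == 1) & (y_true == 1)))
tn = int(np.sum((y_pred == 0) & (y_true == 0)))
fp = int(np.sum((y_pred == 1) & (y_true == 0)))
fn = int(np.sum((y_pred == 0) & (y_true == 1)))
```

Lowering the threshold catches more frauds at the cost of more false alarms; raising it does the opposite, which is the trade-off the confusion matrix makes visible.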

Accuracy vs Precision vs Recall

$$\text{Accuracy} = \frac{\text{Number of correct predictions}}{\text{Total number of predictions}} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

where $TP$, $TN$, $FP$ and $FN$ are the numbers of true positives, true negatives, false positives and false negatives.

• Accuracy alone doesn't tell the full story when you're working with a class-imbalanced data set.
• A trivial model that always predicts the predominant class gives high accuracy, but it is useless.
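A small worked example of the three formulas, using hypothetical counts (1000 transactions, 5 of them fraudulent). Returning 0.0 when a denominator is zero is a convention assumed here; precision is strictly undefined when nothing is predicted positive.

```python
def classification_metrics(tp, tn, fp, fn):
    """Return (accuracy, precision, recall) from confusion matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    # Assumed convention: report 0.0 when the ratio is undefined.
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return accuracy, precision, recall

# Trivial model: always predicts "legit", so it never flags a fraud.
trivial = classification_metrics(tp=0, tn=995, fp=0, fn=5)

# Hypothetical detector: finds 4 of the 5 frauds and raises 16 false alarms.
detector = classification_metrics(tp=4, tn=979, fp=16, fn=1)
```

The trivial model scores 99.5% accuracy with zero recall, while the hypothetical detector has lower accuracy (98.3%) but a recall of 0.8 and a precision of 0.2.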

Variational autoencoders (ideas) - 1

• Variational Autoencoders (VAEs) are powerful generative models, with applications as diverse as:
  • generating a random, new output that looks similar to the training data (e.g. generating fake human faces, producing purely synthetic music)
  • altering, or exploring variations on, data you already have, and not just in a random way but in a desired, specific direction
• The fundamental problem with autoencoders, for generation, is that the latent space where their encoded vectors lie may not be continuous or allow easy interpolation.
