Input: Concepts, instances, attributes


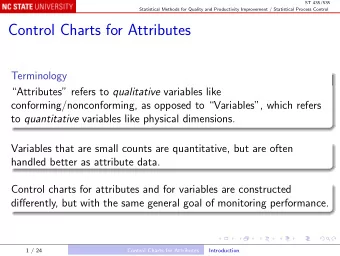

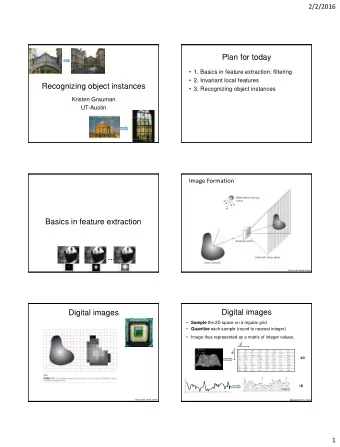

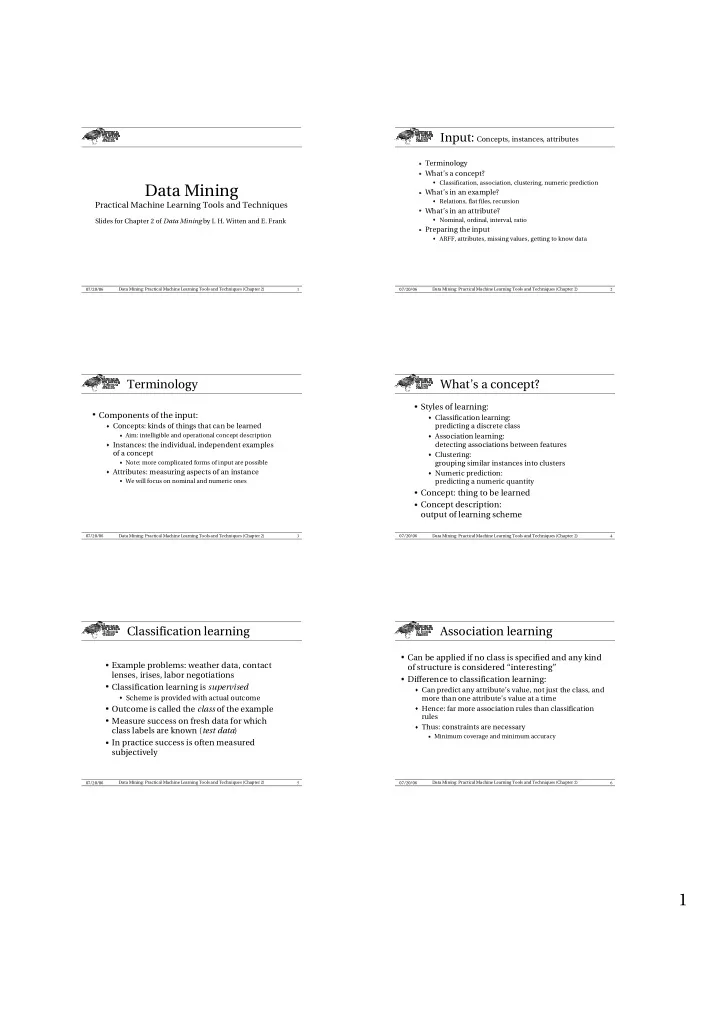

Data Mining: Practical Machine Learning Tools and Techniques
Slides for Chapter 2 of Data Mining by I. H. Witten and E. Frank (07/20/06)

Input: Concepts, instances, attributes

- Terminology
- What's a concept?
  - Classification, association, clustering, numeric prediction
- What's in an example?
  - Relations, flat files, recursion
- What's in an attribute?
  - Nominal, ordinal, interval, ratio
- Preparing the input
  - ARFF, attributes, missing values, getting to know data

Terminology

- Components of the input:
  - Concepts: kinds of things that can be learned
    - Aim: intelligible and operational concept description
  - Instances: the individual, independent examples of a concept
    - Note: more complicated forms of input are possible
  - Attributes: measuring aspects of an instance
    - We will focus on nominal and numeric ones

What's a concept?

- Styles of learning:
  - Classification learning: predicting a discrete class
  - Association learning: detecting associations between features
  - Clustering: grouping similar instances into clusters
  - Numeric prediction: predicting a numeric quantity
- Concept: thing to be learned
- Concept description: output of learning scheme

Classification learning

- Example problems: weather data, contact lenses, irises, labor negotiations
- Classification learning is supervised
  - Scheme is provided with actual outcome
- Outcome is called the class of the example
- Measure success on fresh data for which class labels are known (test data)
- In practice success is often measured subjectively

Association learning

- Can be applied if no class is specified and any kind of structure is considered "interesting"
- Difference to classification learning:
  - Can predict any attribute's value, not just the class, and more than one attribute's value at a time
  - Hence: far more association rules than classification rules
  - Thus: constraints are necessary
    - Minimum coverage and minimum accuracy
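The two constraints named on the association learning slide, minimum coverage and minimum accuracy, can be made concrete with a small sketch. It assumes the common definitions (coverage is the number of instances a rule predicts correctly, accuracy is that number as a fraction of the instances the rule applies to); the slides themselves do not spell these out. The instances and the function name `coverage_and_accuracy` are invented for illustration.

```python
from typing import Dict, List, Tuple

Instance = Dict[str, str]

# Toy instances made up for this example (not the book's dataset).
INSTANCES: List[Instance] = [
    {"outlook": "sunny", "windy": "false", "play": "no"},
    {"outlook": "sunny", "windy": "true", "play": "no"},
    {"outlook": "overcast", "windy": "false", "play": "yes"},
    {"outlook": "rainy", "windy": "false", "play": "yes"},
    {"outlook": "rainy", "windy": "true", "play": "no"},
]


def matches(instance: Instance, conditions: Dict[str, str]) -> bool:
    """True if the instance has every attribute value listed in conditions."""
    return all(instance.get(attr) == value for attr, value in conditions.items())


def coverage_and_accuracy(
    instances: List[Instance],
    antecedent: Dict[str, str],
    consequent: Dict[str, str],
) -> Tuple[int, float]:
    """Assumed definitions: coverage = number of instances predicted correctly,
    accuracy = coverage divided by the number of instances the rule applies to."""
    applies = [inst for inst in instances if matches(inst, antecedent)]
    correct = [inst for inst in applies if matches(inst, consequent)]
    accuracy = len(correct) / len(applies) if applies else 0.0
    return len(correct), accuracy


if __name__ == "__main__":
    # Rule: if windy = false then play = yes
    print(coverage_and_accuracy(INSTANCES, {"windy": "false"}, {"play": "yes"}))
```

Because an association rule may predict any attribute, the consequent is passed as a dictionary just like the antecedent; a rule miner would keep only rules whose two numbers exceed the chosen minimums.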

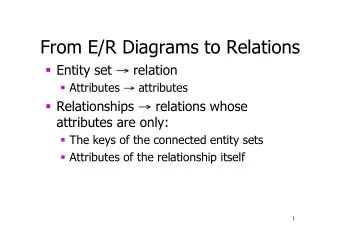

Clustering

- Finding groups of items that are similar
- Clustering is unsupervised
  - The class of an example is not known
- Success often measured subjectively

|     | Sepal length | Sepal width | Petal length | Petal width | Type            |
|-----|--------------|-------------|--------------|-------------|-----------------|
| 1   | 5.1          | 3.5         | 1.4          | 0.2         | Iris setosa     |
| 2   | 4.9          | 3.0         | 1.4          | 0.2         | Iris setosa     |
| …   |              |             |              |             |                 |
| 51  | 7.0          | 3.2         | 4.7          | 1.4         | Iris versicolor |
| 52  | 6.4          | 3.2         | 4.5          | 1.5         | Iris versicolor |
| …   |              |             |              |             |                 |
| 101 | 6.3          | 3.3         | 6.0          | 2.5         | Iris virginica  |
| 102 | 5.8          | 2.7         | 5.1          | 1.9         | Iris virginica  |
| …   |              |             |              |             |                 |

Numeric prediction

- Variant of classification learning where "class" is numeric (also called "regression")
- Learning is supervised
  - Scheme is being provided with target value
- Measure success on test data

| Outlook  | Temperature | Humidity | Windy | Play-time |
|----------|-------------|----------|-------|-----------|
| Sunny    | Hot         | High     | False | 5         |
| Sunny    | Hot         | High     | True  | 0         |
| Overcast | Hot         | High     | False | 55        |
| Rainy    | Mild        | Normal   | False | 40        |
| …        | …           | …        | …     | …         |

What's in an example?

- Instance: specific type of example
  - Thing to be classified, associated, or clustered
  - Individual, independent example of target concept
  - Characterized by a predetermined set of attributes
- Input to learning scheme: set of instances/dataset
  - Represented as a single relation/flat file
- Rather restricted form of input
  - No relationships between objects
- Most common form in practical data mining

A family tree

- Peter (M) = Peggy (F): children Steven (M), Graham (M), Pam (F)
- Grace (F) = Ray (M): children Ian (M), Pippa (F), Brian (M)
- Pam (F) = Ian (M): children Anna (F), Nikki (F)

Family tree represented as a table

| Name   | Gender | Parent1 | Parent2 |
|--------|--------|---------|---------|
| Peter  | Male   | ?       | ?       |
| Peggy  | Female | ?       | ?       |
| Steven | Male   | Peter   | Peggy   |
| Graham | Male   | Peter   | Peggy   |
| Pam    | Female | Peter   | Peggy   |
| Ian    | Male   | Grace   | Ray     |
| Pippa  | Female | Grace   | Ray     |
| Brian  | Male   | Grace   | Ray     |
| Anna   | Female | Pam     | Ian     |
| Nikki  | Female | Pam     | Ian     |

The "sister-of" relation

Listing every pair:

| First person | Second person | Sister of? |
|--------------|---------------|------------|
| Peter        | Peggy         | No         |
| Peter        | Steven        | No         |
| …            | …             | …          |
| Steven       | Peter         | No         |
| Steven       | Graham        | No         |
| Steven       | Pam           | Yes        |
| …            | …             | …          |
| Ian          | Pippa         | Yes        |
| …            | …             | …          |
| Anna         | Nikki         | Yes        |
| …            | …             | …          |
| Nikki        | Anna          | Yes        |

Listing only the positive pairs (closed-world assumption):

| First person | Second person | Sister of? |
|--------------|---------------|------------|
| Steven       | Pam           | Yes        |
| Graham       | Pam           | Yes        |
| Ian          | Pippa         | Yes        |
| Brian        | Pippa         | Yes        |
| Anna         | Nikki         | Yes        |
| Nikki        | Anna          | Yes        |
| All the rest |               | No         |
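The sister-of table and its closed-world assumption can be reproduced from the family table with a short sketch. The definition used here (two distinct people, the second one female, both with the same known parents) is an assumption made for this example, and the names `PEOPLE` and `is_sister_of` are illustrative only.

```python
from itertools import permutations
from typing import Dict, List, Optional, Tuple

# Family table from the slide: name -> (gender, parent1, parent2).
# None stands for the "?" entries.
PEOPLE: Dict[str, Tuple[str, Optional[str], Optional[str]]] = {
    "Peter": ("Male", None, None),
    "Peggy": ("Female", None, None),
    "Steven": ("Male", "Peter", "Peggy"),
    "Graham": ("Male", "Peter", "Peggy"),
    "Pam": ("Female", "Peter", "Peggy"),
    "Ian": ("Male", "Grace", "Ray"),
    "Pippa": ("Female", "Grace", "Ray"),
    "Brian": ("Male", "Grace", "Ray"),
    "Anna": ("Female", "Pam", "Ian"),
    "Nikki": ("Female", "Pam", "Ian"),
}


def is_sister_of(first: str, second: str) -> bool:
    """True if `second` is a sister of `first` (assumed definition, see lead-in)."""
    _, p1a, p1b = PEOPLE[first]
    gender2, p2a, p2b = PEOPLE[second]
    same_known_parents = p1a is not None and (p1a, p1b) == (p2a, p2b)
    return first != second and gender2 == "Female" and same_known_parents


# Closed-world assumption: store only the positive pairs;
# every pair that is not listed is taken to be "No".
POSITIVES: List[Tuple[str, str]] = sorted(
    pair for pair in permutations(PEOPLE, 2) if is_sister_of(*pair)
)

if __name__ == "__main__":
    for first, second in POSITIVES:
        print(first, second, "Yes")
    print("All the rest", "No")
```

Storing only the positive pairs keeps the relation small: of the 90 ordered pairs over ten people, only six need to be listed.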

Generating a flat file

- Process of flattening called "denormalization"
  - Several relations are joined together to make one
- Possible with any finite set of finite relations
- Problematic: relationships without pre-specified number of objects
  - Example: concept of nuclear-family
- Denormalization may produce spurious regularities that reflect structure of database
  - Example: "supplier" predicts "supplier address"

A full representation in one table

| First person: Name | Gender | Parent1 | Parent2 | Second person: Name | Gender | Parent1 | Parent2 | Sister of? |
|--------------------|--------|---------|---------|---------------------|--------|---------|---------|------------|
| Steven             | Male   | Peter   | Peggy   | Pam                 | Female | Peter   | Peggy   | Yes        |
| Graham             | Male   | Peter   | Peggy   | Pam                 | Female | Peter   | Peggy   | Yes        |
| Ian                | Male   | Grace   | Ray     | Pippa               | Female | Grace   | Ray     | Yes        |
| Brian              | Male   | Grace   | Ray     | Pippa               | Female | Grace   | Ray     | Yes        |
| Anna               | Female | Pam     | Ian     | Nikki               | Female | Pam     | Ian     | Yes        |
| Nikki              | Female | Pam     | Ian     | Anna                | Female | Pam     | Ian     | Yes        |
| All the rest       |        |         |         |                     |        |         |         | No         |

    If second person's gender = female
    and first person's parent = second person's parent
    then sister-of = yes

The "ancestor-of" relation

| First person: Name | Gender | Parent1 | Parent2 | Second person: Name | Gender | Parent1 | Parent2 | Ancestor of? |
|--------------------|--------|---------|---------|---------------------|--------|---------|---------|--------------|
| Peter              | Male   | ?       | ?       | Steven              | Male   | Peter   | Peggy   | Yes          |
| Peter              | Male   | ?       | ?       | Pam                 | Female | Peter   | Peggy   | Yes          |
| Peter              | Male   | ?       | ?       | Anna                | Female | Pam     | Ian     | Yes          |
| Peter              | Male   | ?       | ?       | Nikki               | Female | Pam     | Ian     | Yes          |
| Pam                | Female | Peter   | Peggy   | Nikki               | Female | Pam     | Ian     | Yes          |
| Grace              | Female | ?       | ?       | Ian                 | Male   | Grace   | Ray     | Yes          |
| Grace              | Female | ?       | ?       | Nikki               | Female | Pam     | Ian     | Yes          |
| Other positive examples here |  |     |         |                     |        |         |         | Yes          |
| All the rest       |        |         |         |                     |        |         |         | No           |

Recursion

- Infinite relations require recursion

      If person1 is a parent of person2
      then person1 is an ancestor of person2

      If person1 is a parent of person2
      and person2 is an ancestor of person3
      then person1 is an ancestor of person3

- Appropriate techniques are known as "inductive logic programming"
  - (e.g. Quinlan's FOIL)
  - Problems: (a) noise and (b) computational complexity

What's in an attribute?

- Each instance is described by a fixed predefined set of features, its "attributes"
- But: number of attributes may vary in practice
  - Possible solution: "irrelevant value" flag
- Related problem: existence of an attribute may depend on the value of another one
- Possible attribute types ("levels of measurement"):
  - Nominal, ordinal, interval and ratio

Nominal quantities

- Values are distinct symbols
  - Values themselves serve only as labels or names
  - Nominal comes from the Latin word for name
- Example: attribute "outlook" from weather data
  - Values: "sunny", "overcast", and "rainy"
- No relation is implied among nominal values (no ordering or distance measure)
- Only equality tests can be performed
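Returning to the Recursion slide: the two ancestor-of rules translate almost word for word into a recursive function. The sketch below hand-codes the rules rather than learning them, so it illustrates the target concept and not an inductive logic programming system such as FOIL. The names `PARENTS`, `is_parent_of` and `is_ancestor_of` are illustrative.

```python
from typing import Dict, Optional, Tuple

# Parent columns of the family table: child -> (parent1, parent2).
# None stands for the "?" entries.
PARENTS: Dict[str, Tuple[Optional[str], Optional[str]]] = {
    "Peter": (None, None),
    "Peggy": (None, None),
    "Steven": ("Peter", "Peggy"),
    "Graham": ("Peter", "Peggy"),
    "Pam": ("Peter", "Peggy"),
    "Ian": ("Grace", "Ray"),
    "Pippa": ("Grace", "Ray"),
    "Brian": ("Grace", "Ray"),
    "Anna": ("Pam", "Ian"),
    "Nikki": ("Pam", "Ian"),
}


def is_parent_of(person1: str, person2: str) -> bool:
    """True if person1 is listed as a parent of person2."""
    return person1 in PARENTS.get(person2, ())


def is_ancestor_of(person1: str, person3: str) -> bool:
    """Apply the two rules from the slide to the family table."""
    # Rule 1: a parent is an ancestor.
    if is_parent_of(person1, person3):
        return True
    # Rule 2: person1 is a parent of some person2 who is an ancestor of person3.
    return any(
        is_parent_of(person1, person2) and is_ancestor_of(person2, person3)
        for person2 in PARENTS
    )


if __name__ == "__main__":
    print(is_ancestor_of("Peter", "Nikki"))
    print(is_ancestor_of("Steven", "Anna"))
```

The recursion terminates because each recursive call moves one generation down an acyclic family tree; noisy data containing a parent cycle would break that assumption.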
