CS 331: Artificial Intelligence
Bayesian Networks (Inference)

Inference
• Suppose you are given a Bayesian network with the graph structure and the parameters all figured out
• Now you would like to use it to do inference
• You need inference to make predictions or classifications with a Bayes net

Another Example
• You are very sick and you visit your doctor.
• The doctor is able to get the following information from you:
  – HasFever = true
  – HasCough = true
  – HasBreathingProblems = true
  – AteBaconRecently = true
• What's the probability you have SwineFlu given the above?

Another Example
• Need to compute P(SwineFlu = true | HasFever = true, HasCough = true, HasBreathingProblems = true, AteBaconRecently = true)
• Suppose you pass out before you say a word to the doctor. The doctor is only able to determine that you have a fever. What is P(SwineFlu = true | HasFever = true)?

Query Example
P(SwineFlu = true | HasFever = true)
• Query variable: SwineFlu
• Evidence variable: HasFever
• Unobserved variables: HasCough, HasBreathingProblems, AteBaconRecently

Queries Formalized
We will use the following notation:
• $X$ = query variable
• $\mathbf{E} = \{E_1, \ldots, E_m\}$ is the set of evidence variables
• $\mathbf{e}$ = observed event
• $\mathbf{Y} = \{Y_1, \ldots, Y_l\}$ are the non-evidence (or hidden) variables
• The complete set of variables is $\mathbf{X} = \{X\} \cup \mathbf{E} \cup \mathbf{Y}$
Need to calculate the query $P(X \mid \mathbf{e})$
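As a small illustration of this notation, the sketch below splits the variables of the SwineFlu query into the query variable, the evidence variables, and the hidden variables. It assumes the network contains exactly the five variables named in the query example; `split_query` is a hypothetical helper, not part of any library.

```python
def split_query(all_vars, query_var, evidence):
    """Return (X, E, Y) for the query P(query_var | evidence).

    Hypothetical helper: X is the query variable, E the evidence
    variables, and Y every remaining (hidden) variable.
    """
    evidence_vars = set(evidence)
    if query_var in evidence_vars:
        raise ValueError("the query variable cannot also be evidence")
    hidden = [v for v in all_vars if v != query_var and v not in evidence_vars]
    return query_var, sorted(evidence_vars), hidden


# Assumed variable set: the five variables from the SwineFlu example.
all_vars = ["SwineFlu", "HasFever", "HasCough",
            "HasBreathingProblems", "AteBaconRecently"]

X, E, Y = split_query(all_vars, "SwineFlu", {"HasFever": True})
print(X)  # SwineFlu
print(E)  # ['HasFever']
print(Y)  # ['HasCough', 'HasBreathingProblems', 'AteBaconRecently']
```

The hidden variables are exactly the ones that must be summed out when the query is answered by enumeration.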

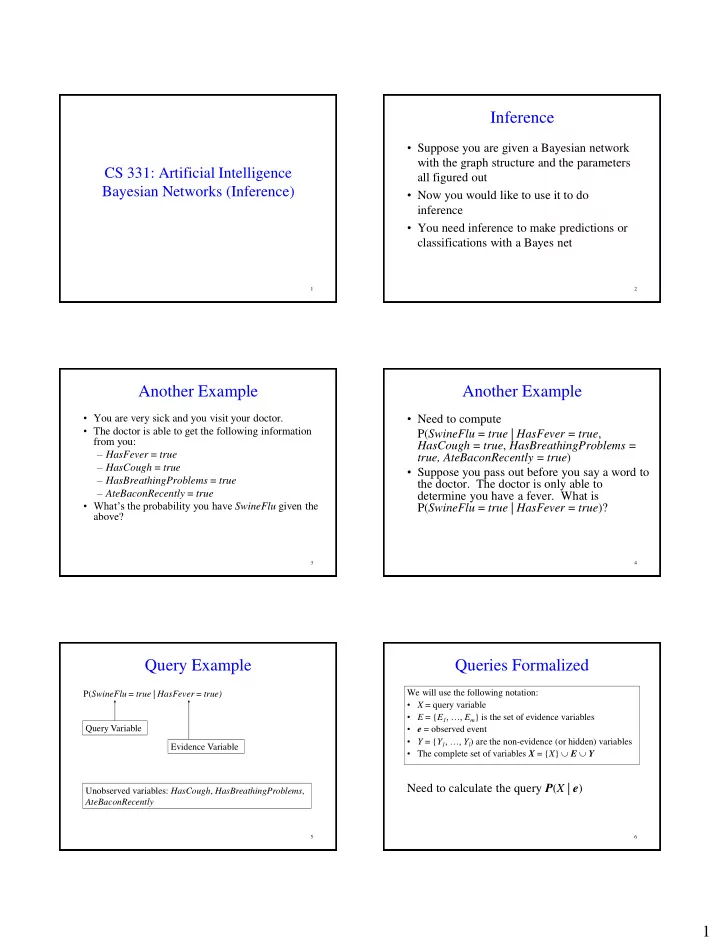

Inference by Enumeration
• Recall that (where $\alpha$ is a normalization constant):
$$P(X \mid \mathbf{e}) = \alpha\, P(X, \mathbf{e}) = \alpha \sum_{\mathbf{y}} P(X, \mathbf{e}, \mathbf{y})$$
$$P(x_1, \ldots, x_n) = \prod_{i=1}^{n} P(x_i \mid parents(X_i))$$
• This means you can answer queries by computing sums of products of conditional probabilities from the network

Example #1
[Figure: Bayes net with edges A → B, A → C, C → D]
Query: P(B=true | C=true)
How do you solve this? 2 steps:
1. Express it in terms of the joint probability distribution P(A, B, C, D)
2. Express the joint probability distribution in terms of the entries in the CPTs of the Bayes net

Example #1
Whenever you see a conditional like P(B=true | C=true), use the Chain Rule: P(B | C) = P(B, C) / P(C)
$$P(B{=}\text{true} \mid C{=}\text{true}) = \frac{P(B{=}\text{true}, C{=}\text{true})}{P(C{=}\text{true})}$$

Example #1
Whenever you need to get a subset of the variables, e.g. P(B, C), from the full joint distribution P(A, B, C, D), use marginalization: $P(X) = \sum_{y} P(X, Y{=}y)$
$$P(B{=}\text{true} \mid C{=}\text{true}) = \frac{P(B{=}\text{true}, C{=}\text{true})}{P(C{=}\text{true})} = \frac{\sum_a \sum_d P(A{=}a, B{=}\text{true}, C{=}\text{true}, D{=}d)}{\sum_a \sum_b \sum_d P(A{=}a, B{=}b, C{=}\text{true}, D{=}d)}$$

Example #1
To express the joint probability distribution in terms of the entries in the CPTs, use:
$$P(X_1, \ldots, X_N) = \prod_{i=1}^{N} P(X_i \mid Parents(X_i))$$
$$\frac{\sum_a \sum_d P(A{=}a, B{=}\text{true}, C{=}\text{true}, D{=}d)}{\sum_a \sum_b \sum_d P(A{=}a, B{=}b, C{=}\text{true}, D{=}d)} = \frac{\sum_a \sum_d P(A{=}a)\, P(B{=}\text{true} \mid A{=}a)\, P(C{=}\text{true} \mid A{=}a)\, P(D{=}d \mid C{=}\text{true})}{\sum_a \sum_b \sum_d P(A{=}a)\, P(B{=}b \mid A{=}a)\, P(C{=}\text{true} \mid A{=}a)\, P(D{=}d \mid C{=}\text{true})}$$

Example #1
Take the probabilities that don't depend on the terms in the summation and move them outside the summation. Only $P(D{=}d \mid C{=}\text{true})$ depends on d:
$$= \frac{\sum_a P(A{=}a)\, P(B{=}\text{true} \mid A{=}a)\, P(C{=}\text{true} \mid A{=}a) \sum_d P(D{=}d \mid C{=}\text{true})}{\sum_a \sum_b P(A{=}a)\, P(B{=}b \mid A{=}a)\, P(C{=}\text{true} \mid A{=}a) \sum_d P(D{=}d \mid C{=}\text{true})}$$
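The two steps above can be checked numerically with a brute-force sketch: build the full joint P(A, B, C, D) from the CPTs of the Example #1 network, then sum out the hidden variables for the numerator and the denominator. The slides leave the CPTs symbolic, so every number below is made up purely for illustration; `bern` and `joint` are hypothetical helper names.

```python
from itertools import product

TF = (True, False)

# Hypothetical CPT values: the slides leave Example #1 symbolic.
P_A = 0.3                              # P(A=true)
P_B_given_A = {True: 0.8, False: 0.1}  # P(B=true | A=a)
P_C_given_A = {True: 0.6, False: 0.2}  # P(C=true | A=a)
P_D_given_C = {True: 0.9, False: 0.4}  # P(D=true | C=c)


def bern(p_true, value):
    """P(V=value) for a Boolean variable V with P(V=true) = p_true."""
    return p_true if value else 1.0 - p_true


def joint(a, b, c, d):
    """Full joint from the CPTs: P(a) P(b|a) P(c|a) P(d|c)."""
    return (bern(P_A, a)
            * bern(P_B_given_A[a], b)
            * bern(P_C_given_A[a], c)
            * bern(P_D_given_C[c], d))


# Numerator P(B=true, C=true): sum out the hidden variables A and D.
numerator = sum(joint(a, True, True, d) for a, d in product(TF, repeat=2))
# Denominator P(C=true): sum out A, B and D.
denominator = sum(joint(a, b, True, d) for a, b, d in product(TF, repeat=3))

p = numerator / denominator
print(round(p, 5))  # 0.49375
```

This enumerates every row of the joint, which is exactly the exponential cost that moving terms outside the summations helps reduce.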

Example #1
$\sum_d P(D{=}d \mid C{=}\text{true})$ sums to 1 in both the numerator and the denominator, which leaves:
$$= \frac{\sum_a P(A{=}a)\, P(B{=}\text{true} \mid A{=}a)\, P(C{=}\text{true} \mid A{=}a)}{\sum_a \sum_b P(A{=}a)\, P(B{=}b \mid A{=}a)\, P(C{=}\text{true} \mid A{=}a)}$$

Example #1
$P(C{=}\text{true} \mid A{=}a)$ doesn't depend on b, so it can move to the left of $\sum_b$; then $\sum_b P(B{=}b \mid A{=}a)$ sums to 1:
$$= \frac{\sum_a P(A{=}a)\, P(B{=}\text{true} \mid A{=}a)\, P(C{=}\text{true} \mid A{=}a)}{\sum_a P(A{=}a)\, P(C{=}\text{true} \mid A{=}a) \sum_b P(B{=}b \mid A{=}a)} = \frac{\sum_a P(A{=}a)\, P(B{=}\text{true} \mid A{=}a)\, P(C{=}\text{true} \mid A{=}a)}{\sum_a P(A{=}a)\, P(C{=}\text{true} \mid A{=}a)}$$

Example #2
[Figure: Bayes net with edges B → A, E → A, A → J, A → M]
$$P(B{=}\text{true} \mid J{=}\text{true}, M{=}\text{true}) = \frac{P(B{=}\text{true}, J{=}\text{true}, M{=}\text{true})}{P(J{=}\text{true}, M{=}\text{true})}$$
$$= \frac{\sum_e \sum_a P(B{=}\text{true}, E{=}e, A{=}a, J{=}\text{true}, M{=}\text{true})}{\sum_b \sum_e \sum_a P(B{=}b, E{=}e, A{=}a, J{=}\text{true}, M{=}\text{true})}$$
$$= \frac{\sum_e \sum_a P(B{=}\text{true})\, P(E{=}e)\, P(A{=}a \mid B{=}\text{true}, E{=}e)\, P(J{=}\text{true} \mid A{=}a)\, P(M{=}\text{true} \mid A{=}a)}{\sum_b \sum_e \sum_a P(B{=}b)\, P(E{=}e)\, P(A{=}a \mid B{=}b, E{=}e)\, P(J{=}\text{true} \mid A{=}a)\, P(M{=}\text{true} \mid A{=}a)}$$
$$= \frac{P(B{=}\text{true}) \sum_e P(E{=}e) \sum_a P(A{=}a \mid B{=}\text{true}, E{=}e)\, P(J{=}\text{true} \mid A{=}a)\, P(M{=}\text{true} \mid A{=}a)}{\sum_b P(B{=}b) \sum_e P(E{=}e) \sum_a P(A{=}a \mid B{=}b, E{=}e)\, P(J{=}\text{true} \mid A{=}a)\, P(M{=}\text{true} \mid A{=}a)}$$

Practice
[Figure: Bayes net over nodes A, B, C, D, E; the edges are not recoverable from the text]
Write out the equations for the following probabilities using probabilities you can obtain from the Bayesian network. You will have to leave it in symbolic form because the CPTs are not shown, but simplify your answer as much as possible.
1. P(A=true, B=true, C=true, D=true, E=true)

CW: Practice
2. P(B=true | D=true)
3. P(A=true, D=true, E=true | B=true, C=true)
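The final Example #2 expression can be evaluated directly with nested sums. The slides give no CPT values, so this sketch assumes the numbers commonly used for the burglary network in Russell and Norvig's textbook; `bern` and `term` are hypothetical helper names. The function `term(b)` computes one term of the simplified expression, so the numerator is `term(True)` and the denominator sums it over both values of b.

```python
TF = (True, False)

# Assumed CPTs: the values commonly used for this burglary network in
# Russell & Norvig's textbook. The slides themselves give no numbers.
P_B = 0.001                                        # P(B=true)
P_E = 0.002                                        # P(E=true)
P_A = {(True, True): 0.95, (True, False): 0.94,    # P(A=true | B=b, E=e)
       (False, True): 0.29, (False, False): 0.001}
P_J = {True: 0.90, False: 0.05}                    # P(J=true | A=a)
P_M = {True: 0.70, False: 0.01}                    # P(M=true | A=a)


def bern(p_true, value):
    """P(V=value) for a Boolean variable V with P(V=true) = p_true."""
    return p_true if value else 1.0 - p_true


def term(b):
    """P(B=b) * sum_e P(E=e) * sum_a P(A=a|b,e) P(J=true|a) P(M=true|a)."""
    total = 0.0
    for e in TF:
        inner = sum(bern(P_A[(b, e)], a) * P_J[a] * P_M[a] for a in TF)
        total += bern(P_E, e) * inner
    return bern(P_B, b) * total


numerator = term(True)                   # B fixed to true
denominator = sum(term(b) for b in TF)   # outer sum over b
p = numerator / denominator
print(round(p, 3))  # 0.284
```

Pulling P(B=b) and P(E=e) outside the inner sums means each CPT entry is multiplied in once per enclosing loop rather than once per full joint row.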

Complexity of Exact Inference
[Figure: Burglary, Earthquake → Alarm → JohnCalls, MaryCalls]
• The Burglary/Earthquake Bayesian network is an example of a polytree
• Singly connected networks (aka polytrees) have at most one undirected path between any two nodes in the network

Complexity of Exact Inference
• Polytrees have a nice property: the time and space complexity of exact inference in polytrees is linear in the size of the network (the number of CPT entries); if every node has a bounded number of parents, this is linear in the number of variables
• What about multiply connected networks?
[Figure: multiply connected network over Cloudy, Sprinkler, Rain, Wet Grass]

Complexity of Exact Inference
• What about multiply connected networks?
• Exponential time and space complexity in the number of variables in the worst case
• Bad news: inference in Bayesian networks is NP-hard
• Even worse news: inference is #P-hard (at least as hard as NP-complete problems, and widely believed to be strictly harder)

The Good News
• Although exact inference is NP-hard, approximate inference is often tractable in practice
  – Lots of promising methods like sampling, MCMC, variational methods, etc.
• Approximate inference is a current research topic in Machine Learning

CW: Practice
[Figure: Bayes net with edges B → A, C → A]

| B | P(B) |
|---|---|
| false | 0.25 |
| true | 0.75 |

| C | P(C) |
|---|---|
| false | 0.1 |
| true | 0.9 |

| B | C | A | P(A \| B, C) |
|---|---|---|---|
| false | false | false | 0.1 |
| false | false | true | 0.9 |
| false | true | false | 0.2 |
| false | true | true | 0.8 |
| true | false | false | 0.3 |
| true | false | true | 0.7 |
| true | true | false | 0.4 |
| true | true | true | 0.6 |

4. What is P(B=false, C=false)?
5. Can you come up with another Bayes net structure (using only the 3 nodes above) that represents the same joint probability distribution?
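The singly connected property can be tested mechanically. A DAG has at most one undirected path between any two nodes exactly when its undirected skeleton has no cycle, and union-find detects such a cycle edge by edge. This is a sketch assuming the network is given as node names plus directed edges; `is_polytree` is a hypothetical helper. The Burglary edges match the factorization in Example #2, while the Cloudy/Sprinkler/Rain/Wet Grass edges are assumed from the standard textbook version of that network, since only its node names appear here.

```python
def is_polytree(nodes, edges):
    """True if the undirected skeleton of the DAG has no cycle."""
    leader = {n: n for n in nodes}   # union-find forest over the nodes

    def find(n):
        while leader[n] != n:
            leader[n] = leader[leader[n]]  # path halving
            n = leader[n]
        return n

    for u, v in edges:               # edge direction is ignored
        ru, rv = find(u), find(v)
        if ru == rv:                 # already joined: a second undirected path
            return False
        leader[ru] = rv
    return True


burglary = (["Burglary", "Earthquake", "Alarm", "JohnCalls", "MaryCalls"],
            [("Burglary", "Alarm"), ("Earthquake", "Alarm"),
             ("Alarm", "JohnCalls"), ("Alarm", "MaryCalls")])

# Assumed edges (standard textbook sprinkler network).
sprinkler = (["Cloudy", "Sprinkler", "Rain", "WetGrass"],
             [("Cloudy", "Sprinkler"), ("Cloudy", "Rain"),
              ("Sprinkler", "WetGrass"), ("Rain", "WetGrass")])

print(is_polytree(*burglary))   # True
print(is_polytree(*sprinkler))  # False
```

In the sprinkler network, Cloudy reaches Wet Grass both through Sprinkler and through Rain, which is what makes it multiply connected.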

What You Should Know
• How to do exact inference in probabilistic queries of Bayes nets
• The complexity of inference for polytrees and multiply connected networks
