Improving the performance of data servers on multicore architectures

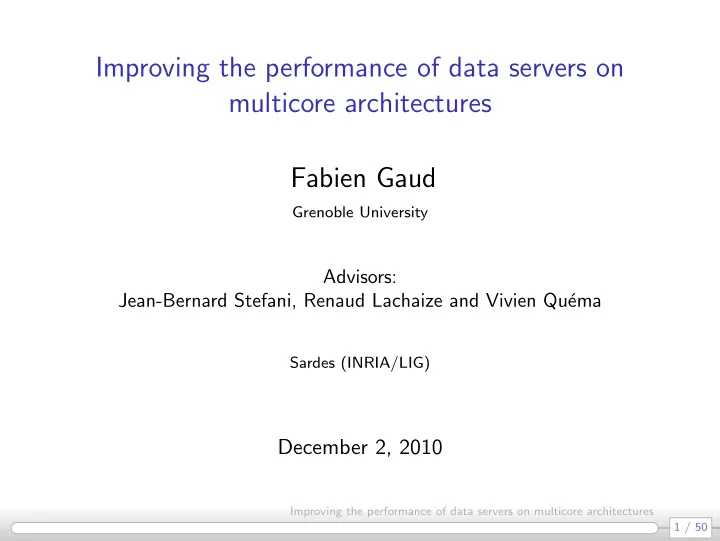


Fabien Gaud, Grenoble University
Advisors: Jean-Bernard Stefani, Renaud Lachaize and Vivien Quéma
Sardes (INRIA/LIG), December 2, 2010

Processor evolution
- Before ~2006: one core, with a regular increase of clock frequency.
- Since then: almost no increase of clock frequency, but an increasing number of cores: multicore, NUMA and manycore architectures.

Multicore is a hot topic
Legacy applications do not efficiently leverage multicore hardware.
Research topics: programming models and languages, operating system abstractions and internals, runtimes and libraries, applications.
Active research field: Corey (OSDI'08), Barrelfish (SOSP'09), Helios (SOSP'09), PK (OSDI'10).

This thesis
Application domain: data servers, a.k.a. networked services.
Goal: improve the performance of data servers on multicore architectures.
Contributions:
- efficient multicore event-driven programming;
- scaling the Apache Web server on NUMA multicore systems.

#1: Efficient multicore event-driven programming
CFSE 2009 (best paper award), ICDCS 2010.

Event-driven programming
The application is structured as a set of handlers that process events. An event can be triggered by an I/O operation or produced internally by the application. Events are stored in a queue and processed by a single thread.
(Figure: a control loop takes each event from the queue and dispatches it to one of handlers 1 to 4.)
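The control loop above can be sketched in a few lines. This is a minimal single-threaded illustration in Python, not code from the thesis or from Libasync-SMP; the `EventLoop` class and the `on_read`, `on_process` and `on_write` handlers are hypothetical names. Because handlers run one at a time to completion, they can share state without locks.

```python
from collections import deque

class EventLoop:
    """Single-threaded control loop: events wait in one FIFO queue and are
    dispatched one at a time to the handler they name."""

    def __init__(self):
        self.queue = deque()   # pending events: (handler, payload) pairs

    def post(self, handler, payload=None):
        # Events may come from I/O notifications or be produced by handlers.
        self.queue.append((handler, payload))

    def run(self):
        # Handlers run to completion, so no locking is needed between them.
        while self.queue:
            handler, payload = self.queue.popleft()
            handler(self, payload)

# Hypothetical handlers for a toy request pipeline.
log = []

def on_read(loop, request):
    log.append(("read", request))
    loop.post(on_process, request.upper())   # internally produced event

def on_process(loop, data):
    log.append(("process", data))
    loop.post(on_write, data)

def on_write(loop, data):
    log.append(("write", data))

loop = EventLoop()
loop.post(on_read, "get /a")   # stands in for an I/O-triggered event
loop.post(on_read, "get /b")
loop.run()
```

Note how the two requests interleave: the FIFO order, not the request, decides which handler runs next.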

Multicore event-driven programming
Goal: execute multiple handlers concurrently.
Challenges: managing concurrency and balancing load across cores.
Solutions: N-Copy, or 1-Copy with synchronization.

N-Copy
Principle: run one instance of the application per core.
(Figure: four instances, App1 to App4, each running on its own core, Core 1 to Core 4, with its own control loop and event queue.)

N-Copy (2)
Advantages: no concurrency management needed; no application modification needed.
Drawbacks: not applicable to all applications; data is duplicated across the copies; external load balancing is required.
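A hedged sketch of N-Copy, assuming a toy server whose only state is a content cache. Each `Instance` (a hypothetical class) plays the role of one per-core copy, and `ExternalBalancer` stands in for the external load balancer, here a static `client_id % n_cores` rule chosen purely for illustration. It makes two of the drawbacks above visible: every copy ends up holding its own version of the same data, and balancing happens outside the application.

```python
class Instance:
    """One independent copy of the application, conceptually pinned to one core.
    It owns private state, so no cross-core synchronization is needed."""

    def __init__(self, core):
        self.core = core
        self.cache = {}    # private: the same entry may exist in every instance
        self.handled = 0

    def handle(self, client_id, key):
        self.handled += 1
        if key not in self.cache:
            self.cache[key] = f"content of {key}"   # e.g. a file loaded from disk
        return self.cache[key]

class ExternalBalancer:
    """Hypothetical stand-in for the external load balancer N-Copy requires:
    each client is statically mapped to one instance."""

    def __init__(self, n_cores):
        self.instances = [Instance(core) for core in range(n_cores)]

    def dispatch(self, client_id, key):
        target = self.instances[client_id % len(self.instances)]
        return target.handle(client_id, key)

balancer = ExternalBalancer(n_cores=4)
for client in range(8):
    balancer.dispatch(client, "/index.html")

# How many private copies of the same entry now exist?
copies = sum("/index.html" in inst.cache for inst in balancer.instances)
```

With four cores, the same entry is cached four times, once per instance.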

1-Copy with synchronization
Principle: a single instance of the application runs on multiple cores.
Concurrency can be managed using locks, STM (software transactional memory) or annotations.
Load balancing can be achieved with static placement, workgiving or workstealing.
The chosen approach is the one implemented in Libasync-SMP (Usenix'03).

Libasync-SMP: concurrency management
Annotations (colors) are set on events. Events with the same color are never processed concurrently, whereas events with different colors may be processed in parallel on different cores.
(Figure: a single application, App1, whose event queue holds events of colors 0 to 3; each color is handled by the control loop of one of cores 1 to 4.)
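The color rule can be illustrated with a minimal Python sketch. It assumes a static `color % N_CORES` mapping from colors to per-core queues, a simplification for illustration rather than Libasync-SMP's actual code; `ColoredEvent` and `post` are hypothetical names. Since all events of one color land in the same FIFO, they execute sequentially and in order, while different colors can proceed on different cores.

```python
from collections import deque

N_CORES = 4

class ColoredEvent:
    def __init__(self, color, name):
        self.color = color   # annotation chosen by the programmer
        self.name = name

# One event queue per core.
core_queues = [deque() for _ in range(N_CORES)]

def core_for(color):
    # Assumption: a static color-to-core mapping. Every event of a given color
    # lands on the same core, so same-color events never run concurrently.
    return color % N_CORES

def post(event):
    core_queues[core_for(event.color)].append(event)

for i, color in enumerate([0, 1, 2, 3, 0, 1, 0]):
    post(ColoredEvent(color, f"e{i}"))

# All color-0 events are queued, in posting order, on core 0.
color0_on_core0 = [e.name for e in core_queues[0]]
```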

Libasync-SMP: load balancing
Load balancing is done through workstealing: an idle core steals events queued on another core.
(Figure: same layout as the concurrency management slide, with events of colors 0 to 3 distributed over cores 1 to 4.)
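A minimal sketch of color-aware workstealing, under two stated assumptions: an idle core steals from the most loaded core, and it moves all pending events of one color at once, so that same-color events still never run concurrently. The `Core`, `post` and `steal` names are hypothetical; this is not Libasync-SMP's implementation, and it ignores synchronization as well as events already running on the victim.

```python
from collections import defaultdict, deque

class Core:
    def __init__(self, cid):
        self.cid = cid
        self.colors = defaultdict(deque)   # color -> pending events of that color

    def pending(self):
        return sum(len(q) for q in self.colors.values())

owner = {}   # color -> the one core currently allowed to run events of that color

def post(cores, color, event):
    # Assumption: a color's first event picks its core statically (color mod #cores);
    # later events follow the color wherever it currently lives.
    core = owner.setdefault(color, cores[color % len(cores)])
    core.colors[color].append(event)

def steal(thief, cores):
    """Called by an idle core. Moves ALL pending events of one color, so that
    events of that color still run on a single core, one at a time.
    (A real runtime must also avoid stealing a color whose event is currently
    executing on the victim; this sequential sketch has no in-flight events.)"""
    victim = max(cores, key=Core.pending)   # assumption: most loaded core
    if victim is thief or victim.pending() == 0:
        return None
    color = next(c for c, q in victim.colors.items() if q)
    thief.colors[color] = victim.colors.pop(color)
    owner[color] = thief
    return color

cores = [Core(0), Core(1)]
for i in range(3):
    post(cores, 0, f"a{i}")
for i in range(2):
    post(cores, 2, f"b{i}")   # color 2 also maps to core 0

stolen = steal(cores[1], cores)   # core 1 is idle
post(cores, 0, "a3")              # new color-0 events now go to core 1
```

Moving a whole color at a time is what keeps the color guarantee intact; stealing single events would let two cores run the same color.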

1-Copy with synchronization
Advantages: allows sharing between cores; allows load balancing between cores.
Drawbacks: the application must be modified; efficient load balancing is difficult.

Workstealing performance: SFS
(Figure: throughput in MB/sec of Libasync-smp without workstealing and with workstealing, Libasync-smp - WS.)
Workstealing yields a 35% throughput increase.

Workstealing performance: Web server
(Figure: throughput in MB/sec of Libasync-smp without workstealing and with workstealing, Libasync-smp - WS.)
Workstealing causes a 33% throughput decrease.

What is the problem?
- Fine-grain events: the stealing time (197 Kcycles) is much larger than the stolen processing time (20 Kcycles).
- Inefficient cache usage: +146% L2 cache misses.
- Inefficient workstealing implementation: O(n) complexity.

Contributions
A new workstealing algorithm and a new runtime implementation, addressing each problem:
- Fine-grain events. Algorithm: steal events with a high execution time.
- Inefficient cache usage. Algorithm: steal cache-friendly events, and take the cache hierarchy into account.
- Inefficient workstealing implementation. Runtime: mitigate stealing costs.

Idea #1: Take execution time into account
Problem: the stealing cost is not always amortized. Many event handlers are relatively fine grain, while workstealing may have a significant cost.
Solution: time-left stealing, which knows at any time which colors are worth stealing. (Handler execution time is set by the programmer.)
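Time-left stealing can be sketched as follows, assuming that each handler carries a programmer-supplied cost estimate in cycles and that a steal has a roughly fixed cost. `HANDLER_COST`, `STEAL_COST` and all numeric values are hypothetical, and the choice among worthy colors (prefer the one with the most work left) is an illustrative policy, not necessarily the thesis's. Each color maintains its time left incrementally, so the runtime knows at any moment which colors are worth stealing.

```python
from collections import deque

STEAL_COST = 10_000   # hypothetical estimate of the cost of one steal, in cycles

# Handler execution times as annotated by the programmer (hypothetical, in cycles).
HANDLER_COST = {"parse": 2_000, "read_file": 40_000, "send": 5_000}

class ColorQueue:
    def __init__(self, color):
        self.color = color
        self.events = deque()
        self.time_left = 0   # sum of the annotated costs of the queued events

    def push(self, handler):
        self.events.append(handler)
        self.time_left += HANDLER_COST[handler]

    def pop(self):
        handler = self.events.popleft()
        self.time_left -= HANDLER_COST[handler]
        return handler

def worth_stealing(queue):
    # Only steal when the work moved amortizes the cost of the steal itself.
    return queue.time_left > STEAL_COST

def pick_color(victim_queues):
    candidates = [q for q in victim_queues if worth_stealing(q)]
    # Illustrative policy: among worthy colors, take the one with the most work left.
    return max(candidates, key=lambda q: q.time_left, default=None)

small = ColorQueue(1)
small.push("parse")
small.push("send")        # 7,000 cycles of work left: not worth a steal
big = ColorQueue(2)
big.push("read_file")     # 40,000 cycles of work left
chosen = pick_color([small, big])
```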

Idea #2: Take caches into account
Problem: workstealing can reduce cache efficiency, because stealing events increases cache misses. Example: event handlers accessing large, long-lived data sets.
Solution 1: penalty-aware stealing. Penalties are set on handlers based on their cache access pattern. (Penalties are set manually based on preliminary profiling.)
Solution 2: locality-aware stealing. Neighboring cores are given priority when stealing.
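Both cache-aware refinements can be combined in one victim-selection function. This Python sketch assumes a hypothetical 8-core topology (pairs of cores sharing an L2, groups of four sharing an L3), treats a color's penalty as an extra cost in cycles that the stolen work must also cover, and ranks worthy colors by time left minus penalty. These rules and all numbers are assumptions for illustration, not the thesis's exact formulas; `distance` and `steal_target` are hypothetical names.

```python
STEAL_COST = 10_000   # hypothetical per-steal cost, in cycles

def distance(a, b):
    """Hypothetical topology: cores 2k and 2k+1 share an L2,
    cores 4k..4k+3 share an L3 (one die)."""
    if a == b:
        return 0
    if a // 2 == b // 2:
        return 1   # same L2
    if a // 4 == b // 4:
        return 2   # same L3, same die
    return 3       # different die

def steal_target(thief, load):
    """load maps core -> list of (color, time_left, penalty) tuples.
    The penalty (cycles) models the extra cache misses a color suffers when it
    moves, e.g. as measured by profiling; here it is just a given number."""
    victims = sorted((c for c in load if c != thief),
                     key=lambda c: (distance(thief, c), c))
    # Locality-aware: try the nearest victims first.
    for victim in victims:
        # Penalty-aware: the moved work must pay for the steal AND the penalty.
        worthy = [(color, t, p) for color, t, p in load[victim]
                  if t > STEAL_COST + p]
        if worthy:
            color = max(worthy, key=lambda x: x[1] - x[2])[0]
            return victim, color
    return None

load = {
    0: [],                        # the idle thief
    1: [(10, 15_000, 20_000)],    # shares an L2, but the color is cache-heavy
    2: [(20, 30_000, 1_000)],     # same die
    5: [(30, 90_000, 0)],         # other die, more work
}
target = steal_target(0, load)
```

Core 1 is the closest victim, but its only color would not cover its cache penalty, so the thief settles on core 2 on the same die before considering the remote die.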

Runtime implementation
(Figure: the data structures of a core X: color-queues for colors 0 to 3, a core-queue, a stealing-queue, and the control loop.)
- One color-queue per color.
- One core-queue per core, which links the color-queues.
- One stealing-queue per core.
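One possible reading of these structures, sketched in Python under explicit assumptions: the stealing-queue is taken to hold only the colors whose time left exceeds a hypothetical `STEAL_COST`, and it is updated on every push and pop. With that invariant, a thief picks a color in constant time instead of scanning events, which targets the O(n) problem identified earlier. Class and method names are hypothetical, and the per-core locking a real multicore runtime needs is omitted.

```python
from collections import OrderedDict, deque

STEAL_COST = 10_000   # hypothetical threshold, in cycles

class ColorQueue:
    """All pending events of one color, plus the work they represent."""
    def __init__(self, color):
        self.color = color
        self.events = deque()   # (handler, annotated cost) pairs
        self.time_left = 0

class CoreState:
    """Per-core structures: a core-queue linking this core's color-queues, and
    a stealing-queue holding only the colors currently worth stealing."""
    def __init__(self):
        self.core_queue = OrderedDict()       # color -> ColorQueue
        self.stealing_queue = OrderedDict()   # color -> ColorQueue (a subset)

    def _refresh(self, cq):
        # Maintained incrementally on every push/pop, so thieves never scan events.
        if cq.time_left > STEAL_COST:
            self.stealing_queue[cq.color] = cq
        else:
            self.stealing_queue.pop(cq.color, None)

    def push(self, color, handler, cost):
        cq = self.core_queue.get(color)
        if cq is None:
            cq = self.core_queue[color] = ColorQueue(color)
        cq.events.append((handler, cost))
        cq.time_left += cost
        self._refresh(cq)

    def pop(self, color):
        cq = self.core_queue[color]
        handler, cost = cq.events.popleft()
        cq.time_left -= cost
        self._refresh(cq)
        return handler

    def steal_from(self, victim):
        # Constant-time choice: the oldest entry of the victim's stealing-queue.
        if not victim.stealing_queue:
            return None
        color, cq = victim.stealing_queue.popitem(last=False)
        del victim.core_queue[color]
        self.core_queue[color] = cq
        self._refresh(cq)
        return color

a, b = CoreState(), CoreState()
a.push(1, "parse", 2_000)
a.push(2, "read_file", 40_000)
stolen = b.steal_from(a)
```

Here `b.steal_from(a)` moves color 2, the only color whose time left exceeds the threshold, while color 1 stays on core `a`.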

Performance evaluation: SFS
(Figure: throughput in MB/sec of Libasync-smp, Libasync-smp - WS and Mely - WS.)
With Mely - WS, there is no throughput degradation.

Performance evaluation: Web server
(Figure: throughput in MB/sec of Libasync-smp, Libasync-smp - WS and Mely - WS.)
Mely - WS yields a 73% throughput improvement.

Web server profiling

| Web server configuration | Stealing time | Stolen time | Cache misses/event |
|---|---|---|---|
| Libasync-SMP - WS | 197 Kcycles | 20 Kcycles | 21 |
| Mely - WS | 6 Kcycles | 23 Kcycles | 9 |

With Mely - WS, the stealing time (6 Kcycles) is lower than the stolen processing time (23 Kcycles).
Improved cache efficiency: -57% L2 cache misses.
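Both conclusions follow from the profiling table by simple arithmetic:

```latex
\begin{aligned}
\text{Libasync-SMP - WS:}\quad & \frac{197}{20} \approx 9.9 && \text{(a steal costs about ten times the work it moves)}\\
\text{Mely - WS:}\quad & 6 < 23 && \text{(the steal is amortized)}\\
\text{L2 misses per event:}\quad & 1 - \frac{9}{21} = \frac{12}{21} \approx 0.57 && \text{(i.e. } -57\%\text{)}
\end{aligned}
```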

Summary
Goal: an efficient runtime for multicore event-driven systems.
Problem: workstealing sometimes degrades performance.
Contributions: a new workstealing algorithm and a new runtime implementation.
Results: throughput improved by up to 73%.

#2: Scaling the Apache Web server on NUMA multicore systems
Under submission.

Problem
(Figure: number of clients per die as a function of the number of dies, 1 to 4, for ideal scalability and for Apache; Apache falls 26% short of ideal scalability.)
The Apache Web server does not scale on NUMA architectures.

What can we do?
Address scalability issues at the OS level: Corey (OSDI'08), Barrelfish (SOSP'09), PK (OSDI'10).

Apache on PK
(Figure: number of clients per die as a function of the number of dies, 1 to 4, for ideal scalability, Apache on PK and Apache; Apache on PK falls 22% short of ideal scalability.)
Running Apache on PK does not solve the scalability issues.

What do we propose?
Addressing scalability issues at the OS level is not sufficient: some issues lie in the application itself, and some are difficult to handle (e.g., scheduling).
Approach: address scalability issues at the application level.

Methodology
Consider both hardware and software bottlenecks.
- Hardware bottlenecks: the processor interconnect and distant memory accesses.
- Software bottlenecks: synchronization primitives.

Hardware testbed
4 processors (dies 0 to 3), 16 cores (C0 to C15).
(Figure: each die holds four cores with private L1 and L2 caches, a shared L3 cache and local DRAM; I/O is attached to two of the dies; links are labeled 61 Gb/s and 24 Gb/s.)
