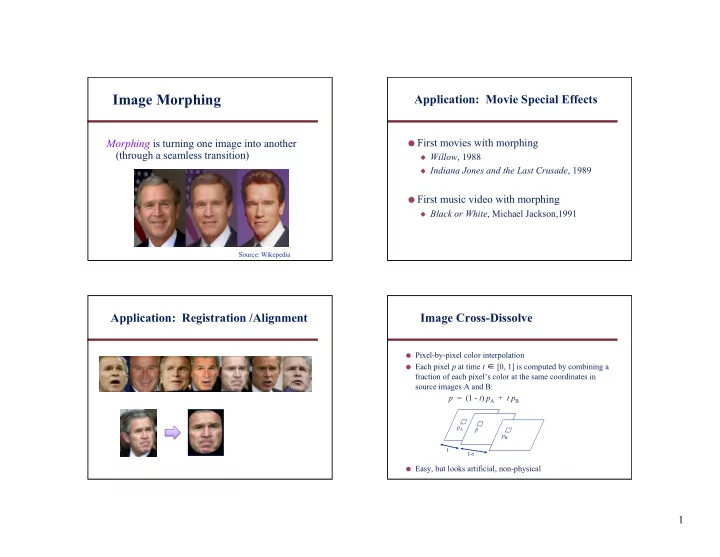

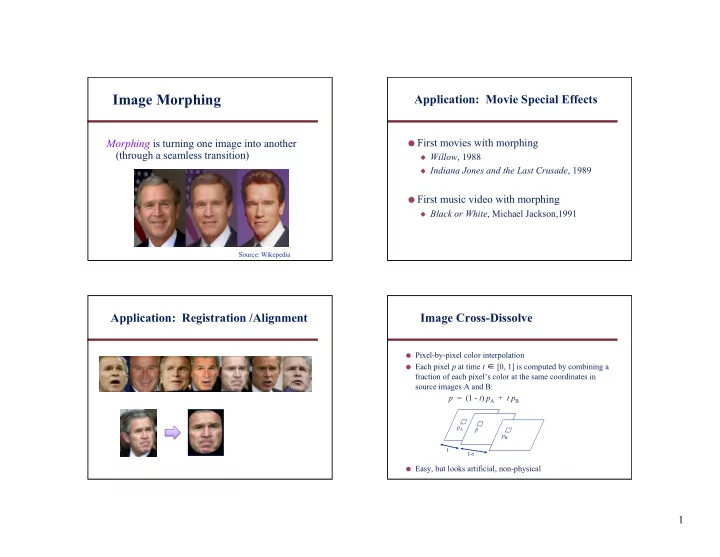

Image Morphing
● Morphing is turning one image into another through a seamless transition

Application: Movie Special Effects
● First movies with morphing
  ◆ Willow, 1988
  ◆ Indiana Jones and the Last Crusade, 1989
● First music video with morphing
  ◆ Black or White, Michael Jackson, 1991
Source: Wikipedia

Application: Registration/Alignment
(figure)

Image Cross-Dissolve
● Pixel-by-pixel color interpolation
● Each pixel p at time t ∈ [0, 1] is computed by combining a fraction of the colors at the same coordinates in source images A and B:
$$p = (1 - t)\,p_A + t\,p_B$$
(figure: p_A weighted by 1 − t and p_B weighted by t, blended into p)
● Easy, but looks artificial and non-physical
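The cross-dissolve formula can be sketched in a few lines of plain Python. This is a minimal illustration, assuming images are stored as nested lists of rows whose pixels are tuples of channel values (e.g. RGB); the function name `cross_dissolve` is hypothetical.

```python
def cross_dissolve(img_a, img_b, t):
    """Blend two same-sized images pixel by pixel: p = (1 - t) * p_A + t * p_B.

    Images are lists of rows; each pixel is a tuple of channel values.
    t = 0 reproduces A and t = 1 reproduces B.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    if len(img_a) != len(img_b) or any(len(ra) != len(rb) for ra, rb in zip(img_a, img_b)):
        raise ValueError("images must have the same size")
    return [
        [tuple((1.0 - t) * ca + t * cb for ca, cb in zip(pa, pb))
         for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(img_a, img_b)
    ]


# Usage: a 1x2 image and its inverse, blended a quarter of the way.
a = [[(0, 0, 0), (255, 255, 255)]]
b = [[(255, 255, 255), (0, 0, 0)]]
print(cross_dissolve(a, b, 0.25))  # [[(63.75, 63.75, 63.75), (191.25, 191.25, 191.25)]]
```

Because every pixel is blended at the same coordinates regardless of image content, features that are not already aligned appear doubled ("ghosting"), which is why the result looks artificial.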

Jason Salavon: “The Late Night Triad” (figure)
Jason Salavon: “100 Special Moments” (figure)
http://www.salavon.com/

Align, then Cross-Dissolve
● Aligning a rigid object with a global warp is okay: the picture is still valid
● But we have different objects, so the transformation is non-rigid

Image Morphing = Object Averaging
● The aim is to find “an average” between two objects
  ◆ Not an average of two images of objects…
  ◆ …but an image of the average object
  ◆ How can we make a smooth transition in time?
  ◆ Do a “weighted average” over time
● How do we know what the average object looks like?
  ◆ We haven’t a clue!
  ◆ But we can often fake something reasonable

Dog Averaging
● What to do?
  ◆ Cross-dissolve doesn’t work
  ◆ Global alignment doesn’t work: it cannot be done with a global transformation (e.g., affine)
● Feature matching!
  ◆ Nose to nose, tail to tail, etc.
  ◆ This is a local, non-parametric warp

Averaging Points
● What’s the average of P and Q? Let $v = Q - P$:
  ◆ $P + 0.5\,v = P + 0.5\,(Q - P) = 0.5\,P + 0.5\,Q$
  ◆ $P + 1.5\,v = P + 1.5\,(Q - P) = -0.5\,P + 1.5\,Q$ (extrapolation)
● Linear interpolation (affine combination): the new point $aP + bQ$ is defined only when $a + b = 1$, so $aP + bQ = aP + (1 - a)\,Q$
● P and Q can be anything:
  ◆ Points on a plane (2D) or in space (3D)
  ◆ Colors in RGB or HSV (3D)
  ◆ Whole images (m-by-n D), etc.

Idea: Local Warp, then Cross-Dissolve
● Morphing procedure: for every t,
  1. Find the average shape (the “mean dog”): local warping
  2. Find the average color: cross-dissolve the warped images

Image Morphing
● Morphing = warping (shape, geometric) + cross-dissolving (color, photometric)
● Warp = feature specification + warp generation
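The point-averaging arithmetic can be checked numerically. The sketch below, with hypothetical helper names, computes $P + s\,v$ directly and as the affine combination $aP + (1 - a)Q$; matching $s = 1.5$ with $a = -0.5$ confirms the extrapolation identity $P + 1.5\,v = -0.5\,P + 1.5\,Q$. Points are assumed to be equal-length tuples, so the same code works for 2D/3D points or colors.

```python
def affine_combination(p, q, a):
    """Return a*P + (1 - a)*Q; the weights sum to 1, so the result is well defined.

    0 <= a <= 1 interpolates between Q and P; any other a extrapolates.
    """
    if len(p) != len(q):
        raise ValueError("P and Q must have the same dimension")
    return tuple(a * pi + (1.0 - a) * qi for pi, qi in zip(p, q))


def along(p, q, s):
    """Return P + s*v with v = Q - P (s = 0 gives P, s = 1 gives Q)."""
    return tuple(pi + s * (qi - pi) for pi, qi in zip(p, q))


P, Q = (0.0, 0.0), (2.0, 4.0)
print(along(P, Q, 0.5), affine_combination(P, Q, 0.5))   # (1.0, 2.0) (1.0, 2.0)
print(along(P, Q, 1.5), affine_combination(P, Q, -0.5))  # (3.0, 6.0) (3.0, 6.0)
```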

Local (Non-Parametric) Image Warping
● Need to specify a more detailed warp function
  ◆ Global warps are functions of a few (e.g., 2, 4, 8) parameters
  ◆ Non-parametric warps u(x, y) and v(x, y) can be defined independently for every single location (x, y)
  ◆ Once we know the vector field (u, v), we can easily warp each pixel (use backward warping with interpolation)

Mesh-based Warp Specification
● How can we specify the warp?
  ◆ Specify corresponding spline control points
  ◆ Interpolate to a complete warping function

Sparse Warp Specification
● How can we specify the warp?
  ◆ Specify corresponding line segments (vectors)
  ◆ Interpolate to a complete warping function

Image Morphing
● Mesh-based image morphing
  ◆ G. Wolberg, Digital Image Warping, 1990
  ◆ Warp between corresponding grid points in source and destination images
  ◆ Interpolate between grid points, e.g., linearly using the three closest grid points
  ◆ Fast, but hard to control so as to avoid unwanted distortions
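Backward warping with interpolation, given a dense vector field, might look like the sketch below. It assumes grayscale images stored as lists of rows, assumes the convention that output pixel (x, y) takes its value from source location (x + u(x, y), y + v(x, y)), and clamps out-of-range samples to the border; all of these choices and the function names are illustrative, not taken from the slides.

```python
import math


def bilinear_sample(img, x, y):
    """Sample a grayscale image (list of rows) at real-valued (x, y).

    Coordinates outside the image are clamped to the border.
    """
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * img[y0][x0] + fx * img[y0][x1]
    bottom = (1 - fx) * img[y1][x0] + fx * img[y1][x1]
    return (1 - fy) * top + fy * bottom


def backward_warp(src, u, v):
    """Warp src with per-pixel displacement fields u, v (same size as the output).

    Assumed convention: output pixel (x, y) reads the source at
    (x + u[y][x], y + v[y][x]), so every output pixel is filled exactly once.
    """
    h, w = len(u), len(u[0])
    return [[bilinear_sample(src, x + u[y][x], y + v[y][x]) for x in range(w)]
            for y in range(h)]


src = [[0, 10, 20, 30]]
shift = [[0.5] * 4]   # read half a pixel to the right everywhere
zero = [[0.0] * 4]
print(backward_warp(src, shift, zero))  # [[5.0, 15.0, 25.0, 30.0]]
```

Iterating over destination pixels (backward mapping) rather than source pixels avoids the holes and overlaps that forward mapping produces; the last value stays 30.0 because the sample position is clamped to the image border.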

Feature-based Image Morphing
● T. Beier and S. Neely, Proc. SIGGRAPH 1992
● Distort color and shape ⇒ image warping + cross-dissolving
● The warping transformation is partially defined by the user interactively specifying corresponding pairs of vectors in the source and destination images; only a sparse set is required (but it must be carefully chosen)
● Compute dense pixel correspondences, defining a continuous mapping function, based on a weighted combination of the pixel’s displacement vectors from all of the input vectors
● Interpolate pixel positions and colors (2D linear interpolation)

Beier and Neely Algorithm
● Given: 2 images, A and B, and their corresponding sets of line segments, L_A and L_B, respectively
● Foreach intermediate frame time t ∈ [0, 1] do
  ◆ Linearly interpolate the position of each line: L_t[i] = Interpolate(L_A[i], L_B[i], t)
  ◆ Warp image A to destination shape: WA = Warp(A, L_A, L_t)
  ◆ Warp image B to destination shape: WB = Warp(B, L_B, L_t)
  ◆ Cross-dissolve by fraction t: MorphImage = CrossDissolve(WA, WB, t)

Line Feature-based Warping
● Goal: Define a continuous function that warps a source image to a destination image from a sparse set of corresponding, oriented line segment features; each pixel’s position is defined relative to these line segments
● Warping with one line pair: foreach pixel p_B in destination image B do
  ◆ Find dimensionless coordinates (u, v) relative to the oriented line segment q_B r_B
  ◆ Find p_A in source image A using (u, v) relative to q_A r_A
  ◆ Copy the color at p_A to p_B
(figure: source image A with segment q_A r_A and point p_A; destination image B with segment q_B r_B and point p_B; u is measured along each segment, v perpendicular to it)

Example: Translation
● Consider images where there is one line segment pair, and it is translated from image A to image B (figure: A, intermediate image M at t = 0.5, B)
● First, linearly interpolate the position of the line segment in M
● Second, for each pixel (x, y) in M, find the corresponding pixels A(x − a, y) and B(x + a, y), and average them, where a is the line’s displacement from A to M (half of its total translation from A to B)
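The single line-pair mapping can be sketched as follows, following the usual Beier and Neely formulation in which u is measured along the segment as a fraction of its length and v is the signed perpendicular distance in pixels. Lines are assumed to be `(q, r)` endpoint tuples, and the helper names are hypothetical; `interpolate_line` corresponds to the `Interpolate` step of the algorithm. The usage reproduces the translation example.

```python
import math


def perp(vx, vy):
    """Vector perpendicular to (vx, vy) with the same length (rotated 90 degrees)."""
    return (-vy, vx)


def line_coords(p, q, r):
    """Coordinates (u, v) of point p relative to the oriented segment q -> r.

    u is dimensionless (0 at q, 1 at r); v is the signed perpendicular
    distance from the line, in pixels.
    """
    dx, dy = r[0] - q[0], r[1] - q[1]
    length = math.hypot(dx, dy)
    px, py = p[0] - q[0], p[1] - q[1]
    nx, ny = perp(dx, dy)
    u = (px * dx + py * dy) / (length * length)
    v = (px * nx + py * ny) / length
    return u, v


def point_from_coords(u, v, q, r):
    """Inverse of line_coords: the point with coordinates (u, v) w.r.t. q -> r."""
    dx, dy = r[0] - q[0], r[1] - q[1]
    length = math.hypot(dx, dy)
    nx, ny = perp(dx, dy)
    return (q[0] + u * dx + v * nx / length, q[1] + u * dy + v * ny / length)


def map_single_line(p_b, line_b, line_a):
    """Source position p_A for destination pixel p_B, given one line pair."""
    u, v = line_coords(p_b, *line_b)
    return point_from_coords(u, v, *line_a)


def interpolate_line(line_a, line_b, t):
    """L_t = Interpolate(L_A, L_B, t): linearly interpolate both endpoints."""
    return tuple(
        tuple((1 - t) * ca + t * cb for ca, cb in zip(end_a, end_b))
        for end_a, end_b in zip(line_a, line_b)
    )


# Translation example: the line moves 4 px to the right from A to B.
line_a = ((0, 0), (0, 10))
line_b = ((4, 0), (4, 10))
line_m = interpolate_line(line_a, line_b, 0.5)    # ((2.0, 0.0), (2.0, 10.0))
print(map_single_line((5, 3), line_m, line_a))     # (3.0, 3.0): A(x - a, y) with a = 2
print(map_single_line((5, 3), line_m, line_b))     # (7.0, 3.0): B(x + a, y)
```

With one line pair the resulting warp is limited to rotation, translation, and a scale along the line direction, which is why the method combines several line pairs.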

Single Line-Pair Examples
(figure)

Feature-based Warping (cont.)
● Warping with multiple line pairs
  ◆ Use a weighted combination of the points defined by the same mapping
(figure: line pairs p_1 q_1, p_2 q_2 in one image and p´_1 q´_1, p´_2 q´_2 in the other; pixel X has coordinates (u_1, v_1) and (u_2, v_2), which give the candidate positions X´_1 and X´_2)
● X´ is X plus the weighted average of the displacements $D_i = X'_i - X$:
$$X' = X + \frac{\sum_i w_i D_i}{\sum_i w_i}, \qquad w_i = \left(\frac{\mathrm{length}(p_i q_i)^c}{a + |v_i|}\right)^b$$
for constants a, b, c

Resulting Warp
(figure)

2 Input Images with Line Correspondences
(figure)
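A sketch of the multiple line-pair mapping is below. It computes each candidate X´_i with the single-pair rule, then averages the displacements with the weights above. One detail follows Beier and Neely's paper rather than the slide: the distance in the weight is |v| only when the pixel projects between the endpoints (0 ≤ u ≤ 1), and the distance to the nearer endpoint otherwise. The default constants `a=0.5, b=1.0, c=0.5` are illustrative assumptions, as are the function names.

```python
import math


def perp(vx, vy):
    """Vector perpendicular to (vx, vy) with the same length."""
    return (-vy, vx)


def warp_point_multi(x, dest_lines, src_lines, a=0.5, b=1.0, c=0.5):
    """Map destination pixel x to a source position using several line pairs.

    dest_lines[i] = (q, r) is an oriented segment in the destination image and
    src_lines[i] the corresponding segment in the source image. Returns
    X + sum(w_i * D_i) / sum(w_i), with D_i = X'_i - X and
    w_i = (length_i ** c / (a + dist_i)) ** b.
    """
    sum_dx = sum_dy = weight_sum = 0.0
    for (q, r), (qs, rs) in zip(dest_lines, src_lines):
        dx, dy = r[0] - q[0], r[1] - q[1]
        length = math.hypot(dx, dy)
        px, py = x[0] - q[0], x[1] - q[1]
        nx, ny = perp(dx, dy)
        u = (px * dx + py * dy) / (length * length)  # fraction along the segment
        v = (px * nx + py * ny) / length             # signed distance from the line
        # Candidate source position X'_i predicted by this line pair alone.
        sdx, sdy = rs[0] - qs[0], rs[1] - qs[1]
        slen = math.hypot(sdx, sdy)
        snx, sny = perp(sdx, sdy)
        xi = qs[0] + u * sdx + v * snx / slen
        yi = qs[1] + u * sdy + v * sny / slen
        # Distance to the segment: |v| between the endpoints, else to the nearer endpoint.
        if u < 0:
            dist = math.hypot(px, py)
        elif u > 1:
            dist = math.hypot(x[0] - r[0], x[1] - r[1])
        else:
            dist = abs(v)
        w = (length ** c / (a + dist)) ** b
        sum_dx += w * (xi - x[0])
        sum_dy += w * (yi - x[1])
        weight_sum += w
    return (x[0] + sum_dx / weight_sum, x[1] + sum_dy / weight_sum)


dest = [((0, 0), (0, 10)), ((10, 0), (10, 10))]
src = [((3, 0), (3, 10)), ((13, 0), (13, 10))]   # both lines shifted right by 3
result = warp_point_multi((5, 5), dest, src)
print(tuple(round(coord, 6) for coord in result))  # (8.0, 5.0)
```

Roughly, a small `a` makes pixels near a line follow it closely, `b` controls how quickly a line's influence falls off with distance, and `c` controls how much longer lines dominate shorter ones.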

Images Warped to Same “Shape”
(figure)

Warped Shapes without Grid Lines
(figure)

Cross-Dissolved Result
(figure)

Sparse Warp Specification
● How can we specify the warp?
  ◆ Specify corresponding points
  ◆ Interpolate to a complete warping function
● How do we go from feature points to pixels?

Point Feature Morphing: Triangular Mesh
1. Input correspondences at landmark/fiducial feature points
2. Define a triangular mesh over the points
  ◆ Same mesh in both images
  ◆ Now we have triangle-to-triangle correspondences
3. Warp each triangle separately from source to destination
  ◆ How do we warp a triangle?
  ◆ 3 points = affine warp
  ◆ Just like texture mapping

Morphing between Two Image Sequences
● Goal: Given two animated sequences of images, create a morph sequence
● The user defines corresponding line segments in pairs of key frames in the two sequences
● At frame i, compute the two sets of line segments by interpolating between the line sets of the nearest bracketing key frames
● Apply the 2-image morph algorithm for t = 0.5 only to obtain morph frame i

Geometrically-Correct Pixel Reprojection
● What geometric information is needed to generate optically correct virtual camera views?
  ◆ Dense pixel correspondences between two input views
  ◆ Known geometric relationship between the two cameras
  ◆ Epipolar geometry
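Warping one triangle with the affine map fixed by its 3 vertices can be done with barycentric coordinates: express a destination pixel as a weighted combination of the destination triangle's vertices, then apply the same weights to the source triangle's vertices. This is a minimal sketch with hypothetical names; in a full morph the destination mesh would be the intermediate mesh at time t, each pixel would be assigned to the triangle that contains it, and the source would then be sampled at the mapped position.

```python
def barycentric(p, tri):
    """Barycentric coordinates (l1, l2, l3) of point p w.r.t. triangle tri = (A, B, C)."""
    (ax, ay), (bx, by), (cx, cy) = tri
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0:
        raise ValueError("degenerate triangle")
    l1 = ((by - cy) * (p[0] - cx) + (cx - bx) * (p[1] - cy)) / det
    l2 = ((cy - ay) * (p[0] - cx) + (ax - cx) * (p[1] - cy)) / det
    return l1, l2, 1.0 - l1 - l2


def inside(p, tri, eps=1e-9):
    """True if p lies inside the triangle or on its boundary."""
    return all(lam >= -eps for lam in barycentric(p, tri))


def map_triangle_point(p, dst_tri, src_tri):
    """Apply the affine map sending dst_tri's vertices onto src_tri's vertices to p.

    Reusing p's barycentric weights with the source vertices is exactly the
    unique affine warp determined by the 3 vertex correspondences.
    """
    l1, l2, l3 = barycentric(p, dst_tri)
    return tuple(l1 * a + l2 * b + l3 * c for a, b, c in zip(*src_tri))


dst = ((0, 0), (1, 0), (0, 1))
src = ((10, 10), (12, 10), (10, 14))
print(inside((0.5, 0.25), dst), map_triangle_point((0.5, 0.25), dst, src))
# True (11.0, 11.0)
```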

View Morphing
● Seitz and Dyer, Proc. SIGGRAPH 1996
● Given: Two views of an unknown rigid scene, with no camera information known, compute new views from a virtual camera at viewpoints in between the two input views
(figure: two photographs, a virtual camera between them, and the morphed view)

1. Prewarp: align views
2. Morph: move camera
3. Postwarp: point camera

Application: Pose Correction
● Image Postprocessing
  ◆ Alter image perspective in the lab
● Image Databases
  ◆ Normalize images for better indexing
  ◆ Simplify face recognition tasks

(figure: original photographs and the corresponding frontal poses)

Another Example
(figure)

A Morphable Model for the Synthesis of 3D Faces
● V. Blanz and T. Vetter, Proc. SIGGRAPH 1999
(figure: database → morphable face model → face modeler/analyzer; input image → 3D head → result)

Approach: Example-based Modeling of Faces
● 2D image → 3D face models
● Linear combination of exemplar face models: face = w_1 * (exemplar 1) + w_2 * (exemplar 2) + w_3 * (exemplar 3) + w_4 * (exemplar 4) + …
● All exemplar faces are in full correspondence

Face Representation using Cylindrical Coordinates
● 200 exemplar faces, 70,000 points per image
● Shape S: radius(h, φ)
● Texture T: red(h, φ), green(h, φ), blue(h, φ)

Morphing 3D Faces
● ½ · (face 1) + ½ · (face 2) = new face (figure: shown as a 3D blend and as a 3D morph)

Vector Space of 3D Faces
● A Morphable Model can generate new faces:
  ◆ Shape: $a_1 S_1 + a_2 S_2 + a_3 S_3 + a_4 S_4 + \dots$
  ◆ Texture: $b_1 T_1 + b_2 T_2 + b_3 T_3 + b_4 T_4 + \dots$
● Dense point correspondences across all exemplar faces

Modeling the Appearance of Faces
● A face is represented as a point in a “face space”
● Which directions code for specific attributes?

Modeling in Face Space
(figure: caricature, original, average)
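Treating faces as points in a vector space makes blending and caricatures simple arithmetic, provided the exemplars are in full correspondence. In the sketch below, each face is a flat list of numbers (e.g. all vertex coordinates). The weights are required to sum to 1, the same affine-combination rule as for averaging points. The caricature rule, average + k · (face − average), is one simple way to move along the direction from the average through a face and is assumed here for illustration; the helper names and toy data are hypothetical.

```python
def combine(exemplars, weights, tol=1e-9):
    """Weighted sum of exemplar vectors that are in full point-to-point correspondence.

    Each exemplar is a flat list (e.g. x, y, z of every vertex, or r, g, b of
    every texel). The weights must sum to 1, so the result is an affine
    combination, like aP + (1 - a)Q for two points.
    """
    if abs(sum(weights) - 1.0) > tol:
        raise ValueError("weights must sum to 1")
    n = len(exemplars[0])
    if any(len(e) != n for e in exemplars):
        raise ValueError("exemplars must be in correspondence (same length)")
    return [sum(w * e[k] for w, e in zip(weights, exemplars)) for k in range(n)]


def caricature(face, average, k):
    """Point on the line from the average face through `face`: avg + k * (face - avg).

    k = 0 gives the average, k = 1 the original, and k > 1 exaggerates the
    face's differences from the average (extrapolation).
    """
    return [m + k * (f - m) for f, m in zip(face, average)]


face1 = [0.0, 0.0, 1.0, 0.0]   # toy "shapes": two 2D vertices each
face2 = [0.0, 0.0, 3.0, 0.0]
avg = combine([face1, face2], [0.5, 0.5])
print(avg)                          # [0.0, 0.0, 2.0, 0.0]
print(caricature(face2, avg, 2.0))  # [0.0, 0.0, 4.0, 0.0]
```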

Matching a Morphable 3D Face Model
● Find the model face whose rendering R matches the input image:
$$R\left(a_1 S_1 + a_2 S_2 + a_3 S_3 + a_4 S_4 + \dots,\; b_1 T_1 + b_2 T_2 + b_3 T_3 + b_4 T_4 + \dots,\; \rho\right) \approx \text{input image}$$
● Optimization problem over the range of values of $a_i$ and $b_i$ delimited by the training faces, plus rendering parameters ρ, such as camera pose and illumination

3D Shape from Images
(figure: input image → face analyzer → 3D head)

More Fun with Faces
(figure)

