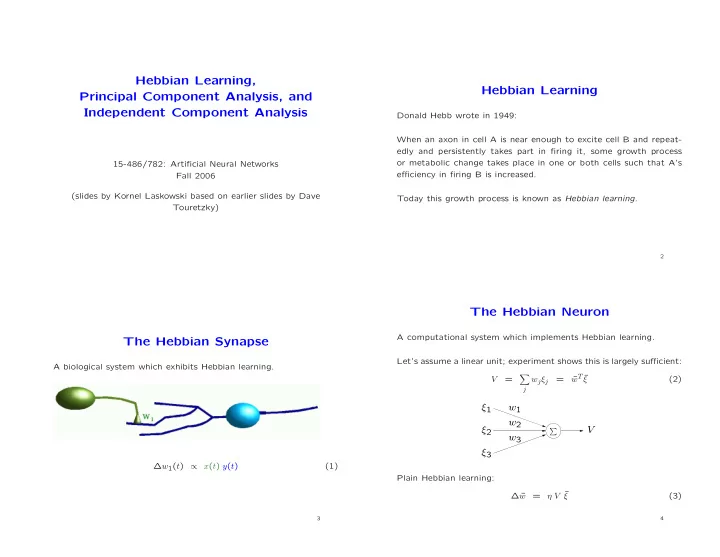

Hebbian Learning, Hebbian Learning Principal Component Analysis, and Independent Component Analysis Donald Hebb wrote in 1949: When an axon in cell A is near enough to excite cell B and repeat- edly and persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A’s 15-486/782: Artificial Neural Networks e ffi ciency in firing B is increased. Fall 2006 (slides by Kornel Laskowski based on earlier slides by Dave Today this growth process is known as Hebbian learning . Touretzky) 2 The Hebbian Neuron A computational system which implements Hebbian learning. The Hebbian Synapse Let’s assume a linear unit; experiment shows this is largely su ffi cient: A biological system which exhibits Hebbian learning. � w T ¯ V = w j ξ j = ¯ ξ (2) j ξ 1 w 1 w 2 ξ 2 � V w 3 ξ 3 ∆ w 1 ( t ) ∝ x ( t ) y ( t ) (1) Plain Hebbian learning: η V ¯ ∆ ¯ = (3) w ξ 3 4

Hebbian Learning, Non-zero-mean Data Plain Hebbian Learning, Zero-mean Data w T ¯ w = η V ¯ Recall that ∆ ¯ ξ and that V = ¯ ξ . w T ¯ w = η V ¯ Recall that ∆ ¯ ξ and that V = ¯ ξ . But note that if the initial ¯ w points away from the cluster, the dot product with ¯ ξ will be negative, and ¯ w will ultimately point in exactly In this case, the weight vector will ultimately align itself with the the opposite direction. direction of greatest variance in the data. Interpretation awkward ... 5 6 The Direction of Greatest Variance Locality of Plain Hebbian Learning Looking at one synapse at a time (from input unit j ) ∆ w j = η V ξ j (4) This is a local update rule. The Hebbian synapse, characterized by a weight w j , is modified as If the data has zero mean, Hebbian learning will adjust the weight a function of the activity of only the two units it connects. vector ¯ w so as to maximize the variance in the output V . By contrast, backprop is non-local . The update rule involves the When projected onto this direction, the data { ¯ ξ } will exhibit variance backpropagation of a distant error signal, computed (potentially greater than in any other direction. many) layers above it. This direction is also known as the largest principal component of This makes Hebbian learning a likely candidate for biological systems. the data. 7 8

w = η V ¯ Hebbian vs Competitive Learning A Consequence of ∆ ¯ ξ Plain Competitive Learning Plain Hebbian Learning Let’s look at the update rule Eq 3 given our expression for V in Eq 2: Similarities η V ¯ Unsupervised training methods (no teacher, no error signal). ∆ ¯ = w ξ � � w T ¯ Frequently used with online learning. ¯ = ¯ (inner product) η ξ ξ Strong connection to biological systems. � ξ T � ξ ¯ ¯ ≡ ¯ (outer product) (5) η w w T ¯ Linear units: V = ¯ ξ Given a current value of the weight vector ¯ w , the weight update ∆ ¯ w will be a function of the outer product of the input pattern with Di ff erences itself. Exactly one output must be No constraint imposed by active. neighboring units. Note that learning is incremental; that is, a weight update is per- Only winner’s weights updated All weights updated at every formed each time the neuron is exposed to a training pattern ¯ ξ . at every epoch. epoch. We can compute an expectation for ∆ ¯ w if we take into account the w = η ¯ w = η V ¯ ∆ ¯ ∆ ¯ ξ ξ distribution over patterns, P (¯ ξ ). 9 10 � ¯ � � ¯ � w T ¯ ξ ¯ ξ T An Aside: ¯ ξ = ¯ ξ w The Correlation Matrix Taking the expectation of Eq 5 under the input distribution P (¯ ξ 1 ξ ): ξ � ¯ ξ 2 � w T ¯ ¯ = ( w 1 ξ 1 + w 2 ξ 2 + · · · + w N ξ N ) ξ . � ξ T � . . ξ ¯ ¯ � ∆ ¯ w � = η w ¯ ξ N ≡ η C ¯ (6) w w 1 ξ 2 + w 2 ξ 1 ξ 2 + · · · + w N ξ 1 ξ N 1 w 2 ξ 2 where we have defined the correlation matrix as w 1 ξ 2 ξ 1 + + · · · + w N ξ 2 ξ N 2 = . . . � ξ T � ξ ¯ ¯ w N ξ 2 + + · · · + C ≡ (7) w 1 ξ N ξ 1 w 2 ξ N ξ 2 N ξ 2 ξ 1 ξ 2 · · · ξ 1 ξ N w 1 1 � ξ 2 ξ 2 ξ 2 ξ 1 · · · ξ 2 ξ N w 2 i � � ξ 1 ξ 2 � · · · � ξ 1 ξ N � 2 = . . ... . . . . . . . . . . � ξ 2 � ξ 2 ξ 1 � 2 � · · · � ξ 2 ξ N � = ξ 2 · · · ξ N ξ 1 ξ N ξ 2 w N . . ... . . . . N . . . � ¯ ξ T � � ξ 2 ξ ¯ � ξ N ξ 1 � � ξ N ξ 2 � · · · N � = w ¯ � � � � � � � ξ T � w T ¯ w T ¯ ξ T ¯ ξ T ¯ ξ = ¯ ¯ = ¯ ¯ = ¯ ξ ¯ ¯ ξ ¯ and N is the number of inputs to our Hebbian neuron. Or just ¯ ¯ w = w . ¯ ξ ξ ξ ξ w 11 12

The Stability of Hebbian Learning More on the Correlation Matrix Recalling Eq 6, � ∆ ¯ w � = η C ¯ w Similar to the covariance matrix �� � T � From a mathematical perspective, this is a discretized version of a � � ¯ ¯ = ξ − ¯ ξ − ¯ (8) Σ µ µ linear system of coupled first-order di ff erential equations, d ¯ C is the second moment of the input distribution about the origin w = C ¯ w (9) ( Σ is the second moment of the input distribution about the mean) dt whose natural solutions are µ = ¯ If ¯ 0, then C = Σ . e λ t ¯ w ( t ) ¯ = u (10) Like Σ , C is symmetric. Symmetry implies that all eigenvalues are where λ is a scalar and ¯ u is a vector independent of time. If we real and all eigenvectors are orthogonal. wanted to solve this system of equations, we’d still need to solve for both λ and ¯ u . Additionally, because C is an outer product, it’s positive-definite. There turn out to be multiple pairs { λ , ¯ u } ; then ¯ w ( t ) is a linear This means that all eigenvalues are not just real, they’re also all combination of terms like the one in Eq 10. non-negative. Note that if any λ i > 0, ¯ w ( t ) blows up. 13 14 Hebbian Learning Blows Up Plain Hebbian Learning: Conclusions To see that Eq 10 represents solutions to Eq 9, We’ve shown that for zero-mean data, the weight vector aligns itself with the direction of maximum variance as training continues. d ¯ w C ¯ w = dt This direction corresponds to the eigenvector of the correlation ma- d � � � � e λ t ¯ e λ t ¯ = C u u trix C with the largest corresponging eigenvalue. If we decompose dt w = � λ e λ t ¯ the current ¯ w in terms of the eigenvectors of C , ¯ i α i ¯ u i , then = u the expected weight update rule ⇒ C ¯ u = λ ¯ u (11) � ∆ ¯ w � = η C ¯ w � = α i ¯ η C u i So the λ i ’s are the eigenvalues of the correlation matrix C ! i � = α i λ i ¯ (12) η u i 1. C is an outer product ⇒ all λ ≥ 0. i will move the weight vector ¯ w towards eigenvector ¯ u i by a factor 2. From Eq 10, if any λ > 0 then ¯ w → ∞ . proportional to λ i . Over many updates, the eigenvector with the largest λ i will drown out the contributions from the others. 3. The λ i ’s cannot all be zero (true only for the zero matrix). So plain Hebbian learning blows up. But because of this mechanism, the magnitude of ¯ w blows up. 15 16

Stabilizing Hebbian Learning Oja’s Rule How to keep ¯ w from blowing up? An alternative, suggested by Oja, is to add weight decay . We could renormalize ¯ w : The weight decay term is proportional to V 2 (recall V = ¯ w T ¯ w ( τ +1) w ( τ ) + ∆ ¯ w ( τ ) ξ is the ¯ = ¯ (13) ∗ (output) activation of our Hebbian neuron). w ( τ +1) ¯ � � w ( τ +1) ∗ ¯ ¯ = (14) ∆ ¯ w = η V ξ − V ¯ (15) w w ( τ +1) � ¯ � ∗ w approaches unit length, without any additional explicit e ff ort. ¯ This ensures that ¯ w always has unit length. But it retains the property that it points in the direction of maximum But it obliges us to give up our claim that learning is local — every variance in the data (the largest principal component). synapse ( w i ) must know what every other synapse of the unit is doing. 17 18 Sejnowski’s Hebbian Covariance Rule Principal Component Analysis (PCA) � � A tool from statistics for data analysis. ξ − � ¯ ¯ ∆ ¯ = η ( V − � V � ) ξ � (16) w Can reveal structure in high- N -dimensional data that is not otherwise Then under the input distribution P (¯ ξ ), obvious. � � �� ξ − � ¯ ¯ � ∆ ¯ w � = η ( V − � V � ) ξ � η � V ¯ ξ − V � ¯ ξ � − ¯ ξ � V � + � V �� ¯ Like Hebbian learning, it discovers the direction of maximum vari- = ξ �� � � ance in the data. But then in the ( N − 1)-dimensional subspace � V ¯ ξ � − � V �� ¯ ξ � − � V �� ¯ ξ � + � V �� ¯ = ξ � η perpendicular to that direction, it discovers the direction of maxi- � � � V ¯ ξ � − � V �� ¯ = η ξ � mum remaining variance, and so on for all N . � � � � � � w T ¯ w T ¯ ¯ �� ¯ = � ¯ ξ � − � ¯ ξ � η ξ ξ � � � ¯ ξ ¯ ξ T � − � ¯ ξ �� ¯ ξ T � The result is an ordered sequence of principal components. These = η w ¯ (17) are equivalently the eigenvectors of the correlation matrix C for zero- mean data, ordered by magnitude of eigenvalue in descending order. Subtracting the mean allows ∆ ¯ w to be negative, reducing the weight They are mutually orthogonal. vector. 19 20

Recommend

More recommend