Global Illumination II Shih-Chin Weng shihchin.weng@gmail.com

Case Study: PantaRay from NVIDIA & Weta

Scenario of PantaRay

Case Study: PantaRay
• Splitting into chunks (green)
• Bucketing
• van Emde Boas layout, etc.
• Converting to bricks
• BVH construction
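The van Emde Boas layout named above orders tree nodes in memory recursively: cut the tree at half its height, store the top subtree first, then each bottom subtree, each laid out the same way, so nearby nodes share cache lines at every scale. Below is a minimal sketch for a complete binary tree in heap numbering (root 1, children of node k at 2k and 2k+1). The function `veb_order` is hypothetical, and this is a generic illustration of the layout, not PantaRay's actual code.

```python
def veb_order(height: int) -> list[int]:
    """Return the heap indices of a complete binary tree with `height` levels,
    listed in van Emde Boas order (illustrative only, not PantaRay's code)."""

    def layout(root: int, h: int) -> list[int]:
        if h == 1:
            return [root]
        top_h = h // 2            # height of the top subtree
        bottom_h = h - top_h      # height of every bottom subtree
        order = layout(root, top_h)
        # Bottom subtrees are rooted top_h levels below `root`; in heap
        # numbering those roots are (root << top_h) + 0 .. 2^top_h - 1.
        first_bottom_root = root << top_h
        for offset in range(1 << top_h):
            order += layout(first_bottom_root + offset, bottom_h)
        return order

    return layout(1, height)
```

For four levels the result is [1, 2, 3, 4, 8, 9, 5, 10, 11, 6, 12, 13, 7, 14, 15]: every three-node subtree occupies consecutive slots, which is what makes the layout cache-friendly without knowing the cache-line size.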

Case Study: Hyperion from Disney Animation

Concerns of Production Renderers
• Computation bound or I/O bound?
• Challenges
  – Massive geometry data sets
    • Buildings, forests, hair, fur, etc.
  – Large numbers of high-resolution textures
• Goals
  – Reduce I/O costs
  – Improve memory access patterns

The Beauty of San Fransokyo [Video courtesy of Disney Animation.]

City View of San Fransokyo [Video courtesy of Disney Animation.]

Introduction to Hyperion in Big Hero 6
• Features
  – Unidirectional path tracer (without intermediate caches)
  – Physically based rendering
  – Supports volumetric rendering, mesh lights, etc.
• Data complexity
  – 83,000 buildings
  – 216,000 street lights

Core Ideas to Ensure Coherence
• Sort potentially out-of-core ray batches to extract coherent ray groups from a complex scene
  – 30 to 60M rays per batch
  – Traverse the scene one ray batch at a time
• Sort ray hits for deferred shading by shading context (mesh ID + face ID)
  – Hit points are grouped by mesh ID, then sorted by face ID
• Achieve sequential texture reads with Ptex
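The hit-sorting step can be sketched as a plain sort on the shading context. The `Hit` record and its fields are hypothetical stand-ins, not Hyperion's data structures; the sketch only shows how ordering hits by (mesh ID, face ID) makes consecutive shading work touch the same mesh and the same per-face texture data.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass(frozen=True)
class Hit:
    """Hypothetical hit record (not Hyperion's actual data structure)."""
    ray_id: int
    mesh_id: int
    face_id: int


def sort_hits_for_shading(hits: list[Hit]) -> list[tuple[int, list[Hit]]]:
    """Group hits by mesh ID, each group sorted by face ID.

    Returns (mesh_id, hits) pairs so each mesh is shaded as one batch and its
    faces (and their per-face textures) are visited in ascending order."""
    # Python's sort is stable, so hits on the same face keep their ray order.
    ordered = sorted(hits, key=lambda h: (h.mesh_id, h.face_id))
    return [(mesh_id, list(group))
            for mesh_id, group in groupby(ordered, key=lambda h: h.mesh_id)]


hits = [Hit(0, 7, 42), Hit(1, 3, 5), Hit(2, 7, 1), Hit(3, 3, 5), Hit(4, 3, 2)]
batches = sort_hits_for_shading(hits)
# mesh 3 -> rays 4, 1, 3 (faces 2, 5, 5); mesh 7 -> rays 2, 0 (faces 1, 42)
```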

Tracing Pipeline [Eisenacher et al., EGSR’13]

Rendering Equation

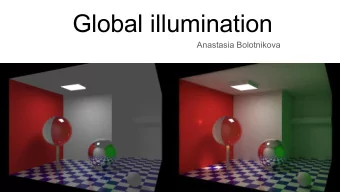

Where Does Light Come From? (figure labels: direct, indirect)

Global = Direct + Indirect Lighting (figure panels: Direct Illumination vs. Global Illumination; annotation: color bleeding)

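Rendering Equation
$$L(x,\omega_o) = L_e(x,\omega_o) + \int_{\Omega} L(x,\omega_i)\, f(\omega_i,\omega_o)\, (\omega_i \cdot n)\, d\omega_i$$
The radiance $L$ is unknown!! It appears on both sides of the equation.
How do we solve this kind of equation??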

Quadrature
• 1D example
  – Rectangle/trapezoid rule
  – Gaussian quadrature
• Curse of dimensionality
  – The rendering equation is infinite-dimensional (light paths can have any number of bounces)!!
  – That’s why we need Monte Carlo
https://en.wikipedia.org/wiki/Numerical_integration
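A minimal sketch of why grid quadrature breaks down: the composite trapezoid rule is cheap and accurate in 1D, but a tensor-product grid with n points per axis needs n^d integrand evaluations in d dimensions. The function names and the 10-dimensional example are illustrative choices.

```python
import math


def trapezoid(f, a: float, b: float, n: int) -> float:
    """Composite trapezoid rule with n equal intervals on [a, b]."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for k in range(1, n):
        total += f(a + k * h)
    return total * h


def grid_evaluations(points_per_axis: int, dims: int) -> int:
    """Integrand evaluations needed by a tensor-product grid in `dims` dimensions."""
    return points_per_axis ** dims


# In 1D this is cheap and accurate: the trapezoid error shrinks as O(1/n^2).
approx = trapezoid(math.sin, 0.0, math.pi, 100)   # exact value is 2

# The same 100 points per axis over a 10-dimensional domain (e.g. a few
# bounces' worth of 2D directions) already needs 10^20 evaluations.
cost_10d = grid_evaluations(100, 10)
```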

Monte Carlo Integration

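Probability Review
• Random variable $X: S \to \mathbb{R}$ maps the sample space $S$ to real numbers. It’s a function, NOT a variable!!
  – The probability set function $P$ assigns a probability to each event $E \subseteq S$
• Expected value of a random variable
$$E_p[f(x)] = \int f(x)\, p(x)\, dx$$
• Variance
$$V[f(x)] = E\left[\left(f(x) - E[f(x)]\right)^2\right] = E\left[f(x)^2\right] - E[f(x)]^2$$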

Concepts
• Use random numbers to approximate integrals
• It only estimates the values of integrals
  – i.e., it gives the right answer on average
• It only requires the ability to evaluate the integrand at arbitrary points
  – A nice property for multi-dimensional integrands, such as the radiance in the rendering equation
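A minimal sketch of plain Monte Carlo integration over the unit hypercube [0,1]^d: draw uniform points, evaluate the integrand, and average. The loop never needs anything from the integrand except its value at a point, and it looks the same for any d. The test integrand x_1 + ... + x_d is an arbitrary choice whose exact integral is d/2.

```python
import random


def mc_integrate_unit_cube(f, dims: int, n: int, rng: random.Random) -> float:
    """Estimate the integral of f over [0,1]^dims with n uniform samples.

    The cube has volume 1, so the uniform PDF is 1 and the estimate is just
    the average of f at the sample points."""
    total = 0.0
    for _ in range(n):
        x = [rng.random() for _ in range(dims)]
        total += f(x)
    return total / n


rng = random.Random(1234)
# Integral of (x_1 + ... + x_d) over [0,1]^d is d/2; with d = 10 that is 5.0.
estimate = mc_integrate_unit_cube(sum, dims=10, n=20_000, rng=rng)
```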

Monte Carlo Sampling
✓ Easy to implement
✓ Efficient for high-dimensional integrals
✗ Noise (variance)
✗ Slow convergence: the error decreases as $O(1/\sqrt{N})$
  – But we don’t have many other choices in high-dimensional spaces!
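The $O(1/\sqrt{N})$ rate can be checked empirically: quadrupling the sample count should roughly halve the RMS error. This sketch repeats the estimate of ∫₀¹ x² dx = 1/3 many times at two sample counts; the trial count and seed are arbitrary.

```python
import math
import random


def rms_error(n: int, trials: int, rng: random.Random) -> float:
    """RMS error of the n-sample Monte Carlo estimate of the integral of x^2 on [0, 1]."""
    exact = 1.0 / 3.0
    sq_err = 0.0
    for _ in range(trials):
        est = sum(rng.random() ** 2 for _ in range(n)) / n
        sq_err += (est - exact) ** 2
    return math.sqrt(sq_err / trials)


rng = random.Random(7)
err_n = rms_error(100, trials=2000, rng=rng)
err_4n = rms_error(400, trials=2000, rng=rng)
ratio = err_n / err_4n   # should be close to sqrt(4) = 2
```

The theoretical RMS error is σ/√N with σ² = Var[x²] = 1/5 − 1/9 = 4/45, about 0.030 for N = 100.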

Estimate π with Monte Carlo Sampling (figure: circle of radius r inside a square of side 2r)
$$P(\text{PointInCircle}) = \frac{\pi r^2}{4r^2} \;\Rightarrow\; \pi = 4P$$
https://en.wikipedia.org/wiki/Monte_Carlo_method#/media/File:Pi_30K.gif
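A direct sketch of this estimate: throw uniform points into the square [−1, 1]² (so r = 1), measure the fraction P that lands inside the circle, and return 4P. The sample count and seed are arbitrary.

```python
import random


def estimate_pi(n: int, rng: random.Random) -> float:
    """Estimate pi from the fraction of uniform points in [-1, 1]^2 that fall
    inside the unit circle: P(PointInCircle) = pi r^2 / (2r)^2 = pi / 4."""
    inside = 0
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / n


pi_hat = estimate_pi(100_000, random.Random(2024))
```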

Probability Density Function (PDF)
$$\Pr(x \in [a,b]) = \int_a^b p(x)\, dx$$
The relative probability of a random variable taking on a particular value
• $p(x) = \dfrac{dP(x)}{dx} \ge 0$, where $P(x)$ is the CDF
• $\int_{-\infty}^{\infty} p(x)\, dx = 1$, i.e., $\Pr(x \in \mathbb{R}) = 1$

Cumulative Distribution Function (CDF)
$$P(x) = \Pr(X \le x)$$
Figure from “Global Illumination Compendium”, Philip Dutré
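Because a CDF is monotonic, it can be inverted to turn uniform random numbers into samples with density p(x). This inverse-transform method is a standard technique not named on the slide; it is included here as one way to draw the samples the later estimators need. The sketch assumes p(x) = 2x on [0, 1], whose CDF is P(x) = x², so P⁻¹(u) = √u.

```python
import math
import random


def sample_linear_pdf(rng: random.Random) -> float:
    """Draw x with PDF p(x) = 2x on [0, 1] by inverting the CDF P(x) = x^2."""
    u = rng.random()          # uniform in [0, 1)
    return math.sqrt(u)       # x = P^{-1}(u)


rng = random.Random(99)
samples = [sample_linear_pdf(rng) for _ in range(100_000)]
# Empirical CDF at x = 0.5 should approach P(0.5) = 0.25.
frac_below_half = sum(s <= 0.5 for s in samples) / len(samples)
# Empirical mean should approach E[x] = integral of x * 2x dx = 2/3.
mean = sum(samples) / len(samples)
```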

Properties of Estimators
• Suppose $Q$ is the unknown quantity
• Unbiased: $E[F_N] = Q$
  – Bias: $\beta[F_N] = E[F_N] - Q$
  – The expected value is independent of the sample size $N$
• Consistent
  – $\lim_{N\to\infty} \beta[F_N] = 0$
  – $\lim_{N\to\infty} E[F_N] = Q$
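A small worked example of the two properties, using exact expectations instead of random runs. For N i.i.d. samples with mean Q, the sample mean F_N = (1/N) Σ x_i is unbiased. A hypothetical estimator G_N = (1/(N+1)) Σ x_i has E[G_N] = N Q/(N+1), so its bias −Q/(N+1) depends on N but vanishes as N → ∞: biased, yet consistent in the sense above.

```python
from fractions import Fraction


def expected_sample_mean(q: Fraction, n: int) -> Fraction:
    """E[(1/N) * sum of N samples] = (1/N) * N * q by linearity of expectation."""
    return Fraction(1, n) * (n * q)


def expected_shrunk_mean(q: Fraction, n: int) -> Fraction:
    """E[(1/(N+1)) * sum of N samples] = N * q / (N + 1) (hypothetical biased estimator)."""
    return Fraction(1, n + 1) * (n * q)


Q = Fraction(1, 3)        # the "unknown" quantity, fixed here for the example
sizes = (1, 10, 1000)
bias_unbiased = [expected_sample_mean(Q, n) - Q for n in sizes]
bias_shrunk = [expected_shrunk_mean(Q, n) - Q for n in sizes]
# bias_shrunk == [-1/6, -1/33, -1/3003]: nonzero for every N, but -> 0 as N grows
```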

Law of Large Numbers
$$\Pr\left(E[x] = \lim_{N\to\infty} \frac{1}{N}\sum_{i=1}^{N} x_i\right) = 1$$
$$E[f(x)] \approx \frac{1}{N}\sum_{i=1}^{N} f(x_i)$$

Insufficient Samples = High Variance = Noise [Rendered with pbrt-v3]

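Monte Carlo Estimation
$$\int f(x)\, dx = \int \frac{f(x)}{p(x)}\, p(x)\, dx = E\left[\frac{f(x)}{p(x)}\right] \approx \underbrace{\frac{1}{N}\sum_{i=1}^{N}\frac{f(x_i)}{p(x_i)}}_{\text{estimator}}$$
$$\int_{\Omega} L_i(x,\omega_i)\, f(\omega_i,\omega_o)\, (\omega_i \cdot n)\, d\omega_i \approx \frac{1}{N}\sum_{i=1}^{N}\frac{L_i(x,\omega_i)\, f(\omega_i,\omega_o)\, (\omega_i \cdot n)}{p(\omega_i)}$$
Tricky Part!!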
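The reflection estimator can be sketched under simplifying assumptions chosen so the answer is known: constant incident radiance L_i = 1 from every direction (no visibility), a Lambertian BRDF f = ρ/π, and directions drawn uniformly over the hemisphere with p(ω_i) = 1/(2π). The exact reflected radiance is then ρ. Function names and the albedo value are illustrative.

```python
import math
import random


def sample_uniform_hemisphere(rng: random.Random):
    """Uniform direction on the hemisphere around n = (0, 0, 1); pdf = 1 / (2*pi)."""
    z = rng.random()                      # cos(theta) is uniform in [0, 1)
    r = math.sqrt(max(0.0, 1.0 - z * z))
    phi = 2.0 * math.pi * rng.random()
    return (r * math.cos(phi), r * math.sin(phi), z), 1.0 / (2.0 * math.pi)


def estimate_reflected_radiance(albedo: float, n: int, rng: random.Random) -> float:
    """(1/N) * sum of L_i * f * (w_i . n) / p(w_i), assuming L_i = 1 and f = albedo / pi."""
    incident = 1.0
    brdf = albedo / math.pi
    total = 0.0
    for _ in range(n):
        w, pdf = sample_uniform_hemisphere(rng)
        cos_theta = w[2]                  # w . n with n = (0, 0, 1)
        total += incident * brdf * cos_theta / pdf
    return total / n


reflected_radiance = estimate_reflected_radiance(albedo=0.8, n=50_000, rng=random.Random(3))
```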

Light Transport Algorithms

Light Transport Algorithms
• Path tracing
• Light tracing
• Bidirectional path tracing
• Photon mapping
• And many more…

Path Tracing

Light Tracing

Bidirectional Path Tracing

Photon Tracing

http://iliyan.com/publications/VertexMerging/comparison/ (comparison images: Bidirectional Path Tracing, VCM (vertex connection and merging), Path Tracing, Progressive Photon Mapping)

Importance Sampling

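Direct Illumination
$$L_r(x,\omega_o) = \int_{\Omega} L_i(x,\omega_i)\, f(\omega_i,\omega_o)\, (\omega_i \cdot n)\, d\omega_i \approx \frac{1}{N}\sum_{i=1}^{N}\frac{L_i(x,\omega_i)\, f(\omega_i,\omega_o)\, (\omega_i \cdot n)}{p(\omega_i)}$$
1. $p(\omega_i) \propto L_i(x,\omega_i)\, f(\omega_i,\omega_o)\, (\omega_i \cdot n)$?
2. $p(\omega_i) \propto L_i(x,\omega_i)$?
3. $p(\omega_i) \propto f(\omega_i,\omega_o)\, (\omega_i \cdot n)$?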
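Option 3 is the easiest to realize for a Lambertian BRDF: f is constant, so p(ω_i) ∝ (ω_i · n) gives p = cos θ/π. The sketch below draws such directions with Malley's method (sample the unit disk uniformly, project up), a standard technique swapped in here for illustration. Assuming a furnace-style setup (L_i = 1 everywhere, f = ρ/π), every sample contributes exactly ρ, so the estimator has zero variance; with non-constant L_i that is no longer true, which is why options 1 and 2 matter.

```python
import math
import random


def sample_cosine_hemisphere(rng: random.Random):
    """Cosine-weighted direction around n = (0, 0, 1) via Malley's method;
    returns (direction, pdf) with pdf = cos(theta) / pi."""
    r = math.sqrt(rng.random())
    phi = 2.0 * math.pi * rng.random()
    x, y = r * math.cos(phi), r * math.sin(phi)
    z = math.sqrt(max(0.0, 1.0 - x * x - y * y))
    return (x, y, z), z / math.pi


def lambertian_terms(albedo: float, radiance: float, n: int,
                     rng: random.Random) -> list[float]:
    """Per-sample terms L_i * f * cos(theta) / p, assuming constant L_i and f = albedo / pi."""
    brdf = albedo / math.pi
    terms = []
    for _ in range(n):
        w, pdf = sample_cosine_hemisphere(rng)
        if pdf > 0.0:                     # skip the measure-zero grazing case z == 0
            terms.append(radiance * brdf * w[2] / pdf)
    return terms


terms = lambertian_terms(albedo=0.8, radiance=1.0, n=1000, rng=random.Random(5))
estimate = sum(terms) / len(terms)
```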

Uniform Sampling

Importance Sampling

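Importance Sampling (Cont’d)
$$F_N = \frac{1}{N}\sum_{i=1}^{N}\frac{f(X_i)}{p(X_i)}, \quad \text{where } p(X_i) \propto f(X_i)$$
Unknown!! The ideal density $p = f / \int f$ requires the very integral we are trying to compute. [Premože’10]
A bad choice of density function can increase the variance (even to infinity)!!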
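A 1D illustration of both points, using the arbitrary test integral ∫₀¹ 3x² dx = 1 and sampling each density by inverting its CDF. A density proportional to the integrand, p(x) = 3x², gives zero variance but requires knowing the normalization, i.e. the answer. p(x) = 2x roughly follows the integrand and beats uniform sampling. p(x) = 2(1 − x) puts little density where the integrand is large; its true variance ∫ f²/p dx − 1 is infinite, so its sample variance stays large and never settles.

```python
import math
import random
from statistics import pvariance


def f(x: float) -> float:
    return 3.0 * x * x          # integral over [0, 1] is 1


# name -> (sampler mapping u in [0, 1) to x via the inverse CDF, pdf)
strategies = {
    "uniform": (lambda u: u, lambda x: 1.0),
    "linear 2x": (lambda u: math.sqrt(u), lambda x: 2.0 * x),
    "ideal 3x^2": (lambda u: u ** (1.0 / 3.0), lambda x: 3.0 * x * x),
    "bad 2(1-x)": (lambda u: 1.0 - math.sqrt(1.0 - u), lambda x: 2.0 * (1.0 - x)),
}


def weighted_samples(name: str, n: int, rng: random.Random) -> list[float]:
    """Return the per-sample values f(X_i) / p(X_i) for one strategy."""
    sample, pdf = strategies[name]
    values = []
    for _ in range(n):
        x = sample(rng.random())
        if pdf(x) > 0.0:        # skip the measure-zero points where p vanishes
            values.append(f(x) / pdf(x))
    return values


rng = random.Random(11)
variances = {name: pvariance(weighted_samples(name, 100_000, rng)) for name in strategies}
# Expected: ideal = 0, linear 2x ~ 0.125, uniform ~ 0.8, bad 2(1-x) large and unstable
```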

Emission Sampling

BRDF Sampling

High Throughput Connection

Challenges
• It’s hard to get the PDF of the product of
  – Incoming radiance $L(x,\omega_i)$ and
  – BRDF $f(\omega_i,\omega_o)$
• There’s an implicit visibility term within $L(x,\omega_i)$
  – The visibility term can’t be derived before tracing
• Use machine learning to adapt the sampling distribution?
• Dilemma
  – Highly specular BRDFs with point light sources

Highly Specular BRDF & Point Light (figure panels: BRDF Sampling vs. Light Sampling)

Multiple Importance Sampling [Veach’95]

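Multiple Importance Sampling (Cont’d)
$$\frac{1}{n_f}\sum_{i=1}^{n_f}\frac{f(X_i)\, g(X_i)\, w_f(X_i)}{p_f(X_i)} + \frac{1}{n_g}\sum_{j=1}^{n_g}\frac{f(Y_j)\, g(Y_j)\, w_g(Y_j)}{p_g(Y_j)}$$
• $n_s$ is the number of samples taken from $p_s$
• The weighting functions $w_s$ take into account all of the different ways that a sample $X_i$ or $Y_j$ could have been generated
  – Balance heuristic: $w_s(x) = \dfrac{n_s\, p_s(x)}{\sum_i n_i\, p_i(x)}$
  – Power heuristic: $w_s(x) = \dfrac{\left(n_s\, p_s(x)\right)^{\beta}}{\sum_i \left(n_i\, p_i(x)\right)^{\beta}}$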
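A 1D sketch of the two-strategy MIS estimator with these weights. The integrand 3x² (the product f·g with g ≡ 1 for brevity), the two densities (uniform and p(x) = 2x, each sampled by CDF inversion) and the sample counts are illustrative assumptions; in a renderer the two strategies would typically be BRDF sampling and light sampling. With beta = 1 the weights are the balance heuristic; beta = 2 gives the power heuristic commonly used following Veach.

```python
import math
import random


def integrand(x: float) -> float:
    return 3.0 * x * x                  # integral over [0, 1] is 1


# Two sampling strategies: (sampler mapping u in [0, 1) to x, pdf)
strategies = [
    (lambda u: u, lambda x: 1.0),                    # uniform
    (lambda u: math.sqrt(u), lambda x: 2.0 * x),     # p(x) = 2x
]


def mis_weight(s: int, x: float, counts: list[int], beta: float) -> float:
    """w_s(x) = (n_s p_s(x))^beta / sum_k (n_k p_k(x))^beta."""
    terms = [(n_k * pdf(x)) ** beta for n_k, (_, pdf) in zip(counts, strategies)]
    return terms[s] / sum(terms)


def mis_estimate(counts: list[int], beta: float, rng: random.Random) -> float:
    """Sum over strategies of (1/n_s) * sum_i w_s(X_i) f(X_i) / p_s(X_i)."""
    total = 0.0
    for s, (sample, pdf) in enumerate(strategies):
        acc = 0.0
        for _ in range(counts[s]):
            x = sample(rng.random())
            p = pdf(x)
            if p > 0.0:                 # skip the measure-zero point where p_s vanishes
                acc += mis_weight(s, x, counts, beta) * integrand(x) / p
        total += acc / counts[s]
    return total


rng = random.Random(42)
balance = mis_estimate([20_000, 20_000], beta=1.0, rng=rng)
power = mis_estimate([20_000, 20_000], beta=2.0, rng=rng)
```

Because the weights sum to one wherever some density is positive, both variants stay unbiased; they differ only in how they split each sample's contribution between the strategies.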
