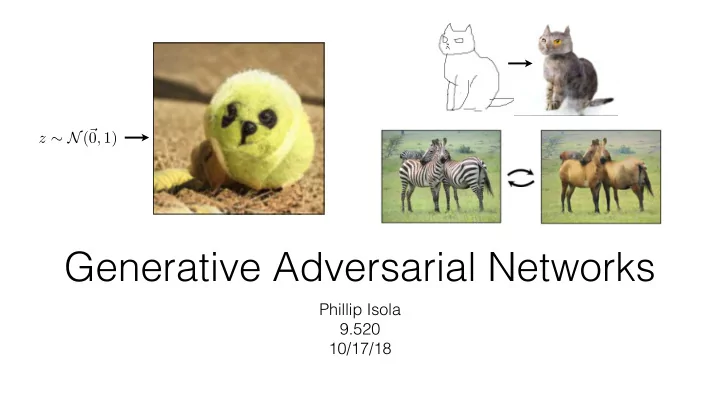

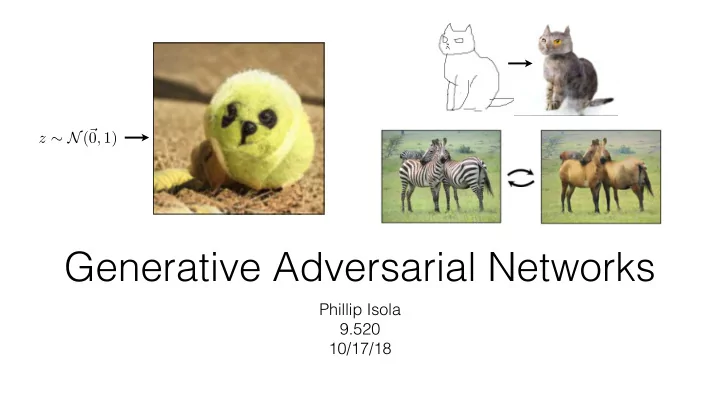

GANs are implicit generative models. Here $p(x)$ is the "generative model" of the data $x$. Noise distribution: $z \sim \mathcal{N}(0, 1)$. Data distribution: $x \sim p(x)$. GAN: $G(z) \sim p(x)$. Samples from a perfectly optimized GAN are samples from the data distribution.
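A minimal sketch of what "implicit" means here: the model is only ever asked for samples, obtained by pushing noise through $G$, and never for a density value. The `generator` below is a hypothetical stand-in (an affine map, not a trained network), chosen so that the distribution of $G(z)$ is known in advance.

```python
import random
import statistics

def sample_noise(rng):
    """Draw z ~ N(0, 1)."""
    return rng.gauss(0.0, 1.0)

def generator(z, mu=3.0, sigma=0.5):
    """Hypothetical toy generator: an affine map, so G(z) ~ N(mu, sigma^2)."""
    return mu + sigma * z

rng = random.Random(0)
samples = [generator(sample_noise(rng)) for _ in range(20000)]

# We can draw samples and summarize them, but the model itself
# never exposes an explicit density p(x).
sample_mean = statistics.fmean(samples)
sample_std = statistics.pstdev(samples)
print(f"mean ~ {sample_mean:.2f}, std ~ {sample_std:.2f}")
```

If the toy data distribution were $\mathcal{N}(3, 0.5^2)$, this generator would count as "perfectly optimized" in the sense above.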

Progressive GAN [Karras et al., 2018]

Proposition 1. For $G$ fixed, the optimal discriminator $D$ is

$$D^*_G(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}$$

Proof. For fixed $G$, the discriminator maximizes $V(G, D)$:

$$V(G, D) = \int_x p_{\text{data}}(x) \log(D(x)) \, dx + \int_z p_z(z) \log(1 - D(g(z))) \, dz$$

$$= \int_x p_{\text{data}}(x) \log(D(x)) + p_g(x) \log(1 - D(x)) \, dx$$

For any $(a, b) \in \mathbb{R}^2 \setminus \{0, 0\}$, the function $y \mapsto a \log(y) + b \log(1 - y)$ achieves its maximum in $[0, 1]$ at $\frac{a}{a + b}$. The discriminator does not need to be defined outside of $\mathrm{Supp}(p_{\text{data}}) \cup \mathrm{Supp}(p_g)$, concluding the proof.
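The key step of the proof, that $a \log(y) + b \log(1 - y)$ peaks at $a / (a + b)$, can be checked numerically. This is only a sanity-check sketch: the values of `a` and `b` are illustrative stand-ins for $p_{\text{data}}(x)$ and $p_g(x)$ at one fixed $x$, and the maximizer is found by brute-force grid search.

```python
import math

def objective(y, a, b):
    """Pointwise integrand a*log(y) + b*log(1 - y) for y in (0, 1)."""
    return a * math.log(y) + b * math.log(1.0 - y)

def grid_argmax(a, b, steps=100000):
    """Brute-force maximizer of the integrand over an open grid in (0, 1)."""
    grid = (i / steps for i in range(1, steps))
    return max(grid, key=lambda y: objective(y, a, b))

# a plays the role of p_data(x) and b of p_g(x) at one fixed point x
# (illustrative values, not taken from any dataset).
a, b = 0.3, 0.1
y_star = grid_argmax(a, b)
closed_form = a / (a + b)
print(y_star, closed_form)
```

The grid maximizer agrees with the closed form up to the grid resolution.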

Theorem 1. The global minimum of the virtual training criterion $C(G)$ is achieved if and only if $p_g = p_{\text{data}}$; that is, $p_g = p_{\text{data}}$ is the unique global minimizer of the GAN objective.

Proof.

$$C(G) = \max_D V(G, D) = \mathbb{E}_{x \sim p_{\text{data}}}[\log D^*_G(x)] + \mathbb{E}_{z \sim p_z}[\log(1 - D^*_G(G(z)))]$$

$$= \mathbb{E}_{x \sim p_{\text{data}}}[\log D^*_G(x)] + \mathbb{E}_{x \sim p_g}[\log(1 - D^*_G(x))]$$

$$= \mathbb{E}_{x \sim p_{\text{data}}}\left[\log \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}\right] + \mathbb{E}_{x \sim p_g}\left[\log \frac{p_g(x)}{p_{\text{data}}(x) + p_g(x)}\right]$$

Rewriting in terms of the mixture $\frac{p_{\text{data}} + p_g}{2}$:

$$C(G) = -\log(4) + \mathrm{KL}\left(p_{\text{data}} \,\middle\|\, \frac{p_{\text{data}} + p_g}{2}\right) + \mathrm{KL}\left(p_g \,\middle\|\, \frac{p_{\text{data}} + p_g}{2}\right)$$

where KL is the Kullback–Leibler divergence. We recognize in the previous expression the Jensen–Shannon divergence between the model's distribution and the data generating process:

$$C(G) = -\log(4) + 2 \cdot \mathrm{JSD}(p_{\text{data}} \,\|\, p_g), \qquad \mathrm{JSD}(p_{\text{data}} \,\|\, p_g) \geq 0, \quad \mathrm{JSD}(p_{\text{data}} \,\|\, p_g) = 0 \iff p_g = p_{\text{data}}$$
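The identity $C(G) = -\log(4) + 2 \cdot \mathrm{JSD}(p_{\text{data}} \,\|\, p_g)$ can be checked on a small discrete example. The sketch below assumes finite distributions given as probability lists; the particular numbers are made up for illustration.

```python
import math

def kl(p, q):
    """KL(p || q) for discrete distributions given as probability lists."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def jsd(p, q):
    """Jensen-Shannon divergence: average KL to the mixture (p + q) / 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def criterion(p_data, p_g):
    """C(G) evaluated with the optimal discriminator p_data / (p_data + p_g)."""
    total = 0.0
    for pd, pg in zip(p_data, p_g):
        if pd > 0:
            total += pd * math.log(pd / (pd + pg))
        if pg > 0:
            total += pg * math.log(pg / (pd + pg))
    return total

# Illustrative distributions over three outcomes.
p_data = [0.5, 0.3, 0.2]
p_g = [0.2, 0.2, 0.6]

lhs = criterion(p_data, p_g)
rhs = -math.log(4) + 2 * jsd(p_data, p_g)
at_optimum = criterion(p_data, p_data)  # value when p_g = p_data
print(lhs, rhs, at_optimum)
```

Both sides agree, and the criterion reaches its minimum value $-\log(4)$ exactly when the two distributions coincide.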

Behavior under model misspecification [Theis et al. 2016]

Mode covering versus mode seeking [Larsen et al. 2016]

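$z \to G \to G(z) \to D \to$ fake (0.9); $x \to D \to$ real (0.1). Here $D$ outputs the probability that its input is fake. [Goodfellow et al., 2014]

$$\arg\max_D \; \mathbb{E}_{z, x}\left[\log D(G(z)) + \log(1 - D(x))\right]$$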
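A small sketch of how this objective could be estimated on a batch, assuming (as the 0.9 and 0.1 labels suggest) that $D$ outputs the probability that its input is fake. The discriminator outputs are hypothetical numbers, not the result of training.

```python
import math

def discriminator_objective(d_on_fake, d_on_real):
    """Batch estimate of E[log D(G(z)) + log(1 - D(x))].

    Assumes D returns the probability that its input is fake.
    """
    fake_term = sum(math.log(d) for d in d_on_fake) / len(d_on_fake)
    real_term = sum(math.log(1.0 - d) for d in d_on_real) / len(d_on_real)
    return fake_term + real_term

# Hypothetical discriminator outputs on a small batch.
confident = discriminator_objective(d_on_fake=[0.9, 0.8], d_on_real=[0.1, 0.2])
unsure = discriminator_objective(d_on_fake=[0.5, 0.5], d_on_real=[0.5, 0.5])
print(confident, unsure)
```

A discriminator that separates the two batches scores higher than one that always answers 0.5, which is what the $\arg\max_D$ rewards.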

$z \to G \to G(z) \to f \to$ low score; $x \to f \to$ high score. EBGAN, WGAN, LSGAN, etc.

$$\arg\max_f \; \mathbb{E}_{z, x}\left[f(x) - f(G(z))\right]$$
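The score-based objective can be sketched the same way. This shows only the generic form $\mathbb{E}[f(x) - f(G(z))]$; each named method adds its own constraint or loss shape (WGAN, for example, restricts $f$ to be Lipschitz), and none of that is modeled here. The linear `toy_critic` and the batches are hypothetical.

```python
def critic_objective(f, real_batch, fake_batch):
    """Batch estimate of E[f(x)] - E[f(G(z))]: real scores up, fake scores down."""
    mean_real = sum(f(x) for x in real_batch) / len(real_batch)
    mean_fake = sum(f(g) for g in fake_batch) / len(fake_batch)
    return mean_real - mean_fake

def toy_critic(x, w=1.0):
    """Hypothetical linear critic f(x) = w * x."""
    return w * x

real_batch = [2.9, 3.1, 3.0]   # stand-ins for data samples x
fake_batch = [0.9, 1.1, 1.0]   # stand-ins for generator samples G(z)

good = critic_objective(toy_critic, real_batch, fake_batch)
flipped = critic_objective(lambda x: toy_critic(x, w=-1.0), real_batch, fake_batch)
print(good, flipped)
```

Without some constraint on $f$, scaling `w` up would increase the objective without bound, which is one reason the concrete methods restrict the critic.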

Modeling multiple possible outputs: $x \to G \to G(x)$

Modeling multiple possible outputs (figure: one input, several possible outputs)

Modeling multiple possible outputs: $x$ and $z \sim \mathcal{N}(\vec{0}, 1)$ $\to G \to G(x)$
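A toy sketch of why the extra noise input matters: with $z$ held fixed the generator is a deterministic function of $x$, while sampling $z$ yields a different output for the same input on each draw. The `generator` here is a hypothetical placeholder, not a trained model.

```python
import random

def generator(x, z):
    """Hypothetical toy generator: x fixes the center, z selects a variation."""
    return x + 0.5 * z

rng = random.Random(0)
x = 2.0  # one fixed input

# Sampling z ~ N(0, 1) gives several distinct outputs for the same x.
outputs = [generator(x, rng.gauss(0.0, 1.0)) for _ in range(5)]

# Holding z fixed collapses everything to a single output.
deterministic = [generator(x, 0.0) for _ in range(5)]
print(outputs, deterministic)
```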

InfoGAN [Chen et al. 2016], BiCycleGAN [Zhu et al., NIPS 2017]: $x$ and $z$ $\to G \to y$, with an encoder $q(z \mid y)$. Encourages $z$ to relay information about the target.

Labels $\to$ randomly generated facades [BiCycleGAN, Zhu et al., NIPS 2017]

Properties of generative models

1. Model high-dimensional, structured output → Use a deep net, D, to model output!
2. Model uncertainty; a whole distribution of possible outputs → Generator is stochastic, learns to match data distribution

Three perspectives on GANs

1. Structured loss
2. Generative model
3. Domain-level supervision / mapping

Paired data

Paired data vs. unpaired data. Jun-Yan Zhu, Taesung Park

$x \to G \to G(x)$; $D$: real or fake pair?

$$\arg\min_G \max_D \; \mathbb{E}_{x, y}\left[\log D(x, G(x)) + \log(1 - D(x, y))\right]$$

No input-output pairs!

$x \to G \to G(x) \to D \to$ real or fake?

$$\arg\min_G \max_D \; \mathbb{E}_{x, y}\left[\log D(G(x)) + \log(1 - D(y))\right]$$

Usually loss functions check if output matches a target instance. GAN loss checks if output is part of an admissible set.
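The contrast between the two kinds of loss can be illustrated with a deliberately simple stand-in. In the sketch below, `admissible_set_loss` is a hand-written placeholder for what a trained discriminator would provide; the interval $[0, 1]$ is a hypothetical admissible set, and the outputs are scalars purely for readability.

```python
def instance_loss(output, target):
    """Paired-style loss: distance to one specific target instance."""
    return abs(output - target)

def admissible_set_loss(output, low=0.0, high=1.0):
    """Stand-in for a GAN loss: zero anywhere inside the admissible set.

    A real discriminator learns this set from samples of Y; the interval
    [low, high] is a hypothetical placeholder.
    """
    if low <= output <= high:
        return 0.0
    return min(abs(output - low), abs(output - high))

candidates = [0.2, 0.8]   # two different outputs, both plausible
target = 0.2              # the single paired target

instance = [instance_loss(c, target) for c in candidates]
setwise = [admissible_set_loss(c) for c in candidates]
print(instance, setwise)
```

The instance loss penalizes the second candidate for not being the target, while the set-style loss accepts both because both are admissible.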

Gaussian $z$ $\to$ target distribution $Y$

Horses $X$ $\to$ zebras $Y$

$x \to G \to G(x) \to D \to$ Real!

$x \to G \to G(x) \to D \to$ Real too! Nothing to force output to correspond to input.
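A toy illustration of this point, under the assumption that the discriminator only ever sees outputs in $Y$ and never the input that produced them. The "images" are hypothetical string labels; two mappings that pair inputs and outputs differently are indistinguishable from the discriminator's side.

```python
# Hypothetical toy domains: three horses and three zebras, labeled by pose.
horses = ["horse_pose_a", "horse_pose_b", "horse_pose_c"]
zebras = ["zebra_pose_a", "zebra_pose_b", "zebra_pose_c"]

# One mapping preserves the pose, the other scrambles it.
aligned = dict(zip(horses, zebras))
scrambled = dict(zip(horses, zebras[1:] + zebras[:1]))

def output_distribution(mapping):
    """Everything an unconditional discriminator on Y gets to see."""
    return sorted(mapping[h] for h in horses)

same_outputs = output_distribution(aligned) == output_distribution(scrambled)
print(same_outputs)
```

Both mappings produce exactly the same set of zebras, so an adversarial loss on $Y$ alone cannot prefer the pose-preserving one.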

Cycle-Consistent Adversarial Networks [Zhu et al. 2017], [Yi et al. 2017], [Kim et al. 2017]

Cycle Consistency Loss
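A minimal sketch of a cycle consistency loss, assuming an L1 reconstruction penalty in both directions, $\lVert F(G(x)) - x \rVert_1 + \lVert G(F(y)) - y \rVert_1$. The text here gives only the title, so this form, the names `G` and `F_inverse`, and the toy affine mappings are all assumptions for illustration.

```python
def l1(a, b):
    """Mean absolute difference between two equal-length vectors."""
    return sum(abs(ai - bi) for ai, bi in zip(a, b)) / len(a)

def cycle_loss(G, F, xs, ys):
    """Mean of |F(G(x)) - x| over xs plus mean of |G(F(y)) - y| over ys."""
    forward = sum(l1(F(G(x)), x) for x in xs) / len(xs)
    backward = sum(l1(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward

# Hypothetical toy mappings between domains X and Y.
def G(x):
    return [2.0 * v + 1.0 for v in x]        # X -> Y

def F_inverse(y):
    return [(v - 1.0) / 2.0 for v in y]      # Y -> X, exact inverse of G

def F_mismatched(y):
    return [v / 2.0 for v in y]              # Y -> X, not an inverse of G

xs = [[0.0, 1.0], [2.0, 3.0]]
ys = [[1.0, 3.0], [5.0, 7.0]]

consistent = cycle_loss(G, F_inverse, xs, ys)
inconsistent = cycle_loss(G, F_mismatched, xs, ys)
print(consistent, inconsistent)
```

The loss is zero when the two mappings undo each other and positive otherwise, which is the extra pressure that ties each output back to its input.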

Failure case

Why does CycleGAN work?

Slide credit: Ming-Yu Liu

Simplicity hypothesis [Galanti, Wolf, Benaim, 2018]

Cycle loss upper bounds conditional entropy (figure panels: high conditional entropy, low conditional entropy). "ALICE: Towards Understanding Adversarial Learning for Joint Distribution Matching" [Li et al. NIPS 2017]. Also see "CycleGAN as Approximate Bayesian Inference" [Tiao et al. 2018].

Domain Adaptation [Tzeng et al. 2014]

Sim2real: simulated data vs. real data [Richter*, Vineet* et al. 2016], [Krähenbühl et al. 2018]
