Experiment Driven Research Emmanuel Jeannot INRIA LaBRI 2011


Experiment Driven Research
Emmanuel Jeannot, INRIA, LaBRI
2011 ComplexHPC Spring School, Amsterdam, May 2011

The ComplexHPC Action
What is ComplexHPC?
Experiment Driven Research Emmanuel Jeannot 1/33

Modern infrastructures are more and more complex
Large-scale env.:
  • WAN (latency)
  • High computing/storage capacity
Tens of clusters:
  • Homogeneity
  • Power consumption
Heterogeneous env.:
  • Use of available resources
  • Processor and network heterogeneity
Thousands of nodes:
  • SMP
  • RAM (NUMA)
Thousands of processors:
  • CPU/GPU
  • Intrinsically parallel (ILP, TLP)
Millions of cores:
  • More and more
  • General purpose or specialized
[Diagram: nested levels, from outermost to innermost: Large-scale env., Cluster/Heter. env., Node, Processor, Core]
High Performance Computing on Complex Environments 2/20 E. Jeannot

Characteristics of modern infrastructures
Modern infrastructures are already:
  • Hierarchical
  • Heterogeneous (data transfer & computation)
  • Of different scales
Near future:
  • Large-scale infrastructures
  • Dozens of sites
  • Several heterogeneous computers/clusters per site
  • Thousands of processors per parallel computer
  • Tens of cores on each processor
Complexity: 1 large-scale env., 20 sites, 100 clusters, 10^5 processors, 10^8 cores
Lot of power: do we need it?

Applications
High-performance applications:
  • Larger and larger data sets
  • Higher and higher computational requirements
  • Relevant applications:
      • Environmental simulations
      • Molecular dynamics
      • Satellite imaging
      • Medicine (modeling and simulation)

Goal of the ComplexHPC Action
Rationale:
  • Enormous computational demand
  • Architectural advances: potential to meet requirements
  • No integrated solution to master the complexity
  • Research is fragmented
Goals:
  • Overcome research fragmentation: foster HPC efforts to increase Europe's competitiveness
  • Tackle the problem at every level (from cores to large-scale env.)
  • Vertical integration: provide new integrated solutions for large-scale computing on future platforms
  • Train new generations of scientists in high-performance and heterogeneous computing

Activities within the Action
MC meetings:
  • Organization of the network
  • Action steering
  • Inclusion of new members
Working groups:
  • 4 working groups
  • Meet twice a year
  • Identify synergies
  • Exchange ideas
  • Implement synergies
Visits:
  • 60 short-term visits (priority to young researchers)
  • 12 long-term visits (fellowship)
Summer/spring schools:
  • Forum for students and young researchers
  • Second and fourth years
International workshops:
  • HCW (in conjunction with IPDPS)
  • Heteropar (in conjunction with Europar)
Action meetings:
  • Transversal to working groups
  • Once a year

Scientific programme
Four working groups:
  • Numerical analysis
  • Efficient use of complex systems (comp. or comm. library)
  • Algorithms and tools for mapping applications
  • Applications
[Diagram labels: Applications, Numerical analysis, Libraries, Alg. & tools for mapping]
The working groups target vertical aspects of the architectural structure.
Each WG is managed by its own leader (a specialist, nominated by the MC).
Each participant of the Action will join at least one WG.

STSM
Short Term Scientific Mission
  • An exchange program is open for visits and exchanges before the end of June.
  • Do not hesitate to apply:
      • Emmanuel.jeannot@inria.fr
      • Krzysztof Kurowski: krzysztof.kurowski@man.poznan.pl

My solution is better than yours

Efficiency       Workload A   Workload B   Workload C
My Solution      10           20           40
Your Solution    40           20           10

My Solution/Your Solution:
  • Workload A: 0.25
  • Workload B: 1
  • Workload C: 4
Average: 5.25/3 = 1.75
On average, My Solution is 1.75 times more efficient than Your Solution
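The arithmetic on this slide can be checked with a short script. The sketch below uses the table's figures; the helper names `mean_ratio` and `geo_mean_ratio` are illustrative and not from the talk. Because the table is symmetric, swapping the roles of the two solutions gives the same arithmetic mean of ratios, so the same data also "shows" that Your Solution is 1.75 times more efficient. The geometric mean of the ratios, a common alternative for normalized figures, does not have this asymmetry.

```python
from statistics import fmean, geometric_mean

# Efficiency figures from the slide's table (workloads A, B, C).
mine = [10, 20, 40]
yours = [40, 20, 10]

def mean_ratio(numerators, denominators):
    """Arithmetic mean of the per-workload ratios."""
    return fmean([n / d for n, d in zip(numerators, denominators)])

def geo_mean_ratio(numerators, denominators):
    """Geometric mean of the per-workload ratios."""
    return geometric_mean([n / d for n, d in zip(numerators, denominators)])

print("mine/yours, arithmetic mean:", mean_ratio(mine, yours))
print("yours/mine, arithmetic mean:", mean_ratio(yours, mine))
print("mine/yours, geometric mean: ", geo_mean_ratio(mine, yours))
print("yours/mine, geometric mean: ", geo_mean_ratio(yours, mine))
```

Both arithmetic means come out at 1.75, while both geometric means are 1 (up to floating-point rounding), which matches the intuition that neither solution dominates on this data.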

Experimental validation
Environment stack (top to bottom): Applications, Middleware, Services-protocols, Infrastructure
Research issues at each layer of the stack, for example:
  • algorithms
  • software
  • data
  • models
  • …
Problem of experiments:
  • Testing and validating solutions and models as a scientific problem
  • Questions:
      • What is a good experiment?
      • Which methodologies and tools should be used to perform experiments?
      • What are the advantages and drawbacks of these methodologies/tools?

Outline
  • Importance, role and properties of experiments in computer science
  • Different experimental methodologies
  • Statistical analysis of results

The discipline of computing: a science
"The discipline of computing is the systematic study of algorithmic processes that describe and transform information: their theory, analysis, design, efficiency, implementation and application"
Peter J. Denning et al., COMPUTING AS A DISCIPLINE
  • Confusion: computer science is not only science, it is also engineering, technology, etc. (≠ biology or physics). Problem of popularization.
  • Two ways of classifying knowledge:
      • Analytic: using mathematics on tractable models
      • Experimental: gathering facts through observation

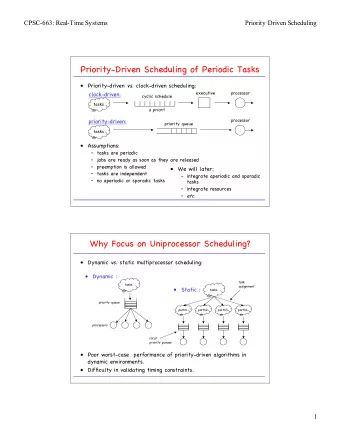

The discipline of computing: an experimental science
The reality of computer science:
  - information
  - computers, network, algorithms, programs, etc.
Studied objects (hardware, programs, data, protocols, algorithms, network): more and more complex.
Modern infrastructures:
  • Processors have very nice features:
      • Cache
      • Hyperthreading
      • Multi-core
  • The operating system impacts performance (process scheduling, socket implementation, etc.)
  • The runtime environment plays a role (MPICH ≠ OPENMPI)
  • Middleware has an impact (Globus ≠ GridSolve)
  • Various parallel architectures that can be:
      • Heterogeneous
      • Hierarchical
      • Distributed
      • Dynamic

Analytic modeling
Purely analytical (math) models:
  • Demonstration of properties (theorem)
  • Models need to be tractable: over-simplification?
  • Good for understanding the basics of the problem
  • Most of the time, one still performs experiments (at least for comparison)
For a practical impact (especially in distributed computing): an analytic study is not always possible or not sufficient

Experimental culture: great successes
Experimental computer science at its best [Denning1980]:
  • Queue models (Jackson, Gordon, Newell, '50s and '60s). Stochastic models validated experimentally
  • Paging algorithms (Belady, end of the '60s). Experiments to show that LRU is better than FIFO
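The paging comparison lends itself to a small experiment. The sketch below is not Belady's original study: it is a minimal simulation that counts page faults for FIFO and LRU replacement on one reference string commonly used in operating-systems textbooks, with 3 frames. The function names, the reference string and the frame count are choices made for this illustration, and a single trace says nothing about other workloads.

```python
from collections import OrderedDict, deque

def fifo_faults(refs, frames):
    """Count page faults when the oldest loaded page is evicted first."""
    memory = set()
    load_order = deque()
    faults = 0
    for page in refs:
        if page not in memory:
            faults += 1
            if len(memory) == frames:
                memory.discard(load_order.popleft())
            memory.add(page)
            load_order.append(page)
    return faults

def lru_faults(refs, frames):
    """Count page faults when the least recently used page is evicted."""
    memory = OrderedDict()  # keys ordered from least to most recently used
    faults = 0
    for page in refs:
        if page in memory:
            memory.move_to_end(page)
        else:
            faults += 1
            if len(memory) == frames:
                memory.popitem(last=False)
            memory[page] = True
    return faults

# Illustrative reference string (an assumption, not data from the talk).
refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
print("FIFO faults:", fifo_faults(refs, 3))
print("LRU faults: ", lru_faults(refs, 3))
```

On this trace FIFO incurs 15 faults and LRU 12. Repeating the run over many traces and frame counts is what turns such a comparison into an experiment in the sense of the slide.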

Experimental culture not comparable with other sciences
Different studies:
  • In the '90s: between 40% and 50% of CS ACM papers requiring experimental validation had none (15% in optical engineering) [Lukowicz et al.]
  • "Too many articles have no experimental validation" [Zelkowitz and Wallace 98]: 612 articles published by IEEE.
  • Quantitatively, more experiments over time
Computer science is not at the same level as some other sciences:
  • Nobody redoes experiments (no funding).
  • Lack of tools and methodologies.
M.V. Zelkowitz and D.R. Wallace. Experimental models for validating technology. Computer, 31(5):23-31, May 1998.

Error bars in scientific papers
Who has ever published a paper with error bars?
In computer science, very few papers contain error bars:

Euro-Par       Nb papers   With error bars   Percentage
2007           89          5                 5.6%
2008           89          3                 3.4%
2009           86          2                 2.3%
3 last conf.   264         10                3.8%
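One common way to obtain an error bar is a confidence interval around the mean of repeated measurements. The sketch below assumes independent runs and uses a normal approximation; for small samples a Student t quantile is usually more appropriate. The function name `confidence_interval` and the runtimes are hypothetical and not taken from the talk.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

def confidence_interval(samples, level=0.95):
    """Return (mean, half_width) of a normal-approximation confidence interval."""
    if len(samples) < 2:
        raise ValueError("need at least two measurements")
    # Two-sided quantile of the standard normal distribution (about 1.96 for 95%).
    z = NormalDist().inv_cdf(0.5 + level / 2)
    half_width = z * stdev(samples) / sqrt(len(samples))
    return mean(samples), half_width

# Hypothetical runtimes in seconds from repeated runs of one experiment.
runtimes = [10.2, 9.8, 10.5, 10.1, 9.9, 10.4, 10.0, 10.3]
center, half_width = confidence_interval(runtimes)
print(f"runtime = {center:.2f} s +/- {half_width:.2f} s (95% CI, normal approx.)")
```

The half-width is what would be drawn as the error bar above and below the plotted mean.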

Three paradigms of computer science
Three feedback loops of the three paradigms of CS [Denning 89], [Feitelson 07]:
  • Theory: Definition, Theorem, Proof, Result interpretation
  • Modeling: Observation, Model, Prediction, Experimental test
  • Design: Idea/need, Design, Implementation, Experimental validation

Two types of experiments
  • Test and compare:
      1. Model validation (comparing models with reality): is my hypothesis valid?
      2. Quantitative validation (measuring performance): is my solution better?
  • Can occur at the same time. Ex.: validation of the implementation of an algorithm:
      • grounding modeling is precise
      • design is correct
[Diagram: Modeling loop (Observation, Model, Prediction, Experimental test) and Design loop (Idea/need, Design, Implementation, Experimental validation)]

Main advantages [Tichy 98]:
  • Experiments: testing hypotheses, algorithms or programs helps construct a database of knowledge on the theories, methods and tools used for such studies.
  • Observations: unexpected or negative results help eliminate less fruitful fields of study, erroneous approaches or false hypotheses.
