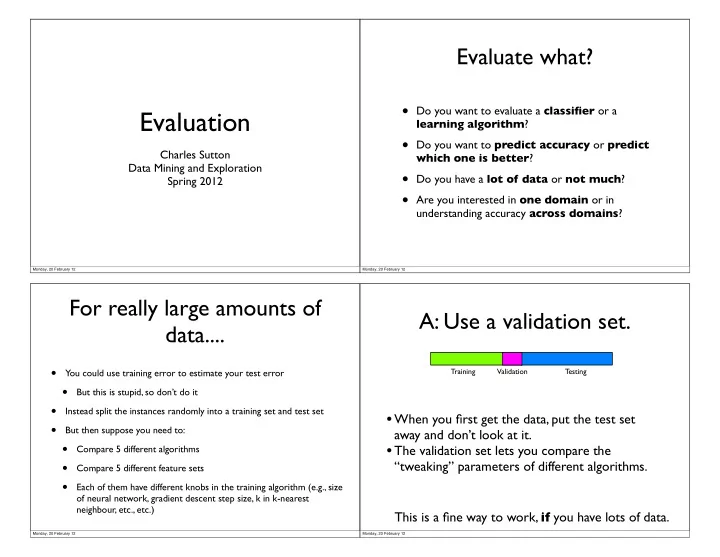

Evaluation
Charles Sutton
Data Mining and Exploration
Spring 2012
Monday, 20 February 12

Evaluate what?
• Do you want to evaluate a classifier or a learning algorithm?
• Do you want to predict accuracy or predict which one is better?
• Do you have a lot of data or not much?
• Are you interested in one domain or in understanding accuracy across domains?

For really large amounts of data....
• You could use training error to estimate your test error
• But this is stupid, so don’t do it
• Instead split the instances randomly into a training set and test set
• But then suppose you need to:
  • Compare 5 different algorithms
  • Compare 5 different feature sets
• Each of them has different knobs in the training algorithm (e.g., size of neural network, gradient descent step size, k in k-nearest neighbour, etc., etc.)

A: Use a validation set.
Training | Validation | Testing
• When you first get the data, put the test set away and don’t look at it.
• The validation set lets you compare the “tweaking” parameters of different algorithms.
This is a fine way to work, if you have lots of data.
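The three-way split described above can be sketched in a few lines of Python. This is only an illustration: the function name `split_indices`, the 60/20/20 proportions and the fixed seed are assumptions, not something the slides prescribe.

```python
import random


def split_indices(n, val_fraction=0.2, test_fraction=0.2, seed=0):
    """Randomly partition range(n) into train, validation and test index lists.

    The fractions and the seed are illustrative assumptions.
    """
    indices = list(range(n))
    random.Random(seed).shuffle(indices)  # seeded so the split is reproducible
    n_test = int(n * test_fraction)
    n_val = int(n * val_fraction)
    test = indices[:n_test]                # put away until the final estimate
    val = indices[n_test:n_test + n_val]   # used to compare algorithms and knob settings
    train = indices[n_test + n_val:]       # used to fit each candidate model
    return train, val, test


train_idx, val_idx, test_idx = split_indices(1000)
print(len(train_idx), len(val_idx), len(test_idx))
```

Each algorithm, feature set and knob setting would be fitted on `train_idx` and compared on `val_idx`; `test_idx` is used once, at the end.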

1. Hypothesis Testing

Variability
Classifier A: 81% accuracy
Classifier B: 84% accuracy
Which classifier do you think is best?

Variability
But then suppose I tell you
• Only 100 examples in the test set
• After 400 more test examples, I get

| Test examples | 0-100 | 101-200 | 201-300 | 301-400 | 401-500 |
|---|---|---|---|---|---|
| A | 0.81 | 0.77 | 0.78 | 0.81 | 0.78 |
| B | 0.84 | 0.75 | 0.75 | 0.76 | 0.78 |

Sources of Variability
• Choice of training set
• Choice of test set
• Inherent randomness in learning algorithm
• Errors in data labeling
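To see how much the picture changes with more test data, the sketch below averages the five per-block accuracies from the table above. It assumes the blocks are equally sized, so the plain mean equals the accuracy over all 500 examples; the variable names are illustrative.

```python
from statistics import mean, stdev

# Per-block accuracies copied from the table above (five blocks of test examples).
acc_a = [0.81, 0.77, 0.78, 0.81, 0.78]
acc_b = [0.84, 0.75, 0.75, 0.76, 0.78]

# With equally sized blocks, the mean over blocks is the overall accuracy.
mean_a, mean_b = mean(acc_a), mean(acc_b)
# Sample standard deviation across blocks: a rough measure of test-set variability.
std_a, std_b = stdev(acc_a), stdev(acc_b)

print(f"A: mean {mean_a:.3f}, std across blocks {std_a:.3f}")
print(f"B: mean {mean_b:.3f}, std across blocks {std_b:.3f}")
```

On the first block alone B looks better (0.84 against 0.81), but over all five blocks A averages 0.790 and B 0.776, so the ranking flips.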

Key point
Your measured testing error is a random variable (you sampled the testing data).
Want to infer the “true test error” based on this sample.
This is another learning problem!
Next slide: Make this more formal...

Test error is a random variable
Call your test data $x_1, x_2, \ldots, x_N$, where $x_i \sim \mathcal{D}$ independently, the classifier $h$, and the true function $f$.

True error:
$$e = \Pr_{x \sim \mathcal{D}}[f(x) \neq h(x)]$$

Test error:
$$\hat{e} = \frac{1}{N} \sum_{i=1}^{N} \mathbf{1}[f(x_i) \neq h(x_i)]$$

where $\mathbf{1}[\cdot]$ is the indicator (delta) function: 1 if the condition holds, 0 otherwise.

Theorem: As $N \to \infty$, $\hat{e} \to e$. [Why?]

Test error is a random variable
With the same definitions, the number of test errors is binomially distributed.

Theorem: $N\hat{e} \sim \mathrm{Binomial}(N, e)$

Main question
Suppose
Classifier A: 81% accuracy
Classifier B: 84% accuracy
Is that difference real?
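As a rough illustration of the binomial theorem above: if the number of test errors is Binomial(N, e), the standard deviation of the test error is sqrt(e(1 - e)/N). The sketch below computes the test error from labels and that standard deviation. The function names are made up for this example, and plugging in 0.19 (Classifier A's observed error) for the unknown true error is an assumption.

```python
import math


def empirical_error(true_labels, predictions):
    """Fraction of test examples on which the prediction differs from the true label."""
    if len(true_labels) != len(predictions):
        raise ValueError("true_labels and predictions must have the same length")
    mistakes = sum(1 for y, y_hat in zip(true_labels, predictions) if y != y_hat)
    return mistakes / len(true_labels)


def error_std(e, n):
    """Standard deviation of the test error when the error count is Binomial(n, e)."""
    return math.sqrt(e * (1.0 - e) / n)


# Assumption: use the observed error 0.19 as a stand-in for the true error e.
std_100 = error_std(0.19, 100)
std_500 = error_std(0.19, 500)
print(f"N=100: {std_100:.4f}   N=500: {std_500:.4f}")
```

With only 100 test examples the standard deviation is about 0.04, larger than the 0.03 gap between the two classifiers, which is why the main question is worth asking.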

Learning and Evaluation

| | Learning | Evaluation |
|---|---|---|
| World | Original problem (e.g., difference between spam and normal emails) | True error |
| Sample | Inboxes for multiple users | Classifier performance on each example |
| Estimation | Classifier | Avg error on test set |

Rough-and-ready variability
Classifier A: 81% accuracy
Classifier B: 84% accuracy
Is that difference real?
Answer 1: If doing cross-validation, report both mean and standard deviation of error across folds.

Hypothesis testing
Want to know whether $\hat{e}_A$ and $\hat{e}_B$ are significantly different.
1. Suppose not. [“null hypothesis”]
2. Define a test statistic, in this case $T = |e_A - e_B|$
3. Measure a value of the statistic, $\hat{T} = |\hat{e}_A - \hat{e}_B|$
4. Derive the distribution of $\hat{T}$ assuming #1.
5. If $p = \Pr[T > \hat{T}]$ is really low, e.g., < 0.05, “reject the null hypothesis”
$p$ is your p-value.
If you reject, then the difference is “statistically significant”.

Example
Classifier A: 81% accuracy
Classifier B: 84% accuracy
$\hat{T} = |\hat{e}_A - \hat{e}_B| = 0.03$
4. Derive the distribution of $\hat{T}$ assuming the null.
What we know:
$$N\hat{e}_A \sim \mathrm{Binomial}(N, e_A), \qquad N\hat{e}_B \sim \mathrm{Binomial}(N, e_B), \qquad e_A = e_B$$

Approximation to the rescue
Approximate binomial by normal:
$$N\hat{e}_A \sim \mathcal{N}(N e_A,\; N e_A (1 - e_A))$$
[Figure: binomial probabilities `dbinom(x1, 25, 0.76)` plotted for x from 0 to 25]

Distribution under the null
4. Derive the distribution of $\hat{T}$ assuming the null.
What we know:
$$N\hat{e}_A \sim \mathcal{N}(N e_A, s_A^2), \qquad N\hat{e}_B \sim \mathcal{N}(N e_B, s_B^2), \qquad e_A = e_B$$
where $s_A^2 = N e_A (1 - e_A)$, and likewise $s_B^2 = N e_B (1 - e_B)$.

But this means
$$\hat{e}_A - \hat{e}_B \sim \mathcal{N}(0, s_{AB}^2), \qquad s_{AB}^2 = \frac{2}{N}\, e_{AB} (1 - e_{AB}), \qquad e_{AB} = \frac{1}{2}(e_A + e_B)$$
(assuming the two are independent...)

Computing the p-value
5. If $p = \Pr[T > \hat{T}]$ is really low, e.g., < 0.05, “reject the null hypothesis”
In our example, with $N = 100$ and $e_{AB} \approx \frac{1}{2}(0.19 + 0.16) = 0.175$:
$$\hat{e}_A - \hat{e}_B \sim \mathcal{N}(0, s_{AB}^2), \qquad s_{AB}^2 \approx 0.0029$$
So one line of R (or MATLAB):
`> pnorm(-0.03, mean=0, sd=sqrt(0.0029))`
`[1] 0.2887343`
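The same calculation can be reproduced in Python with the standard library, as a sketch. The helper names `diff_variance` and `one_sided_p` are invented for this example. The slide's `pnorm` call gives a one-sided tail probability; because the statistic is an absolute difference, a two-sided p-value would be twice that, which is added here as an observation rather than something the slide computes.

```python
import math
from statistics import NormalDist


def diff_variance(e_a, e_b, n):
    """Variance of e_hat_A - e_hat_B under the null: 2 * e_AB * (1 - e_AB) / n."""
    e_ab = 0.5 * (e_a + e_b)
    return 2.0 * e_ab * (1.0 - e_ab) / n


def one_sided_p(diff, variance):
    """Lower-tail probability of -|diff| under N(0, variance), like R's pnorm."""
    return NormalDist(mu=0.0, sigma=math.sqrt(variance)).cdf(-abs(diff))


s2 = diff_variance(0.19, 0.16, 100)   # error rates 19% and 16% on N = 100 examples
p_slide = one_sided_p(0.03, 0.0029)   # same inputs as the R one-liner
p_two_sided = 2.0 * p_slide           # both tails, since T is an absolute difference

print(f"s_AB^2 = {s2:.7f}")
print(f"one-sided p = {p_slide:.7f}, two-sided p = {p_two_sided:.7f}")
```

Either way the p-value is far above 0.05, so the 3-point gap on 100 test examples is not statistically significant.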

Frequentist Statistics
What does $p = \Pr[T > \hat{T}]$ really mean?
Refers to the “frequency” behaviour if the test is applied over and over for different data sets.
Fundamentally different from (and more orthodox than) Bayesian statistics.

Frequentist Statistics
What does $p = \Pr[T > \hat{T}]$ really mean?
[Figure: histogram of T.hat (x-axis, 0.00 to 0.25) against Frequency (y-axis). Generated 1000 test sets for classifiers A and B, computed error under the null.]
Our example: $\hat{T} = 0.03$
p-value is shaded area

Errors in the hypothesis test
Type I error: False rejects
Type II error: False non-reject
Logic is to fix the Type I error, $\alpha = 0.05$
Design the test to minimise Type II error

Summary
• Call this test “difference in proportions test”
• An instance of a “z-test”
• This is OK, but there are tests that work better in practice...
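The histogram described on the Frequentist Statistics slide can be imitated with a small simulation, sketched below. Several choices are assumptions and not taken from the slides: the common null error rate 0.175 (the average of the two observed error rates), test sets of 100 examples, independent errors for the two classifiers, and counting outcomes at least as extreme as the observed gap (>= rather than >, because the counts are discrete).

```python
import random


def simulate_null_p(e_null, n, observed_count_gap, trials=1000, seed=0):
    """Estimate how often |errors_A - errors_B| >= observed_count_gap under the null.

    Both classifiers make independent errors at the same rate e_null on n examples.
    """
    rng = random.Random(seed)

    def error_count():
        # Number of mistakes on one simulated test set of n examples.
        return sum(1 for _ in range(n) if rng.random() < e_null)

    hits = 0
    for _ in range(trials):
        gap = abs(error_count() - error_count())
        if gap >= observed_count_gap:  # at least as extreme as what was observed
            hits += 1
    return hits / trials


# Observed gap: 19 versus 16 errors out of 100, i.e. a difference of 3 errors.
p_sim = simulate_null_p(e_null=0.175, n=100, observed_count_gap=3)
print(f"simulated p-value: {p_sim:.3f}")
```

Working with integer error counts avoids floating-point trouble when comparing a difference of proportions against 0.03. The simulated value is a two-sided estimate, so it is expected to be larger than the one-sided normal tail.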

McNemar’s Test

| | Classifier B correct | Classifier B wrong |
|---|---|---|
| Classifier A correct | $n_{11}$ | $n_{01}$ |
| Classifier A wrong | $n_{10}$ | $n_{00}$ |

$p_A$: probability A is correct GIVEN A and B disagree
Null hypothesis: $p_A = 0.5$
Test statistic:
$$\frac{(|n_{10} - n_{01}| - 1)^2}{n_{01} + n_{10}}$$
Distribution under null? $\chi^2$ (1 degree of freedom)
[Figure: chi-squared density `dchisq(x, df = 1)` plotted for x from 0 to 6]

Pros/Cons McNemar’s test
Pros
• Doesn’t require the independence assumptions of the difference-of-proportions test
• Works well in practice [Dietterich, 1997]
Cons
• Does not assess training set variability
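A minimal sketch of McNemar's test as defined above, using only the two disagreement counts. The function name and the example counts (10 and 20) are hypothetical. The p-value uses the identity that, for a chi-squared variable with 1 degree of freedom, the upper tail at x equals erfc(sqrt(x / 2)).

```python
import math


def mcnemar(n01, n10):
    """Return (statistic, p_value) for McNemar's test with continuity correction.

    n01 and n10 are the counts of test examples on which exactly one classifier is right.
    """
    disagreements = n01 + n10
    if disagreements == 0:
        raise ValueError("the classifiers never disagree; the statistic is undefined")
    statistic = (abs(n10 - n01) - 1) ** 2 / disagreements
    # Upper tail of chi-squared with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value


# Hypothetical counts, for illustration only.
stat, p = mcnemar(n01=10, n10=20)
print(f"statistic = {stat:.3f}, p = {p:.3f}")
```

Only the examples where the classifiers disagree enter the statistic, which is why the test does not need the independence assumption of the difference-of-proportions test.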

Accuracy is not the only measure
Accuracy is great, but not always helpful
e.g., Two class problem. 98% instances negative
Alternative: for every class C, define

Precision:
$$P = \frac{\#\text{ instances of C that classifier got right}}{\#\text{ instances that classifier predicted C}}$$
Recall:
$$R = \frac{\#\text{ instances of C that classifier got right}}{\#\text{ true instances of C}}$$
F-measure:
$$F_1 = \frac{2}{\frac{1}{P} + \frac{1}{R}} = \frac{2PR}{P + R}$$

Calibration
Sometimes we care about the confidence of a classification.
If the classifier outputs probabilities, can use cross-entropy:
$$H(p) = -\frac{1}{N} \sum_{i=1}^{N} \log p(y_i \mid x_i)$$
where
$(x_i, y_i)$: feature vector, true label for each instance $i$
$p(y_i \mid x_i)$: probabilities output by the classifier

An aside
• We’ve talked a lot about overfitting.
• What does this mean for well-known contest data sets? (Like the ones in your mini-project.)
• Think about the paper publishing process. I have an idea, implement it, try it on a standard train/test set, publish a paper if it works.
• Is there a problem with this?

2. ROC curves
(Receiver Operating Characteristic)
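Looking back at the precision, recall, F-measure and cross-entropy definitions from the previous part, the sketch below turns them into small functions. The function names are invented; returning 0.0 when a denominator is zero is a convention assumed here, and the cross-entropy is written with the conventional leading minus sign so that lower is better.

```python
import math


def precision_recall_f1(n_correct_c, n_predicted_c, n_true_c):
    """Precision, recall and F1 for one class C.

    n_correct_c: instances of C the classifier got right
    n_predicted_c: instances the classifier predicted as C
    n_true_c: true instances of C
    Zero denominators yield 0.0 (an assumed convention).
    """
    precision = n_correct_c / n_predicted_c if n_predicted_c else 0.0
    recall = n_correct_c / n_true_c if n_true_c else 0.0
    total = precision + recall
    f1 = 2.0 * precision * recall / total if total else 0.0
    return precision, recall, f1


def cross_entropy(true_label_probs):
    """Average negative log-probability the classifier gave to each true label."""
    return -sum(math.log(p) for p in true_label_probs) / len(true_label_probs)


# The slide's scenario: 98% of instances are negative. A classifier that always
# predicts "negative" on 100 instances is 98% accurate but finds no positives.
always_negative = precision_recall_f1(n_correct_c=0, n_predicted_c=0, n_true_c=2)
print(always_negative)
```

The always-negative classifier scores 0 on precision, recall and F1 for the positive class despite its 98% accuracy, which is the motivation for looking beyond accuracy.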

Problems in what we’ve done so far
• Skewed class distributions
• Differing costs

Classifiers as rankers
• Most classifiers output a real-valued score as well as a prediction
• e.g., decision trees: proportion of classes at leaf
• e.g., logistic regression: P(class | x)
• Instead of evaluating accuracy at a single threshold, evaluate how good the score is at ranking

More evaluation measures
Assume two classes. (Hard to do ROC with more.)

| | True + | True - |
|---|---|---|
| Predicted + | TP | FP |
| Predicted - | FN | TN |

TP: True positives
FP: False positives
TN: True negatives
FN: False negatives

$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN}$$
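To make the link between scores, thresholds and the confusion matrix concrete, here is a small sketch. The scores and labels are made up for illustration, labels are assumed to be 1 for the positive class and 0 for the negative class, and an instance is predicted positive when its score is at or above the threshold.

```python
def confusion_counts(scores, labels, threshold):
    """Return (TP, FP, FN, TN) when predicting + for scores >= threshold."""
    tp = fp = fn = tn = 0
    for score, label in zip(scores, labels):
        predicted_positive = score >= threshold
        if predicted_positive and label == 1:
            tp += 1
        elif predicted_positive and label == 0:
            fp += 1
        elif not predicted_positive and label == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def accuracy(tp, fp, fn, tn):
    """ACC = (TP + TN) / (TP + TN + FP + FN)."""
    return (tp + tn) / (tp + tn + fp + fn)


# Hypothetical classifier scores and true labels.
scores = [0.9, 0.8, 0.6, 0.4, 0.3, 0.1]
labels = [1, 1, 0, 1, 0, 0]

for threshold in (0.5, 0.35):
    counts = confusion_counts(scores, labels, threshold)
    print(threshold, counts, round(accuracy(*counts), 3))
```

Moving the threshold changes every cell of the confusion matrix while the underlying ranking of the scores stays the same, which is the reason for evaluating the ranking directly.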
