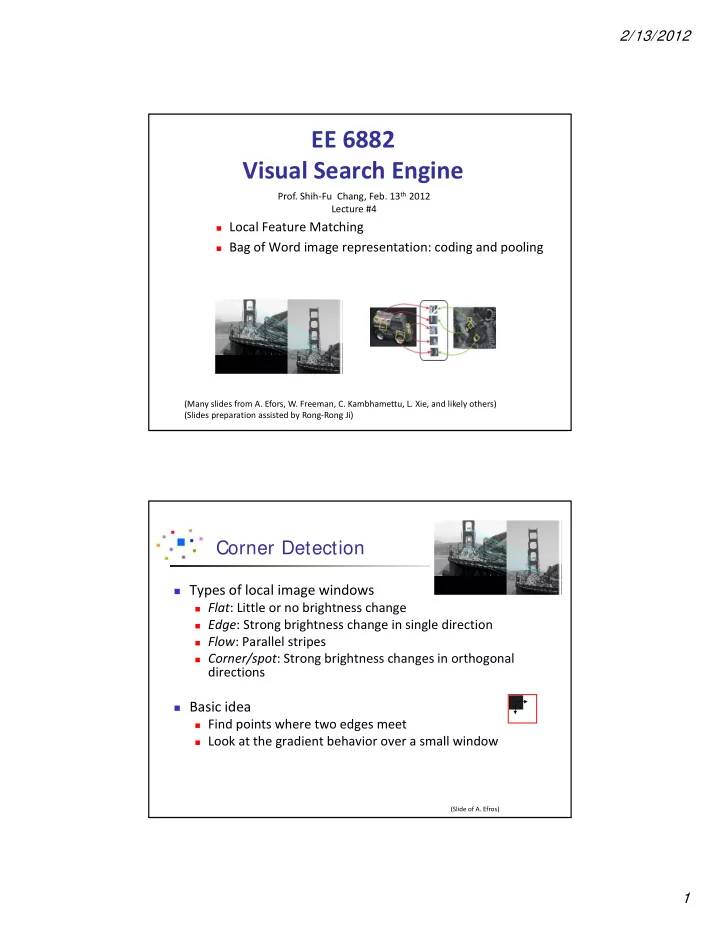

2/13/2012
EE 6882 Visual Search Engine
Prof. Shih-Fu Chang, Feb. 13th, 2012
Lecture #4: Local Feature Matching; Bag-of-Words Image Representation: Coding and Pooling
(Many slides from A. Efros, W. Freeman, C. Kambhamettu, L. Xie, and likely others)
(Slide preparation assisted by Rong-Rong Ji)

Corner Detection
Types of local image windows:
• Flat: little or no brightness change
• Edge: strong brightness change in a single direction
• Flow: parallel stripes
• Corner/spot: strong brightness changes in orthogonal directions
Basic idea:
• Find points where two edges meet.
• Look at the gradient behavior over a small window.
(Slide of A. Efros)

Harris Detector: Mathematics
Change of intensity for the shift [u, v]:

$$E(u,v) = \sum_{x,y} w(x,y)\,\big[I(x+u,\,y+v) - I(x,y)\big]^2$$

where w(x, y) is the window function, I(x+u, y+v) is the shifted intensity, and I(x, y) is the intensity. The window function is either 1 inside the window and 0 outside, or a Gaussian.

Taylor expansion: for small shifts [u, v] we have a bilinear approximation:

$$E(u,v) \approx \begin{bmatrix} u & v \end{bmatrix} M \begin{bmatrix} u \\ v \end{bmatrix}$$

where M is a 2×2 matrix computed from image derivatives:

$$M = \sum_{x,y} w(x,y) \begin{bmatrix} I_x^2 & I_x I_y \\ I_x I_y & I_y^2 \end{bmatrix}$$

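Harris Detector: Mathematics
Intensity change in a shifting window: eigenvalue analysis.

$$E(u,v) \approx \begin{bmatrix} u & v \end{bmatrix} M \begin{bmatrix} u \\ v \end{bmatrix}, \qquad \lambda_1 \ge \lambda_2 \text{ are the eigenvalues of } M$$

If we try every possible shift, the direction of fastest change is the eigenvector of λ1 (the larger eigenvalue). The level set E(u, v) = const is an ellipse with semi-axis (λ1)^(-1/2) along the direction of fastest change and (λ2)^(-1/2) along the direction of slowest change.
(Slide of A. Efros)

Measure of corner response:

$$R = \det M - k\,(\operatorname{trace} M)^2 \qquad \text{or} \qquad R = \frac{\det M}{\operatorname{trace} M}$$

$$\det M = \lambda_1 \lambda_2, \qquad \operatorname{trace} M = \lambda_1 + \lambda_2$$

(k is an empirical constant, k = 0.04-0.06.)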
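As a rough illustration of the corner response, the sketch below builds M from central-difference derivatives with a box window (w = 1 inside a 3×3 window, 0 outside) and returns R = det M - k (trace M)^2 at every pixel. The function name `harris_response`, the default k = 0.05 (inside the 0.04-0.06 range above), and the central-difference derivatives are assumptions made for this example; practical detectors usually smooth the image first and use a Gaussian window.

```python
def harris_response(img, k=0.05, radius=1):
    """Harris corner response R = det(M) - k * trace(M)^2 at every pixel.

    img: 2-D list of floats (rows of equal length).
    Window function: w(x, y) = 1 inside a (2*radius+1)^2 box, 0 outside.
    Pixels too close to the border keep R = 0.
    """
    h, w = len(img), len(img[0])
    # Central-difference image derivatives I_x, I_y (left at 0 on the border).
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0

    r = [[0.0] * w for _ in range(h)]
    for y in range(radius, h - radius):
        for x in range(radius, w - radius):
            # Accumulate M = sum_w [[Ix^2, Ix*Iy], [Ix*Iy, Iy^2]] over the window.
            a = b = c = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    a += gx * gx
                    b += gx * gy
                    c += gy * gy
            det = a * c - b * b   # = lambda1 * lambda2
            trace = a + c         # = lambda1 + lambda2
            r[y][x] = det - k * trace * trace
    return r
```

On a synthetic image containing a bright square, R comes out positive at the square's corner, negative on the straight part of its edges, and zero in flat regions, which matches the window types listed at the start of the lecture.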

Harris Detector
The algorithm:
• Find points with a large corner response function R (R > threshold).
• Take the points of local maxima of R.

Models of Image Change
Geometry:
• Rotation
• Similarity (rotation + uniform scale)
• Affine (scale dependent on direction); valid for an orthographic camera and a locally planar object
Photometry:
• Affine intensity change (I → aI + b)
(Slide of C. Kambhamettu)
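The two algorithm steps (thresholding R, then keeping local maxima) can be sketched as follows, assuming R is given as a 2-D list, for example the per-pixel Harris response. The helper name `harris_corners` and the strict 3×3 neighbourhood test are illustrative assumptions, not a prescribed implementation.

```python
def harris_corners(r, threshold):
    """Return (y, x) positions where R > threshold and R is a strict 3x3 local maximum."""
    h, w = len(r), len(r[0])
    corners = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            v = r[y][x]
            # Step 1: discard weak responses.
            if v <= threshold:
                continue
            # Step 2: keep only local maxima (non-maximum suppression).
            neighbours = [r[y + dy][x + dx]
                          for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                          if (dy, dx) != (0, 0)]
            if all(v > n for n in neighbours):
                corners.append((y, x))
    return corners
```

Because the comparison is strict, a plateau of equal responses yields no corner; implementations often use a larger suppression window or a tie-breaking rule instead.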

Harris Detector: Some Properties
But: not invariant to image scale!
(Figure: a structure detected as a corner in the small image; in the enlarged image, all points along it will be classified as edges.)
(Slide of C. Kambhamettu)

Scale Invariant Detection
Consider regions (e.g. circles) of different sizes around a point. Regions of corresponding sizes (at different scales) will look the same in both images.
(Figure labels: Fine/Low, Coarse/High.)
(Slide of C. Kambhamettu)

Scale Invariant Detection
The problem: how do we choose corresponding circles independently in each image?
(Slide of C. Kambhamettu)

Scale-Space Pyramid

Scale Space: Difference of Gaussian

$$G(x,y,\sigma) = \frac{1}{2\pi\sigma^2}\, e^{-\frac{x^2+y^2}{2\sigma^2}}$$

Scale Invariant Detection
Functions for determining scale: f = Kernel * Image (convolution).
Kernels:

$$\mathrm{DoG} = G(x,y,k\sigma) - G(x,y,\sigma) \quad \text{(Difference of Gaussians)}$$

$$L = \sigma^2 \big( G_{xx}(x,y,\sigma) + G_{yy}(x,y,\sigma) \big) \quad \text{(Laplacian)}$$

where G is the Gaussian defined above.
Note: both kernels are invariant to scale and rotation.
(Slide of C. Kambhamettu)
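A small sketch of the two kernels sampled on an integer grid, with σ and k chosen by the caller. It also makes it possible to check numerically the relation DoG ≈ (k - 1) σ² ∇²G, which follows from ∂G/∂σ = σ∇²G and is the reason the DoG serves as a cheap approximation of the scale-normalized Laplacian when k is close to 1. The function names are hypothetical.

```python
import math

def gaussian(x, y, sigma):
    """G(x, y, sigma) = exp(-(x^2 + y^2) / (2 sigma^2)) / (2 pi sigma^2)."""
    s2 = sigma * sigma
    return math.exp(-(x * x + y * y) / (2.0 * s2)) / (2.0 * math.pi * s2)

def dog_kernel(sigma, k, radius):
    """Sampled DoG = G(x, y, k*sigma) - G(x, y, sigma) on a (2*radius+1)^2 grid."""
    return [[gaussian(x, y, k * sigma) - gaussian(x, y, sigma)
             for x in range(-radius, radius + 1)]
            for y in range(-radius, radius + 1)]

def scale_normalized_laplacian(x, y, sigma):
    """sigma^2 * (G_xx + G_yy) = G * (x^2 + y^2 - 2 sigma^2) / sigma^2."""
    s2 = sigma * sigma
    return gaussian(x, y, sigma) * (x * x + y * y - 2.0 * s2) / s2
```

The sampled DoG kernel has a negative centre surrounded by a positive ring and sums to approximately zero, so it responds to blobs and not to uniform brightness.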

Gaussian Kernel, DoG
(Figure: Gaussian kernels with σ = 2 and σ = 4, and their difference (σ = 2 minus σ = 4), i.e. the difference of Gaussians, DoG.)

Key Point Localization
Detect maxima and minima of the difference-of-Gaussian in scale space.
(Figure: blur, subtract, and resample steps that build the DoG pyramid.)

Scale Invariant Interest Point Detectors
• Harris-Laplacian [1]: find a local maximum of the Harris corner detector in space (image coordinates) and of the Laplacian in scale.
• SIFT (Lowe) [2]: find a local maximum of the Difference of Gaussians in space and scale.
(Slide of C. Kambhamettu)
[1] K. Mikolajczyk, C. Schmid. "Indexing Based on Scale Invariant Interest Points". ICCV 2001.
[2] D. Lowe. "Distinctive Image Features from Scale-Invariant Keypoints". IJCV 2004.
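Detecting maxima and minima "in space and scale" means comparing each DoG sample with its 26 neighbours: 8 in the same image and 9 in each adjacent scale. A minimal sketch, assuming one octave of equally sized DoG images stored as `dog[s][y][x]`; sub-pixel refinement and the later contrast and edge tests are omitted, and the function name is hypothetical.

```python
def dog_extrema(dog):
    """Find samples strictly larger (or smaller) than all 26 neighbours.

    dog: list of DoG images dog[s][y][x] at successive scales (same size).
    Returns (s, y, x) triples; the first/last scale and image borders are skipped.
    """
    found = []
    for s in range(1, len(dog) - 1):
        h, w = len(dog[s]), len(dog[s][0])
        for y in range(1, h - 1):
            for x in range(1, w - 1):
                v = dog[s][y][x]
                # 3x3x3 neighbourhood across space and the two adjacent scales.
                neighbours = [dog[s + ds][y + dy][x + dx]
                              for ds in (-1, 0, 1)
                              for dy in (-1, 0, 1)
                              for dx in (-1, 0, 1)
                              if (ds, dy, dx) != (0, 0, 0)]
                if v > max(neighbours) or v < min(neighbours):
                    found.append((s, y, x))
    return found
```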

Scale Invariant Detectors
Experimental evaluation of detectors w.r.t. scale change:

$$\text{Repeatability rate} = \frac{\#\,\text{correct correspondences}}{\text{avg}\ \#\,\text{detected points}}$$

K. Mikolajczyk, C. Schmid. "Indexing Based on Scale Invariant Interest Points". ICCV 2001.

SIFT Keypoints
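As a worked example of the repeatability formula with made-up counts: if 40 and 50 points are detected in the two images and 30 correct correspondences are found, the rate is 30 / ((40 + 50) / 2) = 30 / 45 ≈ 0.67. A minimal helper (hypothetical name) that computes it:

```python
def repeatability(num_correct, num_detected_a, num_detected_b):
    """Repeatability = # correct correspondences / average # detected points."""
    avg_detected = (num_detected_a + num_detected_b) / 2.0
    # Guard against images with no detections at all.
    return num_correct / avg_detected if avg_detected else 0.0
```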

(Figure panels: keypoints after extrema detection; keypoints remaining after the curvature test on edge responses.)

Keypoint Orientation and Scale
SIFT Invariant Descriptors
• Extract image patches relative to the local orientation (the dominant direction of the gradient).
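A simplified sketch of assigning the dominant gradient direction: gradient magnitudes are accumulated into an orientation histogram and the centre of the peak bin is returned. The 36-bin resolution follows Lowe's SIFT paper; dropping the Gaussian weighting, the peak interpolation, and the extra keypoints for secondary peaks is a simplification, and the function name is hypothetical.

```python
import math

def dominant_orientation(magnitudes, angles, num_bins=36):
    """Peak of a magnitude-weighted orientation histogram.

    magnitudes, angles: flat lists for the pixels of a patch; angles in radians.
    Returns the centre of the winning bin in radians, in [0, 2*pi).
    """
    hist = [0.0] * num_bins
    bin_width = 2.0 * math.pi / num_bins
    for m, a in zip(magnitudes, angles):
        # Wrap the angle into [0, 2*pi); the final % guards against rounding to num_bins.
        b = int((a % (2.0 * math.pi)) / bin_width) % num_bins
        hist[b] += m
    best = max(range(num_bins), key=lambda i: hist[i])
    return (best + 0.5) * bin_width
```

The patch would then be rotated by this angle before computing the descriptor, which is what makes the descriptor rotation invariant.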

Local Appearance Descriptor (SIFT) [Lowe, ICCV 1999]
• Compute the gradient in a local patch.
• Build histograms of oriented gradients over local grids, e.g., 4x4 grids and 8 directions → 4x4x8 = 128 dimensions.
• Scale invariant.
(S.-F. Chang, Columbia U.)

Point Descriptors
We know how to detect points. Next question: how do we match them?
A point descriptor should be:
• Invariant
• Distinctive
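The 4x4x8 = 128 layout can be sketched as follows, assuming the patch gradients `gx`, `gy` are already computed on a square patch (aligned to the dominant orientation) whose side is divisible by 4. This is a simplified, hypothetical version: it uses hard binning without the Gaussian weighting and trilinear interpolation of full SIFT; the final normalize, clip at 0.2, renormalize step follows Lowe (2004).

```python
import math

def sift_like_descriptor(gx, gy, grid=4, num_bins=8):
    """128-D (4x4 cells x 8 orientations) histogram of oriented gradients.

    gx, gy: square 2-D lists of patch gradients; side divisible by grid.
    """
    size = len(gx)
    cell = size // grid
    desc = [0.0] * (grid * grid * num_bins)
    for y in range(size):
        for x in range(size):
            mag = math.hypot(gx[y][x], gy[y][x])
            ang = math.atan2(gy[y][x], gx[y][x]) % (2.0 * math.pi)
            b = int(ang / (2.0 * math.pi) * num_bins) % num_bins
            cy, cx = y // cell, x // cell
            # Each pixel votes its gradient magnitude into its cell's orientation bin.
            desc[(cy * grid + cx) * num_bins + b] += mag
    # Normalize to unit length, clip large entries at 0.2, renormalize.
    norm = math.sqrt(sum(v * v for v in desc)) or 1.0
    desc = [min(v / norm, 0.2) for v in desc]
    norm = math.sqrt(sum(v * v for v in desc)) or 1.0
    return [v / norm for v in desc]
```

The clipping step limits the influence of a few very strong gradients, for example from non-linear illumination changes.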

Feature Matching
(Slide of A. Efros)

Feature-space outlier rejection [Lowe, 1999]:
• 1-NN: SSD of the closest match
• 2-NN: SSD of the second-closest match
• Look at how much better the best match (1-NN) is than the second-best match (2-NN), e.g. the ratio 1-NN/2-NN.
(Slide of A. Efros)
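The 1-NN/2-NN ratio test can be sketched as below. Lowe's 2004 paper rejects matches whose Euclidean distance ratio exceeds 0.8; since the slide uses SSD (squared distance), the sketch assumes a default of 0.64 (0.8 squared). Function names and the brute-force search are illustrative; large databases would use an approximate nearest-neighbour index.

```python
def ssd(a, b):
    """Sum of squared differences between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def ratio_test_matches(desc_a, desc_b, max_ratio=0.64):
    """Keep match i -> j only if SSD(1-NN) / SSD(2-NN) < max_ratio."""
    matches = []
    for i, d in enumerate(desc_a):
        dists = sorted((ssd(d, e), j) for j, e in enumerate(desc_b))
        if len(dists) < 2:
            continue
        (d1, j1), (d2, _) = dists[0], dists[1]
        # An ambiguous match (1-NN about as close as 2-NN) is rejected.
        if d2 > 0 and d1 / d2 < max_ratio:
            matches.append((i, j1))
    return matches
```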

Feature-space Outlier Rejection
Can we now compute H from the blue points?
• No! Still too many outliers…
• What can we do?
(Slide of A. Efros)

RANSAC for Estimating a Homography
RANSAC loop:
1. Select four feature pairs (at random).
2. Compute the homography H (exact).
3. Compute inliers where SSD(p_i', H p_i) < ε.
4. Keep the largest set of inliers.
5. Re-compute the least-squares H estimate on all of the inliers.
(Slide of A. Efros)
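Step 2 (computing H exactly from four pairs) can be sketched by fixing h33 = 1 and solving the resulting 8×8 linear system: each pair (x, y) → (u, v) contributes two equations. This normalization assumes the true h33 is non-zero, and plain Gaussian elimination is used only to keep the sketch dependency-free; libraries typically solve a normalized DLT with an SVD instead. All names are hypothetical.

```python
def solve(a, b):
    """Solve a @ z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("degenerate configuration (e.g. three collinear points)")
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (m[r][n] - sum(m[r][c] * z[c] for c in range(r + 1, n))) / m[r][r]
    return z

def homography_from_4(src, dst):
    """Exact H (with h33 = 1) mapping four src points onto four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h31 x + h32 y + 1) = h11 x + h12 y + h13, and likewise for v.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y]); b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y]); b.append(v)
    h = solve(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]

def apply_h(H, p):
    """Map point p through H in homogeneous coordinates."""
    x, y = p
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)
```

`apply_h` is also what the inlier test in step 3 needs: a pair counts as an inlier when the SSD between p_i' and `apply_h(H, p_i)` is below ε.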

Least-Squares Fit
Find the "average" translation vector.
(Slide of A. Efros)

RANSAC
(Slide of A. Efros)
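For a pure translation, the least-squares estimate is simply the mean displacement, and a single pair is enough to hypothesize a model. The sketch below therefore swaps the homography for a translation model (matching the translation example on this slide) to keep RANSAC short. `eps` is a threshold on the squared distance, mirroring SSD < ε above; the names and default values are assumptions.

```python
import random

def fit_translation(pairs):
    """Least-squares translation = mean displacement over (p, q) pairs."""
    n = len(pairs)
    tx = sum(q[0] - p[0] for p, q in pairs) / n
    ty = sum(q[1] - p[1] for p, q in pairs) / n
    return tx, ty

def ransac_translation(pairs, eps=1.0, iters=100, seed=0):
    """RANSAC: hypothesize from one random pair, keep the largest inlier set,
    then refit by least squares on those inliers."""
    rng = random.Random(seed)
    best = []
    for _ in range(iters):
        p, q = rng.choice(pairs)            # one pair fixes a translation exactly
        t = (q[0] - p[0], q[1] - p[1])
        inliers = [(a, b) for a, b in pairs
                   if (a[0] + t[0] - b[0]) ** 2 + (a[1] + t[1] - b[1]) ** 2 < eps]
        if len(inliers) > len(best):
            best = inliers
    return fit_translation(best), best
```

Fitting the plain least-squares average to all pairs would be pulled away by the outliers; refitting only on the RANSAC inliers avoids that.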

From Local Features to Visual Words
(Figure: features in the 128-D feature space are clustered into a visual word vocabulary.)

K-Means Clustering
(Figure: training data in the (x(1), x(2)) plane grouped into clusters C1, C2, C3, …, CK; to which cluster does a sample x_i belong?)
Unsupervised learning:
• Fix the K value.
• Initialize the representative of each cluster.
• Map samples to the closest cluster.
• Re-compute the centers.

Assignment step, for samples x_1, x_2, …, x_N and i = 1, 2, …, N:

$$x_i \in C_k \quad \text{if} \quad \mathrm{Dist}(x_i, C_k) < \mathrm{Dist}(x_i, C_{k'}) \quad \forall\, k' \neq k$$

K-means can also be used to initialize other clustering methods.
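The loop above (map samples to the closest center, then re-compute centers) is Lloyd's algorithm. A minimal sketch, assuming the caller supplies the K initial centers and squared Euclidean distance is used for Dist; the function names are hypothetical.

```python
def sq_dist(a, b):
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(samples, centers, iters=20):
    """Plain k-means starting from the given initial centers.
    Returns (centers, labels)."""
    centers = [list(c) for c in centers]
    labels = []
    for _ in range(iters):
        # Map samples to the closest cluster.
        labels = [min(range(len(centers)), key=lambda k: sq_dist(x, centers[k]))
                  for x in samples]
        # Re-compute the centers as cluster means (keep the old center if empty).
        new_centers = []
        for k, c in enumerate(centers):
            members = [x for x, lab in zip(samples, labels) if lab == k]
            if members:
                new_centers.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centers.append(c)
        if new_centers == centers:   # converged: assignments no longer move centers
            break
        centers = new_centers
    return centers, labels
```

For a visual vocabulary, `samples` would be the 128-D descriptors and the final centers the visual words.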

Visual Words: Image Patch Patterns
(Figure: example visual words resembling corners, blobs, eyes, and letters.)
Sivic and Zisserman, "Video Google", 2006

Represent Image as Bag of Words
(Figure: keypoint features → clustering → visual words → BoW histogram.)
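Turning an image's keypoint descriptors into a BoW histogram amounts to hard-assigning each descriptor to its nearest visual word and counting. A minimal sketch with a hypothetical function name; normalizing by the number of descriptors is an optional choice that makes images with different keypoint counts comparable.

```python
def bow_histogram(descriptors, vocabulary, normalize=True):
    """Hard-assign each descriptor to its nearest visual word and count."""
    hist = [0.0] * len(vocabulary)
    for d in descriptors:
        k = min(range(len(vocabulary)),
                key=lambda j: sum((a - b) ** 2 for a, b in zip(d, vocabulary[j])))
        hist[k] += 1.0
    if normalize and descriptors:
        hist = [h / len(descriptors) for h in hist]
    return hist
```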

Pooling Binary Features
Boureau, Jean Ponce, Yann LeCun, "A Theoretical Analysis of Feature Pooling in Visual Recognition", ICML 2010.
• Consider a P×K matrix of codes (v_1, …, v_P), where P is the number of features and K is the number of codewords.
• To begin with a simple model, assume the v_i are i.i.d.

Distribution Separability
Better separability is achieved by:
1. increasing the distance between the means of the two class-conditional distributions;
2. reducing their standard deviations.

Distribution Separability
(Slide: class separability under average pooling and under max pooling.)
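Under the i.i.d. assumption above, with each binary feature v_i ~ Bernoulli(α), both pooled statistics have closed forms: average pooling f_a = (1/P) Σ v_i has mean α and variance α(1 - α)/P, while max pooling f_m = max_i v_i is itself Bernoulli with mean 1 - (1 - α)^P and variance (1 - (1 - α)^P)(1 - α)^P. The sketch computes these for one codeword. The `separability` score (distance between means divided by the summed standard deviations) is an illustrative way to combine the two criteria listed above, not necessarily the exact measure used by Boureau et al.

```python
def average_pooling_stats(alpha, P):
    """Mean and variance of f_a = (1/P) * sum_i v_i, v_i iid Bernoulli(alpha)."""
    return alpha, alpha * (1.0 - alpha) / P

def max_pooling_stats(alpha, P):
    """Mean and variance of f_m = max_i v_i, which is Bernoulli(1 - (1 - alpha)^P)."""
    p = 1.0 - (1.0 - alpha) ** P
    return p, p * (1.0 - p)

def separability(stats_pos, stats_neg):
    """Distance between class-conditional means over the sum of std deviations:
    larger when the means are far apart and the spreads are small."""
    (m1, v1), (m2, v2) = stats_pos, stats_neg
    return abs(m1 - m2) / (v1 ** 0.5 + v2 ** 0.5)
```

Comparing, for two classes with different α, `separability(average_pooling_stats(a1, P), average_pooling_stats(a2, P))` against the max-pooling counterpart shows how the answer depends on α and P.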

The analysis above assumes binary features. For continuous features, the modeling is more complex and the conclusions are slightly different.

Soft Coding
• Assign a feature to multiple visual words.
• The weights are determined by feature-to-word similarity.
Details in: Jiang, Ngo and Yang, ACM CIVR 2007.
Image source: http://www.cs.joensuu.fi/pages/franti/vq/lkm15.gif
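Jiang, Ngo and Yang define their own weighting scheme; the sketch below instead uses a generic Gaussian-kernel weighting over the top-n nearest words, so treat both the weighting and the defaults (`top_n`, `sigma`) as assumptions. Each descriptor contributes a total weight of 1, spread over several words in proportion to its similarity to them.

```python
import math

def soft_assign(descriptor, vocabulary, top_n=3, sigma=1.0):
    """Spread one descriptor over its top_n nearest words with weights
    proportional to exp(-d^2 / (2 sigma^2)); the weights sum to 1."""
    d2 = [sum((a - b) ** 2 for a, b in zip(descriptor, w)) for w in vocabulary]
    nearest = sorted(range(len(vocabulary)), key=lambda j: d2[j])[:top_n]
    d_min = d2[nearest[0]]
    # Subtracting d_min avoids underflow and cancels out after normalizing.
    sims = {j: math.exp(-(d2[j] - d_min) / (2.0 * sigma ** 2)) for j in nearest}
    total = sum(sims.values())
    code = [0.0] * len(vocabulary)
    for j, s in sims.items():
        code[j] = s / total
    return code

def soft_bow_histogram(descriptors, vocabulary, **kw):
    """Sum of soft codes over all descriptors of an image."""
    hist = [0.0] * len(vocabulary)
    for d in descriptors:
        for j, c in enumerate(soft_assign(d, vocabulary, **kw)):
            hist[j] += c
    return hist
```

With `top_n=1` this reduces to the hard assignment used for the plain BoW histogram.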

Multi-BoW Spatial Pyramid Kernel
S. Lazebnik, et al., CVPR 2006

Classifiers
• K-Nearest Neighbors + Voting
• Linear Discriminative Model (SVM)
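A sketch of the spatial pyramid idea: BoW histograms are computed over a 2^l × 2^l grid at each level l = 0..L, and the kernel sums histogram intersections with the weights from Lazebnik et al., 1/2^L for level 0 and 1/2^(L-l+1) for level l ≥ 1, so that matches found in finer cells count more. Keypoint coordinates are assumed to be normalized to [0, 1), and the function names are hypothetical.

```python
def pyramid_histograms(points, num_words, levels=2):
    """points: (x, y, word) with x, y in [0, 1). Level l splits the image into
    2^l x 2^l cells; returns one concatenated histogram per level."""
    hists = []
    for l in range(levels + 1):
        cells = 2 ** l
        h = [0.0] * (cells * cells * num_words)
        for x, y, word in points:
            cx = min(int(x * cells), cells - 1)
            cy = min(int(y * cells), cells - 1)
            h[(cy * cells + cx) * num_words + word] += 1.0
        hists.append(h)
    return hists

def intersection(h1, h2):
    """Histogram intersection: sum of bin-wise minima."""
    return sum(min(a, b) for a, b in zip(h1, h2))

def spatial_pyramid_kernel(pts1, pts2, num_words, levels=2):
    """K = I_0 / 2^L + sum_{l=1..L} I_l / 2^(L - l + 1)."""
    h1 = pyramid_histograms(pts1, num_words, levels)
    h2 = pyramid_histograms(pts2, num_words, levels)
    k = intersection(h1[0], h2[0]) / 2 ** levels
    for l in range(1, levels + 1):
        k += intersection(h1[l], h2[l]) / 2 ** (levels - l + 1)
    return k
```

Since the level weights sum to 1, an image compared with itself scores its number of keypoints, while the same visual word in a different location only earns the coarse-level credit.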

Machine Learning: Build Classifier
Find the separating hyperplane w^T x + b = 0 that maximizes the margin (figure example: Airplane).
Decision function: f(x) = sign(w^T x + b), with

$$w^T x_i + b > 0 \text{ if label } y_i = +1, \qquad w^T x_i + b < 0 \text{ if label } y_i = -1$$

Support Vector Machine (tutorial by Burges '98)
Look for the separating plane with the highest margin.
Decision boundary H_0: w^T x + b = 0.
Linearly separable case:

$$w^T x_i + b \ge +1 \text{ if } y_i = +1, \qquad w^T x_i + b \le -1 \text{ if } y_i = -1$$

i.e.

$$y_i\,(w^T x_i + b) \ge 1 \quad \text{for all } x_i$$

Two parallel hyperplanes define the margin:

$$H_1: w^T x + b = +1, \qquad H_2: w^T x + b = -1$$

Margin: the sum of the distances of the closest points to the separating plane,

$$\text{margin} = \frac{2}{\lVert w \rVert}$$

The best plane is defined by w and b.
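A small numeric check of these relations, assuming w and b are already known (training, i.e. solving the max-margin optimization, is not shown). Each of H_1 and H_2 lies at distance 1/‖w‖ from the decision boundary, which gives the total margin 2/‖w‖. The helper names are hypothetical, and treating sign(0) as -1 is an arbitrary tie-break.

```python
import math

def decision(w, b, x):
    """f(x) = sign(w^T x + b), with sign(0) mapped to -1."""
    s = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if s > 0 else -1

def margin(w):
    """Distance between H1: w^T x + b = +1 and H2: w^T x + b = -1, i.e. 2 / ||w||."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))

def satisfies_margin_constraints(w, b, xs, ys):
    """Check y_i (w^T x_i + b) >= 1 for every training sample."""
    return all(y * (sum(wi * xi for wi, xi in zip(w, x)) + b) >= 1
               for x, y in zip(xs, ys))
```

For example, with w = (1, 1) and b = -3, the point (2, 2) lies exactly on H_1, and the margin is 2/√2 = √2.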

