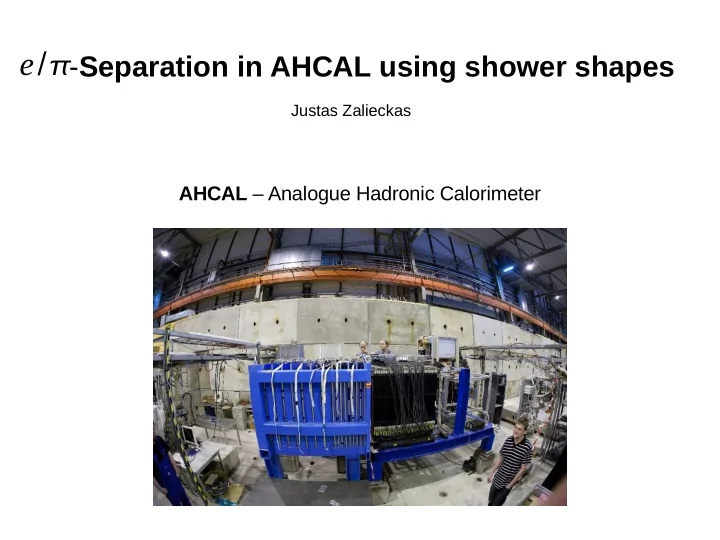

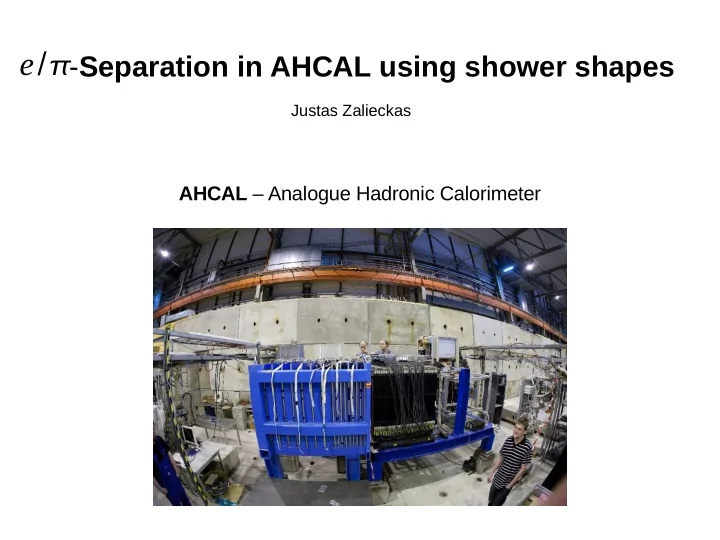

e/π Separation in AHCAL Using Shower Shapes
Justas Zalieckas
AHCAL: Analogue Hadronic Calorimeter

Outline
● Analogue Hadronic Calorimeter (AHCAL)
● The e/π separation problem
● Shower shape variables for e/π separation
● Boosted Decision Trees (BDT) and Multivariate Data Analysis (MDA)
● Results
● Conclusions

Analogue Hadronic Calorimeter (AHCAL)
● Sampling calorimeter
● 30 layers of sandwich structure
● One layer: 10 mm W + 5 mm scintillator tiles
● High scintillator granularity: 3×3 cm², 6×6 cm², 12×12 cm² tiles
● Wavelength-shifting fibers are read out with Silicon Photomultipliers (SiPM)
● Cherenkov counters in front of the AHCAL
The coordinates of the incident point of the particles on the calorimeter surface, $x_{trk}$ and $y_{trk}$, are also determined.

The e/π separation problem
e/π separation based on Cherenkov counters:
- the efficiency for electron identification becomes low at low pressure
- the purity for electron identification drops with increasing pion content of the beam
--> e and π are difficult to separate in the low-energy range (E < 10 GeV).
Proposed solution:
- use shower shape information from the calorimeter to distinguish between electromagnetic and hadronic showers
- combine this information using a multivariate data analysis technique
My tasks:
1. Write a Marlin processor to create ROOT files with trees containing the separation variables.
2. Use the TMVA 4 (Toolkit for Multivariate Data Analysis with ROOT) package with the created ROOT files for e/π separation.

The e/π separation problem
● Scatter plot of two shower shape variables:
- energy-weighted radial distance $d = \frac{\sum_i E_i \sqrt{(x_i - x_{trk})^2 + (y_i - y_{trk})^2}}{\sum_i E_i}$
- $E_5/E_{tot}$
- $E_i$ = energy of cell $i$; $E_5$ = energy sum in the first 5 AHCAL layers; $E_{tot}$ = total energy sum; $x_i$, $y_i$ = cell center coordinates
● 5 GeV samples
● The electron and pion regions overlap
● For better electron separation, use more shower shape variables
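The two variables of the scatter plot can be computed per event from the list of calorimeter hits. The following Python sketch assumes a hypothetical `Hit` record holding the cell center coordinates in mm, a layer number counted from 1 at the calorimeter front, and the cell energy in MIPs; it is an illustration, not the Marlin processor used in the analysis.

```python
import math
from dataclasses import dataclass


@dataclass
class Hit:
    """Hypothetical hit record; not a Marlin/LCIO class."""
    x: float       # cell center x_i [mm]
    y: float       # cell center y_i [mm]
    layer: int     # AHCAL layer number, 1 = front layer (assumed convention)
    energy: float  # cell energy E_i [MIPs]


def radial_distance(hit: Hit, x_trk: float, y_trk: float) -> float:
    """Transverse distance of the cell center from the track impact point."""
    return math.hypot(hit.x - x_trk, hit.y - y_trk)


def energy_weighted_radius(hits: list[Hit], x_trk: float, y_trk: float) -> float:
    """d = sum_i E_i * r_i / sum_i E_i."""
    e_sum = sum(h.energy for h in hits)
    return sum(h.energy * radial_distance(h, x_trk, y_trk) for h in hits) / e_sum


def front_energy_fraction(hits: list[Hit], n_front: int = 5) -> float:
    """E_5 / E_tot: share of the total energy deposited in the first n_front layers."""
    e_tot = sum(h.energy for h in hits)
    e_front = sum(h.energy for h in hits if h.layer <= n_front)
    return e_front / e_tot


if __name__ == "__main__":
    hits = [Hit(0.0, 0.0, 1, 10.0), Hit(30.0, 0.0, 3, 5.0), Hit(0.0, 60.0, 8, 5.0)]
    print(energy_weighted_radius(hits, 0.0, 0.0))  # 22.5
    print(front_energy_fraction(hits))             # 0.75
```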

Shower shape variables for e/π separation
- energy-weighted radial distance $d_1 = \frac{\sum_i E_i \sqrt{(x_i - x_{trk})^2 + (y_i - y_{trk})^2}}{\sum_i E_i}$
- $E_5/E_{tot}$ = fraction of $E_{tot}$ contained in the first 5 layers
- third moment of the radial distance $d_3 = \sqrt[3]{\frac{\sum_i E_i \left(\sqrt{(x_i - x_{trk})^2 + (y_i - y_{trk})^2}\right)^3}{\sum_i E_i}}$
- energy density $\frac{1}{N}\sum_i \frac{E_i}{V_i}$, where $V_i$ = cell volume and $N$ = number of cells
(Plots: $d_1$ [mm], $E_5/E_{tot}$, $\log d_3$ [mm], energy density [MIPs/cm³].)
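The radial variables share one pattern, an energy-weighted power mean of the hit distances $r_i$ from the track impact point: $d_n = \left(\sum_i E_i r_i^n / \sum_i E_i\right)^{1/n}$, with $n = 1$ for $d_1$ and $n = 3$ for $d_3$ (and $n = 2$ for $d_2$, defined on the next slide). A minimal Python sketch, assuming hits given as hypothetical `(x_mm, y_mm, energy_mips, volume_cm3)` tuples; the 4.5 cm³ cell volume in the example (3×3 cm² tile, 5 mm thick) is an assumption, not a value from the analysis.

```python
import math

# Hypothetical hit format: (x [mm], y [mm], energy [MIPs], cell volume [cm^3])
Hit = tuple[float, float, float, float]


def radial_moment(hits: list[Hit], x_trk: float, y_trk: float, n: int) -> float:
    """d_n = (sum_i E_i r_i^n / sum_i E_i)^(1/n); n=1 gives d_1, n=3 gives d_3."""
    e_sum = sum(e for _, _, e, _ in hits)
    weighted = sum(e * math.hypot(x - x_trk, y - y_trk) ** n for x, y, e, _ in hits)
    return (weighted / e_sum) ** (1.0 / n)


def energy_density(hits: list[Hit]) -> float:
    """(1/N) sum_i E_i / V_i in MIPs/cm^3, with N the number of hit cells."""
    return sum(e / v for _, _, e, v in hits) / len(hits)


if __name__ == "__main__":
    # Assumed cell volume: 3x3 cm^2 tile, 0.5 cm thick -> 4.5 cm^3
    hits = [(30.0, 0.0, 4.0, 4.5), (0.0, 40.0, 4.0, 4.5)]
    print(radial_moment(hits, 0.0, 0.0, 1))  # 35.0
    print(radial_moment(hits, 0.0, 0.0, 3))  # about 35.7
    print(energy_density(hits))              # about 0.889
```

Because $d_n$ is a power mean, $d_1 \le d_2 \le d_3$ for any event; the higher moments weight the far shower tails more strongly.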

Shower shape variables for e/π separation
- second moment of the radial distance $d_2 = \sqrt{\frac{\sum_i E_i \left((x_i - x_{trk})^2 + (y_i - y_{trk})^2\right)}{\sum_i E_i}}$
- $R_{90}$ = radial distance containing 90% of $E_{tot}$
- $N_{90}$ = number of hits containing 90% of $E_{tot}$; $N$ = total number of hits
- $N_{90}/N$ = fraction of cells containing 90% of $E_{tot}$
- cells average energy $\frac{1}{N}\sum_i E_i$
(Plots: $d_2$ [mm], $R_{90}$ [mm], cells average energy [MIPs], $N_{90}/N$.)
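The slide does not spell out how $R_{90}$ and $N_{90}$ are computed, so the following Python sketch makes two explicit assumptions: $R_{90}$ is the smallest hit distance from the track axis at which the energy summed outward reaches 90% of $E_{tot}$, and $N_{90}$ is the smallest number of hits, taken in order of decreasing energy, whose energies add up to 90% of $E_{tot}$. Hits are hypothetical `(x_mm, y_mm, energy_mips)` tuples.

```python
import math

Hit = tuple[float, float, float]  # hypothetical: (x [mm], y [mm], energy [MIPs])


def r90(hits: list[Hit], x_trk: float, y_trk: float, fraction: float = 0.9) -> float:
    """Radius around the track axis containing `fraction` of E_tot (assumed definition)."""
    e_tot = sum(e for _, _, e in hits)
    by_radius = sorted((math.hypot(x - x_trk, y - y_trk), e) for x, y, e in hits)
    cumulative = 0.0
    for r, e in by_radius:
        cumulative += e
        if cumulative >= fraction * e_tot:
            return r
    return by_radius[-1][0]  # fallback for rounding: all energy lies inside the last hit


def n90(hits: list[Hit], fraction: float = 0.9) -> int:
    """Fewest hits (highest energy first) containing `fraction` of E_tot (assumed definition)."""
    energies = sorted((e for _, _, e in hits), reverse=True)
    e_tot = sum(energies)
    cumulative = 0.0
    for count, e in enumerate(energies, start=1):
        cumulative += e
        if cumulative >= fraction * e_tot:
            return count
    return len(energies)


def cells_average_energy(hits: list[Hit]) -> float:
    """(1/N) sum_i E_i with N the total number of hits."""
    return sum(e for _, _, e in hits) / len(hits)


if __name__ == "__main__":
    hits = [(0.0, 0.0, 50.0), (10.0, 0.0, 30.0), (0.0, 20.0, 15.0), (40.0, 0.0, 5.0)]
    print(r90(hits, 0.0, 0.0))               # 20.0
    print(n90(hits), n90(hits) / len(hits))  # 3 0.75
    print(cells_average_energy(hits))        # 25.0
```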

Shower shape variables for e/π separation
- $L_{max}$ = number of the layer with maximum energy loss
- $L_{start}$ = shower start layer number
- $L_{max} - L_{start}$ = number of layers needed to reach the shower maximum
(Plots: shower start layer number $L_{start}$, maximum energy loss layer number $L_{max}$, $L_{max} - L_{start}$.)
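$L_{max}$ follows directly from the per-layer energy sums. The slide does not state the criterion used to find the shower start, so the Python sketch below uses a hypothetical one: the first layer such that both it and the following layer have energy sums above a threshold. The `threshold` value is illustrative and not taken from the analysis; hits are hypothetical `(layer, energy_mips)` pairs.

```python
from collections import defaultdict
from typing import Optional

Hit = tuple[int, float]  # hypothetical: (layer number, energy [MIPs])


def layer_energies(hits: list[Hit]) -> dict[int, float]:
    """Energy sum per layer."""
    sums: dict[int, float] = defaultdict(float)
    for layer, energy in hits:
        sums[layer] += energy
    return dict(sums)


def max_energy_layer(hits: list[Hit]) -> int:
    """L_max: layer with the largest energy sum."""
    sums = layer_energies(hits)
    return max(sums, key=sums.__getitem__)


def shower_start_layer(hits: list[Hit], threshold: float = 10.0) -> Optional[int]:
    """L_start under a hypothetical rule: first layer where this layer and the next
    both exceed `threshold`. Returns None if no such layer exists."""
    sums = layer_energies(hits)
    for layer in sorted(sums):
        if sums[layer] > threshold and sums.get(layer + 1, 0.0) > threshold:
            return layer
    return None


if __name__ == "__main__":
    # A MIP-like track in layers 1-2, then a shower developing from layer 3
    hits = [(1, 1.0), (1, 1.0), (2, 1.5), (3, 12.0), (4, 30.0), (5, 25.0), (5, 20.0), (6, 20.0)]
    l_max, l_start = max_energy_layer(hits), shower_start_layer(hits)
    print(l_start, l_max, l_max - l_start)  # 3 5 2
```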

Decision tree for event classification
● Root node: the event sample
● Classification/separation variables are used for the split decisions
● Repeated yes/no split decisions divide the phase space into many regions
● Events end in a final leaf node
● Purity of a leaf: $p = \frac{\sum_S W_S}{\sum_S W_S + \sum_B W_B}$, where $W_S$, $W_B$ are the signal and background weights
● If $p > 0.5$: signal; if $p < 0.5$: background.
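The purity rule, together with a single split decision, can be sketched in Python. Events are hypothetical `(value, is_signal, weight)` tuples for one input variable. The split criterion used here, minimizing the weight-summed Gini index $p(1-p)$ of the two daughter nodes, is one common choice (TMVA offers the Gini index among its separation criteria); it is an assumption, not necessarily the setting used in this analysis.

```python
Event = tuple[float, bool, float]  # hypothetical: (variable value, is_signal, weight)


def purity(events: list[Event]) -> float:
    """p = sum W_S / (sum W_S + sum W_B)."""
    w_s = sum(w for _, is_sig, w in events if is_sig)
    w_b = sum(w for _, is_sig, w in events if not is_sig)
    return w_s / (w_s + w_b)


def leaf_label(events: list[Event]) -> str:
    """Signal if p > 0.5; p == 0.5 is treated as background here (the slide leaves it open)."""
    return "signal" if purity(events) > 0.5 else "background"


def gini(events: list[Event]) -> float:
    """Gini index p(1 - p) of a node; 0 for an empty node."""
    if not events:
        return 0.0
    p = purity(events)
    return p * (1.0 - p)


def best_split(events: list[Event]):
    """Scan cuts between neighbouring values and keep the one with the lowest
    weight-summed Gini index of the daughters. Returns (cut, left, right) or None."""
    values = sorted({v for v, _, _ in events})
    best = None
    for lo, hi in zip(values, values[1:]):
        cut = 0.5 * (lo + hi)
        left = [ev for ev in events if ev[0] < cut]
        right = [ev for ev in events if ev[0] >= cut]
        cost = sum(ev[2] for ev in left) * gini(left) + sum(ev[2] for ev in right) * gini(right)
        if best is None or cost < best[0]:
            best = (cost, cut, left, right)
    return None if best is None else best[1:]


if __name__ == "__main__":
    # Hypothetical d_1 values: electrons (signal) small, pions (background) large
    events = [(10.0, True, 1.0), (15.0, True, 1.0), (20.0, True, 1.0),
              (50.0, False, 1.0), (60.0, False, 1.0), (70.0, False, 1.0)]
    cut, left, right = best_split(events)
    print(cut, leaf_label(left), leaf_label(right))  # 35.0 signal background
```

A full tree applies `best_split` recursively to each daughter node, over all input variables, until a stopping condition (such as a minimum node size) is met.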

Boosted Decision Trees
Boosting the decision tree:
● Reweight the events of the training sample according to their misclassification: $W_i \rightarrow W_i' = W_i\, e^{f(\mathrm{err})}$
● New trees are derived from the same training sample
● The trees form a forest
● Combine the trees into a single classifier (weighted average)
● Test the classifier with the testing sample
● Boosting stabilizes fluctuations in the training sample and considerably enhances the classifier performance with respect to a single tree.
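The reweighting loop can be illustrated with AdaBoost, the default boosting algorithm of TMVA's BDT method. In this Python sketch each tree is reduced to a single cut on one variable (a "stump") to keep it short, and misclassified events get $W_i \rightarrow W_i\,e^{\alpha}$ with $\alpha = \beta \ln\frac{1-\mathrm{err}}{\mathrm{err}}$, one concrete choice for $f(\mathrm{err})$. The stump simplification, the function names and the toy data are assumptions, not the configuration of this analysis.

```python
import math

Stump = tuple[float, int, float]  # (cut, polarity, tree weight alpha)


def stump_predict(cut: float, polarity: int, x: float) -> int:
    """+1 (signal) or -1 (background); polarity=+1 puts signal below the cut, -1 above it."""
    below = x < cut
    return 1 if below == (polarity == 1) else -1


def train_stump(xs, ys, ws):
    """Single-cut 'tree' with the lowest weighted misclassification: (cut, polarity, error)."""
    values = sorted(set(xs))
    cuts = [values[0] - 1.0] + [0.5 * (a + b) for a, b in zip(values, values[1:])]
    best = None
    for cut in cuts:
        for polarity in (1, -1):
            err = sum(w for x, y, w in zip(xs, ys, ws) if stump_predict(cut, polarity, x) != y)
            if best is None or err < best[2]:
                best = (cut, polarity, err)
    return best


def adaboost(xs, ys, n_trees: int = 3, beta: float = 1.0) -> list[Stump]:
    """Train a forest of stumps, reweighting misclassified events after each tree."""
    ws = [1.0 / len(xs)] * len(xs)
    forest: list[Stump] = []
    for _ in range(n_trees):
        cut, polarity, err = train_stump(xs, ys, ws)
        err /= sum(ws)  # misclassified weight fraction
        if err == 0.0:  # a perfect tree classifies the training sample on its own
            return [(cut, polarity, 1.0)]
        if err >= 0.5:  # no better than guessing: stop boosting
            break
        alpha = beta * math.log((1.0 - err) / err)
        # W_i -> W_i * e^alpha for misclassified events, then renormalize
        ws = [w * math.exp(alpha) if stump_predict(cut, polarity, x) != y else w
              for x, y, w in zip(xs, ys, ws)]
        total = sum(ws)
        ws = [w / total for w in ws]
        forest.append((cut, polarity, alpha))
    return forest


def classify(forest: list[Stump], x: float) -> float:
    """Weighted vote of all trees in [-1, 1]; > 0 means signal, 0.0 for an empty forest."""
    total = sum(a for _, _, a in forest)
    if total == 0.0:
        return 0.0
    return sum(a * stump_predict(c, p, x) for c, p, a in forest) / total


if __name__ == "__main__":
    # Signal sits between the background events, so no single cut separates them
    xs, ys = [1.0, 2.0, 3.0, 4.0], [-1, 1, 1, -1]
    forest = adaboost(xs, ys, n_trees=3)
    print([1 if classify(forest, x) > 0 else -1 for x in xs])  # [-1, 1, 1, -1]
```

In this toy sample the first stump alone misclassifies the event at x = 4, while the three boosted stumps together classify all four training events correctly.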

Correlation of input variables for signal
(Figure: correlation matrix for electrons.)

Correlation of input variables for background
(Figure: correlation matrix for pions.)
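Correlation matrices of this kind (TMVA draws them as linear correlation coefficients in percent) are computed separately for the electron and pion samples. A minimal Python sketch of the linear (Pearson) coefficient; the per-event values below are hypothetical.

```python
import math


def pearson(a: list[float], b: list[float]) -> float:
    """Linear correlation coefficient of two equally long samples."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def correlation_matrix(columns: dict[str, list[float]]) -> dict[tuple[str, str], float]:
    """Correlation coefficients in percent for every pair of variables."""
    return {(p, q): 100.0 * pearson(columns[p], columns[q]) for p in columns for q in columns}


if __name__ == "__main__":
    # Hypothetical values for four events of one sample
    electrons = {"d1": [10.0, 20.0, 30.0, 40.0],
                 "d2": [12.0, 22.0, 32.0, 42.0],
                 "E5/Etot": [0.9, 0.8, 0.7, 0.6]}
    matrix = correlation_matrix(electrons)
    for pair in [("d1", "d2"), ("d1", "E5/Etot")]:
        print(pair, round(matrix[pair]))  # 100, then -100
```

Strongly correlated inputs, such as the radial moments $d_1$, $d_2$ and $d_3$, carry overlapping information, which is one reason why adding variables gives diminishing returns.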

Results
● Variable ranking:

| Rank | Variable | Importance |
|---|---|---|
| 1 | $N_{90}/N$ | 1.288e-01 |
| 2 | $d_1$ | 1.269e-01 |
| 3 | $E_5/E_{tot}$ | 1.165e-01 |
| 4 | $d_2$ | 1.145e-01 |
| 5 | Energy density | 1.100e-01 |
| 6 | $R_{90}$ | 1.018e-01 |
| 7 | $d_3$ | 1.015e-01 |
| 8 | $L_{start}$ | 7.623e-02 |
| 9 | Cells average energy | 6.687e-02 |
| 10 | $L_{max}$ | 4.406e-02 |
| 11 | $L_{max} - L_{start}$ | 1.272e-02 |

● Electrons: 22586 events (training), 22587 (testing). Pions: 18045 events (training), 18046 (testing).
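For BDTs, TMVA derives this ranking by counting how often each variable is used to split tree nodes, weighting every split by the squared separation gain it achieved and by the number of events in the node, and normalizing so that the importances sum to 1 (the values in the table add up to 1 within rounding). A Python sketch of that bookkeeping, with hypothetical split records `(variable, separation_gain, n_events)` that are not taken from the trained forest:

```python
from collections import defaultdict


def variable_importance(splits):
    """splits: (variable, separation_gain, n_events) for every split node in the forest.
    Returns (variable, importance) pairs sorted by decreasing importance; importances sum to 1."""
    raw: dict[str, float] = defaultdict(float)
    for variable, gain, n_events in splits:
        raw[variable] += gain ** 2 * n_events
    total = sum(raw.values())
    ranking = [(variable, value / total) for variable, value in raw.items()]
    return sorted(ranking, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical split records
    splits = [("N90/N", 0.2, 1000), ("d1", 0.1, 1000), ("N90/N", 0.1, 400), ("Lmax", 0.05, 400)]
    for rank, (variable, importance) in enumerate(variable_importance(splits), start=1):
        print(rank, variable, f"{importance:.3e}")
```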

Results
● Electron efficiency: $\mathrm{eff}_{el} = N_{el,selected} / N_{el,total}$
● Pion efficiency: $\mathrm{eff}_{pion} = N_{pion,selected} / N_{pion,total}$
● Separation: $\langle S^2 \rangle = \frac{1}{2}\int \frac{\left(y_{el}(y) - y_{pion}(y)\right)^2}{y_{el}(y) + y_{pion}(y)}\,dy$, where $y_{el}$ and $y_{pion}$ are the PDFs of the classifier output $y$. $\langle S^2 \rangle$ is 1 with no overlap and 0 with full overlap.

| Input variables | Electron eff. with contamination fraction $\mathrm{eff}_{pion} = 0.01$ | Electron eff. with contamination fraction $\mathrm{eff}_{pion} = 0.1$ | Separation $\langle S^2 \rangle$ |
|---|---|---|---|
| BDT: $d_1$, $E_5/E_{tot}$ | 0.977 | 1 | 0.956 |
| BDT: $N_{90}/N$, $d_1$, $E_5/E_{tot}$, $d_2$, energy density, $R_{90}$, $d_3$ | 0.988 | 1 | 0.97 |
| BDT: $N_{90}/N$, $d_1$, $E_5/E_{tot}$, $d_2$, energy density, $R_{90}$, $d_3$, $L_{start}$, cells average energy, $L_{max}$, $L_{max} - L_{start}$ | 0.991 | 1 | 0.973 |
| Optimized cut method: $d_1$, $E_5/E_{tot}$ | 0.975 | 0.992 | - |

A cut on $d_1 \le 40$ and $E_5/E_{tot} \ge 0.875$ gives $\mathrm{eff}_{el} = 0.48$, $\mathrm{eff}_{pion} = 0.00047$.
Using the BDT with 11 input variables:
- for $\mathrm{eff}_{el} = 0.48$ --> $\mathrm{eff}_{pion} = 0.00027$
- for $\mathrm{eff}_{pion} = 0.00047$ --> $\mathrm{eff}_{el} = 0.61$
--> Large improvement with multivariate selection.
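These figures of merit can be computed from the classifier outputs of the testing sample. The Python sketch below evaluates $\langle S^2 \rangle$ from normalized histograms (the bin width cancels once each PDF is replaced by its per-bin probability), the efficiency of a cut $y \ge y_{cut}$ on the classifier output, the best electron efficiency at a fixed pion efficiency, and the rectangular cut $d_1 \le 40$, $E_5/E_{tot} \ge 0.875$ quoted above. The binning, the function names and the sample values are hypothetical.

```python
def separation(h_el: list[float], h_pion: list[float]) -> float:
    """<S^2> = 1/2 sum_bins (p_el - p_pion)^2 / (p_el + p_pion) for normalized histograms
    (per-bin probabilities summing to 1); 1 = no overlap, 0 = identical shapes."""
    return 0.5 * sum((a - b) ** 2 / (a + b) for a, b in zip(h_el, h_pion) if a + b > 0)


def efficiency(outputs: list[float], y_cut: float) -> float:
    """Fraction of events selected by y >= y_cut, i.e. N_selected / N_total."""
    return sum(1 for y in outputs if y >= y_cut) / len(outputs)


def electron_eff_at_pion_eff(y_el: list[float], y_pion: list[float], eff_pion_max: float) -> float:
    """Highest electron efficiency among cuts whose pion efficiency is <= eff_pion_max."""
    best = 0.0
    for y_cut in sorted(set(y_el) | set(y_pion)):
        if efficiency(y_pion, y_cut) <= eff_pion_max:
            best = max(best, efficiency(y_el, y_cut))
    return best


def passes_cut_method(d1: float, e5_over_etot: float) -> bool:
    """Rectangular cut from the slide: d_1 <= 40 and E_5/E_tot >= 0.875."""
    return d1 <= 40.0 and e5_over_etot >= 0.875


if __name__ == "__main__":
    print(round(separation([0.0, 0.0, 0.2, 0.8], [0.7, 0.3, 0.0, 0.0]), 6))  # 1.0 (no overlap)
    print(separation([0.5, 0.5], [0.5, 0.5]))                                # 0.0 (full overlap)
    y_el, y_pion = [0.9, 0.8, 0.7, 0.2], [0.1, 0.2, 0.3, 0.75]  # hypothetical outputs
    print(electron_eff_at_pion_eff(y_el, y_pion, 0.25))                      # 0.75
```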

Conclusions
● Increasing the number of input variables increases the electron separation efficiency
● The BDT classifier allows better electron/pion separation than the simple cut method
● Further analysis with a real data sample
