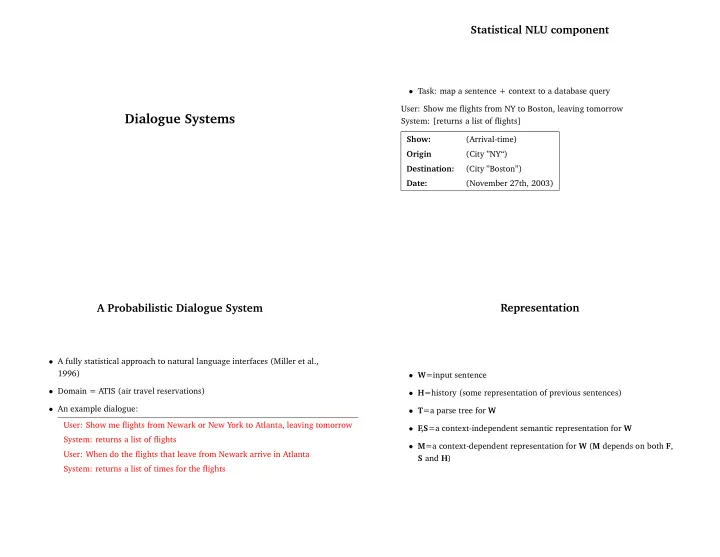

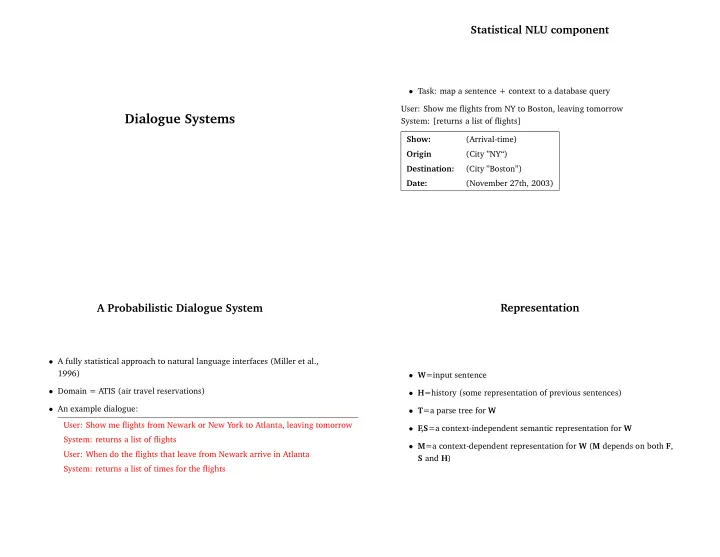

Dialogue Systems

Statistical NLU component

• Task: map a sentence + context to a database query

User: Show me flights from NY to Boston, leaving tomorrow
System: [returns a list of flights]

    Show: (Arrival-time)
    Origin: (City "NY")
    Destination: (City "Boston")
    Date: (November 27th, 2003)

A Probabilistic Dialogue System

• A fully statistical approach to natural language interfaces (Miller et al., 1996)
• Domain = ATIS (air travel reservations)
• An example dialogue:

User: Show me flights from Newark or New York to Atlanta, leaving tomorrow
System: returns a list of flights
User: When do the flights that leave from Newark arrive in Atlanta
System: returns a list of times for the flights

Representation

• W = input sentence
• H = history (some representation of previous sentences)
• T = a parse tree for W
• F, S = a context-independent semantic representation for W
• M = a context-dependent representation for W (M depends on both F, S and H)

Example

W = input sentence; H = history; T = a parse tree for W; F, S = a context-independent semantic representation for W; M = a context-dependent semantic representation for W

User: Show me flights from Newark or New York to Atlanta, leaving tomorrow
System: returns a list of flights
User: When do the flights that leave from Newark arrive in Atlanta

W = When do the flights that leave from Newark arrive in Atlanta

H =
    Show: (flights)
    Origin: (City "Newark") or (City "NY")
    Destination: (City "Atlanta")
    Date: (November 27th, 2003)

A Parse Tree

Each non-terminal has a syntactic and semantic tag, e.g., city/npr

[Figure: parse tree for W. Node labels recoverable from the slide: /top, /wh-question, time/wh-head, /aux, flight/np, arrival/vp, arrival/vp-head, location/pp, location/prep, city/npr. Words recoverable at the leaves: When, do, in, Atlanta.]

Example

W = When do the flights that leave from Newark arrive in Atlanta

F, S =
    Show: (Arrival-time)
    Origin: (City "Newark")
    Destination: (City "Atlanta")

Example

H =
    Show: (flights)
    Origin: (City "Newark") or (City "NY")
    Destination: (City "Atlanta")
    Date: (November 27th, 2003)

F, S =
    Show: (Arrival-time)
    Origin: (City "Newark")
    Destination: (City "Atlanta")

M =
    Show: (Arrival-time)
    Origin: (City "Newark")
    Destination: (City "Atlanta")
    Date: (November 27th, 2003)

Building a Probabilistic Model

• Basic goal: build a model of $P(M \mid W, H)$, the probability of a context-dependent interpretation given a sentence and a history
• We'll do this by building a model of $P(M, W, F, T, S \mid H)$, giving

$$P(M, W \mid H) = \sum_{F,T,S} P(M, W, F, T, S \mid H)$$

and

$$\arg\max_M P(M \mid W, H) = \arg\max_M P(M, W \mid H) = \arg\max_M \sum_{F,T,S} P(M, W, F, T, S \mid H)$$

Building a Probabilistic Model

Our aim is to estimate $P(M, W, F, T, S \mid H)$

• Apply chain rule:

$$P(M, W, F, T, S \mid H) = P(F \mid H)\, P(T, W \mid F, H)\, P(S \mid T, W, F, H)\, P(M \mid S, T, W, F, H)$$

• Independence assumption:

$$P(M, W, F, T, S \mid H) = P(F)\, P(T, W \mid F)\, P(S \mid T, W, F) \times P(M \mid S, F, H)$$

Building a Probabilistic Model

$$P(M, W, F, T, S \mid H) = P(F)\, P(T, W \mid F)\, P(S \mid T, W, F) \times P(M \mid S, F, H)$$

• The sentence processing model is a model of $P(T, W, F, S)$. It maps W to an (F, S, T) triple (a context-independent interpretation)
• The contextual processing model goes from an (F, S, H) triple to a final interpretation, M

Example

H =
    Show: (flights)
    Origin: (City "Newark") or (City "NY")
    Destination: (City "Atlanta")
    Date: (November 27th, 2003)

F, S =
    Show: (Arrival-time)
    Origin: (City "Newark")
    Destination: (City "Atlanta")

M =
    Show: (Arrival-time)
    Origin: (City "Newark")
    Destination: (City "Atlanta")
    Date: (November 27th, 2003)
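As a rough illustration of the factorization and the sum over hidden variables, the sketch below scores a handful of candidate analyses and picks the best M. All candidate names and probability values are made up for illustration; they do not come from the ATIS system or from Miller et al.

```python
from collections import defaultdict

def joint(p_f, p_tw_given_f, p_s_given_twf, p_m_given_sfh):
    """P(M, W, F, T, S | H) under the independence assumption:
    P(F) * P(T, W | F) * P(S | T, W, F) * P(M | S, F, H)."""
    return p_f * p_tw_given_f * p_s_given_twf * p_m_given_sfh

# Hypothetical candidates for one fixed sentence W and history H:
# (M, (F, T, S), the four factor values in the order used by joint()).
candidates = [
    ("M1", ("F1", "T1", "S1"), (0.5, 0.2, 0.5, 0.8)),
    ("M1", ("F1", "T2", "S1"), (0.5, 0.1, 0.5, 0.8)),
    ("M2", ("F2", "T1", "S2"), (0.25, 0.2, 0.5, 1.0)),
]

def best_interpretation(candidates):
    """Sum the joint over (F, T, S) for each M, then take the argmax over M."""
    totals = defaultdict(float)
    for m, _hidden, factors in candidates:
        totals[m] += joint(*factors)
    best = max(totals, key=totals.get)
    return best, dict(totals)

best, totals = best_interpretation(candidates)
print(best, totals)
```

In a real system the sum ranges over far too many (F, T, S) combinations to enumerate, which is why a search procedure is needed.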

The Sentence Processing Model

$$P(T, W, F, S) = P(F)\, P(T, W \mid F)\, P(S \mid T, W, F)$$

• First step: choose the frame F with probability $P(F)$

    Show: (Arrival-time)
    Origin:
    Destination:

• Note: there is a relatively small number of frames

The Sentence Processing Model

$$P(T, W, F, S) = P(F)\, P(T, W \mid F)\, P(S \mid T, W, F)$$

• Next step: generate the parse tree T and sentence W
• Method uses a probabilistic context-free grammar, where Markov processes are used to generate rules. Different rule parameters are used for each value of F

Building a Probabilistic Model

[Tree fragment: flight/np with children /det, flight/corenp, flight-constraints/rel-clause]

$$\begin{aligned}
P(\text{/det flight/corenp flight-constraints/rel-clause} \mid \text{flight/np}) ={}& P(\text{/det} \mid \text{NULL}, \text{flight/np}) \\
&\times P(\text{flight/corenp} \mid \text{/det}, \text{flight/np}) \\
&\times P(\text{flight-constraints/rel-clause} \mid \text{flight/corenp}, \text{flight/np}) \\
&\times P(\text{STOP} \mid \text{flight-constraints/rel-clause}, \text{flight/np})
\end{aligned}$$

• Use maximum likelihood estimation

$$P_{ML}(\text{corenp} \mid \text{np}) = \frac{\text{Count}(\text{corenp}, \text{np})}{\text{Count}(\text{np})}$$

• Backed-off estimates generate semantic, syntactic parts of each label separately

The Sentence Processing Model

$$P(T, W, F, S) = P(F)\, P(T, W \mid F)\, P(S \mid T, W, F)$$

• Given a frame F, and a tree T, fill in the semantic slots S

    Show: (Arrival-time)          Show: (Arrival-time)
    Origin:                 =>    Origin: Newark
    Destination:                  Destination: Atlanta

• Method works by considering each node of the parse tree T, and applying probabilities $P(\text{slot-fill-action} \mid S, \text{node})$
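The Markov-process view of rule generation can be sketched as follows: each child label is generated conditioned on the previous child and the parent, ending with STOP, and each factor is a maximum likelihood ratio of counts. The toy rule list and the function names `train` and `rule_probability` are assumptions made for illustration. The sketch has no backed-off estimates and does not condition on the frame F, both of which the slides say the real model uses.

```python
from collections import Counter

def train(rules):
    """Collect counts from (parent, children) pairs read off training trees."""
    pair_counts = Counter()     # (child, previous child, parent)
    context_counts = Counter()  # (previous child, parent)
    for parent, children in rules:
        prev = "NULL"
        for child in list(children) + ["STOP"]:
            pair_counts[(child, prev, parent)] += 1
            context_counts[(prev, parent)] += 1
            prev = child
    return pair_counts, context_counts

def rule_probability(parent, children, pair_counts, context_counts):
    """Product of maximum likelihood estimates P(child | previous child, parent)."""
    prob = 1.0
    prev = "NULL"
    for child in list(children) + ["STOP"]:
        context = context_counts[(prev, parent)]
        if context == 0:
            return 0.0  # unseen context; a real model would back off instead
        prob *= pair_counts[(child, prev, parent)] / context
        prev = child
    return prob

# Hypothetical training rules, all expanding flight/np.
REL = "flight-constraints/rel-clause"
toy_rules = [
    ("flight/np", ["/det", "flight/corenp", REL]),
    ("flight/np", ["/det", "flight/corenp"]),
    ("flight/np", ["flight/corenp"]),
    ("flight/np", ["/det", "flight/corenp", REL]),
]

pair_counts, context_counts = train(toy_rules)
p = rule_probability("flight/np", ["/det", "flight/corenp", REL],
                     pair_counts, context_counts)
print(p)  # 3/4 * 3/3 * 2/4 * 2/2 = 0.375
```

Generating rules one child at a time lets the model assign probability to rules it never saw as a whole, provided each adjacent pair of children was seen.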

The Sentence Processing Model: Search

$$P(T, W, F, S) = P(F)\, P(T, W \mid F)\, P(S \mid T, W, F)$$

• Next problem: Search
• Goal: produce n high probability (F, S, T, W) tuples
• Method:
– In first pass, produce n-best parses under a parsing model that is independent of F
– For each tree T, for each possible frame F, create a (W, T, F) triple with probability $P(T, W \mid F)$. Keep the top n most probable triples.
– For each triple, use beam search to generate several high probability (W, T, F, S) tuples. Keep the top n most probable.

The Contextual Model

H =
    Show: (flights)
    Origin: (City "Newark") or (City "NY")
    Destination: (City "Atlanta")
    Date: (November 27th, 2003)

F, S =
    Show: (Arrival-time)
    Origin: (City "Newark")
    Destination: (City "Atlanta")

M =
    Show: (Arrival-time)
    Origin: (City "Newark")
    Destination: (City "Atlanta")
    Date: (November 27th, 2003)

• Only issue is whether values in H, but not in (F, S), should be carried over to M.
• Method uses a decision-tree model to estimate probability of "carrying over" each slot in H which is not in F, S.

The Final Model

• Final search method:
– Given an input sentence W, use the sentence processing model to produce n high probability (F, S, T, W) tuples. Call these $(F_i, S_i, T_i, W)$ for $i = 1 \ldots n$
– Choose the final interpretation as

$$\arg\max_M \left( \max_i P(M \mid F_i, S_i, H)\, P(F_i, S_i, T_i, W) \right)$$

• Note that this is an approximation to finding:

$$\arg\max_M P(M, W \mid H) = \arg\max_M \sum_{F,T,S} P(M, W, F, T, S \mid H)$$

• Results: training on 4000 sentences, achieved an error rate of 21.6%
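The final selection step can be sketched as below, assuming the n-best tuples and their sentence-model scores are already available. The tuple contents, the candidate interpretations and the lookup table standing in for the decision-tree contextual model are all hypothetical.

```python
def choose_interpretation(tuples, candidate_ms, p_context):
    """Return the M maximizing max_i P(M | F_i, S_i, H) * P(F_i, S_i, T_i, W).

    tuples:       non-empty list of (F, S, T, score), where score stands in
                  for P(F_i, S_i, T_i, W)
    candidate_ms: iterable of candidate interpretations M
    p_context:    function (M, F, S) -> P(M | F, S, H), with H held fixed
    """
    best_m, best_score = None, float("-inf")
    for m in candidate_ms:
        # Max over the n-best list replaces the sum over all (F, T, S).
        score = max(p_context(m, f, s) * p for f, s, _t, p in tuples)
        if score > best_score:
            best_m, best_score = m, score
    return best_m, best_score

# Hypothetical n-best output of the sentence processing model.
n_best = [
    ("F1", "S1", "T1", 0.03),
    ("F1", "S1", "T2", 0.01),
    ("F2", "S2", "T3", 0.02),
]

# Hypothetical contextual model: should the Date slot be carried over from H?
context_table = {
    ("M_with_date", "F1", "S1"): 0.9,
    ("M_without_date", "F1", "S1"): 0.1,
    ("M_with_date", "F2", "S2"): 0.5,
    ("M_without_date", "F2", "S2"): 0.5,
}

def p_context(m, f, s):
    return context_table.get((m, f, s), 0.0)

best_m, best_score = choose_interpretation(
    n_best, ["M_with_date", "M_without_date"], p_context)
print(best_m, best_score)
```

Using the max over i rather than a sum means each M is judged by its single best supporting analysis, which is cheaper but can differ from the exact argmax when several analyses support the same M.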

Reinforcement Learning for Dialogue Management

• Automatic Optimization of Dialogue Management (Litman, Kearns, Singh, and Walker, 2000)
• Uses reinforcement learning to learn a high-level dialogue strategy

The NJFun System

• Three attributes needed: activity, location, time
e.g., wine-tasting, Lambertville, Sunday
• Basic strategy: first get activity attribute, then location, finally time, then make a database query

System: Welcome to NJFun. How may I help you?
User: I'd like to find um winetasting in Lambertville in the morning
System: Did you say you are interested in Lambertville?
User: Yes
System: Did you say you want to go in the morning?
User: Yes
System: I found a winery near Lambertville that is open in the morning. It is [. . . ]. Please give me feedback by saying "good", "so-so" or "bad"
User: Good

Dialogue Strategies

At any point in the dialogue, the following choices can be made:

• System initiative vs. user initiative
System initiative: Welcome to NJFun. Please say an activity name or say "list activities" for activities I know about.
User initiative: Welcome to NJFun. How may I help you?
• Confirmation/no confirmation of attribute values
Confirmation: Did you say you are interested in Lambertville?
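A minimal sketch of the basic strategy and of the choice space described above, assuming a dictionary of filled attributes. The names `next_step` and `STRATEGY_CHOICES` are invented here; this is not the NJFun implementation, and the reinforcement learning that selects among the choices is not shown.

```python
from itertools import product

# Fixed attribute order from the basic strategy.
ATTRIBUTE_ORDER = ("activity", "location", "time")

# Choices available at a dialogue turn: who has the initiative,
# and whether to confirm the attribute value just obtained.
INITIATIVE = ("system", "user")
CONFIRMATION = ("confirm", "no_confirm")
STRATEGY_CHOICES = list(product(INITIATIVE, CONFIRMATION))

def next_step(filled):
    """Ask for the first missing attribute, or query once all are filled."""
    for attribute in ATTRIBUTE_ORDER:
        if attribute not in filled:
            return ("ask", attribute)
    return ("query", dict(filled))

print(next_step({}))
print(next_step({"activity": "wine-tasting", "location": "Lambertville"}))
print(STRATEGY_CHOICES)
```

A learned policy would pick one of the `STRATEGY_CHOICES` at each turn based on the dialogue state, instead of using a fixed rule.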
