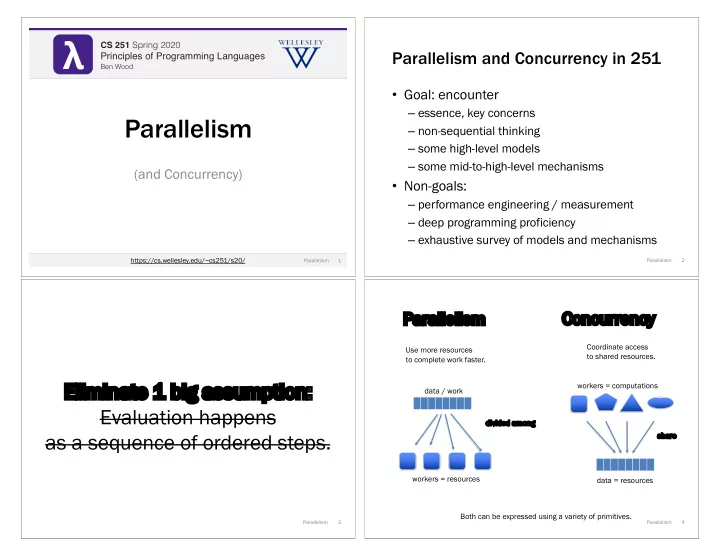

λ CS 251 Fall 2019 / CS 251 Spring 2020
Principles of Programming Languages
Ben Wood

Parallelism (and Concurrency)

https://cs.wellesley.edu/~cs251/s20/

Parallelism and Concurrency in 251

• Goal: encounter
  – essence, key concerns
  – non-sequential thinking
  – some high-level models
  – some mid-to-high-level mechanisms
• Non-goals:
  – performance engineering / measurement
  – deep programming proficiency
  – exhaustive survey of models and mechanisms

Parallelism vs. Concurrency

Eliminate 1 big assumption: evaluation happens as a sequence of ordered steps.

• Parallelism: use more resources to complete work faster.
  – workers = resources
  – data / work divided among workers
• Concurrency: coordinate access to shared resources.
  – workers = computations
  – data = resources, shared among workers

Both can be expressed using a variety of primitives.

Manticore: Parallelism via Manticore

• Extends SML with language features for parallelism/concurrency.
• Mix of research vehicle and established models.
• Parallelism patterns:
  – data parallelism:
    • parallel arrays
    • parallel tuples
  – task parallelism:
    • parallel bindings
    • parallel case expressions
• Unifying model:
  – futures / tasks
• Mechanism:
  – work-stealing

Manticore: Data parallelism

Many argument data of the same type → parallelize application of the same operation to all data, with no ordering/interdependence → many result data of the same type.

Manticore: Parallel arrays: 'a parray

• literal parray: [| e1, e2, …, en |]
• integer ranges: [| elo to ehi by estep |]
• parallel mapping comprehensions: [| e | x in elems |]
• parallel filtering comprehensions: [| e | x in elems where pred |]

Manticore: Parallel array comprehensions

    [| e1 | x in e2 |]

Evaluation rule:
1. Under the current environment, E, evaluate e2 to a parray v2.
2. For each element vi in v2, with no constraint on relative timing or order:
   1. Create new environment Ei = x ↦ vi, E.
   2. Under environment Ei, evaluate e1 to a value vi'.
3. The result is [| v1', v2', …, vn' |].
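The evaluation rule above can be modeled sequentially in plain Standard ML. This is a sketch under stated assumptions: ordinary lists stand in for parrays, binding x to vi and evaluating e1 is modeled as applying a function `body` to vi, and nothing actually runs in parallel. The names `comprehend`, `comprehendWhere`, and `comprehendReversed` are hypothetical. The third function evaluates elements last-to-first yet produces the same result, which is one way to see why the rule can leave relative timing and order unconstrained.

```sml
(* Hypothetical sequential model of [| e1 | x in e2 |], with lists standing
   in for parrays. xs plays the role of the already-evaluated v2; body plays
   the role of e1 with x bound to each element. *)
fun comprehend (body : 'a -> 'b) (xs : 'a list) : 'b list =
  List.map body xs

(* Hypothetical model of [| e | x in elems where pred |]:
   keep only elements satisfying pred, then evaluate the body on each. *)
fun comprehendWhere (body : 'a -> 'b) (pred : 'a -> bool) (xs : 'a list) : 'b list =
  List.map body (List.filter pred xs)

(* Evaluate the body on the elements in reverse order (List.map applies its
   function left to right, so reversing first visits the last element first),
   then reverse the results back. For a pure body the result equals
   comprehend body xs: element order in the result does not depend on the
   order in which the per-element evaluations happen. *)
fun comprehendReversed (body : 'a -> 'b) (xs : 'a list) : 'b list =
  List.rev (List.map body (List.rev xs))
```

Under this model, `mapP f xs` corresponds to `comprehend f xs` and `filterP p xs` to `comprehendWhere (fn x => x) p xs`.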

Manticore: Parallel map / filter

    fun mapP f xs = [| f x | x in xs |]
    : ('a -> 'b) -> 'a parray -> 'b parray

    fun filterP p xs = [| x | x in xs where p x |]
    : ('a -> bool) -> 'a parray -> 'a parray

Manticore: Parallel reduce

Sibling of foldl/foldr. The combiner function, f, must be associative.

    fun reduceP f init xs = …
    : (('a * 'a) -> 'a) -> 'a -> 'a parray -> 'a

[Figure: a tree of f ( , ) applications combining the elements pairwise.]

Manticore: Task parallelism

Parallelize application of different operations within a larger computation. Some ordering/interdependence is controlled explicitly.

Manticore: Parallel bindings

    fun qsortP (a: int parray) : int parray =
      if lengthP a <= 1
      then a
      else
        let
          val pivot = a ! 0 (* parray indexing *)
          pval sorted_lt =
            qsortP (filterP (fn x => x < pivot) a)
          pval sorted_eq =
            filterP (fn x => x = pivot) a
          pval sorted_gt =
            qsortP (filterP (fn x => x > pivot) a)
        in
          concatP (sorted_lt, concatP (sorted_eq, sorted_gt))
        end

• pval: start evaluating, but don't wait until needed.
• In the body (concatP …): wait until the results are ready before using them.

[Figure: pivot feeds sorted_lt, sorted_eq, and sorted_gt, whose results are combined by concatP.]
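Why must the combiner be associative? A parallel reduce is free to combine elements in a balanced tree (the independent halves are what can run in parallel), while foldl combines strictly left to right. The Standard ML sketch below compares the two shapes. `treeReduce` and `seqReduce` are hypothetical helpers, not the body of `reduceP` (which the slides elide); lists stand in for parrays, both halves are reduced sequentially here, and `init` is used only for the empty list, so the two agree only when `init` is an identity for `f` (an assumption of this sketch).

```sml
(* Left-to-right reduction: f (... f (f (init, x1), x2) ..., xn). *)
fun seqReduce (f : 'a * 'a -> 'a) (init : 'a) (xs : 'a list) : 'a =
  List.foldl (fn (x, acc) => f (acc, x)) init xs

(* Hypothetical tree-shaped reduction: split the list in half, reduce each
   half (in a parallel setting these two calls are independent), then
   combine. init is returned only for the empty list. *)
fun treeReduce (f : 'a * 'a -> 'a) (init : 'a) (xs : 'a list) : 'a =
  case xs of
    [] => init
  | [x] => x
  | _ =>
      let
        val half = length xs div 2
        val left = treeReduce f init (List.take (xs, half))
        val right = treeReduce f init (List.drop (xs, half))
      in
        f (left, right)
      end
```

With an associative combiner the grouping does not matter: `op +` gives 10 on `[1, 2, 3, 4]` either way, and `op ^` (associative but not commutative) still yields `"abcd"` on `["a", "b", "c", "d"]` because element order is preserved. With `op -`, which is not associative, `seqReduce op - 0 [1, 2, 3, 4]` is `~10` while `treeReduce op - 0 [1, 2, 3, 4]` is `(1 - 2) - (3 - 4) = 0`.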

Manticore: Parallel cases

    datatype 'a bintree = Empty
                        | Node of 'a * 'a bintree * 'a bintree

    fun find_any t e =
      case t of
        Empty => NONE
      | Node (elem, left, right) =>
          if e = elem then SOME t
          else
            pcase find_any left e & find_any right e of
              SOME tree & ? => SOME tree
            | ? & SOME tree => SOME tree
            | NONE & NONE => NONE

• Evaluate the two find_any calls in parallel.
• If one finishes with SOME, return it without waiting for the other.
• If both finish with NONE, return NONE.

Futures: unifying model for Manticore parallel features

future = promise speculatively forced in parallel

    signature FUTURE =
    sig
      type 'a future

      (* Produce a future for a thunk.
         Like Promise.delay. *)
      val future : (unit -> 'a) -> 'a future

      (* Wait for the future to complete and return the result.
         Like Promise.force. *)
      val touch : 'a future -> 'a

      (* More advanced features. *)
      datatype 'a result = VAL of 'a | EXN of exn

      (* Check if the future is complete and get result if so. *)
      val poll : 'a future -> 'a result option

      (* Stop work on a future that won't be needed. *)
      val cancel : 'a future -> unit
    end

Futures: timeline visualizations 1 and 2

    let
      val f = future (fn () => e)
    in
      work
      …
      (touch f)
      …
    end

[Figures 1 and 2: timelines of this program plotted against time.]
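The slides describe a future as a promise that is speculatively forced in parallel. Dropping the speculation leaves just the promise, which can be written in plain Standard ML. The sketch below is a sequential stand-in for the `future` and `touch` parts of `FUTURE` only (no `poll`, `cancel`, or exception capture); `SIMPLE_FUTURE`, `SeqFuture`, and `example` are hypothetical names. It models when a value becomes available and that `touch` returns the same result every time, but it does not run anything in parallel: a real future would start the thunk on another worker as soon as it is created.

```sml
(* Hypothetical subset of the FUTURE signature. *)
signature SIMPLE_FUTURE =
sig
  type 'a future
  val future : (unit -> 'a) -> 'a future
  val touch : 'a future -> 'a
end

(* Sequential, promise-style stand-in: no parallelism. *)
structure SeqFuture :> SIMPLE_FUTURE =
struct
  (* A future is either still waiting to run or finished with a value. *)
  datatype 'a state = Pending of unit -> 'a | Done of 'a
  type 'a future = 'a state ref

  (* Record the thunk without running it (like Promise.delay). *)
  fun future (thunk : unit -> 'a) : 'a future = ref (Pending thunk)

  (* Run the thunk on the first touch and cache the value; later touches
     return the cached value (like Promise.force). *)
  fun touch (f : 'a future) : 'a =
    case !f of
      Done v => v
    | Pending thunk =>
        let val v = thunk () in f := Done v; v end
end

(* The timeline program, with hypothetical stand-ins for e and work. *)
fun example () =
  let
    val f = SeqFuture.future (fn () => 6 * 7)  (* stands in for e *)
    val w = 10 + 20                             (* stands in for work *)
  in
    w + SeqFuture.touch f                       (* 30 + 42 *)
  end
```

In this stand-in, `e` runs only at `touch f`, after `work`. In the parallel version, the two overlap, and `touch f` waits only if `e` has not finished yet.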

pval as future sugar

    let pval x = e in … x … end

is roughly:

    let val x = future (fn () => e) in … (touch x) … end

*A bit more: implicitly cancel an untouched future once it becomes clear it won't be touched.

Parray ops as futures: rough idea 1

Suppose we represent parrays as lists* of elements:

    [| f x | x in xs |]

becomes

    map touch (map (fn x => future (fn () => f x)) xs)

*The actual implementation uses a more sophisticated data structure.

Parray ops as futures: rough idea 2

Suppose we represent parrays as lists* of element futures:

    [| f x | x in xs |]

becomes

    map (fn x => future (fn () => f (touch x))) xs

Key semantic difference between 1 and 2?

*The actual implementation uses a more sophisticated data structure.

Odds and ends

• pcase: not just future sugar
  – Choice is a distinct primitive* not offered by futures alone.
• Where do execution resources for futures come from? How are they managed?
• Tasks vs. futures:
  – Analogy: function calls vs. val bindings.
• Forward to concurrency and events…

*At least when implemented well.
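The two rough ideas can be run side by side in plain Standard ML using a sequential, promise-style stand-in for `future` and `touch`. This is a sketch under assumptions: the structure `F` and the functions `mapIdea1` and `mapIdea2` are hypothetical, lists stand in for parrays (as on the slides), and nothing runs in parallel. One difference the types make visible: idea 1 takes and returns plain lists, so building the result touches every future and waits for all elements; idea 2 takes and returns lists of futures and touches nothing itself, so a later operation can consume element i as soon as it is ready.

```sml
(* Hypothetical sequential stand-in for future/touch (a memoizing promise).
   A real future would start evaluating the thunk in parallel right away. *)
structure F =
struct
  datatype 'a state = Pending of unit -> 'a | Done of 'a
  type 'a future = 'a state ref
  fun future (thunk : unit -> 'a) : 'a future = ref (Pending thunk)
  fun touch (f : 'a future) : 'a =
    case !f of
      Done v => v
    | Pending thunk => let val v = thunk () in f := Done v; v end
end

(* Rough idea 1: a parray is a list of elements. One future per element,
   then touch them all; the result is complete only when every element is. *)
fun mapIdea1 (f : 'a -> 'b) (xs : 'a list) : 'b list =
  map F.touch (map (fn x => F.future (fn () => f x)) xs)

(* Rough idea 2: a parray is a list of element futures. Each output future
   touches only its own input future, and only when it is itself touched;
   this function waits for nothing. *)
fun mapIdea2 (f : 'a -> 'b) (xs : 'a F.future list) : 'b F.future list =
  map (fn x => F.future (fn () => f (F.touch x))) xs
```

For example, `mapIdea1 (fn x => x * 2) [1, 2, 3]` is `[2, 4, 6]`, whereas `mapIdea2` applied to a list of input futures returns without forcing any of them; the values appear only when the output futures are touched.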
