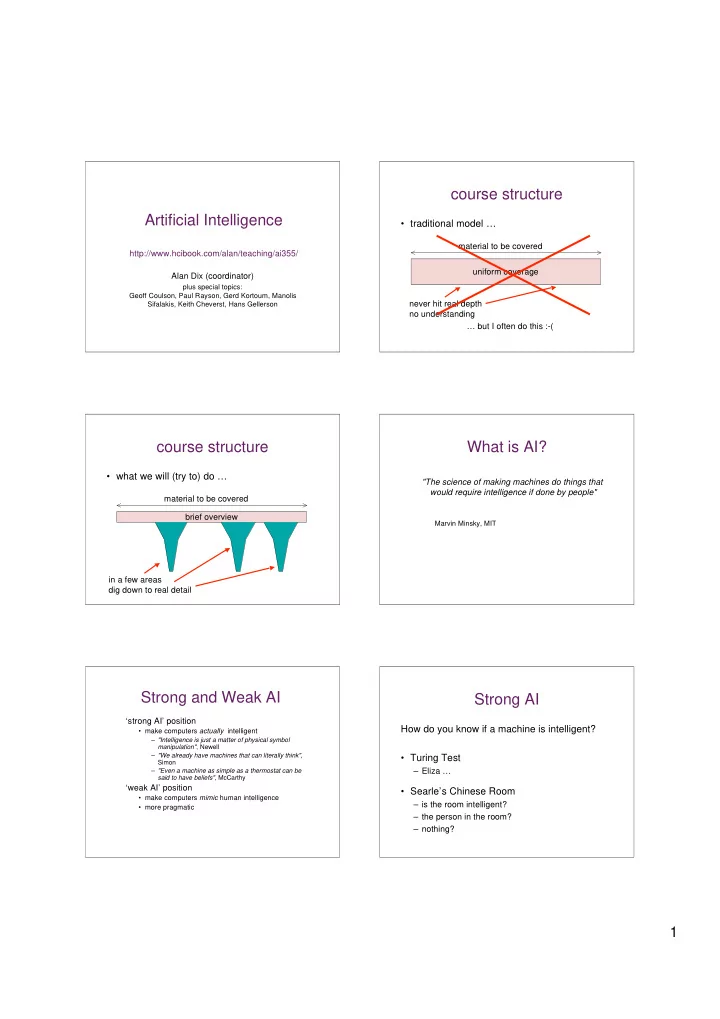

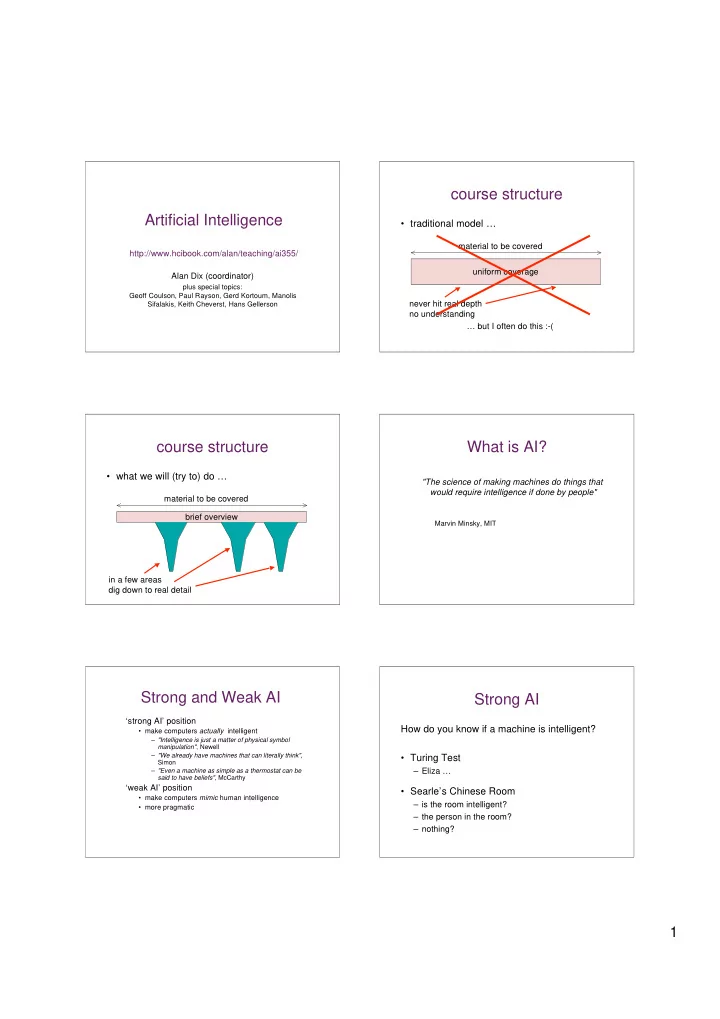

Artificial Intelligence
http://www.hcibook.com/alan/teaching/ai355/
Alan Dix (coordinator)
plus special topics: Geoff Coulson, Paul Rayson, Gerd Kortuem, Manolis Sifalakis, Keith Cheverst, Hans Gellerson

course structure
• traditional model …
– material to be covered
– uniform coverage
– never hit real depth
– no understanding
… but I often do this :-(

course structure
• what we will (try to) do …
– material to be covered
– brief overview
– in a few areas dig down to real detail

What is AI?
"The science of making machines do things that would require intelligence if done by people"
Marvin Minsky, MIT

Strong and Weak AI
‘strong AI’ position
• make computers actually intelligent
– "Intelligence is just a matter of physical symbol manipulation", Newell
– "We already have machines that can literally think", Simon
– "Even a machine as simple as a thermostat can be said to have beliefs", McCarthy
‘weak AI’ position
• make computers mimic human intelligence
• more pragmatic

Strong AI
How do you know if a machine is intelligent?
• Turing Test
– Eliza …
• Searle’s Chinese Room
– is the room intelligent?
– the person in the room?
– nothing?

Weaker AI …
Alien Intelligence
not as we know it …
• Chess programs
– can be very good
– but NOT like a person
– Computer: very broad ‘lookahead’, scanning thousands of possible move paths
– Human: small number of ‘sensible’ moves (intuition, heuristics)

the great divide
• symbolic (traditional) AI
– based on high-level cognitive reasoning
– small richer representations
– well-defined formal representations, rules
– examples: logic, search, expert systems, deduction
• sub-symbolic AI
– based on low-level neurological concepts or other ‘natural computation’
– large simple representations
– simple attributes, weights
– examples: neural nets, genetic algorithms, emergent behaviour

Natural computation inspirations
• Neural networks / Connectionist
– neuron firing in the brain
• Genetic Algorithms
– natural selection / selective breeding
• Artificial life & emergent behaviour
– colony behaviour, ants, …
• Simulated annealing (not strictly ‘AI’)
– crystal formation

broad areas of AI (traditional)
• knowledge representation
• reasoning
• search
• planning
• game playing
• machine learning
• language and speech (now separate communities)
• vision (now separate communities)

(some) application areas
• expert systems (in many domains)
• theorem proving
• games
• robotics and control
• interfaces and ambient intelligence
• network routing
• text and data mining (inc. security)
• semantic web

recent directions
• Embodiment
– intelligence includes interactions with the world
• Emotion
– intelligence includes feeling
• Emergence
– intelligence arises in communities

Representation matters
How many moves for knight to get to square X?
[figure: chessboard with squares marked 1, 2, 3 and X]
… draw state space …
[figure: state space diagram labelled start state, goal state, actions]

Knowledge Representation
• Predicate logic
– is_person(Jane) ∧ meeting(Jane,10am,tax_office)
• Frames (a bit like objects), with named ‘slots’
– Meeting { who: Jane, when: 10am, where: tax_office }
• Semantic Web - triples/RDF (in RDF the ids are URIs)
– id#15 class Person, id#15 name ‘Jane’, id#37 class Meeting, id#37 time ‘10am’, id#37 who id#15
• Scripts
– Shopping: get trolley, fill trolley, go to checkout
• may have probabilities, weights …
– meeting(Jane, time, tax_office), time = 10am 75%, time = 11am 25%

Representing rules and facts (examples)
• Logical inference
– smaller(X,Y) ∧ smaller(Y,Z) ⇒ smaller(X,Z)
• Production rules (like IF, but always ‘active’)
– WHENEVER see(target) AND not moving DO point_towards(target), start_moving

Reasoning
• Forward vs. backward chaining
– forward:
  • from start state towards goal
  • from known facts infer new ones
– backward:
  • from goal towards start
  • from query towards facts

Forward vs. backward chaining
forward:
– from start state towards goal
– from known facts infer new ones
eg. if we know Dolly is a sheep and all sheep have wool, infer new fact Dolly has wool
backward:
– from goal towards start
– from query towards known facts
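The Dolly example of forward chaining can be sketched in a few lines of Python. This is only an illustration, not part of the course material: the fact and rule encodings (pairs of predicate and subject, and the names `sheep`, `has_wool`, `forward_chain`) are assumptions made for this sketch, and real rule engines handle far richer rule forms.

```python
# Facts are (predicate, subject) pairs; this encoding is an assumption of the sketch.
facts = {("sheep", "Dolly")}

# Each rule (premise, conclusion) reads: anything that is <premise> also is <conclusion>.
rules = [("sheep", "has_wool")]


def forward_chain(facts, rules):
    """Start from known facts and keep applying rules until nothing new is inferred."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            # Iterate over a snapshot, since new facts are added to `known` as we go.
            for predicate, subject in list(known):
                new_fact = (conclusion, subject)
                if predicate == premise and new_fact not in known:
                    known.add(new_fact)
                    changed = True
    return known


inferred = forward_chain(facts, rules)
print(("has_wool", "Dolly") in inferred)
```

Backward chaining would run the other way: start from the query "does Dolly have wool?" and look for a rule whose conclusion matches it, then try to establish that rule's premise.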

backward reasoning
example: Horn clauses used in Prolog
Known facts:
father(Henry VII, Henry VIII).
father(Henry VIII, Elizabeth).
r1. ancestor(X,Y) :- father(X,Y). { read father => ancestor }
r2. ancestor(X,Y) :- ancestor(X,Z), father(Z,Y).
Query:
ancestor(Henry VII, Elizabeth).
Try r1: father(Henry VII, Elizabeth). – FAIL
Try r2: ancestor(Henry VII,Z), father(Z,Elizabeth).
Try r1: father(Henry VII,Z), father(Z,Elizabeth). – succeeds with Z = Henry VIII

search
• traditional AI algorithms with Geoff Coulson
• lots of things can be seen as search:
– reasoning – find the pattern of rules that lead from premise to conclusion
– learning – find the rules that explain the facts
– game playing – find the move that is best no matter what my opponent does
– route finding – directions and movements to destination
– puzzle solving …

examples …
knight’s moves
• find the shortest path from start state to end state
• state space
• goal
• start
• evaluation function (number of moves)
towers of Hanoi
• get rings from first tower to the second tower, small on top of large
• state space – which ring on which tower, N.B. size constraint
• goal – all the rings on second tower
• start – all the rings
• evaluation function – boolean succeed/fail or shortest path

plan …

| week | lecturer | topic |
|------|----------|-------|
| 11 | Alan Dix | Intro and my bits … |
| 12 | Geoff Coulson | Scheme Programming and Search Algorithms |
| 13 | Geoff Coulson | |
| 14 | Paul Rayson | Natural Language Processing |
| 15 | Gerd Kortuem | Reasoning, including Distributed Reasoning (plus maybe temporal reasoning) |
| 16 | Manolis Sifalakis | Emergent AI, Ant models, natural comp., … |
| 17 | Manolis Sifalakis | Applications to Networking |
| | Keith Cheverst | Decision Trees for Ambient Intelligence |
| 18 | Hans Gellerson | Machine Learning and N. Nets for AmbientI |
| 19 | Hans Gellerson | Computer Vision and Ubicomp |
| 20 | Alan Dix (& GC) | Group presentations |
| | Alan Dix | Wrap up (maybe bit of semantic web) |

lots more!
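The towers of Hanoi formulation from the search examples (state space, start, goal, evaluation function) can be made concrete with a small breadth-first search. This is a hedged sketch rather than the algorithm taught in the course: the function name `hanoi_shortest`, the tuple encoding of states, and the choice of breadth-first search are all assumptions made here, and the start state is assumed to be all rings on the first tower.

```python
from collections import deque


def hanoi_shortest(n_rings, n_towers=3, start_tower=0, goal_tower=1):
    """Breadth-first search over the state space; returns the fewest moves to the goal."""
    # State: which tower each ring is on. Ring 0 is the smallest ring.
    start = tuple([start_tower] * n_rings)
    goal = tuple([goal_tower] * n_rings)
    frontier = deque([(start, 0)])
    seen = {start}
    while frontier:
        state, moves = frontier.popleft()
        if state == goal:
            return moves  # evaluation function: length of the shortest path
        for src in range(n_towers):
            on_src = [r for r in range(n_rings) if state[r] == src]
            if not on_src:
                continue
            ring = on_src[0]  # only the top (smallest) ring of a tower can move
            for dst in range(n_towers):
                if dst == src:
                    continue
                on_dst = [r for r in range(n_rings) if state[r] == dst]
                # Size constraint: never place a ring on top of a smaller one.
                if on_dst and on_dst[0] < ring:
                    continue
                nxt = state[:ring] + (dst,) + state[ring + 1:]
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, moves + 1))
    return None  # goal unreachable


print(hanoi_shortest(3))  # → 7
```

Breadth-first search is used because it visits states in order of distance from the start, so the first time the goal is reached the move count is minimal. The knight's moves puzzle fits the same skeleton with board squares as states and knight jumps as actions.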
