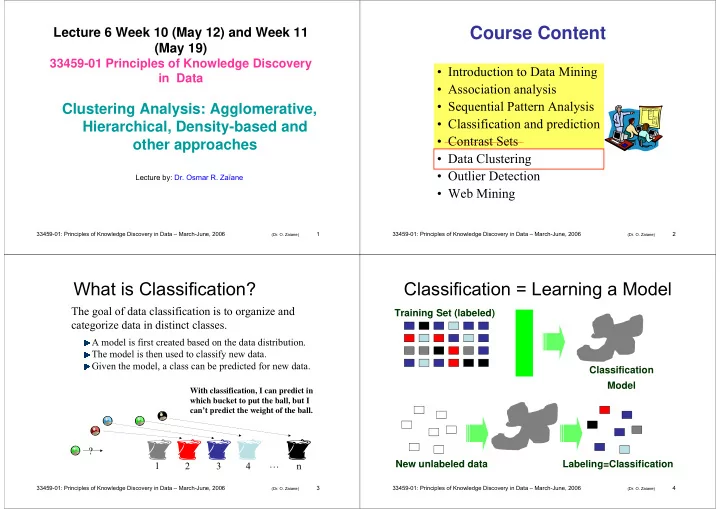

Course Content Lecture 6 Week 10 (May 12) and Week 11 (May 19) 33459-01 Principles of Knowledge Discovery • Introduction to Data Mining in Data • Association analysis • Sequential Pattern Analysis Clustering Analysis: Agglomerative, • Classification and prediction Hierarchical, Density-based and • Contrast Sets other approaches • Data Clustering • Outlier Detection Lecture by: Dr. Osmar R. Zaïane • Web Mining 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 1 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 2 (Dr. O. Zaiane) (Dr. O. Zaiane) What is Classification? Classification = Learning a Model The goal of data classification is to organize and Training Set (labeled) categorize data in distinct classes. A model is first created based on the data distribution. The model is then used to classify new data. Given the model, a class can be predicted for new data. Classification Model With classification, I can predict in which bucket to put the ball, but I can’t predict the weight of the ball. ? … New unlabeled data Labeling=Classification 1 2 3 4 n 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 3 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 4 (Dr. O. Zaiane) (Dr. O. Zaiane)

Supervised and Unsupervised What is Clustering? The process of putting similar data together. Supervised Classification = Classification e � We know the class labels and the number of classes a Grouping a a b a e gray red blue green black e a Clustering a b … 1 2 3 4 n b b e d Partitioning d d c e c Unsupervised Classification = Clustering d d c � We do not know the class labels and may not know the number d of classes – Objects are not labeled, i.e. there is no training data. ? ? ? ? ? – We need a notion of similarity or closeness (what features?) … 1 2 3 4 n – Should we know apriori how many clusters exist? ? ? ? ? ? – How do we characterize members of groups? … 1 2 3 4 – How do we label groups? ? 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 5 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 6 (Dr. O. Zaiane) (Dr. O. Zaiane) What is Clustering in Data Mining? Lecture Outline Part I: What is Clustering in Data Mining (30 minutes) Clustering is a process of partitioning a set of data (or objects) in a set of • Introduction to clustering meaningful sub-classes, called clusters . • Motivating Examples for clustering – Helps users understand the natural grouping or structure in a data set. • Taxonomy of Major Clustering Algorithms • Cluster: a collection of data objects that are “similar” to one another and thus • Major Issues in Clustering • What is Good Clustering? can be treated collectively as one group. Part II: Major Clustering Approaches (1 hour 20 minutes) • A good clustering method produces high quality clusters in which: • K-means (Partitioning-based clustering algorithm) • The intra-class (that is, intra intra-cluster) similarity is high. • Nearest Neighbor clustering algorithm • The inter-class similarity is low. • Hierarchical Clustering • The quality of a clustering result depends on both the • Density-based Clustering similarity measure used and its implementation. Part III: Open Problems (10 minutes) • Clustering = function that maximizes similarity between • Research Issues in Clustering objects within a cluster and minimizes similarity between objects in different clusters. 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 7 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 8 (Dr. O. Zaiane) (Dr. O. Zaiane)

Typical Clustering Applications Clustering Example – Fitting Troops • Fitting the troops – re-design of uniforms for female • As a stand-alone tool to soldiers in US army – get insight into data distribution – Goal: reduce the number of uniform sizes to be kept in – find the characteristics of each cluster inventory while still providing good fit – assign the cluster of a new example • Researchers from Cornell University used clustering • As a preprocessing step for other algorithms and designed a new set of sizes – e.g. numerosity reduction – using cluster centers to – Traditional clothing size system: ordered set of graduated sizes represent data in clusters where all dimensions increase together • It is a building block for many data mining – The new system: sizes that fit body types • E.g. one size for short-legged, small waisted, women with wide and solutions long torsos, average arms, broad shoulders, and skinny necks – e.g. Recommender systems – group users with similar behaviour or preferences to improve recommendation. 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 9 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 10 (Dr. O. Zaiane) (Dr. O. Zaiane) Other Examples of Clustering Applications Clustering Example - Houses • • Given a dataset it may be clustered on different Marketing – help discover distinct groups of customers, and then use this attributes. The result and its interpretation would be knowledge to develop targeted marketing programs different • Biology – derive plant and animal taxonomies – find genes with similar function • Land use – identify areas of similar land use in an earth observation database • Insurance Group of houses Clustered based on Clustered based on Clustered based on size – identify groups of motor insurance policy holders with a high average geographic distance value and value claim cost Definition of a distance function is highly application dependent • City-planning Properties of a distance function Measures “dissimilarity” between pairs dist ( x , y ) ≥ 0 – identify groups of houses according to their house type, value, and objects x and y dist ( x , y ) = 0 iff x = y • Small distance dist ( x , y ): objects x and y geographical location dist ( x , y ) = dist ( y , x ) (symmetry) are more similar If dist is a metric, which is often the case: • Large distance dist ( x , y ): objects x and y dist ( x , z ) ≤ dist ( x , y ) + dist ( y , z ) (triangle inequality) are less similar 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 11 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 12 (Dr. O. Zaiane) (Dr. O. Zaiane)

Five Categories of Clustering Methods Major Clustering Techniques • Partitioning algorithms : Construct various partitions and then • Clustering techniques have been studied extensively in: evaluate them by some criterion. (K-Means is the most known) – Statistics, machine learning, and data mining • Hierarchy algorithms : Create a hierarchical decomposition of with many methods proposed and studied. the set of data (or objects) using some criterion. There is an • Clustering methods can be classified into 5 approaches: agglomerative approach and a divisive approach. – partitioning algorithms • Density-based : based on connectivity and density functions. – hierarchical algorithms We will cover only these. • Grid-based : based on a multiple-level granularity structure. • Model-based : (Generative approach) A model is hypothesized – density-based methods for each of the clusters and the idea is to find the best fit of that – grid-based methods model to each other. Generative models estimated through maximum likelihood approach. (EM: Expectation Maximization – model-based methods with a Gaussian Mixture Model, is a typical example) 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 13 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 14 (Dr. O. Zaiane) (Dr. O. Zaiane) Important Issues in Clustering Important Issues in Clustering (2) • Different Types of Attributes • Noise and outlier Detection – Numerical: Generally can be represented in a – Differentiate remote points from internal ones. Euclidean Space. Distance can be used as a – Noisy points (errors in data) can artificially split measure of dissimilarity. (See classification slides for measures) or merge clusters. – Distinguishing remote noisy points or very – Categorical: A metric space may not be small unexpected clusters can be very definable. A similarity measure has to be important for the validity and quality of the defined. Jaccard ( ); Dice ( ); results. Overlap ( ); Cosine ( ) etc. – Noise can bias the results especially in the – Sequence aware similarity: eg. DNA calculation of cluster characteristics. sequences, web access behaviour. Can use Dynamic Programming. 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 15 33459-01: Principles of Knowledge Discovery in Data – March-June, 2006 16 (Dr. O. Zaiane) (Dr. O. Zaiane)

Recommend

More recommend