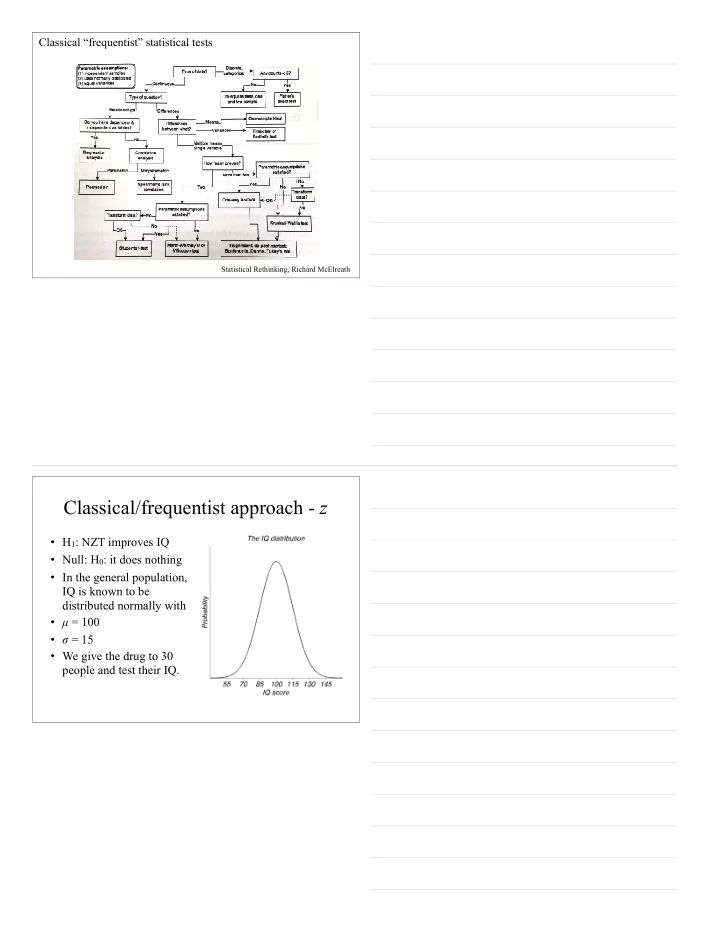

Classical “frequentist” statistical tests Statistical Rethinking, Richard McElreath Classical/frequentist approach - z • H 1 : NZT improves IQ • Null: H 0 : it does nothing • In the general population, IQ is known to be distributed normally with • µ = 100 • σ = 15 • We give the drug to 30 people and test their IQ.

The z -test • µ = 100 (Population mean) • σ = 15 (Population standard deviation) • N = 30 (Sample contains scores from 30 participants) • x = 108.3 (Sample mean) • z = ( x – µ )/SE = (108.3-100)/SE (Standardized score) • SE = σ / √ N = 15/ √ 30 = 2.74 • Error bar/CI: ±2 SE • z = 8.3/2.74 = 3.03 • p = 0.0012 • Significant? • One- vs. two-tailed test What if the measured effect of NZT had been half that? • µ = 100 (Population mean) • σ = 15 (Population standard deviation) • N = 30 (Sample contains scores from 30 participants) • x = 104.2 (Sample mean) • z = ( x – µ )/SE = (104.2-100)/SE • SE = σ / √ N = 15/ √ 30 = 2.74 • z = 4.2/2.74 = 1.53 • p = 0.061 • Significant?

Significance levels • Are denoted by the Greek letter α . • In principle, we can pick anything that we consider unlikely. • In practice, the consensus is that a level of 0.05 or 1 in 20 is considered as unlikely enough to reject H 0 and accept the alternative. • A level of 0.01 or 1 in 100 is considered “highly significant” or really unlikely. Does NZT improve IQ scores or not? Reality Yes No Type I error Correct α -error Yes Significant? False alarm Type II error β -error No Correct Miss

Test statistic • We calculate how far the observed value of the sample average is away from its expected value. • In units of standard error. • In this case, the test statistic is z = x − µ = x − µ SE σ / N • Compare to a distribution, in this case z or N (0,1) Common misconceptions Is “Statistically significant” a synonym for: • Substantial • Important • Big • Real Does statistical significance gives the • probability that the null hypothesis is true • probability that the null hypothesis is false • probability that the alternative hypothesis is true • probability that the alternative hypothesis is false Meaning of p -value. Meaning of CI.

Student’s t -test • σ not assumed known • Use N ( ) ∑ 2 x i − x s 2 = i = 1 N − 1 E ( s 2 ) = σ 2 • Why N -1? s is unbiased (unlike ML version), i.e., t = x − µ 0 • Test statistic is s / N • Compare to t distribution for CIs and NHST • “Degrees of freedom” reduced by 1 to N -1 The t distribution approaches the normal distribution for large N Probability x (z or t)

The z -test for binomial data • Is the coin fair? • Lean on central limit theorem • Sample is n heads out of m tosses p = n / m • Sample mean: ˆ • H 0 : p = 0.5 • Binomial variability (one toss): σ = pq , where q = 1 − p p − p 0 ˆ • Test statistic: z = p 0 q 0 / m • Compare to z (standard normal) • For CI, use ± z α /2 p ˆ ˆ q / m Many varieties of frequentist univariate tests • goodness of fit χ 2 • test of independence χ 2 χ 2 • test a variance using • F to compare variances (as a ratio) • Nonparametric tests (e.g., sign, rank-order, etc.)

The Gaussian • parameterized by mean and stdev (position / width) • joint density of two indep Gaussian RVs is circular! [easy] • product of two Gaussian dists is Gaussian! [easy] • conditionals of a Gaussian are Gaussian! [easy] • sum of Gaussian RVs is Gaussian! [moderate] • all marginals of a Gaussian are Gaussian! [moderate] • central limit theorem: sum of many RVs is Gaussian! [hard] • most random (max entropy) density with this variance! [moderate] true density 700 samples Measurement (sampling) Inference true mean: [0 0.8] sample mean: [-0.05 0.83] true cov: [1.0 -0.25 sample cov: [0.95 -0.23 -0.25 0.3] -0.23 0.29]

Correlation: summary of data cloud shape + - + - Correlation and regression TLS (largest eigenvector) Least-squares regression “Regression to the mean”

Correlation and regression corr= − 0.80 corr= − 0.40 corr=0.00 corr=0.40 corr=0.80 5 5 5 5 5 0 0 0 0 0 − 5 − 5 − 5 − 5 − 5 − 5 0 5 − 5 0 5 − 5 0 5 − 5 0 5 − 5 0 5 Independence implies uncorrelated, Statistical independence a stronger assumption uncorrelatedness ⇒ All independent variables are uncorrelated but uncorrelated doesn’t imply independent! ⇒ Not all uncorrelated variables are independent: r =

More extreme examples ! https://www.autodeskresearch.com/publications/samestats Correlation between variables does not explain their relationship

Correlation in 1.5 1.2 N=16 N=3 1 N dimensions 1 0.8 0.6 0.5 0.4 0.2 0 0 − 1 − 0.5 0 0.5 1 − 1 − 0.5 0 0.5 1 0.05 1.2 N=4 N=32 0.04 1 Null Hypothesis: 0.8 0.03 Distribution of 0.6 0.02 0.4 normalized 0.01 0.2 0 0 dot product of − 1 − 0.5 0 0.5 1 − 1 − 0.5 0 0.5 1 pairs of 0.025 0.08 Gaussian vectors N=8 N=64 0.02 0.06 in N dimensions: 0.015 0.04 0.01 0.02 N − 3 (1 − d 2 ) 0.005 2 0 0 − 1 − 0.5 0 0.5 1 − 1 − 0.5 0 0.5 1 1 1 Distribution of 2D 6D 0.8 0.8 angles of pairs of 0.6 0.6 Gaussian vectors 0.4 0.4 0.2 0.2 0 0 0 1 2 3 0 1 2 3 0.8 1.5 3D 10D 0.6 1 0.4 0.5 0.2 sin(theta)^(N-2) 0 0 0 1 2 3 0 1 2 3 2 0.8 4D 18D 1.5 0.6 1 0.4 0.5 0.2 0 0 0 1 2 3 0 1 2 3

Per capita cheese consumption correlates with Number of people who died by becoming tangled in their bedsheets Correlation does 2000 2001 2002 2003 2004 2005 2006 2007 2008 2009 not imply causation 800 deaths 33lbs Cheese consumed Bedsheet tanglings 600 deaths 31.5lbs 30lbs 400 deaths 28.5lbs 200 deaths 2000 2001 2002 2003 2004 2005 2006 2007 2008 2009 Bedsheet tanglings Cheese consumed tylervigen.com Worldwide non-commercial space launches correlates with Sociology doctorates awarded (US) Worldwide non-commercial space launches 1997 1998 1999 2000 2001 2002 2003 2004 2005 2006 2007 2008 2009 60 Launches 700 Degrees awarded Sociology doctorates awarded (US) 650 Degrees awarded 50 Launches 600 Degrees awarded 40 Launches 550 Degrees awarded 30 Launches 500 Degrees awarded 1997 1998 1999 2000 2001 2002 2003 2004 2005 2006 2007 2008 2009 Sociology doctorates awarded (US) Worldwide non-commercial space launches tylervigen.com Letters in Winning Word of Scripps National Spelling Bee correlates with Number of people killed by venomous spiders Number of people killed by venomous spiders 1999 2000 2001 2002 2003 2004 2005 2006 2007 2008 2009 15 letters 15 deaths Spelling Bee winning word 10 deaths 10 letters 5 deaths 5 letters 0 deaths 1999 2000 2001 2002 2003 2004 2005 2006 2007 2008 2009 http://www.tylervigen.com/spurious-correlations Number of people killed by venomous spiders Spelling Bee winning word tylervigen.com Correlation does not imply causation • Beware selection bias • Correlation does not provide a direction for causality. For that, you need additional (temporal) information. • More generally, correlations are often a result of hidden (unmeasured, uncontrolled) variables… H Example: conditional independence: + + p(A,B | H) = p(A | H) p(B | H) A B [on board: In Gaussian case, connections are explicit in the Precision Matrix]

Another example: Simpson’s paradox H + - + A B Milton Friedman’s Thermostat True interactions: O = outside temperature (assumed cold) - I = inside temperature (ideally, constant) E = energy used for heating O E + + Statistical interactions, P=C -1 : Statistical observations: I - • O and I uncorrelated • I and E uncorrelated O E • O and E anti-correlated I Some nonsensical conclusions: • O and E have no effect on I, so shut off heater to save money! • I is irrelevant, and can be ignored. Increases in E cause decreases in O. Statistical summary cannot replace scientific reasoning/experiments!

Summary: misinterpretations of Correlation • Correlation => dependency, but non-correlation does not imply independence • Correlation does not imply data lie on a line (subspace), with noise perturbations • Correlation does not imply causation (temporally, or by direct influence) • Correlation is only a descriptive statistic, and cannot replace the need for scientific reasoning/experiment Taxonomy of model-fitting errors • Optimization failures (e.g., local minima) [prefer convex objective, test with simulations] • Overfitting [use cross-validation to select complexity, or to control regularization] • Experimental variability (due to finite noisy measurements) [use math/distributional assumptions, or simulations, or bootstrapping] • Model failures

Optimization... Heuristics, exhaustive search, (pain & suffering) Smooth (C 2 ) Iterative descent, (possible local Convex minima) Quadratic Iterative descent, unique Closed-form, and unique statAnMod - 9/12/07 - E.P. Simoncelli Bootstrapping • “The Baron had fallen to the bottom of a deep lake. Just when it looked like all was lost, he thought to pick himself up by his own bootstraps” [Adventures of Baron von Munchausen, by Rudolph Erich Raspe] • A ( re)sampling method for computing estimator distribution (incl. stdev error bars or confidence intervals) • Idea: instead of running experiment multiple times, resample (with replacement) from the existing data. Compute an estimate from each of these “bootstrapped” data sets.

Recommend

More recommend