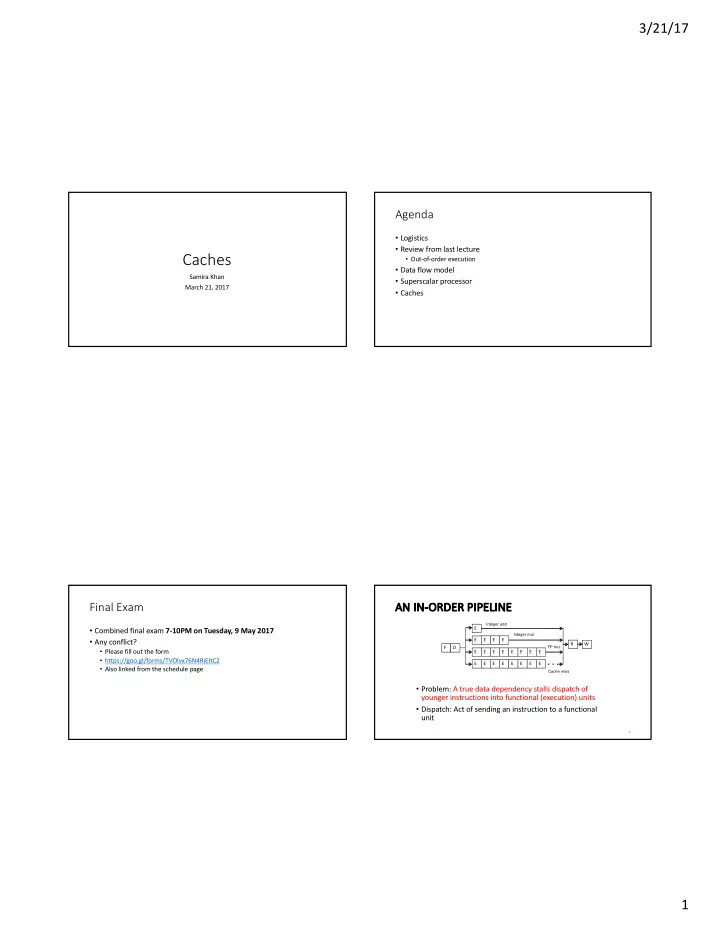

3/21/17

Caches
Samira Khan
March 21, 2017

Agenda
• Logistics
• Review from last lecture
• Out-of-order execution
• Data flow model
• Superscalar processor
• Caches

Final Exam
• Combined final exam 7-10PM on Tuesday, 9 May 2017
  • Any conflict?
  • Please fill out the form
  • https://goo.gl/forms/TVOlvx76N4RiEItC2
  • Also linked from the schedule page

AN IN-ORDER PIPELINE
[Figure: pipeline stages F, D, E, R, W. The E stage fans out into parallel functional units of different latency: integer add (one E stage), integer mul (four E stages), FP mul (eight E stages), and a cache miss (eight E stages shown, preceded by an ellipsis).]
• Problem: A true data dependency stalls dispatch of younger instructions into functional (execution) units
• Dispatch: Act of sending an instruction to a functional unit

CAN WE DO BETTER?
• What do the following two pieces of code have in common (with respect to execution in the previous design)?

    IMUL R3 ← R1, R2          LD   R3 ← R1 (0)
    ADD  R3 ← R3, R1          ADD  R3 ← R3, R1
    ADD  R1 ← R6, R7          ADD  R1 ← R6, R7
    IMUL R5 ← R6, R8          IMUL R5 ← R6, R8
    ADD  R7 ← R9, R9          ADD  R7 ← R9, R9

• Answer: First ADD stalls the whole pipeline!
  • ADD cannot dispatch because its source registers unavailable
  • Later independent instructions cannot get executed
• How are the above code portions different?
  • Answer: Load latency is variable (unknown until runtime)
  • What does this affect? Think compiler vs. microarchitecture

IN-ORDER VS. OUT-OF-ORDER DISPATCH
• In order dispatch + precise exceptions:

    IMUL R3 ← R1, R2    F D E E E E R W
    ADD  R3 ← R3, R1    F D STALL E R W
    ADD  R1 ← R6, R7    F STALL D E R W
    IMUL R5 ← R6, R8    F D E E E E E R W
    ADD  R7 ← R3, R5    F D STALL E R W

• Out-of-order dispatch + precise exceptions:

    IMUL R3 ← R1, R2    F D E E E E R W
    ADD  R3 ← R3, R1    F D WAIT E R W
    ADD  R1 ← R6, R7    F D E R W
    IMUL R5 ← R6, R8    F D E E E E R W
    ADD  R7 ← R3, R5    F D WAIT E R W

• 16 vs. 12 cycles

TOMASULO’S ALGORITHM
• OoO with register renaming invented by Robert Tomasulo
  • Used in IBM 360/91 Floating Point Units
  • Tomasulo, “An Efficient Algorithm for Exploiting Multiple Arithmetic Units,” IBM Journal of R&D, Jan. 1967
• What is the major difference today?
  • Precise exceptions: IBM 360/91 did NOT have this
  • Patt, Hwu, Shebanow, “HPS, a new microarchitecture: rationale and introduction,” MICRO 1985.
  • Patt et al., “Critical issues regarding HPS, a high performance microarchitecture,” MICRO 1985.

Out-of-Order Execution with Precise Exception
• Variants are used in most high-performance processors
• Initially in Intel Pentium Pro, AMD K5
• Alpha 21264, MIPS R10000, IBM POWER5, IBM z196, Oracle UltraSPARC T4, ARM Cortex A15
• The Pentium Chronicles: The People, Passion, and Politics Behind Intel's Landmark Chips by Robert P. Colwell
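The in-order versus out-of-order dispatch comparison above can be explored with a small timing model. The following is only a sketch under simplifying assumptions that are not in the slides: one instruction is fetched per cycle, an instruction can enter execute no earlier than two cycles after its fetch, results are forwarded as soon as execute ends, functional units are unlimited, and only true (read-after-write) dependencies are tracked, as if registers were renamed. The `Instr` record and `last_finish_cycle` are hypothetical names introduced for illustration.

```c
#include <assert.h>

#define NUM_REGS 16

/* Hypothetical instruction record: destination, two sources, E-stage latency. */
typedef struct {
    int dst, src1, src2;
    int latency;
} Instr;

static int max_int(int a, int b) { return a > b ? a : b; }

/*
 * Returns the first cycle after the last instruction has left execute.
 * Assumed model (not from the slides): instruction i is fetched in cycle i,
 * decoded in cycle i + 1, and may start executing in cycle i + 2 or later.
 * With in_order != 0, an instruction may not start executing before the
 * instruction ahead of it has started. With in_order == 0, it only waits
 * for its own source operands.
 */
int last_finish_cycle(const Instr *prog, int n, int in_order)
{
    int ready[NUM_REGS] = {0};  /* cycle at which each register value is available */
    int prev_start = 0;
    int last = 0;

    for (int i = 0; i < n; i++) {
        const Instr *ins = &prog[i];
        assert(ins->dst >= 0 && ins->dst < NUM_REGS);
        assert(ins->src1 >= 0 && ins->src1 < NUM_REGS);
        assert(ins->src2 >= 0 && ins->src2 < NUM_REGS);

        int start = i + 2;
        start = max_int(start, ready[ins->src1]);   /* wait for true dependencies */
        start = max_int(start, ready[ins->src2]);
        if (in_order && i > 0)
            start = max_int(start, prev_start + 1); /* cannot pass an older instruction */

        int finish = start + ins->latency;
        ready[ins->dst] = finish;
        prev_start = start;
        last = max_int(last, finish);
    }
    return last;
}
```

For the five-instruction sequence IMUL, ADD, ADD, IMUL, ADD from the slide, with assumed latencies of 4 cycles for IMUL and 1 cycle for ADD, this model returns 13 for in-order dispatch and 10 for out-of-order dispatch. These numbers are not meant to reproduce the slide's 16 vs. 12 cycles, since the model leaves out the R and W stages and uses its own timing conventions; it only shows that the independent instructions no longer wait behind the stalled ADD.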

The Von Neumann Model/Architecture
• Also called stored program computer (instructions in memory). Two key properties:
• Stored program
  • Instructions stored in a linear memory array
  • Memory is unified between instructions and data
  • The interpretation of a stored value depends on the control signals
  • When is a value interpreted as an instruction?
• Sequential instruction processing
  • One instruction processed (fetched, executed, and completed) at a time
  • Program counter (instruction pointer) identifies the current instr.
  • Program counter is advanced sequentially except for control transfer instructions

The Dataflow Model (of a Computer)
• Von Neumann model: An instruction is fetched and executed in control flow order
  • As specified by the instruction pointer
  • Sequential unless explicit control flow instruction
• Dataflow model: An instruction is fetched and executed in data flow order
  • i.e., when its operands are ready
  • i.e., there is no instruction pointer
  • Instruction ordering specified by data flow dependence
  • Each instruction specifies “who” should receive the result
  • An instruction can “fire” whenever all operands are received
  • Potentially many instructions can execute at the same time
  • Inherently more parallel

Von Neumann vs Dataflow
• Consider a Von Neumann program
  • What is the significance of the program order?
  • What is the significance of the storage locations?

    v <= a + b;
    w <= b * 2;
    x <= v - w
    y <= v + w
    z <= x * y

[Figure: the program shown as a sequential instruction list (labeled Sequential) next to its data flow graph (labeled Dataflow), with inputs a and b, nodes +, *2, -, + and *, and output z.]
• Which model is more natural to you as a programmer?
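The firing rule described above (a node fires once all of its operands have arrived, and sends its result to the nodes that need it) can be sketched in C for the five-statement program v, w, x, y, z. This is a minimal illustration, not a description of any real dataflow machine: the `Node` layout, the round-based scheduling, and all function names are assumptions made for the example.

```c
#include <assert.h>

#define MAX_NODES 8

enum Op { OP_ADD, OP_SUB, OP_MUL };

/* Hypothetical node layout: two operand slots plus up to two receivers. */
typedef struct {
    enum Op op;
    int in[2];         /* operand values */
    int have[2];       /* 1 once a token has arrived on that port */
    int fired;
    int dest[2];       /* receiving node index, or -1 for none */
    int dest_port[2];  /* operand port at the receiver */
} Node;

static void send_token(Node *g, int node, int port, int value)
{
    g[node].in[port] = value;
    g[node].have[port] = 1;
}

static int apply(enum Op op, int x, int y)
{
    switch (op) {
    case OP_ADD: return x + y;
    case OP_SUB: return x - y;
    default:     return x * y;
    }
}

/* Fires every ready node once per round and returns the number of rounds.
 * The value produced by the last node to fire is stored in *result. */
int run_dataflow(Node *g, int n, int *result)
{
    int rounds = 0;
    assert(n <= MAX_NODES);
    for (;;) {
        int ready[MAX_NODES];
        int nready = 0;
        /* Collect first, so nodes enabled in this round fire in the next one. */
        for (int i = 0; i < n; i++)
            if (!g[i].fired && g[i].have[0] && g[i].have[1])
                ready[nready++] = i;
        if (nready == 0)
            break;
        for (int k = 0; k < nready; k++) {
            Node *nd = &g[ready[k]];
            int value = apply(nd->op, nd->in[0], nd->in[1]);
            nd->fired = 1;
            *result = value;
            for (int d = 0; d < 2; d++)
                if (nd->dest[d] >= 0)
                    send_token(g, nd->dest[d], nd->dest_port[d], value);
        }
        rounds++;
    }
    return rounds;
}

/* Builds and runs the slide's program: v=a+b, w=b*2, x=v-w, y=v+w, z=x*y. */
int example_z(int a, int b, int *rounds)
{
    Node g[5] = {
        { OP_ADD, {0, 0}, {0, 0}, 0, {2, 3},   {0, 0} },  /* 0: v -> x.0, y.0 */
        { OP_MUL, {0, 0}, {0, 0}, 0, {2, 3},   {1, 1} },  /* 1: w -> x.1, y.1 */
        { OP_SUB, {0, 0}, {0, 0}, 0, {4, -1},  {0, 0} },  /* 2: x -> z.0 */
        { OP_ADD, {0, 0}, {0, 0}, 0, {4, -1},  {1, 0} },  /* 3: y -> z.1 */
        { OP_MUL, {0, 0}, {0, 0}, 0, {-1, -1}, {0, 0} },  /* 4: z, no receiver */
    };
    int z = 0;
    send_token(g, 0, 0, a);  /* a -> v.0 */
    send_token(g, 0, 1, b);  /* b -> v.1 */
    send_token(g, 1, 0, b);  /* b -> w.0 */
    send_token(g, 1, 1, 2);  /* constant 2 -> w.1 */
    *rounds = run_dataflow(g, 5, &z);
    return z;
}
```

Calling `example_z(3, 1, &rounds)` yields z = 12 in three rounds: v and w fire together, then x and y, then z. Five sequential instructions collapse into three steps because only data dependences order the nodes.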

More on Data Flow
• In a data flow machine, a program consists of data flow nodes
• A data flow node fires (is fetched and executed) when all its inputs are ready
  • i.e. when all inputs have tokens
• Data flow node and its ISA representation
[Figure: a data flow node and its ISA representation.]

Data Flow Nodes
[Figure: types of data flow nodes.]

An Example
What does this model perform?
[Figure: data flow graph with inputs A and B feeding an XOR node, an =0? test, T/F switch nodes, copy nodes, constants 0 and 1, +, - and AND nodes, and an output labeled ANSWER. Legend: c is a copy node; the node with inputs X, Y and output Z gives initially Z=X, then Z=Y; the - node computes Z=X-Y.]

What does this model perform?
[Figure: the same data flow graph, annotated step by step across three slides with val = a ^ b, val != 0, val &= val - 1, dist = 0 and dist++.]

Hamming Distance

    int hamming_distance(unsigned a, unsigned b) {
        int dist = 0;
        unsigned val = a ^ b;

        // Count the number of bits set
        while (val != 0) {
            // A bit is set, so increment the count and clear the bit
            dist++;
            val &= val - 1;
        }

        // Return the number of differing bits
        return dist;
    }
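The step `val &= val - 1` in the loop above is what makes it run once per set bit rather than once per bit position. A minimal sketch of that step in isolation follows; the helper names `clear_lowest_set_bit` and `count_set_bits` are introduced here for illustration and are not part of the slides.

```c
#include <assert.h>

/* Clears the lowest set bit of val (val must be nonzero). */
unsigned clear_lowest_set_bit(unsigned val)
{
    assert(val != 0);
    /* Subtracting 1 turns the lowest set bit into 0 and every bit below it
     * into 1. ANDing with the original keeps only the bits above it. */
    return val & (val - 1);
}

/* Counts set bits by repeatedly clearing the lowest one. */
int count_set_bits(unsigned val)
{
    int count = 0;
    while (val != 0) {
        val = clear_lowest_set_bit(val);
        count++;
    }
    return count;
}
```

For example, 20 is 10100 in binary and 19 is 10011, so `clear_lowest_set_bit(20)` gives 10000, which is 16. Applying `count_set_bits` to `a ^ b` gives the same result as `hamming_distance(a, b)`.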

RICHARD HAMMING
• Best known for Hamming Code
• Won Turing Award in 1968
• Was part of the Manhattan Project
• Worked in Bell Labs for 30 years
• “You and Your Research” is mainly his advice to other researchers
  • Had given the talk many times during his lifetime
  • http://www.cs.virginia.edu/~robins/YouAndYourResearch.html

Hamming Distance
• Number of positions at which the corresponding symbols are different.
• The Hamming distance between:
  • "karolin" and "kathrin" is 3
  • 1011101 and 1001001 is 2
  • 2173896 and 2233796 is 3

Data Flow Advantages/Disadvantages
• Advantages
  • Very good at exploiting irregular parallelism
  • Only real dependencies constrain processing
• Disadvantages
  • Debugging difficult (no precise state)
  • Interrupt/exception handling is difficult (what is precise state semantics?)
  • Too much parallelism? (Parallelism control needed)
  • High bookkeeping overhead (tag matching, data storage)
  • Memory locality is not exploited

OOO EXECUTION: RESTRICTED DATAFLOW
• An out-of-order engine dynamically builds the dataflow graph of a piece of the program
  • which piece?
• The dataflow graph is limited to the instruction window
  • Instruction window: all decoded but not yet retired instructions
• Can we do it for the whole program?
• Why would we like to?
• In other words, how can we have a large instruction window?
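The three examples on the Hamming Distance slide compare symbols rather than bits, so they can be checked with a position-by-position comparison of two strings. The sketch below is one way to do that; the function name `hamming_distance_str` and the choice to return -1 for strings of unequal length are assumptions made for this example.

```c
#include <assert.h>
#include <string.h>

/* Returns the number of positions at which s and t differ, or -1 if the
 * strings have different lengths (a convention assumed for this sketch). */
int hamming_distance_str(const char *s, const char *t)
{
    assert(s != NULL && t != NULL);
    size_t n = strlen(s);
    if (strlen(t) != n)
        return -1;

    int dist = 0;
    for (size_t i = 0; i < n; i++)
        if (s[i] != t[i])
            dist++;
    return dist;
}
```

With this definition, `hamming_distance_str("karolin", "kathrin")` is 3, `hamming_distance_str("1011101", "1001001")` is 2, and `hamming_distance_str("2173896", "2233796")` is 3, matching the slide.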

Superscalar Processor
[Figure: scalar pipeline, four instructions each passing through stages F D E M W.]
Each instruction still takes 5 cycles, but instructions now complete every cycle: CPI → 1
[Figure: superscalar pipeline, six instructions each passing through stages F D E M W, two at a time.]
Each instruction still takes 5 cycles, but two instructions now complete every cycle: CPI → 0.5

Superscalar Processor
• Ideally: in an n-issue superscalar, n instructions are fetched, decoded, executed, and committed per cycle
• In practice:
  • Data, control, and structural hazards spoil issue flow
  • Multi-cycle instructions spoil commit flow
• Buffers at issue (issue queue) and commit (reorder buffer)
  • Decouple these stages from the rest of the pipeline and regularize somewhat breaks in the flow

Problems?
• Fetch
  • May be located at different cachelines
  • More than one cache lookup is required in the same cycle
  • What if there are branches?
  • Branch prediction is required within the instruction fetch stage
• Decode/Execute
  • Replicate (ok)
• Issue
  • Number of dependence tests increases quadratically (bad)
• Register read/write
  • Number of register ports increases linearly (bad)
• Bypass/forwarding
  • Increases quadratically (bad)
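The quadratic growth of dependence tests at issue can be made concrete by counting register comparisons within one issue group. The sketch below assumes every instruction has one destination and two source registers, and that each instruction's sources are compared against the destinations of all older instructions in the same group. The `Op` record, the function name, and the policy of reporting the independent prefix of the group are illustrative assumptions, not a description of a specific processor.

```c
#include <assert.h>

/* Hypothetical decoded instruction: one destination, two source registers. */
typedef struct {
    int dst, src1, src2;
} Op;

/*
 * Checks an issue group for read-after-write dependences inside the group.
 * Returns how many instructions at the front of the group have no dependence
 * on an older instruction in the group. *comparisons receives the number of
 * register comparisons performed, which is n * (n - 1) for two-source
 * instructions.
 */
int independent_prefix(const Op *group, int n, int *comparisons)
{
    int prefix = n;
    *comparisons = 0;
    for (int i = 1; i < n; i++) {
        for (int j = 0; j < i; j++) {
            /* Two comparisons per (younger, older) pair: one per source. */
            *comparisons += 2;
            int depends = group[i].src1 == group[j].dst ||
                          group[i].src2 == group[j].dst;
            if (depends && i < prefix)
                prefix = i;
        }
    }
    return prefix;
}
```

Under these assumptions a 2-issue group needs 2 comparisons, a 4-issue group needs 12, and an 8-issue group needs 56, while the number of decoders and execution units only grows linearly with n.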

Memory in a Modern System
[Figure: chip floorplan with CORE 0 to CORE 3, L2 CACHE 0 to L2 CACHE 3, a SHARED L3 CACHE, the DRAM MEMORY CONTROLLER and DRAM INTERFACE, connected to off-chip DRAM BANKS.]

The Memory Hierarchy

Ideal Memory
• Zero access time (latency)
• Infinite capacity
• Zero cost
• Infinite bandwidth (to support multiple accesses in parallel)

The Problem
• Ideal memory’s requirements oppose each other
• Bigger is slower
  • Bigger → Takes longer to determine the location
• Faster is more expensive
  • Memory technology: SRAM vs. DRAM vs. Disk vs. Tape
• Higher bandwidth is more expensive
  • Need more banks, more ports, higher frequency, or faster technology
