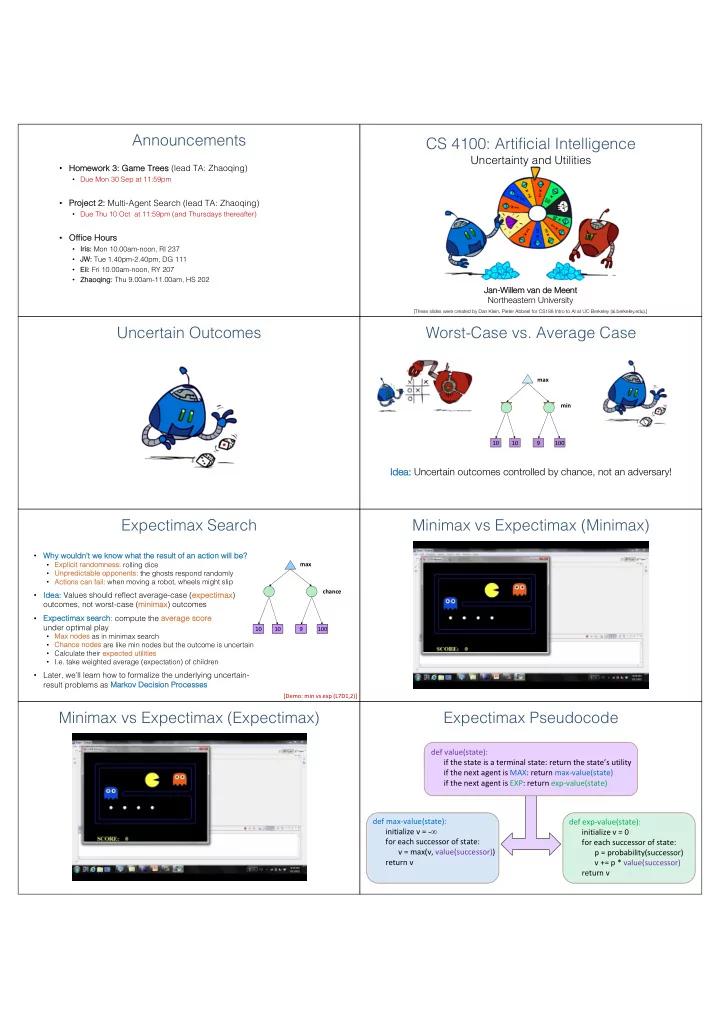

CS 4100: Artificial Intelligence
Uncertainty and Utilities
Jan-Willem van de Meent
Northeastern University
[These slides were created by Dan Klein, Pieter Abbeel for CS188 Intro to AI at UC Berkeley (ai.berkeley.edu).]

Announcements
• Homework 3: Game Trees (lead TA: Zhaoqing)
  • Due Mon 30 Sep at 11:59pm
• Project 2: Multi-Agent Search (lead TA: Zhaoqing)
  • Due Thu 10 Oct at 11:59pm (and Thursdays thereafter)
• Office Hours
  • Iris: Mon 10.00am-noon, RI 237
  • JW: Tue 1.40pm-2.40pm, DG 111
  • Eli: Fri 10.00am-noon, RY 207
  • Zhaoqing: Thu 9.00am-11.00am, HS 202

Uncertain Outcomes

Worst-Case vs. Average Case
[Figure: game tree with max and min layers; values shown: 10, 10, 9, 100]
Idea: Uncertain outcomes controlled by chance, not an adversary!

Expectimax Search
• Why wouldn’t we know what the result of an action will be?
  • Explicit randomness: rolling dice
  • Unpredictable opponents: the ghosts respond randomly
  • Actions can fail: when moving a robot, wheels might slip
• Idea: Values should reflect average-case (expectimax) outcomes, not worst-case (minimax) outcomes
• Expectimax search: compute the average score under optimal play
  • Max nodes as in minimax search
  • Chance nodes are like min nodes but the outcome is uncertain
  • Calculate their expected utilities
  • I.e. take weighted average (expectation) of children
• Later, we’ll learn how to formalize the underlying uncertain-result problems as Markov Decision Processes
[Figure: game tree with max and chance layers; values shown: 10, 10, 10, 4, 9, 5, 100, 7]
[Demo: min vs exp (L7D1,2)]

Minimax vs Expectimax (Minimax)

Minimax vs Expectimax (Expectimax)

Expectimax Pseudocode

    def value(state):
        if the state is a terminal state: return the state’s utility
        if the next agent is MAX: return max-value(state)
        if the next agent is EXP: return exp-value(state)

    def max-value(state):
        initialize v = -∞
        for each successor of state:
            v = max(v, value(successor))
        return v

    def exp-value(state):
        initialize v = 0
        for each successor of state:
            p = probability(successor)
            v += p * value(successor)
        return v
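The pseudocode above can be sketched in Python roughly as follows. This is a minimal sketch, not the course's reference implementation: the tuple-based tree encoding and the `uniform` helper are assumptions made for illustration. The leaf values are borrowed from the deck's Expectimax Example slide, assuming a max root over three chance nodes with uniform 1/3 probabilities.

```python
import math

# Hypothetical tree encoding (not from the slides):
#   a number                    -> terminal state; the number is its utility
#   ("max", [child, ...])       -> MAX node
#   ("exp", [(p, child), ...])  -> chance node; p is the child's probability

def value(state):
    """Dispatch on node type, mirroring value(state) in the pseudocode."""
    if isinstance(state, (int, float)):
        return state
    kind, children = state
    if kind == "max":
        return max_value(children)
    if kind == "exp":
        return exp_value(children)
    raise ValueError(f"unknown node type: {kind!r}")

def max_value(children):
    """Best value over all successors."""
    v = -math.inf
    for child in children:
        v = max(v, value(child))
    return v

def exp_value(children):
    """Probability-weighted average over all successors."""
    v = 0.0
    for p, child in children:
        v += p * value(child)
    return v

def uniform(*leaves):
    """Illustrative helper: chance node with equally likely children."""
    p = 1 / len(leaves)
    return ("exp", [(p, leaf) for leaf in leaves])

# Assumed shape: max root over three uniform chance nodes.
tree = ("max", [uniform(3, 12, 9), uniform(2, 4, 6), uniform(15, 6, 0)])
print(value(tree))
```

Under these assumptions the three chance nodes average to about 8, 4, and 7, so the root evaluates to about 8 (up to floating-point rounding).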

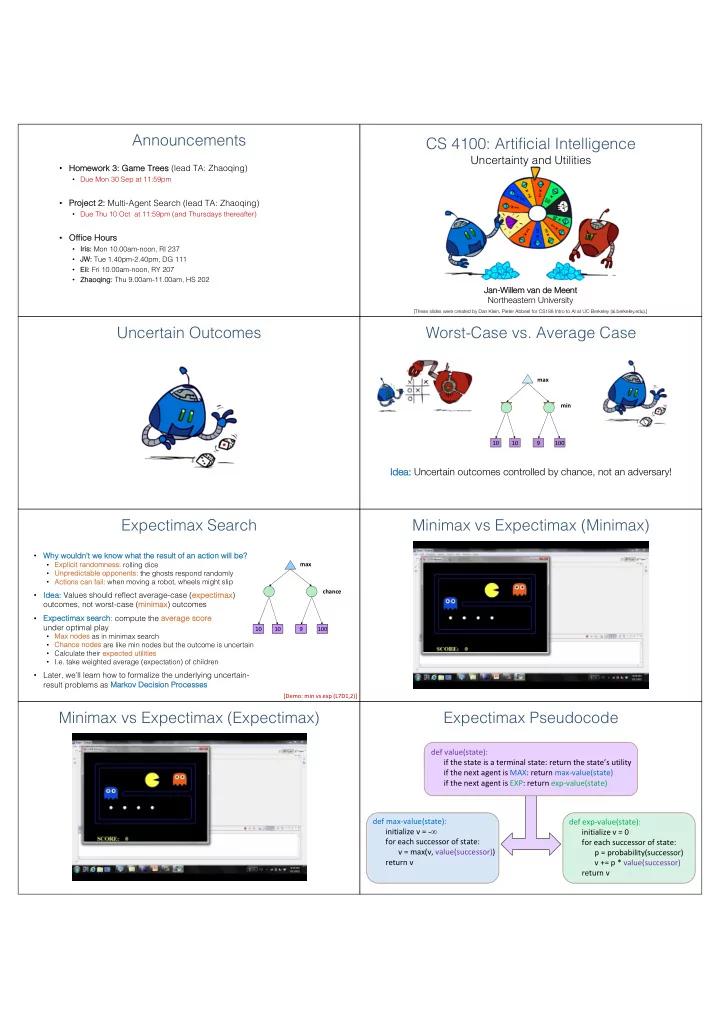

Expectimax Pseudocode

    def exp-value(state):
        initialize v = 0
        for each successor of state:
            p = probability(successor)
            v += p * value(successor)
        return v

[Figure: chance node with branch probabilities 1/2, 1/3, 1/6 and child values 8, 24, -12]
v = (1/2) (8) + (1/3) (24) + (1/6) (-12) = 10

Expectimax Example
[Figure: tree with nine branches, each with probability 1/3; leaf values 3, 12, 9, 2, 4, 6, 15, 6, 0]

Expectimax Pruning?
[Figure: partially explored tree; leaf values 3, 12, 9, 2, …]

Depth-Limited Expectimax
[Figure: depth-limited expectimax tree; values shown: 400, 300, 492, 362. Caption: estimate of true expectimax value (which would require a lot of work to compute)]

Probabilities

Reminder: Probabilities
• A random variable represents an event whose outcome is unknown
• A probability distribution assigns weights to outcomes
• Example: Traffic on freeway
  • Random variable: T = amount of traffic
  • Outcomes: T ∈ {none, light, heavy}
  • Distribution: P(T=none) = 0.25, P(T=light) = 0.50, P(T=heavy) = 0.25
• Some laws of probability (more later):
  • Probabilities are always non-negative
  • Probabilities over all possible outcomes sum to one
• As we get more evidence, probabilities may change:
  • P(T=heavy) = 0.25, P(T=heavy | Hour=8am) = 0.60
  • We’ll talk about methods for reasoning and updating probabilities later

Reminder: Expectations
• The expected value of a function f(X) of a random variable X is a weighted average over outcomes.
• Example: How long to get to the airport?

| Time | Probability |
| --- | --- |
| 20 min | 0.25 |
| 30 min | 0.50 |
| 60 min | 0.25 |

0.25 x 20 min + 0.50 x 30 min + 0.25 x 60 min = 35 min

What Probabilities to Use?
• In expectimax search, we have a probabilistic model of the opponent (or environment)
  • Model could be a simple uniform distribution (roll a die)
  • Model could be sophisticated and require a great deal of computation
  • We have a chance node for any outcome out of our control: opponent or environment
  • The model might say that adversarial actions are likely!
• For now, assume each chance node “magically” comes along with probabilities that specify the distribution over its outcomes

Having a probabilistic belief about another agent’s action does not mean that the agent is flipping any coins!
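The two weighted averages worked in this part of the deck (the chance node with values 8, 24, -12 and the airport travel time) can be checked with a few lines of Python. This is a small sketch; the `expectation` function and the list-of-pairs representation are illustrative choices, not something the slides define. Exact fractions are used so the results are not affected by floating-point rounding.

```python
from fractions import Fraction

def expectation(distribution):
    """Weighted average of outcomes.

    distribution is a list of (probability, value) pairs; the probabilities
    must sum to one, as the laws of probability above require.
    """
    total = sum(p for p, _ in distribution)
    if total != 1:
        raise ValueError("probabilities must sum to one")
    return sum(p * x for p, x in distribution)

# Chance node from the exp-value example: (1/2)(8) + (1/3)(24) + (1/6)(-12)
chance_node = [(Fraction(1, 2), 8), (Fraction(1, 3), 24), (Fraction(1, 6), -12)]

# Airport travel times in minutes with their probabilities
airport = [(Fraction(1, 4), 20), (Fraction(1, 2), 30), (Fraction(1, 4), 60)]

print(expectation(chance_node))  # 10
print(expectation(airport))      # 35
```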

Quiz: Informed Probabilities
• Let’s say you know that your opponent is actually running a depth 2 minimax, using the result 80% of the time, and moving randomly otherwise
• Question: What tree search should you use?
• Answer: Expectimax!
  • To compute EACH chance node’s probabilities, you have to run a simulation of your opponent
  • This kind of thing gets very slow very quickly
  • Even worse if you have to simulate your opponent simulating you…
  • … except for minimax, which has the nice property that it all collapses into one game tree
[Figure: chance node with branch probabilities 0.1 and 0.9]

Modeling Assumptions

The Dangers of Optimism and Pessimism
• Dangerous Optimism: Assuming chance when the world is adversarial
• Dangerous Pessimism: Assuming the worst case when it’s not likely

Assumptions vs. Reality

| | Adversarial Ghost | Random Ghost |
| --- | --- | --- |
| Minimax Pacman | Won 5/5, Avg. Score: 483 | Won 5/5, Avg. Score: 493 |
| Expectimax Pacman | Won 1/5, Avg. Score: -303 | Won 5/5, Avg. Score: 503 |

Results from playing 5 games
Pacman used depth 4 search with an eval function that avoids trouble
Ghost used depth 2 search with an eval function that seeks Pacman
[Demos: world assumptions (L7D3,4,5,6)]

Adversarial Ghost vs. Minimax Pacman

Random Ghost vs. Expectimax Pacman

Adversarial Ghost vs. Expectimax Pacman
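For the quiz's opponent model (minimax result 80% of the time, a random move otherwise), the probabilities at one chance node could be built as sketched below. Several things here are assumptions rather than statements from the slides: the function name, the move labels, that "moving randomly" means uniform over all legal moves (including the minimax move), and that the opponent's depth 2 minimax choice has already been computed by a separate simulation and is passed in as `minimax_move`.

```python
from fractions import Fraction

def opponent_move_distribution(legal_moves, minimax_move, p_minimax=Fraction(4, 5)):
    """Return {move: probability} for one chance node.

    Assumed model: with probability p_minimax the opponent plays minimax_move;
    otherwise it picks uniformly among all legal moves.
    """
    if minimax_move not in legal_moves:
        raise ValueError("minimax_move must be one of legal_moves")
    # Probability mass each move receives from the random part of the policy.
    p_random_each = (1 - p_minimax) / len(legal_moves)
    dist = {move: p_random_each for move in legal_moves}
    # The minimax move additionally receives the deliberate part.
    dist[minimax_move] += p_minimax
    return dist

# Hypothetical two-move situation where minimax would choose "right".
dist = opponent_move_distribution(["left", "right"], minimax_move="right")
print(dist["left"], dist["right"])  # 1/10 9/10
```

With two legal moves this yields 0.1 and 0.9, which appears consistent with the labels in the quiz figure, although the slide does not say how those numbers were derived. The expensive step the slide warns about is producing `minimax_move` for every chance node, which this sketch deliberately leaves out.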

Random Ghost vs. Minimax Pacman

Other Game Types

Mixed Layer Types
• E.g. Backgammon
• Expectiminimax
  • Environment is an extra “random agent” player that moves after each min/max agent
  • Each node computes the appropriate combination of its children

Example: Backgammon
• Dice rolls increase breadth: 21 outcomes with 2 dice
  • Backgammon: ~20 legal moves
  • Depth 2: 20 x (21 x 20)^3 ≈ 1.5 x 10^9
• As depth increases, probability of reaching a given search node shrinks
  • So usefulness of search is diminished
  • So limiting depth is less damaging
  • But pruning is trickier…
• Historic AI: TDGammon uses depth-2 search + very good evaluation function + reinforcement learning: world-champion level play
• 1st AI world champion in any game!
Image: Wikipedia

Multi-Agent Utilities
• What if the game is not zero-sum, or has multiple players?
• Generalization of minimax:
  • Terminals have utility tuples (one for each agent)
  • Node values are also utility tuples
  • Each player maximizes its own component
  • Can give rise to cooperation and competition dynamically…
[Figure: three-player game tree; terminal utility tuples: 1,6,6  7,1,2  6,1,2  7,2,1  5,1,7  1,5,2  7,7,1  5,2,5]

Utilities

Maximum Expected Utility
• Why should we average utilities? Why not minimax?
  • Minimax will be overly risk-averse in most settings.
• Principle of maximum expected utility
  • A rational agent should choose the action that maximizes its expected utility, given its knowledge of the world.
• Questions:
  • Where do utilities come from?
  • How do we know such utilities even exist?
  • How do we know that averaging even makes sense?
  • What if our behavior (preferences) can’t be described by utilities?

What Utilities to Use?
[Figure: leaf values 0, 40, 20, 30 are mapped by x^2 to 0, 1600, 400, 900]
• For worst-case minimax reasoning, terminal scaling doesn’t matter
  • We just want better states to have higher evaluations (get the ordering right)
  • We call this insensitivity to monotonic transformations
• For average-case expectimax reasoning, magnitudes matter
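The difference between the two bullets above can be checked on the slide's numbers. The sketch below assumes a tree shape that the extracted text does not spell out: a max root over two branches with leaves (0, 40) and (20, 30), where each branch is a min node for minimax and a uniform chance node for expectimax. The function names are illustrative.

```python
def minimax_choice(branches):
    """Index of the branch a MAX root picks when each branch is a MIN node."""
    values = [min(leaves) for leaves in branches]
    return values.index(max(values))

def expectimax_choice(branches):
    """Index of the branch a MAX root picks when each branch is a uniform chance node."""
    values = [sum(leaves) / len(leaves) for leaves in branches]
    return values.index(max(values))

# Assumed tree shape: two branches with two leaves each.
original = [[0, 40], [20, 30]]
# Monotonic transformation from the slide: x -> x^2 (order-preserving for x >= 0).
squared = [[x ** 2 for x in leaves] for leaves in original]

print(minimax_choice(original), minimax_choice(squared))        # 1 1
print(expectimax_choice(original), expectimax_choice(squared))  # 1 0
```

Under this assumed shape, squaring leaves the minimax decision unchanged (branch minima 0 vs 20 become 0 vs 400), while the expectimax decision flips (averages 20 vs 25 become 800 vs 650).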
