Analysis-driven Engineering of Comparison-based Sorting Algorithms

AlgoPARC
Analysis-driven Engineering of Comparison-based Sorting Algorithms on GPUs
32nd ACM International Conference on Supercomputing · June 17, 2018

Ben Karsin 1 · karsin@hawaii.edu
Volker Weichert 2 · weichert@cs.uni-frankfurt.de
Henri Casanova 1 · henric@hawaii.edu
John Iacono 3 · john.iacono@ulb.ac.be
Nodari Sitchinava 1 · nodari@hawaii.edu

1 Department of ICS, University of Hawaii at Manoa
2 Goethe University Frankfurt
3 Département d'Informatique, Université Libre de Bruxelles

Work supported by the National Science Foundation under grants 1533823 and 1745331
www.algoparc.ics.hawaii.edu

Ben Karsin – A Performance Model for GPU Architectures

Sorting: A fundamental problem
- Sorting is a building block
- Used by countless algorithms...
- Many solutions
[Figure labels: O(N) ··· O(log N)]

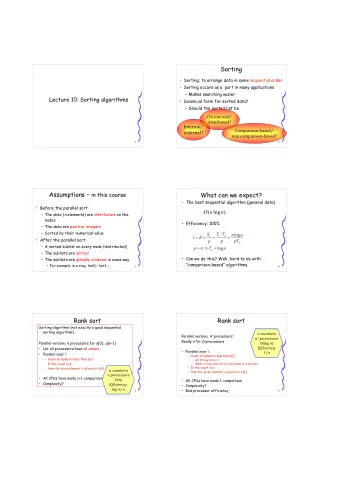

Graphics Processing Units
- Designed for high throughput
- Extremely parallel
- Thousands of cores
- Huge performance potential
- Lots of application research
- No standard performance model

NVIDIA GPU
- Streaming Multiprocessors (SMs)
  - < 20 per GPU
  - < 200 cores each
- Memory hierarchy
  - User-controlled
  - Different scope
- Thread organization
  - Cores share logic
  - Need lots of parallelism!
[Diagram: an NVIDIA GPU with global memory and several SMs; each SM contains control logic, shared memory, and processor cores]

Thread Organization
- Threads are grouped into thread-blocks
  - b threads
  - Run on a single SM
- Groups of w = 32 threads form a warp
  - Execute in 'SIMT' lockstep
[Diagram: thread-blocks of b threads, each split into warps of w threads, mapped onto the SMs above global memory]
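
As a rough illustration of this grouping, the sketch below maps a linear thread index to its thread-block, its warp within the block, and its lane within the warp. The helper name `locate_thread` is hypothetical, and the sketch assumes that b is a multiple of w, which the slide does not state.

```python
W = 32  # warp size w from the slide


def locate_thread(global_id, b, w=W):
    """Return (block, warp_in_block, lane) for a linear thread index.

    Assumes thread-blocks of b threads with b a multiple of w
    (an assumption of this sketch, not a claim from the talk).
    """
    if b % w != 0:
        raise ValueError("b must be a multiple of w")
    block, tid = divmod(global_id, b)  # which block, and index inside it
    warp, lane = divmod(tid, w)        # which warp, and position inside it
    return block, warp, lane
```

With b = 128, thread 130 lands in block 1, warp 0, lane 2.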

Memory Hierarchy
- 3 levels with different:
  - Access scope
  - Capacity
  - Access pattern
  - Latency
  - Peak bandwidth

Global Memory
- Large (up to 32 GB)
- Shared by all threads
- Slow
- “Blocked” accesses
  - I/O model

Global Memory Access Pattern
- Warp: 32 threads execute in lockstep
- Access global memory together
- Warp is a single unit
- 1 operation accesses 32 elements
- Just like disk accesses in the 'I/O' model (B = 32)
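
The I/O-model view can be sketched as a count of the distinct blocks of B elements that one warp touches. The function name `global_transactions` is hypothetical, and the element-granularity model is a simplification: real hardware works with byte-sized memory transactions.

```python
def global_transactions(indices, B=32):
    """Count the distinct B-element blocks touched by one warp access.

    `indices` holds the element index read by each thread of the warp.
    Under the I/O-model view, each distinct block costs one access.
    """
    return len({i // B for i in indices})
```

A warp reading 32 consecutive, aligned elements costs 1 block access in this model, while a warp reading with a stride of 32 elements costs 32.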

Shared Memory
- Small (48-64 KB per SM)
- Private to SM
- User defines sharing
- 5-10× faster
- Unique access pattern
  - Organized into banks

Shared Memory Access Pattern
- Stored across w memory banks
- Separate banks accessed concurrently
- Threads accessing same bank = bank conflict
  - Serialize access
[Diagram: threads T1 to T4 accessing array A in shared memory banks 1 to 4; one access per bank proceeds concurrently, four accesses to bank 1 conflict]
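
A minimal sketch of how conflicts could be counted, assuming element i lives in bank i mod w and that threads reading the very same address do not conflict with each other. Both are assumptions of this sketch, and `conflict_degree` is a hypothetical name.

```python
from collections import Counter


def conflict_degree(addresses, w=32):
    """Return the largest number of distinct addresses that fall in one bank.

    A result of 1 means the warp's accesses are conflict-free; a result
    of d means the accesses to the busiest bank are serialized d ways.
    """
    per_bank = Counter(a % w for a in set(addresses))
    return max(per_bank.values(), default=0)
```

Consecutive addresses give degree 1, a stride of 2 gives degree 2, and a stride of w = 32 sends every thread to the same bank, giving degree 32.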

Registers
- Small (255 per thread)
- Private to thread
- Fastest
- Random access
- Must be “static”
  - Known at compile time

Talk Outline
- Motivation/background
- GPU overview
- Memory hierarchy
- State-of-the-art GPU sorting
- Our multiway mergesort (GPU-MMS)
- Optimizations
- Performance results
- Conclusions & future work

State-of-the-art GPU sorting
- Modern GPU (MGPU)
  - Pairwise mergesort
- CUB
  - Radix sort
  - Limited application
- Thrust
  - Changes algorithm based on input type
  - Comes with CUDA compiler
- All highly engineered and optimized for hardware
  - Change parameters based on hardware detected

MGPU mergesort
- Pairwise mergesort
- E elements per thread
- b threads per thread-block
- Lots of parallelism
  - $N/E$ threads!
[Diagram: the input of N elements split into chunks of E elements, one per thread $t_1, t_2, \ldots, t_{N/E}$; each thread-block covers $bE$ elements]

MGPU mergesort
- Each thread-block sorts $bE$ elements
- Merge pairs of lists
- $\lceil \log_2 \frac{N}{bE} \rceil$ merge rounds
  - b and E are small constants
[Diagram: sorted lists of $bE$ elements merged pairwise over $\lceil \log_2 \frac{N}{bE} \rceil$ rounds]

MGPU bottlenecks
- Global memory is the main bottleneck
- Unavoidable: $O(\log_2 N)$ merge rounds

Multiway mergesort
- Reduce global memory bottleneck
- Merge K lists at a time!
- $\lceil \log_K \frac{N}{B} \rceil$ merge rounds
- Merging done in internal memory
- Use a priority queue
[Diagram: K-way merge tree of height $\log_K N$]
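
To see why a larger fan-in helps, the sketch below counts the rounds needed to reduce a given number of sorted lists to one when k lists are merged at a time. Each round is taken to be one pass over the data in global memory. The function name `merge_rounds` and the example sizes are hypothetical.

```python
def merge_rounds(num_lists, k):
    """Rounds needed to merge num_lists sorted lists into one, k at a time."""
    if k < 2:
        raise ValueError("k must be at least 2")
    rounds = 0
    while num_lists > 1:
        num_lists = -(-num_lists // k)  # ceiling division: lists left after a round
        rounds += 1
    return rounds
```

Starting from 2^16 sorted lists, pairwise merging (k = 2) needs 16 rounds, while k = 8 needs 6 and k = 16 needs 4.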

Merging K lists
- Use a heap
  - Load blocks from each list
  - Build min-heap on smallest items
  - Buffer smallest item
  - Heapify to find next smallest
  - Output buffer when full
  - Read block when needed
[Diagram: K sorted lists feeding a min-heap and an output buffer]
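
The heap-based merge can be sketched sequentially with Python's standard `heapq` module. This is only an illustration of the idea, not the GPU implementation from the talk: it keeps one head element per list in the heap and omits the block loads and the output buffer. The name `kway_merge` is hypothetical.

```python
import heapq


def kway_merge(lists):
    """Merge K sorted lists using a min-heap of their current heads."""
    # Each heap entry is (value, list index, position in that list).
    heap = [(lst[0], i, 0) for i, lst in enumerate(lists) if lst]
    heapq.heapify(heap)
    out = []
    while heap:
        value, i, pos = heapq.heappop(heap)  # smallest remaining item
        out.append(value)
        if pos + 1 < len(lists[i]):
            # Refill the heap from the list the item came from.
            heapq.heappush(heap, (lists[i][pos + 1], i, pos + 1))
    return out
```

For example, `kway_merge([[1, 4, 7], [2, 5, 8], [3, 6, 9]])` returns `[1, 2, 3, 4, 5, 6, 7, 8, 9]`.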

Parallel 'Block Heap'
- Warp shares a heap
- 32 threads all need work...

Parallel 'Block Heap'
- Each node has a sorted list
- Merge child nodes
- All 32 threads work together
[Example: the root list 1 2 4 5 is output; its child lists 7 9 11 17 and 8 12 14 20 are merged into the smallest items 7 8 9 11 and the largest items 12 14 17 20; the lower nodes hold 19 22 23 30, 18 20 21 24, 28 29 31 33, and 23 24 25 26]
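
The merge step in the example can be sketched as a merge of two sorted node lists followed by a split into a smallest half and a largest half. This is a sequential illustration under the assumption that a node's list is replaced by the smaller half; the slide text alone does not fix where each half is stored afterwards, and the talk's version runs across the 32 threads of a warp. The name `merge_split` is hypothetical.

```python
import heapq


def merge_split(left, right):
    """Merge two sorted lists, then split at len(left).

    Returns (smallest, largest): the len(left) smallest items and the
    remaining larger items, both sorted.
    """
    merged = list(heapq.merge(left, right))
    half = len(left)
    return merged[:half], merged[half:]
```

With the values from the slide, `merge_split([7, 9, 11, 17], [8, 12, 14, 20])` returns `([7, 8, 9, 11], [12, 14, 17, 20])`.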
