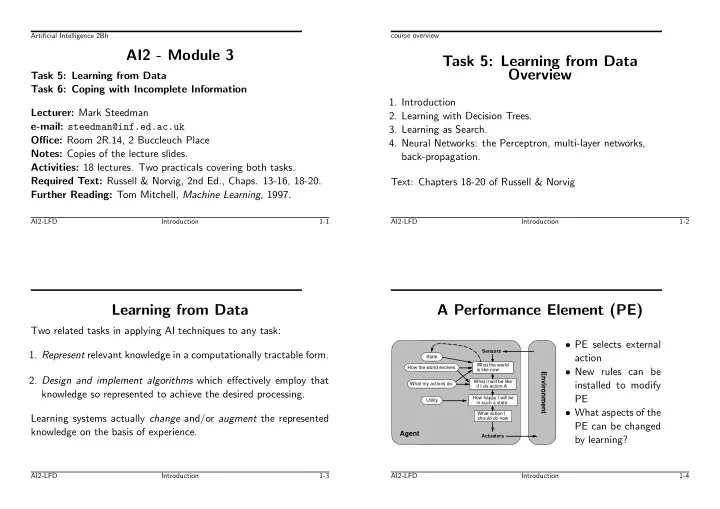

Artificial Intelligence 2Bh course overview

AI2 - Module 3
Task 5: Learning from Data
Task 6: Coping with Incomplete Information

Lecturer: Mark Steedman
e-mail: steedman@inf.ed.ac.uk
Office: Room 2R.14, 2 Buccleuch Place
Notes: Copies of the lecture slides.
Activities: 18 lectures. Two practicals covering both tasks.
Required Text: Russell & Norvig, 2nd Ed., Chaps. 13-16, 18-20.
Further Reading: Tom Mitchell, Machine Learning, 1997.

AI2-LFD Introduction 1-1

Task 5: Learning from Data: Overview

1. Introduction
2. Learning with Decision Trees.
3. Learning as Search.
4. Neural Networks: the Perceptron, multi-layer networks, back-propagation.

Text: Chapters 18-20 of Russell & Norvig

Learning from Data

Two related tasks in applying AI techniques to any task:

1. Represent relevant knowledge in a computationally tractable form.
2. Design and implement algorithms which effectively employ that knowledge so represented to achieve the desired processing.

Learning systems actually change and/or augment the represented knowledge on the basis of experience.

A Performance Element (PE)

• PE selects external action
• New rules can be installed to modify PE
• What aspects of the PE can be changed by learning?

[Figure: agent architecture diagram. Labels: Agent, Environment, Sensors, Actuators, State, How the world evolves, What my actions do, Utility, What the world is like now, What it will be like if I do action A, How happy I will be in such a state, What action I should do now.]

What components of PE can change?

Examples are:

1. A direct mapping from conditions on the current state to actions.
2. A means to infer relevant properties of the world from the percept sequence.
3. Information about the way the world evolves.
4. Information about the results of possible actions the agent can take.
5. Utility information indicating the desirability of world states.
6. Action-value information indicating the desirability of particular actions in particular states.
7. Goals that describe classes of states whose achievement maximises the agent’s utility.

Each of these components can be learned

• Have notion of performance standard
  – can be hardwired: e.g. hunger, pain etc.
  – provides feedback on quality of agent’s behaviour

Learning from Data

We will consider a slightly constrained setup known as Supervised Learning

• The learning agent sees a set of examples.
• Each example is labelled, i.e. associated with some relevant information.
• The learning agent generalises by producing some representation that can be used to predict the associated value on unseen examples.
• The predictor is used either directly or in a larger system.

A Range of Applications

Scientific research, control problems, engineering devices, game playing, natural language applications, Internet tools, commerce.

• Diagnosing diseases in plants.
• Identifying useful drugs (pharmaceutical research).
• Predicting protein folds (Genome project).
• Cataloguing space images.
• Steering a car on highway (by mapping state to action).
• An automated pilot in a restricted environment (by mapping state to action).

• Playing Backgammon (by mapping state to its “value”).
• Performing symbolic integration (by mapping expressions to integration operators).
• Controlling oil-gas separation.
• Generating the past tense of a verb.
• Context sensitive spelling correction (“I had a cake for desert”).
• Filtering interesting articles from newsgroups.
• Identifying interesting web-sites.
• Fraud detection (on credit card activities).
• Identifying market properties for commerce.
• Stock market prediction.

Major issues for learning problems

• What components of the performance element are to be improved.
• What representation is used for the knowledge in those components.
• What feedback is available.
• What representation is used for the examples.
• What prior knowledge is available.

Sources and Types of Learning Systems

Is the system passive or active?
Is the system taught by someone else, or must it learn for itself?
Do we approach the system as a whole, or component by component?

Our setup of Supervised Learning implies a passive system that is taught through the selection of examples (though the “teacher” may not be helpful).

Other Types of Learning Problems

unsupervised learning: The system receives no external feedback, but has some internal utility function to maximise.
For example, a robot exploring a distant planet might be set to classify the forms of life it encounters there.

reinforcement learning: The system is trained with post-hoc evaluation of every output.
For example, a system to play backgammon might be trained by letting it play some games, and at the end of each game telling it whether it won or lost. (There is no direct feedback on every action.)

Supervised Learning

• Some part of the performance element is modelled as a function f - a mapping from possible descriptions into possible values.
• A labelled example is a pair (x, v) where x is the description and the intention is that v = f(x).
• The learner is given some labelled examples.
• The learner is required to find a mapping h (for hypothesis) that can be used to compute the value of f on unseen descriptions: “h approximates f”

Supervised Learning: Example

The first major practical application of machine learning techniques was demonstrated by Michalski and Chilausky’s (1980) soybean experiment. They were presented with information about sick soya bean plants whose diseases had been diagnosed.

• Each plant was described by values of 35 attributes, such as leafspots halos and leafspot size, as well as by its actual disease.
• The plants had 1 of 4 types of diseases.
• The learning system automatically inferred a set of rules which would predict the disease of a plant on the basis of its attribute values.
• When tested with new plants, the system predicted the correct disease 100% of the time. Rules constructed by human experts were only able to achieve 96.2%.
• However, the learned rules were much more complex than those extracted from the human experts.

Supervised Learning with Decision Trees: Overview

1. Attribute-Value representation of examples
2. Decision tree representation
3. Supervised learning methodology
4. Decision tree learning algorithm
5. Was learning successful?
6. Some applications

Text: Sections 18.2-18.3 of Russell & Norvig
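To make the supervised learning formulation from this section concrete (labelled pairs (x, v) with v = f(x), and a hypothesis h meant to approximate f on unseen descriptions), here is a minimal Python sketch. Everything in it is an illustrative assumption rather than part of the course material: the toy target function `f`, the helper names `learn` and `accuracy`, and the deliberately naive learner, which memorises the examples it has seen and falls back to the most common label otherwise.

```python
from collections import Counter

# Hypothetical target function f: a description x is a pair of booleans.
# In a real learning problem f is unknown; it is defined here only to label toy data.
def f(x):
    return x[0] or x[1]

def learn(examples):
    """Return a hypothesis h built from labelled examples (x, v)."""
    seen = {x: v for x, v in examples}  # memorise the training pairs
    # Fallback for unseen descriptions: the most common training label.
    default = Counter(v for _, v in examples).most_common(1)[0][0]

    def h(x):
        return seen.get(x, default)

    return h

def accuracy(h, examples):
    """Fraction of labelled examples on which h agrees with the label."""
    return sum(h(x) == v for x, v in examples) / len(examples)

# Labelled examples (x, v) with v = f(x); one description is held back as unseen.
training = [(x, f(x)) for x in [(False, False), (True, False), (True, True)]]
unseen = [(x, f(x)) for x in [(False, True)]]

h = learn(training)
print("accuracy on training examples:", accuracy(h, training))
print("accuracy on unseen examples:", accuracy(h, unseen))
```

The point of the sketch is the separation of roles: the learner only ever sees the labelled pairs, and "h approximates f" is judged on descriptions that were not among them.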

Supervised Learning with Decision Trees

• Some part of the performance element is modelled as a function f - a mapping from possible descriptions into possible values.
• A labelled example is a pair (x, v) where x is the description and the intention is that v = f(x).
• The learner is given some labelled examples.
• The learner is required to find a mapping h (for hypothesis) that can be used to compute the value of f on unseen descriptions.
• h is represented by a decision tree.

Attribute-Value Representation for Examples

The mapping f decides whether a credit card should be granted to an applicant. What are the important attributes or properties?

Credit History: What is the applicant’s credit history like? (values: good, bad, unknown)
Debt: How much debt does the applicant have? (values: low, high)
Collateral: Can the applicant put up any collateral? (values: adequate, none)
Income: How much does the applicant earn? (values: numerical)
Dependents: Does the applicant have any dependents? (values: numerical)

The last two attributes are numerical. We may need to discretize them, e.g. using (values: > 10000, < 10000) for Income and (values: yes, no) for Dependents.

Example (cont.)

A set of examples (descriptions and labels) can be described as in the following table.

| Example | Credit History | Debt | Collateral | Income | Dependents | Goal (yes/no) |
|---------|----------------|------|------------|--------|------------|---------------|
| X1 | Good | Low | Adequate | 20K | 3 | yes |
| X2 | Good | High | None | 15K | 2 | yes |
| X3 | Good | High | None | 7K | 0 | no |
| X4 | Bad | Low | Adequate | 15K | 0 | yes |
| X5 | Bad | High | None | 10K | 4 | no |
| X6 | Unknown | High | None | 11K | 1 | no |
| X7 | Unknown | Low | None | 9K | 2 | no |
| X8 | Unknown | Low | Adequate | 9K | 2 | yes |
| X9 | Unknown | Low | None | 19K | 0 | yes |

The learner is required to find a mapping h that can be used to compute the value of f on unseen descriptions.
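The attribute-value representation and the suggested discretization can be sketched in Python as follows. The nine rows are copied from the table above; the names `Applicant`, `EXAMPLES`, `INCOME_THRESHOLD` and `discretize` are hypothetical. Two assumptions are made where the slides leave things open: an income of exactly 10000 (example X5) falls in neither "> 10000" nor "< 10000" as written, so this sketch places it in the lower bin and labels that bin "<= 10000"; and Dependents is mapped to "yes" when the count is greater than zero.

```python
from typing import NamedTuple

class Applicant(NamedTuple):
    credit_history: str  # good, bad, unknown
    debt: str            # low, high
    collateral: str      # adequate, none
    income: int          # numerical, e.g. 20K -> 20000
    dependents: int      # numerical
    granted: bool        # the label v = f(x): was credit granted?

# Rows X1..X9 from the table above.
EXAMPLES = {
    "X1": Applicant("good", "low", "adequate", 20_000, 3, True),
    "X2": Applicant("good", "high", "none", 15_000, 2, True),
    "X3": Applicant("good", "high", "none", 7_000, 0, False),
    "X4": Applicant("bad", "low", "adequate", 15_000, 0, True),
    "X5": Applicant("bad", "high", "none", 10_000, 4, False),
    "X6": Applicant("unknown", "high", "none", 11_000, 1, False),
    "X7": Applicant("unknown", "low", "none", 9_000, 2, False),
    "X8": Applicant("unknown", "low", "adequate", 9_000, 2, True),
    "X9": Applicant("unknown", "low", "none", 19_000, 0, True),
}

INCOME_THRESHOLD = 10_000  # threshold suggested on the slide

def discretize(a: Applicant) -> dict:
    """Map an applicant to a purely categorical description x."""
    return {
        "credit_history": a.credit_history,
        "debt": a.debt,
        "collateral": a.collateral,
        # Assumption: exactly 10000 goes to the lower bin.
        "income": "> 10000" if a.income > INCOME_THRESHOLD else "<= 10000",
        # Assumption: any non-zero count means "yes".
        "dependents": "yes" if a.dependents > 0 else "no",
    }

# Labelled examples (x, v) in the form a decision tree learner would consume.
labelled = [(discretize(a), a.granted) for a in EXAMPLES.values()]

for name, (x, v) in zip(EXAMPLES, labelled):
    print(name, x, "yes" if v else "no")
```

After discretization every attribute takes one of a small set of values, which is the form the decision tree representation in the rest of the section relies on.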
