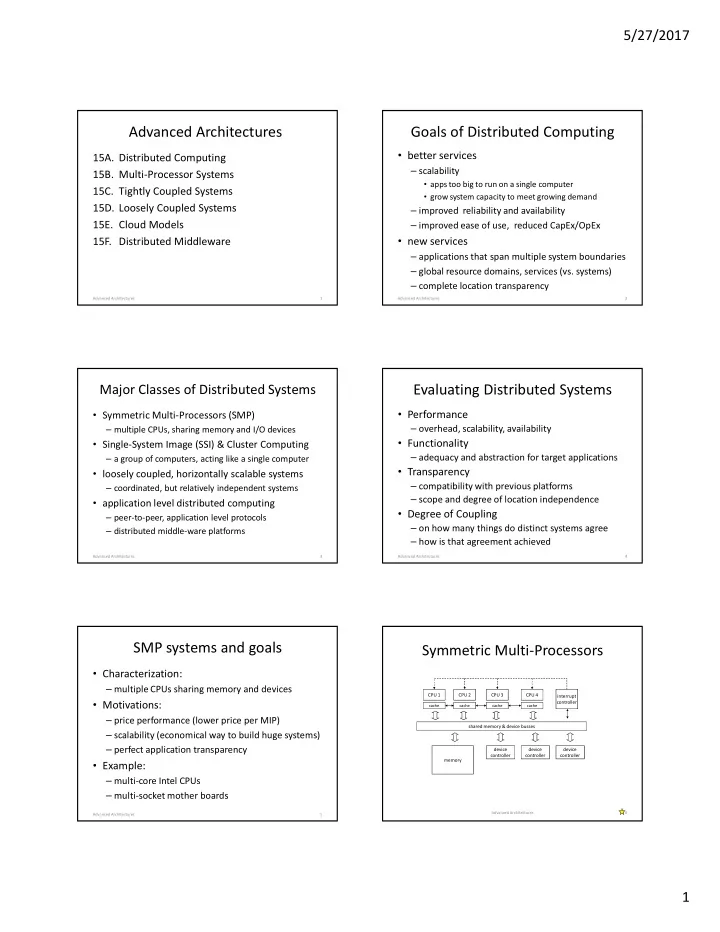

5/27/2017

Advanced Architectures
15A. Distributed Computing
15B. Multi-Processor Systems
15C. Tightly Coupled Systems
15D. Loosely Coupled Systems
15E. Cloud Models
15F. Distributed Middleware

Goals of Distributed Computing
• better services
  – scalability
    • apps too big to run on a single computer
    • grow system capacity to meet growing demand
  – improved reliability and availability
  – improved ease of use, reduced CapEx/OpEx
• new services
  – applications that span multiple system boundaries
  – global resource domains, services (vs. systems)
  – complete location transparency

Major Classes of Distributed Systems
• Symmetric Multi-Processors (SMP)
  – multiple CPUs, sharing memory and I/O devices
• Single-System Image (SSI) & Cluster Computing
  – a group of computers, acting like a single computer
• loosely coupled, horizontally scalable systems
  – coordinated, but relatively independent systems
• application level distributed computing
  – peer-to-peer, application level protocols
  – distributed middle-ware platforms

Evaluating Distributed Systems
• Performance
  – overhead, scalability, availability
• Functionality
  – adequacy and abstraction for target applications
• Transparency
  – compatibility with previous platforms
  – scope and degree of location independence
• Degree of Coupling
  – on how many things do distinct systems agree
  – how is that agreement achieved

SMP systems and goals
• Characterization:
  – multiple CPUs sharing memory and devices
• Motivations:
  – price performance (lower price per MIP)
  – scalability (economical way to build huge systems)
  – perfect application transparency
• Example:
  – multi-core Intel CPUs
  – multi-socket mother boards

Symmetric Multi-Processors
[Figure: CPU 1 through CPU 4, each with its own cache, and an interrupt controller, all attached to shared memory & device busses that connect memory and three device controllers, each with its device.]

SMP Price/Performance
• a computer is much more than a CPU
  – mother-board, disks, controllers, power supplies, case
  – CPU might cost 10-15% of the cost of the computer
• adding CPUs to a computer is very cost-effective
  – a second CPU yields cost of 1.1x, performance 1.9x
  – a third CPU yields cost of 1.2x, performance 2.7x
• same argument also applies at the chip level
  – making a machine twice as fast is ever more difficult
  – adding more cores to the chip gets ever easier
• massive multi-processors are obvious direction

SMP Operating System Design
• one processor boots with power on
  – it controls the starting of all other processors
• same OS code runs in all processors
  – one physical copy in memory, shared by all CPUs
• Each CPU has its own registers, cache, MMU
  – they must cooperatively share memory and devices
• ALL kernel operations must be Multi-Thread-Safe
  – protected by appropriate locks/semaphores
  – very fine grained locking to avoid contention

SMP Parallelism
• scheduling and load sharing
  – each CPU can be running a different process
  – just take the next ready process off the run-queue
  – processes run in parallel
  – most processes don't interact (other than in kernel)
• serialization
  – mutual exclusion achieved by locks in shared memory
  – locks can be maintained with atomic instructions
  – spin locks acceptable for VERY short critical sections
  – if a process blocks, that CPU finds next ready process

The Challenge of SMP Performance
• scalability depends on memory contention
  – memory bandwidth is limited, can't handle all CPUs
  – most references satisfied from per-core cache
  – if too many requests go to memory, CPUs slow down
• scalability depends on lock contention
  – waiting for spin-locks wastes time
  – context switches waiting for kernel locks waste time
• contention wastes cycles, reduces throughput
  – 2 CPUs might deliver only 1.9x performance
  – 3 CPUs might deliver only 2.7x performance

Managing Memory Contention
• Fast n-way memory is very expensive
  – without it, memory contention taxes performance
  – cost/complexity limits how many CPUs we can add
• Non-Uniform Memory Architectures (NUMA)
  – each CPU has its own memory
    • each CPU has fast path to its own memory
  – connected by a Scalable Coherent Interconnect
    • a very fast, very local network between memories
    • accessing memory over the SCI may be 3-20x slower
  – these interconnects can be highly scalable

Non-Uniform Memory Architecture
[Figure: CPU n and CPU n+1, each with its own cache, local memory, PCI bridge, and PCI bus carrying device controllers and a CC NUMA interface; the CC NUMA interfaces are joined by a Scalable Coherent Interconnect (e.g. Intel Quick Path Interconnect).]
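The price/performance figures quoted on the SMP slides can be turned into a small worked calculation. The sketch below is illustrative only: it uses the slide numbers (cost 1.1x and 1.2x, performance 1.9x and 2.7x) as given, and the function names are hypothetical, not taken from the course material.

```python
# Figures from the slides: (number of CPUs, relative cost, relative performance).
# The single-CPU baseline of (1, 1.0, 1.0) is implied by the "1.1x" / "1.9x" wording.
SLIDE_FIGURES = [
    (1, 1.0, 1.0),
    (2, 1.1, 1.9),
    (3, 1.2, 2.7),
]


def performance_per_cost(cost, performance):
    """Relative performance delivered per unit of relative cost."""
    return performance / cost


def scaling_efficiency(cpus, performance):
    """Fraction of ideal linear speedup that is actually delivered."""
    return performance / cpus


def summarize(figures=SLIDE_FIGURES):
    """Return (cpus, performance per cost, scaling efficiency) for each row."""
    rows = []
    for cpus, cost, perf in figures:
        rows.append((
            cpus,
            round(performance_per_cost(cost, perf), 3),
            round(scaling_efficiency(cpus, perf), 3),
        ))
    return rows


if __name__ == "__main__":
    for cpus, ppc, eff in summarize():
        print(f"{cpus} CPU(s): perf/cost = {ppc:.2f}, efficiency = {eff:.0%}")
```

Read together, the two columns restate both slides: performance per unit cost keeps rising as CPUs are added, while the fraction of ideal speedup falls because of memory and lock contention.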

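The effect of the 3-20x remote-access penalty can be sketched with a simple weighted average. This is a rough model under stated assumptions: a local access costs 1 unit, a remote access costs `remote_penalty` units, and nothing else (caches, queueing on the interconnect) is modeled. The function names and the target cost of 1.5 in the demo are hypothetical choices for illustration; the slides do not spell out their break-even criterion.

```python
def average_access_cost(local_hit_rate, remote_penalty):
    """Average cost of one memory access, in units of a local access.

    local_hit_rate: fraction of accesses served from the CPU's own memory.
    remote_penalty: cost of a remote access relative to a local one (>= 1).
    """
    if not 0.0 <= local_hit_rate <= 1.0:
        raise ValueError("local_hit_rate must be between 0 and 1")
    if remote_penalty < 1.0:
        raise ValueError("remote_penalty must be at least 1")
    return local_hit_rate * 1.0 + (1.0 - local_hit_rate) * remote_penalty


def required_hit_rate(max_average_cost, remote_penalty):
    """Smallest local hit rate that keeps the average cost at or below the target.

    Solves h + (1 - h) * k <= c for h, giving h >= (k - c) / (k - 1).
    """
    if max_average_cost < 1.0:
        raise ValueError("average cost cannot be below one local access")
    if remote_penalty <= 1.0:
        return 0.0  # remote is no slower than local, so any hit rate works
    needed = (remote_penalty - max_average_cost) / (remote_penalty - 1.0)
    return min(1.0, max(0.0, needed))


if __name__ == "__main__":
    # Assumed target: average access no more than 1.5x a purely local access.
    for penalty in (3, 10, 20):
        rate = required_hit_rate(1.5, penalty)
        print(f"penalty {penalty}x: need local hit rate >= {rate:.1%}")
```

Under this model the required local hit rate climbs quickly as the remote penalty grows, which is why NUMA-aware placement of processes and memory matters so much.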
OS design for NUMA systems
• it is all about local memory hit rates
  – every outside reference costs us 3-20x performance
  – we need 75-95% hit rate just to break even
• How can the OS ensure high hit-rates?
  – replicate shared code pages in each CPU's memory
  – assign processes to CPUs, allocate all memory there
  – migrate processes to achieve load balancing
  – spread kernel resources among all the CPUs
  – attempt to preferentially allocate local resources
  – migrate resource ownership to CPU that is using it

Single System Image (SSI) Clusters
• Characterization:
  – a group of seemingly independent computers collaborating to provide SMP-like transparency
• Motivation:
  – higher reliability, availability than SMP/NUMA
  – more scalable than SMP/NUMA
  – excellent application transparency
• Examples:
  – Locus, Microsoft Wolf-Pack, OpenSSI
  – Oracle Parallel Server

The Dream
Programs don't run on hardware, they run atop operating systems. All the resources that processes see are already virtualized.
Instead of merely virtualizing all the resources in a single system, virtualize all the resources in a cluster of systems. Applications that run in such a cluster are (automatically and transparently) distributed.
[Figure: four physical systems, running processes 101, 103, 106; 202, 204, 205; 301, 305, 306; and 403, 405, 407, and holding locks 1A and 3B and devices CD1, CD3, LP2, LP3, SCN4, presented as one virtual HA computer w/4x MIPS & memory: one global pool of devices, all of the processes and locks, and one large HA virtual file system with primary copies on disks 1A-4A and secondary replicas on disks 1B-4B.]

Modern Clustered Architecture
[Figure: SMP systems #1 to #4 connected by ethernet through two switches, with request replication and geographic fail over; dual ported RAID storage at the primary site, with optional synchronous FC replication to a back-up site.]
Active systems service independent requests in parallel. They cooperate to maintain shared global locks, and are prepared to take over partner's work in case of failure. State replication to a back-up site is handled by external mechanisms.

OS design for SSI clustering
• all nodes agree on the state of all OS resources
  – file systems, processes, devices, locks, IPC ports
  – any process can operate on any object, transparently
• they achieve this by exchanging messages
  – advising one-another of all changes to resources
    • each OS's internal state mirrors the global state
  – request execution of node-specific requests
    • node-specific requests are forwarded to owning node
• implementation is large, complex, difficult
• the exchange of messages can be very expensive

Structure of a Modern OS
[Figure: layered block diagram of a modern OS. Labels include: system call interfaces; the user visible OS model (file namespace, authorization, file, file I/O, IPC, process/thread, exception, and synchronization models); higher level services (run-time loader, configuration services, fault management, quality of service, transport protocols, file systems, stream services, volume management, hot-plug services, block I/O, logging & tracing, swapping, paging, scheduling); class drivers (network, serial, display, storage) and device drivers; the virtual execution engine (fault handling, process/thread scheduling, processes as resource containers, asynchronous events); bus drivers, DMA services, configuration analysis, thread dispatching and synchronization, memory allocation and segments; boot strap, I/O resource allocation, enclosure management, processor exceptions and initialization, context switching, kernel debugger; and the processor abstraction (I/O, DMA, interrupts, traps, timers, atomic operations, processor mode, memory mapping, cache mgmt, atomic updates).]
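The message exchange described on the SSI clustering slide can be sketched as a toy in-process simulation. Everything here is a hypothetical illustration, not the design of Locus, OpenSSI, or any real cluster OS: the `Cluster` and `Node` classes, their methods, and the message counter are all assumptions, and "messages" are plain method calls rather than network traffic. Each node mirrors the global state, advises its peers of every change, and forwards requests for resources it does not own to the owning node.

```python
class Cluster:
    """Hypothetical registry of nodes, standing in for the cluster interconnect."""

    def __init__(self):
        self.nodes = {}

    def add_node(self, name):
        node = Node(name, self)
        self.nodes[name] = node
        return node


class Node:
    """One system in the cluster, holding a local mirror of the global state."""

    def __init__(self, name, cluster):
        self.name = name
        self.cluster = cluster
        self.global_view = {}    # resource name -> (owning node, state)
        self.messages_sent = 0   # counts simulated messages, to show the cost

    def create(self, resource, state):
        """Create a resource owned by this node and advise all peers."""
        self.global_view[resource] = (self.name, state)
        self._advise_peers(resource)

    def update(self, resource, new_state):
        """Change a resource; forward to the owner if this node is not it."""
        owner, _ = self.global_view[resource]
        if owner != self.name:
            self.messages_sent += 1  # one forwarded request
            return self.cluster.nodes[owner].update(resource, new_state)
        self.global_view[resource] = (owner, new_state)
        self._advise_peers(resource)
        return owner

    def _advise_peers(self, resource):
        """Send every other node the new state so its mirror stays current."""
        entry = self.global_view[resource]
        for peer in self.cluster.nodes.values():
            if peer is not self:
                self.messages_sent += 1  # one advisory message per peer
                peer.global_view[resource] = entry


if __name__ == "__main__":
    cluster = Cluster()
    n1, n2, n3 = (cluster.add_node(n) for n in ("n1", "n2", "n3"))
    n1.create("lock 1A", "free")
    handled_by = n3.update("lock 1A", "held")  # forwarded from n3 to n1
    total = sum(n.messages_sent for n in cluster.nodes.values())
    print(f"handled by {handled_by}, {total} messages for two operations")
```

Even in this toy, two operations on one lock across three nodes generate several messages, which hints at why the slide calls the exchange of messages very expensive.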
