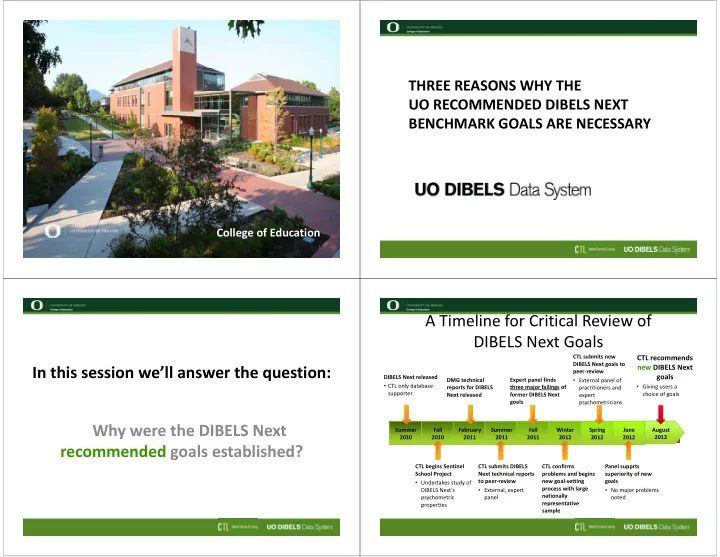

THREE REASONS WHY THE UO RECOMMENDED DIBELS NEXT BENCHMARK GOALS ARE NECESSARY
College of Education

In this session we’ll answer the question:
Why were the DIBELS Next recommended goals established?

A Timeline for Critical Review of DIBELS Next Goals
[Timeline figure: milestones dated by season or month from 2010 to 2012]
• DIBELS Next released
• CTL begins Sentinel School Project
  – Undertakes study of DIBELS Next’s psychometric properties
• DMG technical reports for DIBELS Next released
• CTL submits DIBELS Next technical reports to peer review
  – External, expert panel
• Expert panel finds three major failings of former DIBELS Next goals
• CTL confirms problems and begins new goal-setting process with large nationally representative sample
• CTL submits new DIBELS Next goals to peer review
  – External panel of practitioners and expert psychometricians
• Panel supports superiority of new goals
  – No major problems noted
• CTL recommends new DIBELS Next goals
  – CTL only database supporter
  – Giving users a choice of goals

What’s wrong with the former DIBELS Next goals?
Former DIBELS Next goals …
1. Vary widely, yet still miss substantial numbers of children who need intervention (UO-CTL, 2012c, p. 9)
   – On average, the former goals miss 40% of students who may need additional, strategic support
   – On average, the former goals miss 56% of students who may need intensive support
2. Are based on a small sample that does not represent the diversity of U.S. children (DMG, 2011, p. 39)
3. Do not use a consistent, external criterion measure to determine risk and cut-points (DMG, 2011, pp. 48-49)

Reason 1: DMG Former Goals Miss Children Who Require Intervention

What’s wrong with the former DIBELS Next goals?
Assume we assess 100 students …
And 40% need intervention …

What’s wrong with the former DIBELS Next goals? (University of Oregon-CTL, 2012c, p. 9)
And half of those (or 20% overall) need intensive intervention …
The former goals will, on average, miss 40% of students needing intervention.
And the former goals will, on average, miss 56% of students needing intensive intervention.

What’s right about the new DIBELS Next goals? (University of Oregon-CTL, 2012c, p. 9)
The UO-CTL recommended goals identify 90% of students who may need support, ensuring more confidence in decision making.
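The 100-student walk-through above can be worked through as simple arithmetic. The sketch below is illustrative only: it applies the average rates quoted on the slides to a hypothetical group of 100 students, and it assumes the 90% identification rate of the recommended goals applies to the 40 students needing intervention. The function and variable names are made up for this example.

```python
def missed_students(students_in_need: int, miss_rate_percent: int) -> float:
    """Expected number of students in need that a set of goals fails to identify."""
    return students_in_need * miss_rate_percent / 100


# Hypothetical group from the slides: 100 students assessed.
assessed = 100
need_intervention = assessed * 40 // 100   # 40% need intervention
need_intensive = need_intervention // 2    # half of those need intensive intervention

# Average miss rates quoted for the former goals (UO-CTL, 2012c, p. 9).
missed_intervention = missed_students(need_intervention, 40)
missed_intensive = missed_students(need_intensive, 56)

# Assumption: "identify 90%" is read as a 10% miss rate among students needing intervention.
missed_by_recommended = missed_students(need_intervention, 100 - 90)

print(f"Need intervention: {need_intervention}, missed by former goals: {missed_intervention:.1f}")
print(f"Need intensive intervention: {need_intensive}, missed by former goals: {missed_intensive:.1f}")
print(f"Missed by recommended goals (assumed 10% miss rate): {missed_by_recommended:.1f}")
```

Under these assumptions, the former goals would miss about 16 of the 40 students needing intervention and about 11 of the 20 needing intensive intervention, while the recommended goals would miss about 4 of the 40.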

Why the discrepancies?
DMG did not follow recommended, research-based practices to create their goals.
The DMG former goals were created with:
• a sample of students that was small, and not representative of the nation
• procedures that do not meet test and measurement standards in the field of education (Standards for Educational and Psychological Testing, AERA, APA, NCME, 1999; National Center on Response to Intervention)

Reason 2: DMG Former Goals Lack a Representative School & Student Sample

Representative Sample
Critical and required for generalizability.
Representative samples should include descriptions of the ethnicity and race, socioeconomic status, gender, and geographic locations of the participants, so that the sample can be appropriately compared to other students and schools at other time points (Standards for Educational and Psychological Testing, AERA, APA, NCME, 1999).

Let’s compare samples
DMG Former (DMG, 2011, p. 39); UO Recommended (UO-CTL, 2012a, p. 2); U.S. (NCES, 2011)
[Figure: three charts of racial and ethnic composition for All Schools in the U.S., the DMG Former Goals sample, and the UO Recommended Goals sample. Categories: White, Hispanic, African American, American Indian, Asian/Pacific Islander, Other, Multiracial]

Let’s compare samples
Percent of students who qualify for Free and Reduced Lunch:
• U.S. average: 52.4%
• UO recommended goals average: 63.7%
• DMG former goals average: 16.0%

Reason 3: DMG Former Goals Were Developed Using an Inconsistent and Non-Validated Process to Determine Goals and Cut Points

Key Terms
Benchmark Goal: Students above the benchmark goal have a strong likelihood of meeting end-of-year performance standards on an important outcome measure … as long as continued good teaching occurs.

Key Terms
Cut Point for Risk: Students who score below the cut point have a strong likelihood of NOT meeting end-of-year performance standards on an important outcome measure … if intensive intervention is not provided.
Ensuring confidence in decision making.

Why do schools use screening measures?
THE GOAL: To quickly determine how well students are performing and identify students at-risk for reading difficulties or who need additional intervention.
[Diagram: Individual Measure Goals & Cut Points linked to the End of Year Outcome]

Why does your school use a screening measure?
NOT THE GOAL: To see if students will meet the DIBELS Next composite score.
[Diagram: Individual Measure Goals & Cut Points, Composite Score, Outcome]

Process Used to Establish the DMG Former Goals (DMG, 2011, pp. 48-49)
[Diagram: Steps 1 to 4, connecting a Global Outcome Measure, Composite Scores, and Individual DIBELS Next Measures]

The DMG goal-setting process did not meet recommended research-based educational standards
The Standards for Educational and Psychological Testing (AERA, APA, NCME, 1999) recommend a link between student performance on screening measures (e.g. DIBELS Next measures) and a standardized, widely used, external criterion measure (e.g. SAT10).
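To make the idea of linking a screening measure to an external criterion concrete, here is a minimal sketch. It is not the DMG or UO-CTL procedure; it only shows one way to check how many students who later miss an outcome standard would have been flagged by a given cut point. The scores, outcome flags, cut points, and function name are all hypothetical.

```python
from typing import Sequence


def share_identified(
    scores: Sequence[float],
    met_standard: Sequence[bool],
    cut_point: float,
) -> float:
    """Share of students who did NOT meet the outcome standard and scored below the cut point."""
    flagged = [score < cut_point for score, met in zip(scores, met_standard) if not met]
    if not flagged:
        raise ValueError("no students missed the outcome standard")
    return sum(flagged) / len(flagged)


# Hypothetical screening scores and whether each student later met the
# standard on an external criterion measure.
screening_scores = [12, 18, 25, 31, 36, 40, 44, 52, 60, 71]
met_outcome = [False, False, False, True, False, True, True, True, True, True]

for cut in (30, 38):
    rate = share_identified(screening_scores, met_outcome, cut)
    print(f"cut point {cut}: identifies {rate:.0%} of students who missed the standard")
```

In this made-up data, a cut point of 30 flags three of the four students who missed the standard, and a cut point of 38 flags all four. A real goal-setting study would also weigh how many students are flagged unnecessarily.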

Variability Associated with the FORMER Goals
[Figures (two slides): DDS percentile ranks associated with the former goals at beginning, middle, and end points]
Source for Percentiles: Cummings, Kennedy, Otterstedt, Baker, & Kame’enui, 2011

Why does this negatively impact students and schools?
• Complicates school-level planning and coordination of intervention efforts
  – The former benchmark goals vary widely across measures, grades, and times of the year.
• Makes evaluating progress within and across school years very unclear
  – When a non-standard linking procedure is used, the actual “value” of the former goals is inconsistent across grades and measures.

Recommended Linking Procedure for Establishing Goals
[Diagram: a single Outcome linked to three sets of Goals & Cut Points]

SUMMARY
New goals were needed to assist schools in making sound educational decisions.
DMG former goals are problematic because they:
1. Miss substantial numbers of children who need intervention and provide a false level of confidence
2. Are based on a sample that does not represent current U.S. public schools in terms of region, ethnicity, and SES
3. Did not use a consistent or valid process to determine goals and cut-points

The UO DIBELS Data System is committed to providing teachers with the tools they need to meet the needs of all students.
The UO Recommended Goals are research-based to support schools in making educational decisions they can have confidence in that are in the best interest of their students.

References
American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (1999). Standards for Educational and Psychological Testing. Washington, DC: American Psychological Association.
Cummings, K.D., Kennedy, P.C., Otterstedt, J., Baker, S.K., & Kame’enui, E.J. (2011). DIBELS Data System: 2010-2011 Percentile Ranks for DIBELS Next Benchmark Assessments (Technical Report 1101). Eugene, OR: University of Oregon.
Dynamic Measurement Group. (2011). DIBELS Next Technical Manual. Eugene, OR: Author.
Joint Committee on Testing Practices. (2004). Code of Fair Testing Practices in Education. Washington, DC: Author. Retrieved from http://www.theaaceonline.com/codefair.pdf
National Center on Response to Intervention. (2011). Standard protocol for evaluating response to intervention tools: Screening reading and math [Screening tool evaluation protocol]. Washington, DC: Author.
Pearson Education, Inc. (2007). Stanford Achievement Test, 10th Edition (SAT10): Normative update. Upper Saddle River, New Jersey: Author.

Thank you!
For free resources please visit:
DIBELS Data System Research and Training pages
https://dibels.uoregon.edu
You can call us at (888) 497-4290.
We’re here to support you!