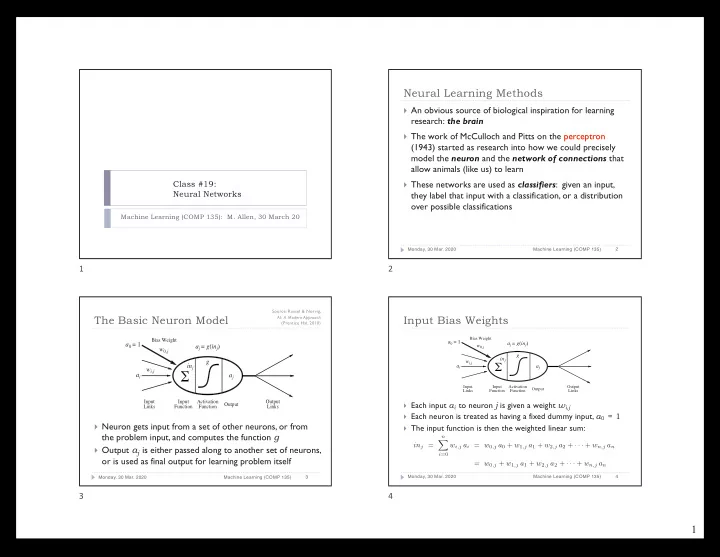

Class #19: Neural Networks
Machine Learning (COMP 135): M. Allen, Monday, 30 March 2020

Neural Learning Methods

- An obvious source of biological inspiration for learning research: the brain.
- The work of McCulloch and Pitts (1943), the precursor of the perceptron, started as research into how we could precisely model the neuron and the network of connections that allow animals (like us) to learn.
- These networks are used as classifiers: given an input, they label that input with a classification, or a distribution over possible classifications.

The Basic Neuron Model

[Figure: a single neuron $j$. Input links carry activations $a_i$ with weights $w_{i,j}$, plus a bias weight $w_{0,j}$ on the fixed input $a_0 = 1$. The input function $\Sigma$ computes $\mathit{in}_j$, and the activation function $g$ produces the output $a_j = g(\mathit{in}_j)$, which is sent along the output links. Source: Russell & Norvig, AI: A Modern Approach (Prentice Hall, 2010).]

- A neuron gets input from a set of other neurons, or from the problem input, and computes the function $g$.
- Output $a_j$ is either passed along to another set of neurons, or is used as the final output for the learning problem itself.

Input Bias Weights

- Each input $a_i$ to neuron $j$ is given a weight $w_{i,j}$.
- Each neuron is treated as having a fixed dummy input, $a_0 = 1$.
- The input function is then the weighted linear sum:

$$
\begin{aligned}
\mathit{in}_j = \sum_{i=0}^{n} w_{i,j}\, a_i &= w_{0,j}\, a_0 + w_{1,j}\, a_1 + w_{2,j}\, a_2 + \cdots + w_{n,j}\, a_n \\
&= w_{0,j} + w_{1,j}\, a_1 + w_{2,j}\, a_2 + \cdots + w_{n,j}\, a_n
\end{aligned}
$$
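As a minimal sketch of the input function, the weighted sum with the dummy input $a_0 = 1$ could be computed as follows. The function name `weighted_input` and the example weights are illustrative assumptions, not part of the slides.

```python
def weighted_input(weights, activations):
    """Return in_j = sum over i of w_{i,j} * a_i.

    weights     -- [w_0, w_1, ..., w_n], where w_0 is the bias weight
    activations -- [a_1, ..., a_n]; the dummy input a_0 = 1 is added here
    """
    inputs = [1.0] + list(activations)  # prepend the fixed dummy input a_0 = 1
    if len(inputs) != len(weights):
        raise ValueError("expected one weight per input, plus one bias weight")
    return sum(w * a for w, a in zip(weights, inputs))


# Hypothetical example: bias weight 0.5, two inputs with weights 2.0 and -1.0.
in_j = weighted_input([0.5, 2.0, -1.0], [1.0, 3.0])
print(in_j)  # 0.5 + 2.0*1.0 + (-1.0)*3.0
```

Folding the bias into the weight list this way means the bias needs no special handling: it is just the weight on an input that is always 1.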

We’ve Seen This Before!

- The weighted linear sum of inputs, with dummy input $a_0 = 1$, is just a form of the dot product that our classifiers have been using all along.
- Remember that the “neuron” here is just another way of looking at the perceptron idea we already discussed.

$$
\mathit{in}_j = \sum_{i=0}^{n} w_{i,j}\, a_i = w_{0,j} + w_{1,j}\, a_1 + w_{2,j}\, a_2 + \cdots + w_{n,j}\, a_n = \mathbf{w}_j \cdot \mathbf{a}
$$

Neuron Output Functions

- While the inputs to any neuron are treated in a linear fashion, the output function $g$ need not be linear.
- The power of neural nets comes from the fact that we can combine large numbers of neurons together to compute any function (linear or not) that we choose.

The Perceptron Threshold Function

- One possible function is the binary threshold, which is suitable for “firm” classification problems, and causes the neuron to activate based on a simple binary function:

$$
g(\mathit{in}_j) =
\begin{cases}
1 & \text{if } \mathit{in}_j \geq 0 \\
0 & \text{otherwise}
\end{cases}
$$

The Sigmoid Activation Function

- A function that has been more often used in neural networks is the logistic (also known as the sigmoid), as seen before.
- This gives us a “soft” value, which we can often interpret as the probability of belonging to some output class:

$$
g(\mathit{in}_j) = \frac{1}{1 + e^{-\mathit{in}_j}}
$$
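The two activation functions can be sketched in a few lines of Python. The function names are illustrative, and the two-branch form of `sigmoid` is an added implementation choice (to avoid overflow in `math.exp` for large negative inputs), not something the slides specify.

```python
import math


def threshold(in_j):
    """Binary threshold: the neuron fires (1) when in_j >= 0, else 0."""
    return 1 if in_j >= 0 else 0


def sigmoid(in_j):
    """Logistic function 1 / (1 + e^(-in_j)), written to avoid overflow."""
    if in_j >= 0:
        return 1.0 / (1.0 + math.exp(-in_j))
    # For negative in_j, use the equivalent form e^in_j / (1 + e^in_j).
    e = math.exp(in_j)
    return e / (1.0 + e)


for value in (-2.0, 0.0, 2.0):
    print(value, threshold(value), sigmoid(value))
```

The threshold gives a hard 0/1 decision, while the sigmoid maps the same weighted sum to a value strictly between 0 and 1, equal to 0.5 exactly at the threshold point $\mathit{in}_j = 0$.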

Power of Perceptron Networks

- A single-layer network combines a linear function of input weights with the non-linear output function.
- If we threshold the output, we have a boolean (1/0) function.
- This is sufficient to compute numerous linear functions.

| $x_1$ | $x_2$ | $x_1$ OR $x_2$ | $x_1$ AND $x_2$ |
|---|---|---|---|
| 0 | 0 | 0 | 0 |
| 0 | 1 | 1 | 0 |
| 1 | 0 | 1 | 0 |
| 1 | 1 | 1 | 1 |

Power of Perceptron Networks

- A single-layer network with inputs for variables $(x_1, x_2)$, and bias term $(x_0 = 1)$, can compute the OR of its inputs.
- Threshold: $y = 1$ if the weighted sum $S \geq 0$; else $y = 0$.
- What weights can we apply to the three inputs to produce OR?
- One answer: $S = -0.5 + x_1 + x_2$

Power of Perceptron Networks

- What about the AND function instead?
- One answer: $S = -1.5 + x_1 + x_2$

Linear Separation with Perceptron Networks

- We can think of binary functions as dividing the $(x_1, x_2)$ plane.
- The ability to express such a function is analogous to the ability to linearly separate data in such regions.

[Figure: the four input points in the $(x_1, x_2)$ plane, marked by output value (1 or 0) for $x_1$ OR $x_2$, with a line separating the two classes.]
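The OR and AND answers above can be checked directly. This is a small sketch using the weights given on the slides and the threshold rule $y = 1$ if $S \geq 0$; the names `perceptron`, `OR_WEIGHTS`, and `AND_WEIGHTS` are illustrative.

```python
from itertools import product


def perceptron(weights, x1, x2):
    """Threshold unit: y = 1 if w0 + w1*x1 + w2*x2 >= 0, else 0."""
    w0, w1, w2 = weights
    s = w0 + w1 * x1 + w2 * x2  # weighted sum S, with bias input x0 = 1
    return 1 if s >= 0 else 0


# Weights (w0, w1, w2) taken from the slides: -0.5 + x1 + x2 and -1.5 + x1 + x2.
OR_WEIGHTS = (-0.5, 1.0, 1.0)
AND_WEIGHTS = (-1.5, 1.0, 1.0)

print("x1 x2 OR AND")
for x1, x2 in product((0, 1), repeat=2):
    print(x1, x2, perceptron(OR_WEIGHTS, x1, x2), perceptron(AND_WEIGHTS, x1, x2))
```

Only the bias weight differs between the two functions: moving it from -0.5 to -1.5 shifts the separating line so that a single active input is no longer enough to cross the threshold.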

Linear Separation with Perceptron Networks

- We can think of binary functions as dividing the $(x_1, x_2)$ plane.
- The ability to express such a function is analogous to the ability to linearly separate data in such regions.

[Figure: the four input points in the $(x_1, x_2)$ plane, marked by output value (1 or 0) for $x_1$ AND $x_2$, with a line separating the two classes.]

Functions with Non-Linear Boundaries

- There are some functions that cannot be expressed using a single layer of linear weighted inputs and a non-linear output.
- Again, this is analogous to the inability to linearly separate data in some cases.

| $x_1$ | $x_2$ | $x_1$ AND $x_2$ | $x_1$ XOR $x_2$ |
|---|---|---|---|
| 0 | 0 | 0 | 0 |
| 0 | 1 | 0 | 1 |
| 1 | 0 | 0 | 1 |
| 1 | 1 | 1 | 0 |

[Figure: two plots of the $(x_1, x_2)$ plane with points marked by output value (1 or 0). For $x_1$ AND $x_2$ a single line separates the classes; for $x_1$ XOR $x_2$ no single line can.]

MLPs for Non-Linear Boundaries

- Neural networks gain expressive power because they can have more than one layer.
- A multi-layer perceptron has one or more hidden layers between input and output.
- Each hidden node applies a non-linear activation function, producing output that it sends along to the next layer.
- In such cases, much more complex functions are possible, corresponding to non-linear decision boundaries (as in the current homework assignment).

[Figure: (a) a non-linear decision boundary in the $(x_1, x_2)$ plane; (b) a network with inputs $x_1$, $x_2$, hidden nodes $h_1$, $h_2$, and output $y$.]

Review: Properties of the Sigmoid Function

- The sigmoid takes its name from the shape of its plot.
- It always has a value in the range $0 < g(\mathit{in}_j) < 1$.
- The function is everywhere differentiable, and has a derivative that is easy to calculate, which turns out to be useful for learning:

$$
g(\mathit{in}_j) = \frac{1}{1 + e^{-\mathit{in}_j}}, \qquad g'(\mathit{in}_j) = g(\mathit{in}_j)\,\bigl(1 - g(\mathit{in}_j)\bigr)
$$

[Figure: plot of the sigmoid for inputs from about -6 to 6, rising from near 0 to near 1 and passing through 0.5 at input 0.]
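To illustrate how a hidden layer makes XOR expressible, here is a minimal sketch of a two-layer network of threshold units with hidden nodes $h_1$ and $h_2$ and output $y$. The weights are hand-picked assumptions for illustration (they are not learned, and they are not given in the slides): $h_1$ reuses the OR weights and $h_2$ the AND weights from the earlier slides, and the output fires when $h_1$ is on and $h_2$ is off.

```python
def unit(weights, inputs):
    """Threshold unit: 1 if bias + weighted sum of inputs >= 0, else 0."""
    bias, *ws = weights
    s = bias + sum(w * x for w, x in zip(ws, inputs))
    return 1 if s >= 0 else 0


# Hand-picked weights (bias, w1, w2); assumptions for illustration only.
H1_WEIGHTS = (-0.5, 1.0, 1.0)   # hidden node h1 computes x1 OR x2
H2_WEIGHTS = (-1.5, 1.0, 1.0)   # hidden node h2 computes x1 AND x2
Y_WEIGHTS = (-0.5, 1.0, -1.0)   # output y computes h1 AND (NOT h2)


def xor_network(x1, x2):
    """Forward pass: inputs -> hidden layer (h1, h2) -> output y."""
    h1 = unit(H1_WEIGHTS, (x1, x2))
    h2 = unit(H2_WEIGHTS, (x1, x2))
    return unit(Y_WEIGHTS, (h1, h2))


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, xor_network(x1, x2))
```

Each hidden node still draws a single straight line in the $(x_1, x_2)$ plane; the output node combines the two half-planes into the band between the lines, which is a region no single line could pick out.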
