Foundations of Machine Learning
CentraleSupélec Paris, Fall 2017
8. Nearest neighbors
Chloé-Agathe Azencott
Centre for Computational Biology, Mines ParisTech
chloe-agathe.azencott@mines-paristech.fr

Practical matters
● Class representatives
  – William PALMER william.palmer@student.ecp.fr
  – Léonard BOUSSIOUX leonard.boussioux@student.ecp.fr
● Kaggle project

Learning objectives
● Implement the nearest-neighbor and k-nearest-neighbors algorithms.
● Compute distances between real-valued vectors as well as between objects represented by categorical features.
● Define the decision boundary of the nearest-neighbor algorithm.
● Explain why kNN might not work well in high dimension.

Nearest neighbors

● How would you color the blank circles?

Partitioning the space
The training data partitions the entire space.

Nearest neighbor
● Learning:
  – Store all the training examples.
● Prediction:
  – For x: predict the label of the training example closest to it.

k nearest neighbors
● Learning:
  – Store all the training examples.
● Prediction:
  – Find the k training examples closest to x.
  – Classification: majority vote. Predict the most frequent label among the k neighbors.
  – Regression: predict the average of the labels of the k neighbors.
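The two prediction rules above can be sketched in a few lines of plain Python. This is a minimal brute-force illustration, not an optimized implementation: the function names and the toy data are made up for the example, and ties in the vote are broken in favor of the label encountered first among the neighbors.

```python
import math
from collections import Counter

def euclidean(u, v):
    """Euclidean distance between two equal-length real-valued vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def k_nearest(X_train, x, k, dist=euclidean):
    """Indices of the k training examples closest to x (distance ties keep index order)."""
    order = sorted(range(len(X_train)), key=lambda i: dist(X_train[i], x))
    return order[:k]

def knn_classify(X_train, y_train, x, k=1, dist=euclidean):
    """Majority vote among the k nearest neighbors."""
    votes = Counter(y_train[i] for i in k_nearest(X_train, x, k, dist))
    return votes.most_common(1)[0][0]

def knn_regress(X_train, y_train, x, k=1, dist=euclidean):
    """Average label of the k nearest neighbors."""
    idx = k_nearest(X_train, x, k, dist)
    return sum(y_train[i] for i in idx) / len(idx)

# Toy data, invented for illustration only.
X = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (5.0, 5.0), (6.0, 5.0)]
y_class = ["red", "red", "red", "blue", "blue"]
y_value = [1.0, 2.0, 3.0, 10.0, 12.0]

print(knn_classify(X, y_class, (0.5, 0.5), k=3))  # the 3 nearest points are all "red"
print(knn_regress(X, y_value, (5.5, 5.0), k=2))   # mean of 10.0 and 12.0
```

Learning is just keeping `X` and the labels around; all the work happens at prediction time. Setting `k=1` gives the nearest-neighbor rule.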

Choice of k
● Small k: noisy predictions.
  The idea behind using more than 1 neighbor is to average out the noise.
● Large k: computationally intensive.
  If k = n, then we predict
  – for classification: the majority class
  – for regression: the average value
● Set k by cross-validation.
● Heuristic: k ≈ √n
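As a rough sketch of "set k by cross-validation", the snippet below scores a few candidate values of k by leave-one-out cross-validation and compares the winner with the √n heuristic. The synthetic two-blob data, the candidate list and the helper names are assumptions made for the example; leave-one-out is only one of several possible cross-validation schemes.

```python
import math
import random
from collections import Counter

def knn_predict(X_train, y_train, x, k):
    """Brute-force kNN classification by majority vote."""
    order = sorted(range(len(X_train)), key=lambda i: math.dist(X_train[i], x))
    votes = Counter(y_train[i] for i in order[:k])
    return votes.most_common(1)[0][0]

def loo_accuracy(X, y, k):
    """Leave-one-out accuracy: predict each point from all the other points."""
    correct = 0
    for i in range(len(X)):
        X_rest = X[:i] + X[i + 1:]
        y_rest = y[:i] + y[i + 1:]
        correct += knn_predict(X_rest, y_rest, X[i], k) == y[i]
    return correct / len(X)

# Synthetic data (assumption): two overlapping Gaussian blobs, 30 points each.
rng = random.Random(0)
X = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(30)] + \
    [(rng.gauss(2, 1), rng.gauss(2, 1)) for _ in range(30)]
y = [0] * 30 + [1] * 30

candidates = [1, 3, 5, 7, 9, 15]
scores = {k: loo_accuracy(X, y, k) for k in candidates}
best_k = max(scores, key=scores.get)

print("LOO accuracy per k:", scores)
print("selected k:", best_k, "| heuristic sqrt(n):", round(math.sqrt(len(X))))
```

Odd values of k are used here to avoid tied votes in this two-class problem.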

Non-parametric learning
● Non-parametric learning algorithm: the complexity of the decision function grows with the number of data points.
● Contrast with linear regression (≈ as many parameters as features).
● Usually, the decision function is expressed directly in terms of the training examples.
● Examples:
  – kNN (this chapter)
  – tree-based methods (Chap. 9)
  – SVM (Chap. 10)

Instance-based learning
● Learning:
  – Store the training instances.
● Predicting:
  – Compute the label for a new instance based on its similarity with the stored instances (this is where the magic happens!).
● Also called lazy learning.
● Similar to case-based reasoning:
  – doctors treating a patient based on how patients with similar symptoms were treated,
  – judges ruling court cases based on legal precedent.

Computing distances & similarities

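Distances between instances
● Distance: a function $d$ that maps a pair of instances to a non-negative real number such that, for all $x, z, t$:
  – $d(x, z) = 0$ if and only if $x = z$
  – $d(x, z) = d(z, x)$ (symmetry)
  – $d(x, t) \leq d(x, z) + d(z, t)$ (triangle inequality)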

Distances between instances
● Euclidean distance: $d(x, z) = \sqrt{\sum_{j=1}^{p} (x_j - z_j)^2}$
● Manhattan distance: $d(x, z) = \sum_{j=1}^{p} |x_j - z_j|$
  Why is this called the Manhattan distance?

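Distances between instances
● Euclidean distance
● Manhattan distance
● Lq-norm: Minkowski distance $d_q(x, z) = \left( \sum_{j=1}^{p} |x_j - z_j|^q \right)^{1/q}$
  – L1 = Manhattan.
  – L2 = Euclidean.
  – L∞: $d_\infty(x, z) = \max_{j} |x_j - z_j|$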
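A small sketch of the Minkowski family may help check the special cases by hand. The function name and the convention of passing `math.inf` for L∞ are choices made for this example.

```python
import math

def minkowski(u, v, q=2.0):
    """L_q (Minkowski) distance; q = math.inf gives the L-infinity distance."""
    diffs = [abs(a - b) for a, b in zip(u, v)]
    if math.isinf(q):
        return max(diffs)  # limit of the L_q distance as q grows
    return sum(d ** q for d in diffs) ** (1.0 / q)

u, v = (0.0, 0.0), (3.0, 4.0)
print(minkowski(u, v, q=1))         # Manhattan: 3 + 4
print(minkowski(u, v, q=2))         # Euclidean: sqrt(9 + 16)
print(minkowski(u, v, q=math.inf))  # largest coordinate difference
```

As q increases, the largest coordinate difference dominates the sum, which is why the L∞ distance is the maximum coordinate-wise difference.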

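Similarity between instances
● Pearson's correlation: $\rho(x, z) = \frac{\sum_{j=1}^{p} (x_j - \bar{x})(z_j - \bar{z})}{\sqrt{\sum_{j=1}^{p} (x_j - \bar{x})^2} \, \sqrt{\sum_{j=1}^{p} (z_j - \bar{z})^2}}$
● Assuming the data is centered: $\rho(x, z) = \frac{\langle x, z \rangle}{\|x\| \, \|z\|}$
  Geometric interpretation?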

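Similarity between instances
● Pearson's correlation (centered data): $\rho(x, z) = \frac{\langle x, z \rangle}{\|x\| \, \|z\|}$
● Cosine similarity: $\cos \theta = \frac{\langle x, z \rangle}{\|x\| \, \|z\|}$, where θ is the angle between x and z. The dot product can be used to measure similarities.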
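To make the link between the two similarities concrete, the sketch below computes Pearson's correlation as the cosine similarity of the centered vectors. The helper names and the example vectors are illustrative assumptions; constant or all-zero vectors are not handled, since their norm is zero.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between u and v: <u, v> / (||u|| ||v||)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def center(u):
    """Subtract the mean of the components of u from each component."""
    mean = sum(u) / len(u)
    return [a - mean for a in u]

def pearson(u, v):
    """Pearson's correlation = cosine similarity of the centered vectors."""
    return cosine_similarity(center(u), center(v))

x = [1.0, 2.0, 3.0, 4.0]
z = [2.0, 4.0, 5.0, 9.0]
print("Pearson:", pearson(x, z))
print("cosine (uncentered):", cosine_similarity(x, z))
```

The two values differ in general; they coincide once both vectors have zero mean.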

Categorical features
● Ex: a feature that can take 5 values
  – Sports
  – World
  – Culture
  – Internet
  – Politics
● Naive encoding: x1 in {1, 2, 3, 4, 5}
  – Why is Sports closer to World than to Politics?
● One-hot encoding: x1, x2, x3, x4, x5
  – Sports: [1, 0, 0, 0, 0]
  – Internet: [0, 0, 0, 1, 0]
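The problem with the naive encoding shows up as soon as distances are computed. In this sketch (helper names are made up for the example), the integer encoding places Sports closer to World than to Politics, while the one-hot encoding puts every pair of distinct categories at the same distance.

```python
import math

CATEGORIES = ["Sports", "World", "Culture", "Internet", "Politics"]

def naive_encode(value):
    """Integer code in {1, ..., 5}: imposes an arbitrary order on the categories."""
    return [CATEGORIES.index(value) + 1]

def one_hot(value):
    """One binary feature per category, exactly one of them set to 1."""
    return [1 if c == value else 0 for c in CATEGORIES]

for other in ("World", "Politics"):
    d_naive = math.dist(naive_encode("Sports"), naive_encode(other))
    d_onehot = math.dist(one_hot("Sports"), one_hot(other))
    print(f"Sports vs {other}: naive = {d_naive}, one-hot = {d_onehot:.3f}")
```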

Categorical features
● Represent an object as the list of presence/absence (or counts) of the features that appear in it.
● Example: small molecules
  features = atoms and bonds of a certain type
  – C, H, S, O, N...
  – O-H, O=C, C-N...

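Binary representation
0 1 1 0 0 1 0 0 0 1 0 1 0 0 1
  – 1st bit = 0: no occurrence of the 1st feature
  – 10th bit = 1: 1+ occurrences of the 10th feature
● Hamming distance: number of bits that are different.
  Equivalent to $d(x, z) = \sum_{j=1}^{p} (x_j \oplus z_j)$ (⊕ = XOR), which for binary vectors equals the Manhattan distance $\sum_{j=1}^{p} |x_j - z_j|$.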

Binary representation
0 1 1 0 0 1 0 0 0 1 0 1 0 0 1
● Tanimoto/Jaccard similarity: number of shared features (normalized)
  $s(x, z) = \frac{\sum_{j=1}^{p} (x_j \text{ AND } z_j)}{\sum_{j=1}^{p} (x_j \text{ OR } z_j)}$

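Counts representation
0 1 2 0 0 1 0 0 0 4 0 1 0 0 7
  – 1st entry = 0: no occurrence of the 1st feature
  – 10th entry = 4: number of occurrences of the 10th feature
● MinMax similarity: number of shared features (normalized)
  $s(x, z) = \frac{\sum_{j=1}^{p} \min(x_j, z_j)}{\sum_{j=1}^{p} \max(x_j, z_j)}$
● If x and z are binary, MinMax and Tanimoto are equivalent.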

Categorical features
● Compute the Hamming distance and the Tanimoto and MinMax similarities between these objects (binary / counts representation):
  100011010110 / 300011010120
  111011011110 / 211021011120
  111011010100 / 311011010100

Categorical features
● A = 100011010110 / 300011010120
● B = 111011011110 / 211021011120
● C = 111011010100 / 311011010100
● Hamming distance
  d(A, B) = 3    d(A, C) = 3    d(B, C) = 2
● Tanimoto similarity
  s(A, B) = 6/9 = 0.67    s(A, C) = 5/8 = 0.63    s(B, C) = 7/9 = 0.78
● MinMax similarity
  s(A, B) = 8/13 = 0.62    s(A, C) = 7/11 = 0.64    s(B, C) = 8/13 = 0.62
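The worked example above can be checked with a short script. The function names are chosen for this sketch; Hamming and Tanimoto are applied to the binary strings and MinMax to the count strings of A, B and C. Two all-zero vectors would cause a division by zero and are not handled here.

```python
def digits(s):
    """Turn a string such as "300011010120" into a list of integer features."""
    return [int(c) for c in s]

def hamming(x, z):
    """Number of positions at which the two vectors differ."""
    return sum(a != b for a, b in zip(x, z))

def tanimoto(x, z):
    """Shared features divided by features present in at least one object."""
    shared = sum(1 for a, b in zip(x, z) if a and b)
    present = sum(1 for a, b in zip(x, z) if a or b)
    return shared / present

def minmax(x, z):
    """Sum of coordinate-wise minima divided by sum of coordinate-wise maxima."""
    return sum(min(a, b) for a, b in zip(x, z)) / sum(max(a, b) for a, b in zip(x, z))

binary = {"A": digits("100011010110"), "B": digits("111011011110"), "C": digits("111011010100")}
counts = {"A": digits("300011010120"), "B": digits("211021011120"), "C": digits("311011010100")}

for p, q in (("A", "B"), ("A", "C"), ("B", "C")):
    print(p, q,
          "Hamming:", hamming(binary[p], binary[q]),
          "Tanimoto:", round(tanimoto(binary[p], binary[q]), 2),
          "MinMax:", round(minmax(counts[p], counts[q]), 2))
```

On binary inputs, `minmax` returns the same value as `tanimoto`, since min and max reduce to AND and OR.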

Categorical features
● When new data has unknown features: ignore them.
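One way to read "ignore them" is to fix the feature vocabulary from the training objects and drop anything else at encoding time. The sketch below assumes objects are given as lists of feature names; the helper names and the toy molecule-like objects are hypothetical.

```python
def fit_vocabulary(objects):
    """Sorted list of the features seen in the training objects."""
    return sorted({f for obj in objects for f in obj})

def encode(obj, vocabulary):
    """Count vector over the known vocabulary; features outside it are dropped."""
    return [obj.count(f) for f in vocabulary]

# Hypothetical training objects described by atom and bond features.
train = [["C", "H", "H", "O-H"], ["C", "C", "N", "C-N"]]
vocabulary = fit_vocabulary(train)

# "S" and "O=C" never appeared in training, so they do not contribute.
new_object = ["C", "S", "O=C", "H"]
print(vocabulary)
print(encode(new_object, vocabulary))
```

Every encoded object then has the same length as the training vectors, so the distances and similarities defined earlier remain applicable.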

Back to nearest neighbors

Advantages of kNN
● Training is very fast
  – Just store the training examples.
  – Can use smart indexing procedures to speed up testing (at the cost of slower training).
● Keeps the training data
  – Useful if we want to do something else with it.
● Rather robust to noisy data (averaging k votes)
● Can learn complex functions

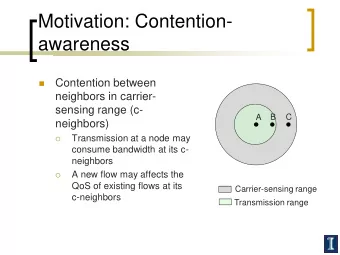

Drawbacks of kNN
● Memory requirements
● Prediction can be slow.
  – Complexity of labeling 1 new data point: $O(np)$ with a brute-force scan (n training points, p features).
  – But kNN works best with lots of samples...
  → Efficient data structures (k-d trees, ball trees)
    ● construction: space $O(n)$, time $O(n \log n)$
    ● query: $O(\log n)$ on average in low dimension
  → Approximate solutions based on hashing
● kNN is fooled by irrelevant attributes.
  E.g. p = 1000, only 10 features are relevant; distances become meaningless.
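To give an idea of how a k-d tree avoids scanning every training point, here is a minimal sketch of construction and 1-nearest-neighbor search. It is an illustration under simplifying assumptions (dict-based nodes, a full sort at each level, a single neighbor returned), not a production data structure.

```python
import math
import random

def build_kdtree(points, depth=0):
    """Recursively split the points at the median along one axis per level."""
    if not points:
        return None
    axis = depth % len(points[0])
    points = sorted(points, key=lambda pt: pt[axis])
    mid = len(points) // 2
    return {
        "point": points[mid],
        "axis": axis,
        "left": build_kdtree(points[:mid], depth + 1),       # coordinates <= median
        "right": build_kdtree(points[mid + 1:], depth + 1),  # coordinates >= median
    }

def nearest(node, x, best=None):
    """Return (distance, point) for the stored point closest to x."""
    if node is None:
        return best
    d = math.dist(node["point"], x)
    if best is None or d < best[0]:
        best = (d, node["point"])
    diff = x[node["axis"]] - node["point"][node["axis"]]
    near, far = (node["left"], node["right"]) if diff < 0 else (node["right"], node["left"])
    best = nearest(near, x, best)
    # The far side can only hold a closer point if the splitting plane
    # is nearer to x than the best match found so far.
    if abs(diff) < best[0]:
        best = nearest(far, x, best)
    return best

rng = random.Random(0)
pts = [(rng.random(), rng.random()) for _ in range(200)]
tree = build_kdtree(pts)
query = (0.5, 0.5)
print(nearest(tree, query))
print(min((math.dist(pt, query), pt) for pt in pts))  # brute-force check
```

Sorting at every level makes this construction slightly slower than the best known bound, which relies on linear-time median selection. The pruning test also becomes less effective as the number of dimensions grows.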

Decision boundary of kNN
● Classification
● Decision boundary: line separating the positive from the negative regions.
● What decision boundary is kNN building?

Voronoi tessellation
● Voronoi cell of x:
  – set of all points of the space closer to x than to any other point of the training set
  – polyhedron
● Voronoi tessellation of the space: union of all Voronoi cells.
Draw the Voronoi cell of the blue dot.

Voronoi tessellation
● The Voronoi tessellation defines the decision boundary of the 1-NN: the boundary is made of the faces shared by cells whose training points have different labels.
● The kNN also partitions the space (in a more complex way).
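A short derivation, assuming the Euclidean distance, shows why Voronoi cells are polyhedra. For two training points $a$ and $b$ (names chosen for this sketch), the points equidistant from both form a hyperplane:

```latex
\begin{align*}
\|x - a\|^2 = \|x - b\|^2
  &\iff \|x\|^2 - 2\langle a, x \rangle + \|a\|^2
      = \|x\|^2 - 2\langle b, x \rangle + \|b\|^2 \\
  &\iff 2\langle b - a, x \rangle = \|b\|^2 - \|a\|^2 .
\end{align*}
% Points closer to a than to b satisfy the corresponding half-space inequality:
\[
\|x - a\| < \|x - b\|
  \iff 2\langle b - a, x \rangle < \|b\|^2 - \|a\|^2 .
\]
```

The Voronoi cell of $a$ is the intersection of these half-spaces over all other training points $b$, hence a convex polyhedron. With another distance (e.g. Manhattan), the cells are generally not bounded by hyperplanes.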

Curse of dimensionality
● Remember from Chap. 3
● When p ↗, the proportion of a hypercube outside of its inscribed hypersphere approaches 1.
● Volume of a p-sphere of radius r: $V_p(r) = \frac{\pi^{p/2}}{\Gamma\left(\frac{p}{2} + 1\right)} r^p$
● What this means:
  – hyperspace is very big
  – all points are far apart
  – dimensionality reduction needed.
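The claim about the hypercube and its inscribed hypersphere can be checked numerically with the standard volume formula for a p-dimensional ball, $\pi^{p/2} r^p / \Gamma(p/2 + 1)$. The function names below are chosen for this sketch; the cube has side 2 so that the inscribed ball has radius 1.

```python
import math

def ball_volume(p, r=1.0):
    """Volume of a p-dimensional ball of radius r."""
    return math.pi ** (p / 2) * r ** p / math.gamma(p / 2 + 1)

def fraction_outside_inscribed_ball(p):
    """Share of the hypercube [-1, 1]^p lying outside the unit ball it contains."""
    return 1.0 - ball_volume(p, 1.0) / 2.0 ** p

for p in (1, 2, 3, 5, 10, 20):
    print(p, fraction_outside_inscribed_ball(p))
```

In 2 dimensions about a fifth of the square lies outside the disc; by 20 dimensions almost all of the cube's volume is in its corners, far from the center.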

kNN variants
● ε-ball neighbors
  – Instead of using the k nearest neighbors, use all points within a distance ε of the test point.
  – What if there are no such points?
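A possible sketch of the ε-ball variant for classification follows. Returning a caller-supplied `default` when the ball is empty is only one way to handle that case (abstaining or falling back to the overall majority class are others); it is an assumption of this example, as are the function name and the toy data.

```python
import math
from collections import Counter

def epsilon_ball_classify(X_train, y_train, x, eps, default=None):
    """Majority vote among all training points within distance eps of x."""
    labels = [label for pt, label in zip(X_train, y_train) if math.dist(pt, x) <= eps]
    if not labels:
        return default  # no training point falls inside the ball
    return Counter(labels).most_common(1)[0][0]

# Toy data, invented for illustration only.
X = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0)]
y = ["red", "red", "blue"]

print(epsilon_ball_classify(X, y, (0.0, 0.5), eps=1.0))
print(epsilon_ball_classify(X, y, (10.0, 10.0), eps=1.0, default="unknown"))
```

Unlike kNN, the number of voters now depends on the local density of the training data around the test point.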

kNN variants
● Weighted kNN
  – Weigh the vote of each neighbor according to its distance to the test point.
  – Variant: learn the optimal weights [e.g. Swamidass, Azencott et al. 2009, Influence Relevance Voter]
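As an illustration of distance-weighted voting, the sketch below uses inverse-distance weights, which is one common choice and an assumption of this example (the slide does not fix a weighting scheme). It does not implement the learned weights of the Influence Relevance Voter.

```python
import math
from collections import defaultdict

def weighted_knn_classify(X_train, y_train, x, k=3, eps=1e-12):
    """kNN vote where each neighbor counts for 1 / (distance + eps)."""
    order = sorted(range(len(X_train)), key=lambda i: math.dist(X_train[i], x))
    scores = defaultdict(float)
    for i in order[:k]:
        # eps avoids a division by zero when x coincides with a training point.
        scores[y_train[i]] += 1.0 / (math.dist(X_train[i], x) + eps)
    return max(scores, key=scores.get)

# Toy 1-D data, invented for illustration only.
X = [(0.0,), (1.0,), (1.1,)]
y = ["a", "b", "b"]

# With k = 3 an unweighted vote would pick "b" (2 votes against 1),
# but the single "a" neighbor is much closer to the query.
print(weighted_knn_classify(X, y, (0.1,), k=3))
```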
