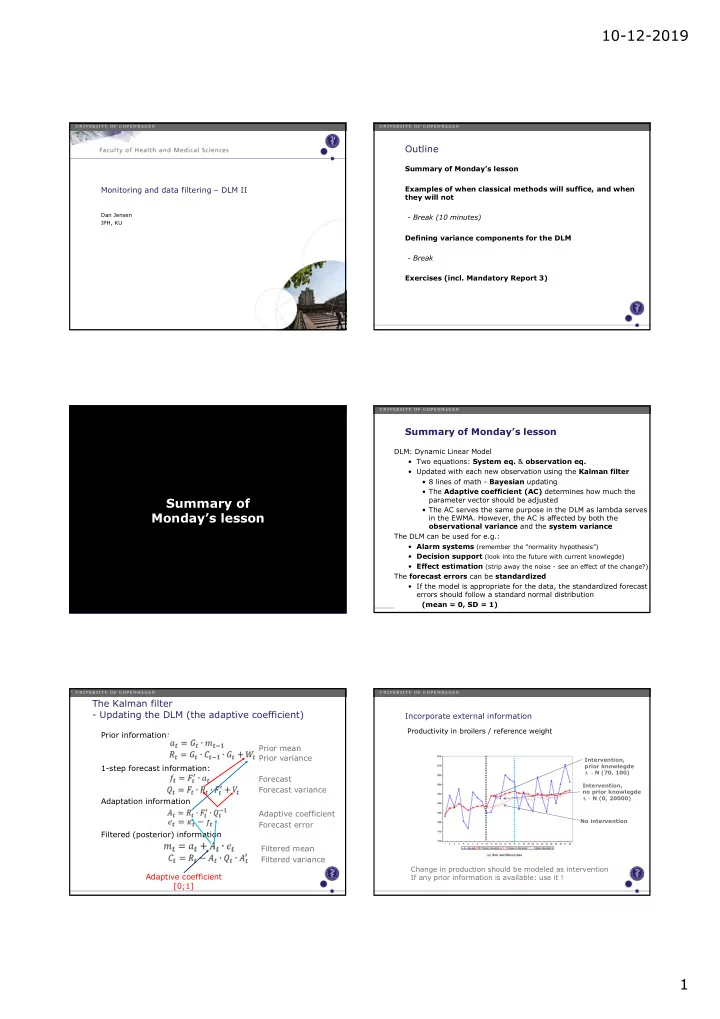

10-12-2019

Monitoring and data filtering – DLM II
Dan Jensen, IPH, KU

Outline
- Summary of Monday's lesson
- Examples of when classical methods will suffice, and when they will not
- Break (10 minutes)
- Defining variance components for the DLM
- Break
- Exercises (incl. Mandatory Report 3)

Summary of Monday's lesson
DLM: Dynamic Linear Model
• Two equations: the system equation and the observation equation
• Updated with each new observation using the Kalman filter
• 8 lines of math (Bayesian updating)
• The adaptive coefficient (AC) determines how much the parameter vector should be adjusted
• The AC serves the same purpose in the DLM as lambda serves in the EWMA. However, the AC is affected by both the observational variance and the system variance

The DLM can be used for, e.g.:
• Alarm systems (remember the "normality hypothesis")
• Decision support (look into the future with current knowledge)
• Effect estimation (strip away the noise: can we see an effect of the change?)

The forecast errors can be standardized:
• If the model is appropriate for the data, the standardized forecast errors should follow a standard normal distribution (mean = 0, SD = 1)

The Kalman filter: updating the DLM (the adaptive coefficient)
- Prior information: prior mean, prior variance
- 1-step forecast information: forecast, forecast variance
- Adaptation information: adaptive coefficient (between 0 and 1), forecast error
- Filtered (posterior) information: filtered mean, filtered variance

Incorporate external information: productivity in broilers / reference weight
- Intervention, prior knowledge: Δ ~ N(70, 100)
- Intervention, no prior knowledge: Δ ~ N(0, 20000)
- No intervention
A change in production should be modeled as an intervention. If any prior information is available: use it!
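As a minimal sketch of the 8 lines of Kalman filter math, assume the simplest DLM: a first-order polynomial (local-level) model where $F = G = 1$, so the parameter vector is a single level. One update then computes the prior mean $a_t = m_{t-1}$, prior variance $R_t = C_{t-1} + W$, forecast $f_t = a_t$, forecast variance $Q_t = R_t + V$, adaptive coefficient $A_t = R_t / Q_t$, forecast error $e_t = Y_t - f_t$, filtered mean $m_t = a_t + A_t e_t$ and filtered variance $C_t = R_t - A_t^2 Q_t$. The names `Step`, `kalman_step` and `run_filter`, and the way an intervention Δ ~ N(h, H) is added to the prior, are illustrative assumptions, not the course's own code.

```python
import math
from dataclasses import dataclass


@dataclass
class Step:
    """Quantities from one Kalman filter update (local-level DLM)."""
    a: float  # prior mean
    R: float  # prior variance
    f: float  # one-step forecast
    Q: float  # forecast variance
    A: float  # adaptive coefficient, in [0, 1]
    e: float  # forecast error
    u: float  # standardized forecast error e / sqrt(Q)
    m: float  # filtered (posterior) mean
    C: float  # filtered (posterior) variance


def kalman_step(m_prev, C_prev, y, V, W, delta_mean=0.0, delta_var=0.0):
    """One update of a local-level DLM (F = G = 1).

    delta_mean and delta_var describe an optional (hypothetical) intervention
    Delta ~ N(delta_mean, delta_var) added to the level before y is observed.
    """
    # Prior information (with intervention, if any)
    a = m_prev + delta_mean
    R = C_prev + W + delta_var
    # One-step forecast information
    f = a
    Q = R + V
    # Adaptation information
    A = R / Q
    e = y - f
    u = e / math.sqrt(Q)
    # Filtered (posterior) information
    m = a + A * e
    C = R - A * A * Q  # equals A * V for this model
    return Step(a, R, f, Q, A, e, u, m, C)


def run_filter(ys, m0, C0, V, W, interventions=None):
    """Filter a series; interventions maps time index -> (delta_mean, delta_var)."""
    interventions = interventions or {}
    m, C = m0, C0
    steps = []
    for t, y in enumerate(ys):
        dm, dv = interventions.get(t, (0.0, 0.0))
        step = kalman_step(m, C, y, V, W, dm, dv)
        steps.append(step)
        m, C = step.m, step.C
    return steps
```

With no prior knowledge (Δ ~ N(0, 20000)) the large intervention variance pushes the AC close to 1, so the filtered mean essentially jumps to the new observation. With an informative prior (Δ ~ N(70, 100)) the prior mean is already shifted by 70, so the forecast error stays small when the change matches expectations.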

Monitoring
• Monitor deviations from the model (the model removes auto-correlation)
• First of all: use standardized forecast errors, $u_t = e_t / \sqrt{Q_t}$
• Other monitoring methods, e.g. V-mask, Bayesian networks, neural networks, etc.

When are the classical methods enough?

Shewhart control chart and/or EWMA OK:
- The observations can (reasonably) be assumed to be mutually independent
  - I.e. no or low auto-correlation
  - If there is auto-correlation, but no systematic trend is expected: use the EWMA forecast errors
- Low observation frequency (e.g. quarterly or yearly)
  - Remember Thomas' slide on the ADG of slaughter pigs!
- High observation level (e.g. whole herd, not pens or individuals)
- External or prior information is not available or won't be considered
Examples:

DLM is needed:
- External and/or prior information should be considered (Bayesian updating)
- Systematic changes over time are expected
- High observation frequency, e.g. from sensors
- Low-level observations (individuals or smaller groups of animals)
Examples:
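A sketch of monitoring the standardized forecast errors with the two classical charts, assuming the errors are independent N(0, 1) when the model fits. The ±3 Shewhart limit, λ = 0.2 and the EWMA limit $L\sqrt{\lambda/(2-\lambda)}$ (the asymptotic standard deviation of an EWMA of independent unit-variance values, scaled by L = 3) are conventional choices assumed here, not values from the slides; the function names are hypothetical.

```python
import math


def shewhart_alarms(u, limit=3.0):
    """Indices where a standardized forecast error falls outside +/- limit."""
    return [t for t, x in enumerate(u) if abs(x) > limit]


def ewma_alarms(u, lam=0.2, L=3.0):
    """EWMA chart on standardized forecast errors (target 0, SD 1).

    Uses the asymptotic control limit L * sqrt(lam / (2 - lam)).
    """
    half_width = L * math.sqrt(lam / (2.0 - lam))
    z = 0.0  # EWMA starts at the target value 0
    alarms = []
    for t, x in enumerate(u):
        z = lam * x + (1.0 - lam) * z
        if abs(z) > half_width:
            alarms.append(t)
    return alarms
```

For a persistent shift of 1.5 SD (u_t = 1.5 at every step), `shewhart_alarms` raises no alarm, while `ewma_alarms` first signals at the fifth observation (index 4): the EWMA accumulates small, sustained deviations that a single-observation chart misses.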

Specification of variance components
• $C_0$: prior variance – how uncertain are we of our prior mean?
• $V$: observational variance – how uncertain are we of the observed values?
• $W$: system variance – how uncertain are we of the stability of the system?

Specification of variance components - Wt using discount factor
Discount factor (δ) as an aid to choosing $W_t$
• The discount factor δ (0 < δ < 1) can be used if W is unknown
• W is taken to be a fixed proportion of C: $R_t = C_{t-1} + W \;\rightarrow\; R_t = C_{t-1}/\delta$, i.e. $W_t = C_{t-1}(1-\delta)/\delta$
• For a process in control, we use the value of δ that minimizes the sum of the squared forecast errors $e_t$ (like lambda for the EWMA!)

Specification of variance components - Wt using discount factor
[Figure: daily gain example, delta = 0.01; annotation: "Meaningful range!"]
• High value of δ: small system (evolution) variance W, slow adaptation to new information
• Low value of δ: very adaptive model
• This is the opposite of how lambda works in the EWMA!
• NB: a lower δ can be used for modeling interventions!
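A sketch of choosing δ by minimizing the sum of squared forecast errors, assuming a local-level DLM (F = G = 1), a known V, and a simple grid of candidate values from 0.01 to 0.99. The function names and the grid are hypothetical illustrations; the prior variance follows the slide's $R_t = C_{t-1}/\delta$.

```python
def discount_sse(ys, delta, m0, C0, V):
    """Sum of squared one-step forecast errors for a local-level DLM in which
    the system variance is set by a discount factor: R_t = C_{t-1} / delta."""
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must lie in (0, 1)")
    m, C = m0, C0
    sse = 0.0
    for y in ys:
        R = C / delta   # prior variance; implies W_t = C_{t-1} * (1 - delta) / delta
        Q = R + V       # forecast variance
        A = R / Q       # adaptive coefficient
        e = y - m       # forecast error (the forecast is the prior mean m_{t-1})
        sse += e * e
        m = m + A * e   # filtered mean
        C = A * V       # filtered variance (= R - A^2 Q when F = G = 1)
    return sse


def best_delta(ys, m0, C0, V, grid=None):
    """Grid search for the delta that minimizes the SSE of the forecast errors."""
    if grid is None:
        grid = [i / 100 for i in range(1, 100)]  # assumed grid: 0.01, 0.02, ..., 0.99
    return min(grid, key=lambda d: discount_sse(ys, d, m0, C0, V))
```

Run `best_delta` on healthy, in-control learning data. A high δ keeps $R_t$ close to $C_{t-1}$ (small W, slow adaptation), which is the opposite direction from λ in the EWMA.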

Specification of variance components - Wt using discount factor - V based on 2-sided moving average
If you have "healthy/normal" data available for learning!
• For a process in control, we use the value of δ that minimizes the sum of the squared forecast errors $e_t$ (like lambda for the EWMA!)
[Figure: daily gain example; MA window here 15 obs. (7 on each side); value shown: 0.59]

Specification of variance components - other methods
The expectation maximization (EM) algorithm by Dempster et al. (1977). Assumes that learning data is available!
1. Start with some initial guess for V and W (and C0)
2. Run the DLM on the learning data
3. Apply the smoother to the raw and DLM-filtered data
4. Adjust the values of V and W
5. (Check the sum of squared errors (SSE))
6. Repeat until convergence or until the SSE is no longer reduced

Reference analysis, described in detail by West and Harrison (1997). If no learning data is available!
1. Estimate m_t, C_t, W and V based on the first few observations
2. For more details, see Example 8.7 on page 104 in the Advanced topics book.
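One plausible reading of "V based on 2-sided moving average", sketched under assumptions: V is estimated as the mean squared deviation of each observation from a centred moving average of 15 observations (7 on each side, as in the daily gain example), using only positions where the full window fits. The function name is hypothetical, and this is not necessarily the exact procedure used in the course.

```python
def ma_residual_variance(ys, half_window=7):
    """Estimate V as the mean squared deviation of each observation from a
    centred (two-sided) moving average of 2 * half_window + 1 observations."""
    k = 2 * half_window + 1
    if len(ys) < k:
        raise ValueError("need at least one full window of observations")
    resid = []
    for t in range(half_window, len(ys) - half_window):
        window = ys[t - half_window : t + half_window + 1]
        ma = sum(window) / k   # centred moving average around y_t
        resid.append(ys[t] - ma)
    return sum(r * r for r in resid) / len(resid)
```

The centred moving average follows slow level changes, so a linear trend gives residuals of zero and only short-term noise remains. Because each window includes $y_t$ itself, pure noise gives an expected value of $V(1 - 1/k)$; multiplying by $k/(k-1)$ is an optional correction, assumed here rather than taken from the slides.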
