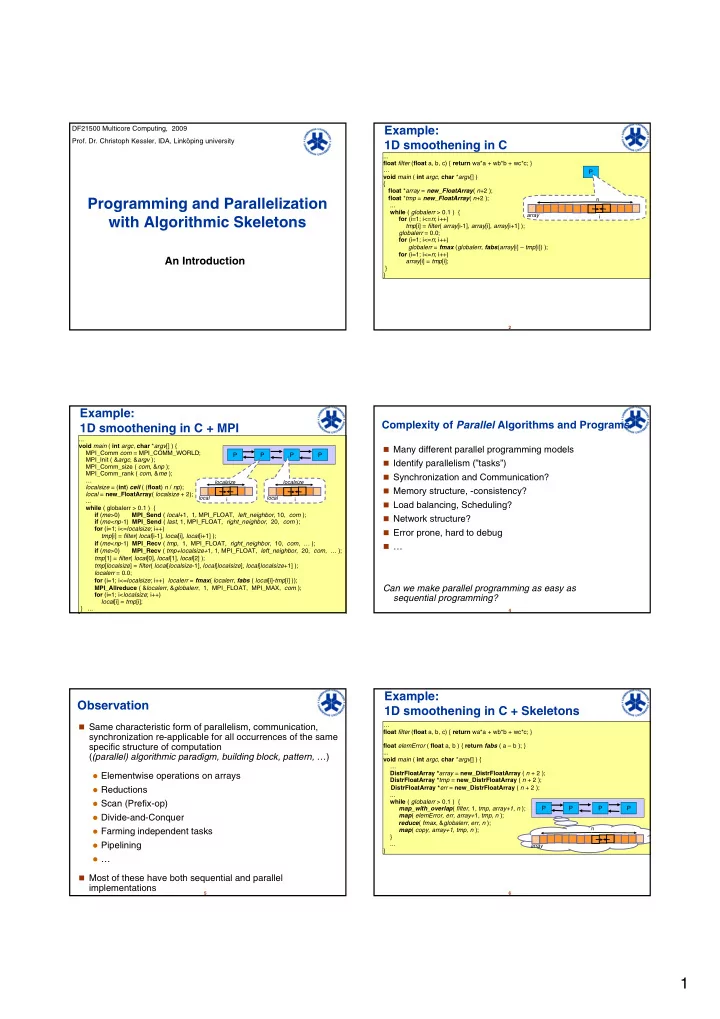

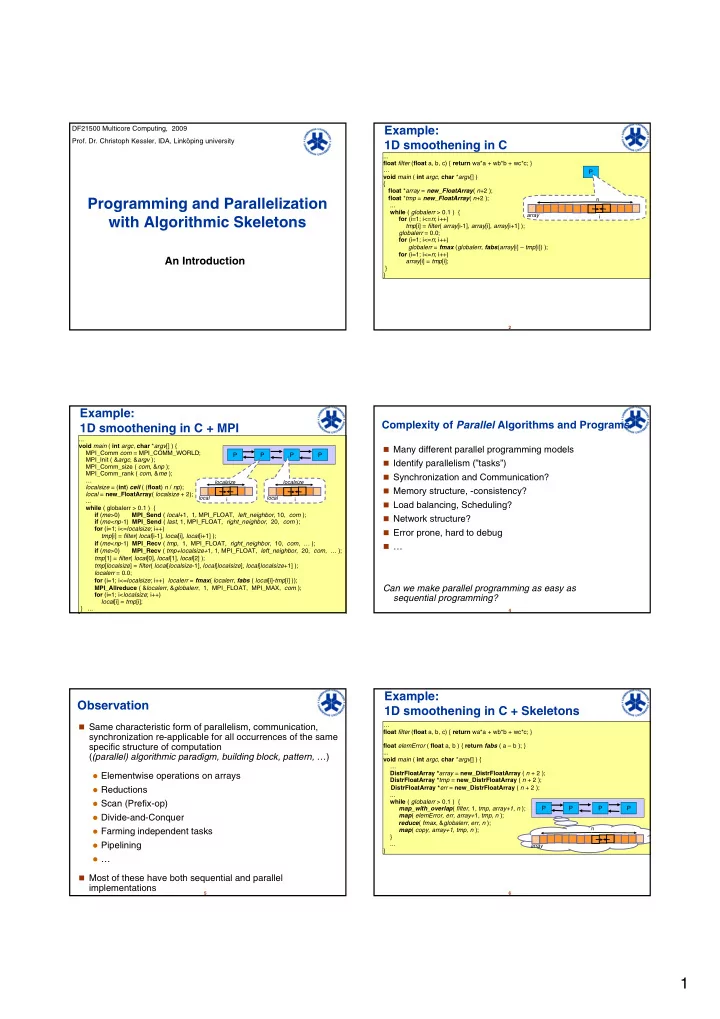

DF21500 Multicore Computing, 2009

Prof. Dr. Christoph Kessler, IDA, Linköping University

Programming and Parallelization with Algorithmic Skeletons: An Introduction

Example: 1D smoothing in C

[Figure: a single processor P working on an array of n elements, indexed by i]

    ...
    float filter ( float a, float b, float c ) { return wa*a + wb*b + wc*c; }
    ...
    int main ( int argc, char *argv[] )
    {
      float *array = new_FloatArray ( n+2 );
      float *tmp   = new_FloatArray ( n+2 );
      ...
      while ( globalerr > 0.1 ) {
        for (i=1; i<=n; i++)
          tmp[i] = filter ( array[i-1], array[i], array[i+1] );
        globalerr = 0.0;
        for (i=1; i<=n; i++)
          globalerr = fmax ( globalerr, fabs ( array[i] - tmp[i] ) );
        for (i=1; i<=n; i++)
          array[i] = tmp[i];
      }
    }

Complexity of Parallel Algorithms and Programs

- Many different parallel programming models
- Identify parallelism ("tasks")
- Synchronization and communication?
- Memory structure, memory consistency?
- Load balancing, scheduling?
- Network structure?
- Error prone, hard to debug
- ...

Can we make parallel programming as easy as sequential programming?

Example: 1D smoothing in C + MPI

[Figure: four processors P, each holding a block `local` of `localsize` elements, indexed by i]

    ...
    int main ( int argc, char *argv[] )
    {
      MPI_Comm com = MPI_COMM_WORLD;
      MPI_Init ( &argc, &argv );
      MPI_Comm_size ( com, &np );
      MPI_Comm_rank ( com, &me );
      ...
      localsize = (int) ceil ( (float) n / np );
      local = new_FloatArray ( localsize + 2 );
      ...
      while ( globalerr > 0.1 ) {
        /* send own boundary elements to the neighbors */
        if ( me > 0 )    MPI_Send ( local+1, 1, MPI_FLOAT, left_neighbor, 10, com );
        if ( me < np-1 ) MPI_Send ( last, 1, MPI_FLOAT, right_neighbor, 20, com );
        for (i=1; i<=localsize; i++)
          tmp[i] = filter ( local[i-1], local[i], local[i+1] );
        /* receive the neighbors' boundary elements into the halo cells
           local[localsize+1] and local[0] */
        if ( me < np-1 ) MPI_Recv ( local+localsize+1, 1, MPI_FLOAT, right_neighbor, 10, com, ... );
        if ( me > 0 )    MPI_Recv ( local, 1, MPI_FLOAT, left_neighbor, 20, com, ... );
        /* recompute the two boundary points with the fresh halo values */
        tmp[1] = filter ( local[0], local[1], local[2] );
        tmp[localsize] = filter ( local[localsize-1], local[localsize], local[localsize+1] );
        localerr = 0.0;
        for (i=1; i<=localsize; i++)
          localerr = fmax ( localerr, fabs ( local[i] - tmp[i] ) );
        MPI_Allreduce ( &localerr, &globalerr, 1, MPI_FLOAT, MPI_MAX, com );
        for (i=1; i<=localsize; i++)
          local[i] = tmp[i];
      }
      ...
    }

Observation

The same characteristic form of parallelism, communication, and synchronization can be reused for all occurrences of the same specific structure of computation ((parallel) algorithmic paradigm, building block, pattern, ...):

- Elementwise operations on arrays
- Reductions
- Scan (prefix operation)
- Divide-and-conquer
- Farming independent tasks
- Pipelining
- ...

Most of these have both sequential and parallel implementations.

Example: 1D smoothing in C + Skeletons

[Figure: four processors P sharing a distributed array of n elements]

    float filter ( float a, float b, float c ) { return wa*a + wb*b + wc*c; }
    float elemError ( float a, float b ) { return fabs ( a - b ); }
    ...
    int main ( int argc, char *argv[] )
    {
      ...
      DistrFloatArray *array = new_DistrFloatArray ( n + 2 );
      DistrFloatArray *tmp   = new_DistrFloatArray ( n + 2 );
      DistrFloatArray *err   = new_DistrFloatArray ( n + 2 );
      ...
      while ( globalerr > 0.1 ) {
        map_with_overlap ( filter, 1, tmp, array+1, n );
        map ( elemError, err, array+1, tmp, n );
        reduce ( fmax, &globalerr, err, n );
        map ( copy, array+1, tmp, n );
      }
      ...
    }
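To make the skeleton version of the smoothing example more concrete, here is a minimal sequential sketch in C of what the three skeleton calls might compute. The names `seq_map_overlap1`, `seq_map1`, `seq_map2`, `seq_reduce`, `maxf` and `smooth`, the plain `float *` arrays (instead of `DistrFloatArray`), and the weight values are assumptions made for illustration; they are not the API of the skeleton library used in the slides. A parallel implementation would keep the same interface and distribute the loops.

```c
#include <assert.h>

/* Assumed filter weights; the slides leave wa, wb, wc unspecified. */
static const float wa = 0.25f, wb = 0.5f, wc = 0.25f;

float filter(float a, float b, float c) { return wa * a + wb * b + wc * c; }
float elemError(float a, float b) { float d = a - b; return d < 0 ? -d : d; }
float maxf(float a, float b) { return a > b ? a : b; }
float copy(float a) { return a; }

/* Map with overlap 1: out[i] = f(in[i-1], in[i], in[i+1]) for i = 0..n-1.
   The caller must make sure in[-1] and in[n] are valid elements. */
void seq_map_overlap1(float (*f)(float, float, float),
                      float *out, const float *in, int n) {
    for (int i = 0; i < n; i++)
        out[i] = f(in[i - 1], in[i], in[i + 1]);
}

/* Unary map: out[i] = f(in[i]). */
void seq_map1(float (*f)(float), float *out, const float *in, int n) {
    for (int i = 0; i < n; i++)
        out[i] = f(in[i]);
}

/* Binary map: out[i] = f(a[i], b[i]). */
void seq_map2(float (*f)(float, float), float *out,
              const float *a, const float *b, int n) {
    for (int i = 0; i < n; i++)
        out[i] = f(a[i], b[i]);
}

/* Reduction with an associative operator op over x[0..n-1], n > 0. */
float seq_reduce(float (*op)(float, float), const float *x, int n) {
    assert(n > 0);
    float acc = x[0];
    for (int i = 1; i < n; i++)
        acc = op(acc, x[i]);
    return acc;
}

/* Smoothing loop built only from the skeletons above.
   array has n+2 elements (two fixed boundary cells); tmp and err have
   at least n elements. Returns the number of sweeps performed. */
int smooth(float *array, float *tmp, float *err, int n, float tol) {
    int sweeps = 0;
    float globalerr;
    do {
        seq_map_overlap1(filter, tmp, array + 1, n);
        seq_map2(elemError, err, array + 1, tmp, n);
        globalerr = seq_reduce(maxf, err, n);
        seq_map1(copy, array + 1, tmp, n);
        sweeps++;
    } while (globalerr > tol);
    return sweeps;
}
```

Calling `smooth(array, tmp, err, n, 0.1f)` repeats the sweep until the largest per-element change is at most 0.1, which mirrors the `while ( globalerr > 0.1 )` loop of the example. The sketch uses a `do ... while` loop because the slides do not show how `globalerr` is initialized.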

Data parallelism

Given:

- One or several data containers $x$ with $n$ elements, e.g. array(s) $x = (x_1, \dots, x_n)$, $z = (z_1, \dots, z_n)$, ...
- An operation $f$ on individual elements of $x$, $z$, ... (e.g. incr, sqrt, mult, ...)

Compute: $y = f(x) = (f(x_1), \dots, f(x_n))$

Parallelizability: each data element defines a task

- Fine-grained parallelism
- Portionable, fits very well on all parallel architectures

Notation with higher-order function:

- y = map ( f, x )
- Variant, map with overlap: $y_i = f(x_{i-k}, \dots, x_{i+k})$, $i = 0, \dots, n-1$

Data-parallel Reduction

Given:

- A data container $x$ with $n$ elements, e.g. array $x = (x_1, \dots, x_n)$
- A binary, associative operation op on individual elements of $x$ (e.g. add, max, bitwise-or, ...)

Compute: $y = \mathrm{OP}_{i=1,\dots,n}\, x_i = x_1 \;\mathrm{op}\; x_2 \;\mathrm{op}\; \dots \;\mathrm{op}\; x_n$

Parallelizability: exploit the associativity of op

Notation with higher-order function:

- y = reduce ( op, x )

Parallel Divide-and-Conquer

(Sequential) divide-and-conquer:

- Divide: decompose the problem instance $P$ into one or several smaller, independent instances of the same problem, $P_1, \dots, P_k$
- For all $i$: if $P_i$ is trivial, solve it directly. Otherwise, solve $P_i$ by recursion.
- Combine the solutions of the $P_i$ into an overall solution for $P$

Parallel divide-and-conquer:

- Recursive calls can be done in parallel.
- Parallelize, if possible, also the divide and combine phases.
- Switch to sequential divide-and-conquer when enough parallel tasks have been created.

Notation with higher-order function:

- solution = DC ( divide, combine, istrivial, solvedirectly, n, P )

Task farming

[Figure: a dispatcher hands the tasks f1, f2, ..., fm to workers and a collector gathers the results; a time chart shows the tasks scheduled on processors P1, P2, P3]

Independent computations $f_1, f_2, \dots, f_m$ could be done in parallel and/or in arbitrary order, e.g.

- independent loop iterations
- independent function calls

Scheduling problem:

- $n$ tasks onto $p$ processors
- static or dynamic
- Load balancing

Notation with higher-order function:

- $(y_1, \dots, y_m) = \mathrm{farm}(f_1, \dots, f_m)(x_1, \dots, x_n)$

Example: Parallel Divide-and-Conquer

Example: parallel QuickSort over a float array x

- Divide: partition the array (elements <= pivot, elements > pivot)
- Combine: trivial, concatenate the sorted sub-arrays

sorted = DC ( partition, concatenate, nIsSmall, qsort, n, x )

Example: Parallel Divide-and-Conquer (2)

Example: parallel sum over an integer array x

- Exploit associativity: $\mathrm{Sum}(x_1, \dots, x_n) = \mathrm{Sum}(x_1, \dots, x_{n/2}) + \mathrm{Sum}(x_{n/2+1}, \dots, x_n)$
- Divide: trivial, split the array x in place
- Combine is just an addition.

y = DC ( split, add, nIsSmall, addFewInSeq, n, x )

Data-parallel reductions are an important special case of DC.
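The parallel-sum example can be sketched in C as a generic divide-and-conquer function that takes the four operations as function pointers. This is only an illustrative sequential version: the `Problem` struct, the function-pointer types, the threshold of 4 elements, and the names `dc` and `dc_sum` are assumptions, and the slide's separate `n` argument is folded into `Problem`. In a parallel implementation the two recursive calls, which are independent, would be started as parallel tasks.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical problem instance: a view onto part of an int array. */
typedef struct { const int *data; size_t n; } Problem;

typedef void (*divide_fn)(Problem p, Problem *left, Problem *right);
typedef long (*combine_fn)(long a, long b);
typedef int  (*istrivial_fn)(Problem p);
typedef long (*solve_fn)(Problem p);

/* Generic (binary) divide-and-conquer skeleton, sequential version. */
long dc(divide_fn divide, combine_fn combine,
        istrivial_fn istrivial, solve_fn solvedirectly, Problem p) {
    if (istrivial(p))
        return solvedirectly(p);
    Problem left, right;
    divide(p, &left, &right);
    /* divide must make progress, otherwise the recursion never ends */
    assert(left.n < p.n && right.n < p.n);
    /* The two calls are independent and could run in parallel. */
    long a = dc(divide, combine, istrivial, solvedirectly, left);
    long b = dc(divide, combine, istrivial, solvedirectly, right);
    return combine(a, b);
}

/* Divide: split the array in place into two halves. */
void split(Problem p, Problem *left, Problem *right) {
    size_t half = p.n / 2;
    left->data = p.data;
    left->n = half;
    right->data = p.data + half;
    right->n = p.n - half;
}

/* Combine: just an addition. */
long add(long a, long b) { return a + b; }

/* Trivial case: few enough elements to add sequentially (assumed threshold). */
int nIsSmall(Problem p) { return p.n <= 4; }

long addFewInSeq(Problem p) {
    long s = 0;
    for (size_t i = 0; i < p.n; i++)
        s += p.data[i];
    return s;
}

/* y = DC ( split, add, nIsSmall, addFewInSeq, n, x ) */
long dc_sum(const int *x, size_t n) {
    Problem p = { x, n };
    return dc(split, add, nIsSmall, addFewInSeq, p);
}
```

Only `split`, `add`, `nIsSmall` and `addFewInSeq` are specific to summation; swapping in other operations (for example a partition step and concatenation for QuickSort) would reuse `dc` unchanged, although a sorting variant would need a result type other than `long`.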
