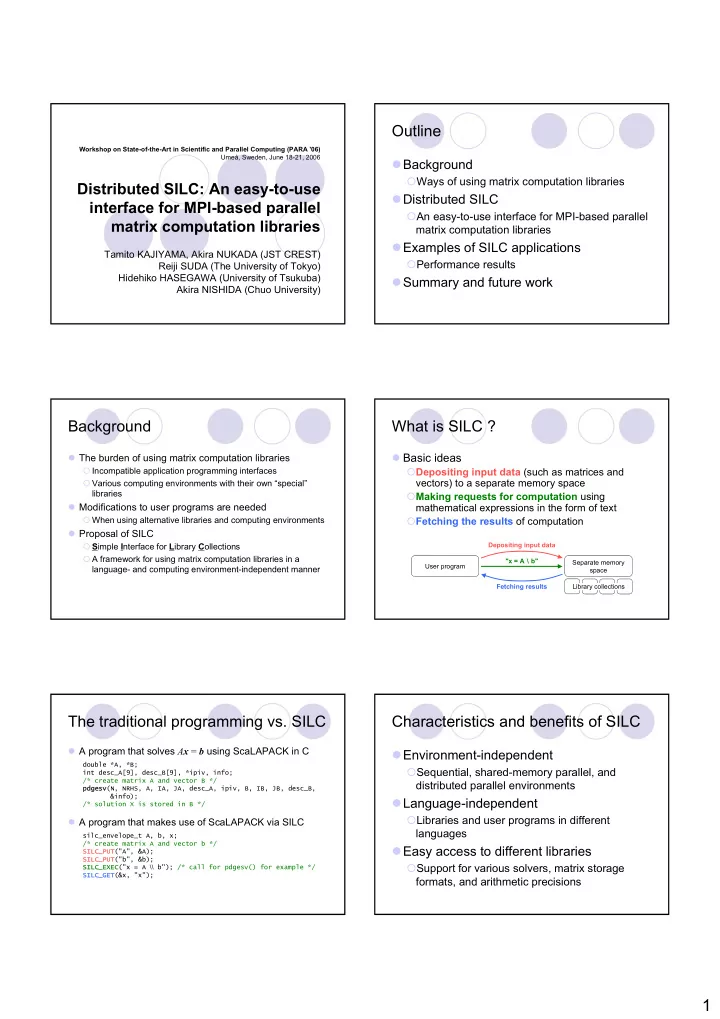

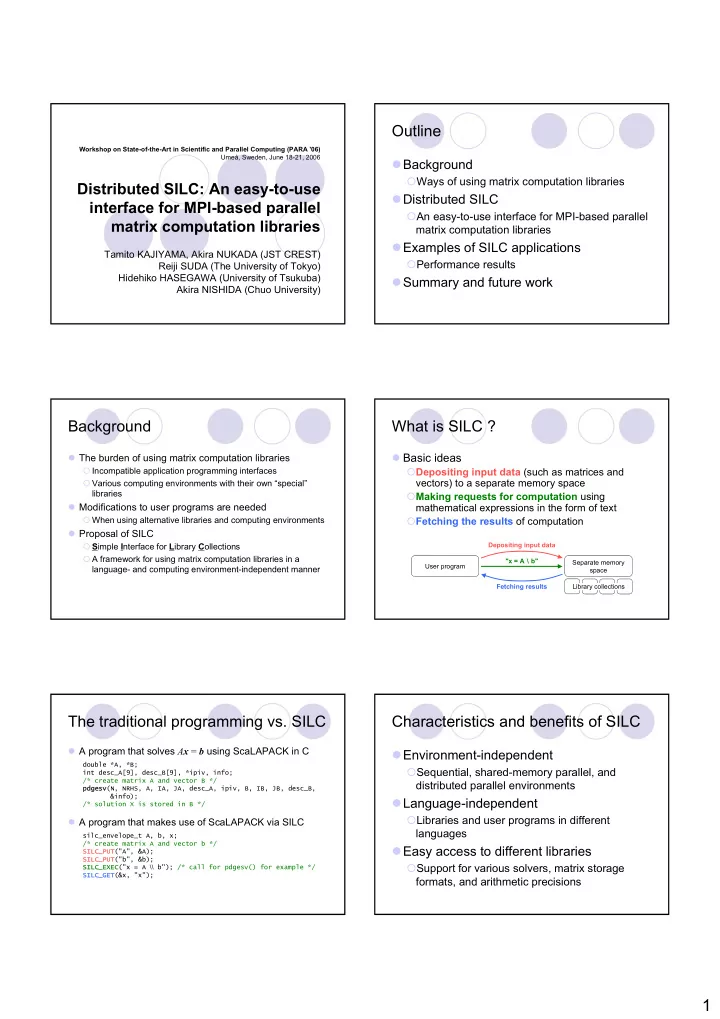

Distributed SILC: An easy-to-use interface for MPI-based parallel matrix computation libraries

Tamito KAJIYAMA, Akira NUKADA (JST CREST); Reiji SUDA (The University of Tokyo); Hidehiko HASEGAWA (University of Tsukuba); Akira NISHIDA (Chuo University)

Workshop on State-of-the-Art in Scientific and Parallel Computing (PARA '06), Umeå, Sweden, June 18-21, 2006

Outline

- Background
  - Ways of using matrix computation libraries
- Distributed SILC
  - An easy-to-use interface for MPI-based parallel matrix computation libraries
- Examples of SILC applications
- Performance results
- Summary and future work

Background

- The burden of using matrix computation libraries
  - Incompatible application programming interfaces
  - Various computing environments with their own "special" libraries
- Modifications to user programs are needed
  - When using alternative libraries and computing environments
- Proposal of SILC
  - Simple Interface for Library Collections
  - A framework for using matrix computation libraries in a language- and computing environment-independent manner

What is SILC?

- Basic ideas
  - Depositing input data (such as matrices and vectors) to a separate memory space
  - Making requests for computation using mathematical expressions in the form of text
  - Fetching the results of computation

Figure: the user program deposits input data into a separate memory space, sends a request such as "x = A \ b" that is carried out by the library collections, and fetches the results.

The traditional programming vs. SILC

A program that solves A x = b using ScaLAPACK in C:

    double *A, *B;
    int desc_A[9], desc_B[9], *ipiv, info;
    /* create matrix A and vector B */
    pdgesv(N, NRHS, A, IA, JA, desc_A, ipiv, B, IB, JB, desc_B, &info);
    /* solution X is stored in B */

A program that makes use of ScaLAPACK via SILC:

    silc_envelope_t A, b, x;
    /* create matrix A and vector b */
    SILC_PUT("A", &A);
    SILC_PUT("b", &b);
    SILC_EXEC("x = A \\ b"); /* call for pdgesv() for example */
    SILC_GET(&x, "x");

Characteristics and benefits of SILC

- Environment-independent
  - Sequential, shared-memory parallel, and distributed parallel environments
- Language-independent
  - Libraries and user programs in different languages
- Easy access to different libraries
  - Support for various solvers, matrix storage formats, and arithmetic precisions
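The deposit, request, and fetch cycle can be mimicked inside a single process to make the idea concrete. The sketch below is a toy stand-in, not SILC itself: every name in it (`toy_put`, `toy_exec`, `toy_get`, `toy_entry`, the size limits) is hypothetical, it keeps data in a static array rather than in a separate server, and it understands only one expression form, `dst = lhs \ rhs`, which it evaluates with dense Gaussian elimination instead of dispatching to a library.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define TOY_MAX_OBJECTS 8   /* capacity of the toy store (assumption) */
#define TOY_MAX_DIM 4       /* largest supported dimension (assumption) */

/* One named dense object, stored row-major. All names here are hypothetical. */
typedef struct {
    char name[16];
    int rows, cols;
    double v[TOY_MAX_DIM * TOY_MAX_DIM];
} toy_entry;

static toy_entry toy_store[TOY_MAX_OBJECTS];
static int toy_count = 0;

static double toy_abs(double v) { return v < 0 ? -v : v; }

static toy_entry *toy_find(const char *name) {
    for (int i = 0; i < toy_count; i++)
        if (strcmp(toy_store[i].name, name) == 0) return &toy_store[i];
    return NULL;
}

/* Deposit: copy the caller's data into the store under a name. */
int toy_put(const char *name, const double *v, int rows, int cols) {
    if (rows < 1 || cols < 1 || rows > TOY_MAX_DIM || cols > TOY_MAX_DIM) return -1;
    if (strlen(name) >= sizeof toy_store[0].name) return -1;
    toy_entry *e = toy_find(name);
    if (e == NULL) {
        if (toy_count == TOY_MAX_OBJECTS) return -1;
        e = &toy_store[toy_count++];
        strcpy(e->name, name);
    }
    e->rows = rows;
    e->cols = cols;
    memcpy(e->v, v, sizeof(double) * (size_t)(rows * cols));
    return 0;
}

/* Fetch: copy a named object back into the caller's buffer. */
int toy_get(double *out, const char *name) {
    const toy_entry *e = toy_find(name);
    if (e == NULL) return -1;
    memcpy(out, e->v, sizeof(double) * (size_t)(e->rows * e->cols));
    return 0;
}

/* Gaussian elimination with partial pivoting; a (n*n) and x (rhs) are overwritten. */
static int toy_solve(int n, double *a, double *x) {
    assert(n >= 1 && n <= TOY_MAX_DIM);
    for (int k = 0; k < n; k++) {
        int p = k;
        for (int i = k + 1; i < n; i++)
            if (toy_abs(a[i * n + k]) > toy_abs(a[p * n + k])) p = i;
        if (a[p * n + k] == 0.0) return -1;          /* singular matrix */
        if (p != k) {
            for (int j = 0; j < n; j++) {
                double t = a[k * n + j]; a[k * n + j] = a[p * n + j]; a[p * n + j] = t;
            }
            double t = x[k]; x[k] = x[p]; x[p] = t;
        }
        for (int i = k + 1; i < n; i++) {
            double m = a[i * n + k] / a[k * n + k];
            for (int j = k; j < n; j++) a[i * n + j] -= m * a[k * n + j];
            x[i] -= m * x[k];
        }
    }
    for (int i = n - 1; i >= 0; i--) {               /* back substitution */
        for (int j = i + 1; j < n; j++) x[i] -= a[i * n + j] * x[j];
        x[i] /= a[i * n + i];
    }
    return 0;
}

/* Request: understands only "dst = lhs \ rhs" with space-separated names. */
int toy_exec(const char *expr) {
    char dst[16], lhs[16], rhs[16];
    if (sscanf(expr, "%15s = %15s \\ %15s", dst, lhs, rhs) != 3) return -1;
    const toy_entry *A = toy_find(lhs), *b = toy_find(rhs);
    if (A == NULL || b == NULL) return -1;
    int n = A->rows;
    if (A->cols != n || b->rows != n || b->cols != 1) return -1;
    double a[TOY_MAX_DIM * TOY_MAX_DIM], x[TOY_MAX_DIM];
    memcpy(a, A->v, sizeof(double) * (size_t)(n * n));
    memcpy(x, b->v, sizeof(double) * (size_t)n);
    if (toy_solve(n, a, x) != 0) return -1;
    return toy_put(dst, x, n, 1);                    /* result stays in the store */
}
```

Because the user code only names data and states the operation as text, the solver behind `toy_exec` could be swapped without touching the caller, which is the property the slides attribute to SILC.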

MPI-based SILC system

- Currently based on a client-server model
  - A SILC server is an MPI-based parallel program
  - Support for both sequential user programs and MPI-based parallel user programs
- Data redistribution mechanism
  - The server keeps data in a distributed manner
  - Support for various data distributions
    - 2D block-cyclic distribution,
    - 1D row-block and column-block distributions, etc.
  - In different matrix storage formats
    - Dense, band, the CRS format, etc.

Data transfer: the sequential case

- SILC_PUT: the sequential user program sends data to the parallel server, which distributes the received data among its processes (distributed data)
- SILC_GET: the parallel server collects the distributed data into the data to be sent and returns it to the sequential user program

Data transfer: the parallel case

- SILC_PUT: the parallel user program sends data to the parallel server, which distributes the received data among its processes (distributed data)
- SILC_GET: the parallel server collects the distributed data into the data to be sent and returns it to the parallel user program

Performance comparisons

- The traditional programming vs. SILC
- Examples of SILC applications
  1. Solution of a dense system with ScaLAPACK
     - MPI-based parallel user programs
  2. Solution of an initial-value problem of a PDE
  3. Cloth simulation
     - Sequential user programs (examples 2 and 3)

Solving A x = b with ScaLAPACK

Traditional:

    pdgesv(N, NRHS, A, IA, JA, desc_A, ipiv, B, IB, JB, desc_B, &info);

SILC:

    SILC_PUT("A", &A);
    SILC_PUT("b", &b);
    SILC_EXEC("x = A \\ b");
    SILC_GET(&x, "x");

Figure: the traditional user program runs on its own; the SILC user program is connected over GbE to SILC in a SILC server.

Tested environments

- For both user programs
  - IBM OpenPower 710 (Power5 1.65 GHz × 4)
- For SILC servers
  - Xeon cluster (Intel Xeon 2.8 GHz × 8)
  - SGI Altix 3700 (Intel Itanium2 1.3 GHz × 16)
- Gigabit Ethernet (1 Gbps)
- Computation in double precision real
- MPI-based parallel user programs and SILC server
- Matrix A in the dense format (2D block-cyclic distribution)
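The 2D block-cyclic distribution used for the dense matrix can be illustrated with the usual index arithmetic. The sketch below assumes 0-based indices and that the first block lives on process row 0 and process column 0. The function and type names (`bc_owner`, `bc_local_index`, `bc_locate`, `bc_location`) are hypothetical and belong to neither SILC nor ScaLAPACK.

```c
#include <assert.h>

/* Process coordinate (0-based) that owns global index g (0-based) when
   blocks of nb consecutive indices are dealt out cyclically over nprocs
   processes, starting from process 0. */
int bc_owner(int g, int nb, int nprocs) {
    assert(g >= 0 && nb > 0 && nprocs > 0);
    return (g / nb) % nprocs;
}

/* Position of global index g inside the owner's local storage. */
int bc_local_index(int g, int nb, int nprocs) {
    assert(g >= 0 && nb > 0 && nprocs > 0);
    /* full rounds over all processes, then the offset inside the block */
    return (g / (nb * nprocs)) * nb + g % nb;
}

/* Where a matrix element ends up on an nprow x npcol process grid. */
typedef struct {
    int prow, pcol;   /* owning process coordinates */
    int lrow, lcol;   /* local row and column on that process */
} bc_location;

/* Apply the 1D rule independently to rows (block size mb) and columns
   (block size nb) to locate element (i, j). */
bc_location bc_locate(int i, int j, int mb, int nb, int nprow, int npcol) {
    bc_location loc;
    loc.prow = bc_owner(i, mb, nprow);
    loc.pcol = bc_owner(j, nb, npcol);
    loc.lrow = bc_local_index(i, mb, nprow);
    loc.lcol = bc_local_index(j, nb, npcol);
    return loc;
}
```

For example, with 2 × 2 blocks on a 2 × 2 process grid, element (5, 2) belongs to process (0, 1) and is stored there at local position (3, 0).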

Solving A x = b with ScaLAPACK (results)

- Traditional: elapsed time in pdgesv
- SILC: elapsed time from connection until SILC_GET
- Speedups (N = 4,096): 4.88 (Xeon cluster), 6.46 (Altix)

Figure: execution time in seconds (log scale, 1e-01 to 1e+03) against dimension N (512, 1,024, 2,048, 4,096) for Traditional, SILC (Xeon cluster, 8 PEs), and SILC (Altix, 16 PEs). The traditional user program runs on the OpenPower; the SILC user program on the OpenPower is connected over GbE to SILC in a SILC server (Xeon cluster, Altix).

An initial-value problem of a PDE

- Solve the 1D time-dependent diffusion equation

  $$\frac{\partial \phi}{\partial t} = \frac{\partial^2 \phi}{\partial x^2} \quad (t \ge 0,\ 0 \le x \le \pi)$$

  under the initial condition $\phi = \sin x$ ($t = 0$, $0 \le x \le \pi$) and boundary conditions $\phi = 0$ ($t > 0$, $x = 0$) and $\phi = 0$ ($t > 0$, $x = \pi$)
- By the Crank-Nicolson method
  - Solution of a sparse linear system A x = b for each time step using the CG method in Lis (an iterative solvers library)
  - Matrix A is an N × N sparse matrix with 3N − 2 non-zero elements, stored in the CRS format

An initial-value problem of a PDE (cont'd)

Traditional:

    Prepare A and x
    For each time step {
      Construct b from x
      Solve A x = b with lis_solve
    }

SILC:

    Prepare A and x
    SILC_PUT("A", &A);
    For each time step {
      Construct b from x
      SILC_PUT("b", &b);
      SILC_EXEC("x = A \\ b");
      SILC_GET(&x, "x");
    }

Figure: the traditional user program runs on its own; the SILC user program is connected over GbE to SILC in a SILC server.

Tested environments

- For both user programs
  - IBM ThinkPad T42 (Intel Pentium M 1.7 GHz)
- For SILC servers
  - Xeon cluster (Intel Xeon 2.8 GHz × 8)
  - SGI Altix 3700 (Intel Itanium2 1.3 GHz × 16)
- Gigabit Ethernet (1 Gbps)
- Computation in double precision real

An initial-value problem of a PDE (results)

- Execution time (in seconds) of the first 20 time steps
- Speedups (N = 80,000): 3.38 (Xeon cluster), 9.12 (Altix)

Figure: execution time in seconds (log scale, 1e+00 to 1e+04) against dimension N (10,000, 20,000, 40,000, 80,000) for Traditional (1 PE), SILC (Xeon cluster, 8 PEs), and SILC (Altix, 16 PEs). The traditional user program runs on the T42; the SILC user program on the T42 is connected over GbE to SILC in a SILC server (Xeon cluster, Altix).

Cloth simulation

- A simulator of cloth based on the mass-spring model
  - An implicit integrator by Baraff & Witkin (1998)
- Code written in Python
  - SciPy for solving a sparse linear system A Δv = b
  - OpenGL for rendering the results of simulation
  - GUI for controlling the simulation interactively
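The count of 3N − 2 non-zero elements quoted for the PDE example is what a tridiagonal matrix gives: N diagonal entries plus N − 1 entries on each side. The sketch below builds such a matrix in the CRS format and the matching right-hand side for one Crank-Nicolson step. The slides do not state the discretization, so the coefficients are an assumption: N interior grid points with zero boundary values and r = Δt / (2 Δx²), which gives A = tridiag(−r, 1 + 2r, −r). All type and function names (`crs_matrix`, `cn_build_matrix`, `cn_build_rhs`, `crs_matvec`, `crs_free`) are hypothetical and are not part of Lis or SILC.

```c
#include <assert.h>
#include <stdlib.h>

/* Compressed row storage: row i occupies entries ptr[i] .. ptr[i+1]-1. */
typedef struct {
    int n;          /* dimension */
    int nnz;        /* number of stored non-zero elements */
    int *ptr;       /* row pointers, length n + 1 */
    int *index;     /* column indices, length nnz */
    double *value;  /* non-zero values, length nnz */
} crs_matrix;

/* Build A = tridiag(-r, 1 + 2r, -r) of dimension n (assumed discretization).
   Returns 0 on success and -1 if memory allocation fails. */
int cn_build_matrix(crs_matrix *A, int n, double r) {
    assert(n >= 1);
    int nnz = 3 * n - 2;
    A->n = n;
    A->ptr = malloc(sizeof(int) * (size_t)(n + 1));
    A->index = malloc(sizeof(int) * (size_t)nnz);
    A->value = malloc(sizeof(double) * (size_t)nnz);
    if (A->ptr == NULL || A->index == NULL || A->value == NULL) {
        free(A->ptr); free(A->index); free(A->value);
        return -1;
    }
    int k = 0;
    for (int i = 0; i < n; i++) {
        A->ptr[i] = k;
        if (i > 0)     { A->index[k] = i - 1; A->value[k++] = -r; }
        A->index[k] = i; A->value[k++] = 1.0 + 2.0 * r;
        if (i < n - 1) { A->index[k] = i + 1; A->value[k++] = -r; }
    }
    A->ptr[n] = k;
    A->nnz = k;     /* equals 3n - 2 */
    return 0;
}

void crs_free(crs_matrix *A) {
    free(A->ptr); free(A->index); free(A->value);
}

/* y = A x for a CRS matrix. */
void crs_matvec(const crs_matrix *A, const double *x, double *y) {
    for (int i = 0; i < A->n; i++) {
        double s = 0.0;
        for (int k = A->ptr[i]; k < A->ptr[i + 1]; k++)
            s += A->value[k] * x[A->index[k]];
        y[i] = s;
    }
}

/* Right-hand side of one step: b = tridiag(r, 1 - 2r, r) x, with the
   values outside the interior taken as zero (the boundary conditions). */
void cn_build_rhs(int n, double r, const double *x, double *b) {
    for (int i = 0; i < n; i++) {
        double left = (i > 0) ? x[i - 1] : 0.0;
        double right = (i < n - 1) ? x[i + 1] : 0.0;
        b[i] = r * left + (1.0 - 2.0 * r) * x[i] + r * right;
    }
}
```

Since A does not change between time steps in this scheme, it only needs to be built once, which matches the SILC loop in which "A" is deposited before the loop and only "b" is sent at each step.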

Cloth simulation (cont'd)

Traditional:

    For each time step {
      Compute force f0
      Construct A and b
      Solve A Δv = b with SciPy
      Update velocity v
      Update position x
    }

SILC:

    For each time step {
      Compute force f0
      Construct A and b
      SILC_PUT("A", &A);
      SILC_PUT("b", &b);
      SILC_EXEC("d = A \\ b");
      SILC_GET(&d, "d"); /* Δv */
      Update velocity v
      Update position x
    }

Figure: the traditional user program runs on its own; the SILC user program is connected over GbE to SILC in a SILC server.

Cloth simulation (results)

- Execution time of the first 100 time steps
- In the case of 8² particles (dimension 192)
- Matrix A consists of 5,652 non-zero elements, stored in the CRS format

| Program | Environment | Time (sec.) | Speedup |
|---|---|---|---|
| Traditional | T42 | 121.74 | 1.00 |
| SILC | T42 / Xeon cluster (8 PEs) | 39.51 | 3.08 |
| SILC | T42 / Altix (16 PEs) | 23.71 | 5.14 |

Summary and future work

- Distributed SILC: An easy-to-use interface for MPI-based parallel matrix computation libraries
  - Good speedups even at the cost of data transfer
  - Support for sequential and parallel user programs
  - Easy access to alternative libraries and computing environments (no need to modify user programs)
- Future work
  - Ready-made modules for various MPI-based parallel matrix computation libraries
  - Performance evaluation of the system
