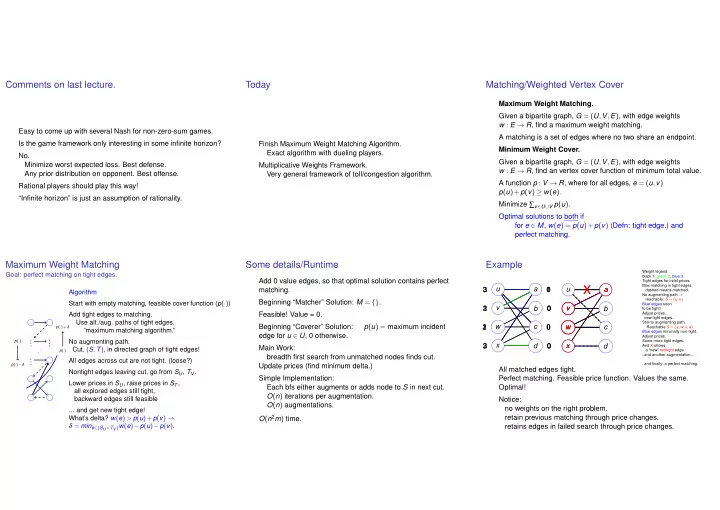

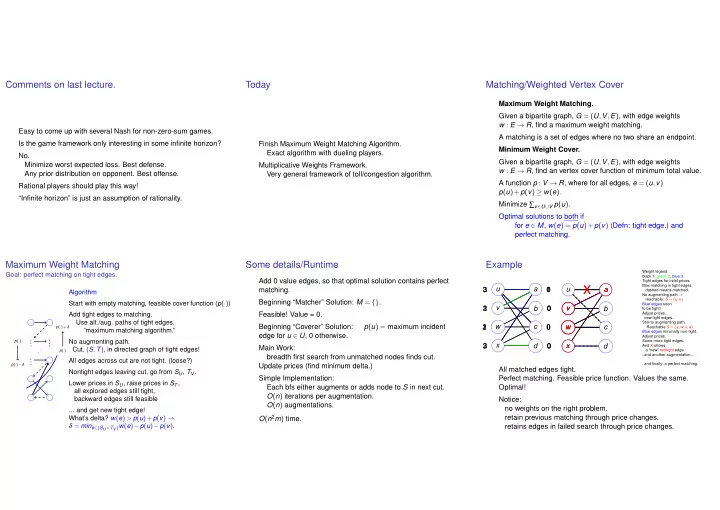

Comments on last lecture. It is easy to come up with several Nash equilibria for non-zero-sum games. Is the game framework only interesting in some infinite horizon? No. Minimize worst expected loss. Best defense. Any prior distribution on opponent. Best offense. Rational players should play this way! "Infinite horizon" is just an assumption of rationality.

Today. Finish Maximum Weight Matching Algorithm: exact algorithm with dueling players. Multiplicative Weights Framework: very general framework of toll/congestion algorithm.

Matching/Weighted Vertex Cover. Maximum Weight Matching: given a bipartite graph $G = (U, V, E)$ with edge weights $w : E \to \mathbb{R}$, find a maximum weight matching. A matching is a set of edges where no two share an endpoint.

Minimum Weight Cover: given a bipartite graph $G = (U, V, E)$ with edge weights $w : E \to \mathbb{R}$, find a vertex cover function of minimum total value. A vertex cover function is a function $p : U \cup V \to \mathbb{R}$ where for all edges $e = (u, v)$, $p(u) + p(v) \ge w(e)$. Minimize $\sum_{v \in U \cup V} p(v)$.

Optimal solutions to both if for $e \in M$, $w(e) = p(u) + p(v)$ (Defn: tight edge) and $M$ is a perfect matching.

Maximum Weight Matching. Goal: perfect matching on tight edges. Add 0 value edges, so that the optimal solution contains a perfect matching.

Algorithm. Start with empty matching, feasible cover function ($p(\cdot)$). Add tight edges to matching. Use alt./aug. paths of tight edges ("maximum matching algorithm"). No augmenting path: adjust prices... new tight edges.

Beginning "Matcher" Solution: $M = \{\}$. Feasible! Value = 0.

Beginning "Coverer" Solution: $p(u)$ = maximum incident edge weight for $u \in U$, 0 otherwise.

Main Work: breadth first search from unmatched nodes finds cut. Update prices (find minimum delta).

Simple Implementation: each bfs either augments or adds a node to $S$ in the next cut. $O(n)$ iterations per augmentation. $O(n)$ augmentations. $O(n^2 m)$ time.

Example. Weight legend: black 1, green 2, blue 3. Dashed means matched.

(Figure: bipartite graph on vertices u, v, w, x and a, b, c, d, redrawn at each step with the current price next to each vertex.)

Tight edges for initial prices. Max matching in tight edges. No augmenting path → reachable: $S = \{u, v\}$. Blue edges soon to be tight! Adjust prices... new tight edges. Still no augmenting path. Reachable $S = \{v, w, x, a\}$. Blue edges minimally non-tight. Adjust prices... Some more tight edges. And X shows a "new" nontight edge. ...and another augmentation... and finally: a perfect matching. All matched edges tight. Perfect matching. Feasible price function. Values the same. Optimal!

Notice: no weights on the right problem. The algorithm retains the previous matching through price changes, and retains edges in a failed search through price changes.

Some details/Runtime. Cut $(S, T)$ in directed graph of tight edges! All edges across cut are not tight (loose?). Nontight edges leaving cut go from $S_U$ to $T_V$. Lower prices in $S_U$ by $\delta$, raise prices in $S_V$ by $\delta$: all explored edges still tight, backward edges still feasible ... and get new tight edge!

(Figure: the cut, with prices $p(\cdot) - \delta$ on $S_U$, $p(\cdot) + \delta$ on $S_V$, and $p(\cdot)$ unchanged on $T$.)

What's delta? A nontight edge has $p(u) + p(v) > w(e)$, so $\delta = \min_{e = (u, v) \in S_U \times T_V} \big( p(u) + p(v) - w(e) \big)$.
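The price-adjustment loop above can be sketched in Python. This is a minimal illustration rather than the lecture's own code: it assumes a square matrix of non-negative integer weights (0 standing in for the added 0 value edges), so that tightness can be tested exactly, and it reruns the breadth first search from scratch after every price change. The names `max_weight_matching`, `p_u`, `p_v`, `match_u` and `match_v` are made up for this sketch.

```python
from collections import deque

def max_weight_matching(w):
    """w[u][v]: non-negative integer weight of edge (u, v); 0 means 'no edge'."""
    n = len(w)
    p_u = [max(row) for row in w]   # "Coverer" start: max incident edge weight
    p_v = [0] * n                   # ... and 0 on the other side
    match_u = [-1] * n              # "Matcher" start: empty matching
    match_v = [-1] * n

    while -1 in match_u:
        # BFS from unmatched left nodes: tight edges left to right,
        # matched edges right to left.
        roots = [u for u in range(n) if match_u[u] == -1]
        seen_u = set(roots)         # S_U
        parent = {}                 # right node in S_V -> left node that reached it
        queue = deque(roots)
        end = -1
        while queue and end == -1:
            u = queue.popleft()
            for v in range(n):
                if v in parent or p_u[u] + p_v[v] != w[u][v]:
                    continue        # already reached, or edge not tight
                parent[v] = u
                if match_v[v] == -1:
                    end = v         # unmatched right node: augmenting path
                    break
                seen_u.add(match_v[v])
                queue.append(match_v[v])

        if end != -1:
            # Flip matched/unmatched edges along the path back to a root.
            v = end
            while v != -1:
                u = parent[v]
                previous = match_u[u]
                match_u[u], match_v[v] = v, u
                v = previous
        else:
            # No augmenting path: minimum slack over edges leaving the cut.
            delta = min(p_u[u] + p_v[v] - w[u][v]
                        for u in seen_u for v in range(n) if v not in parent)
            for u in seen_u:
                p_u[u] -= delta     # lower prices in S_U
            for v in parent:
                p_v[v] += delta     # raise prices in S_V
    return match_u, p_u, p_v
```

For `w = [[3, 1], [3, 0]]` the sketch makes one price change with `delta = 2` and returns the matching `[1, 0]` of weight 4 together with prices summing to 4, so the matching and the cover certify each other.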

The multiplicative weights framework.

Experts framework. $n$ experts. Every day, each offers a prediction: "Rain" or "Shine." Whose advice do you follow? "The one who is correct most often." Sort of. How well do you do?

Infallible expert. One of the experts is infallible! Your strategy? Choose any expert that has not made a mistake! How long to find the perfect expert? Maybe... never! Never see a mistake. Better model? How many mistakes could you make? Mistake Bound. (A) 1 (B) 2 (C) $\log n$ (D) $n - 1$. Adversary designs setup to watch who you choose, and make that expert make a mistake. $n - 1$!

Concept Alert. Note. Adversary: makes you want to look bad. "You could have done so well"... but you didn't! ha..ha! Analysis of Algorithms: do as well as possible!

Back to mistake bound. Infallible Experts. Alg: Choose one of the perfect experts. Mistake Bound: $n - 1$. Lower bound: adversary argument. Upper bound: every mistake finds a fallible expert. Better Algorithm? Making a decision, not trying to find the expert! Algorithm: Go with the majority of previously correct experts. What you would do anyway!

Alg 2: find majority of the perfect. How many mistakes could you make? (A) 1 (B) 2 (C) $\log n$ (D) $n - 1$. At most $\log n$! When the alg makes a mistake, the number of "perfect" experts drops by a factor of two. Initially $n$ perfect experts. Mistake → $\le n/2$ perfect experts. Mistake → $\le n/4$ perfect experts. ... Mistake → $\le 1$ perfect expert. Since $\ge 1$ perfect expert remains → at most $\log n$ mistakes!
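The majority rule for infallible experts can be sketched as follows. This is an illustrative sketch under stated assumptions: predictions and outcomes are encoded as 0/1, at least one expert is never wrong, and ties go to 1. The function name `halving` and the tie-breaking choice are not from the slides.

```python
def halving(predictions, outcomes):
    """predictions[t][i]: 0/1 prediction of expert i on day t; outcomes[t]: 0/1 truth.

    Returns (mistakes made by the algorithm, experts never seen to be wrong).
    """
    n = len(predictions[0])
    alive = set(range(n))           # experts that have not made a mistake yet
    mistakes = 0
    for preds, truth in zip(predictions, outcomes):
        ones = sum(preds[i] for i in alive)
        guess = 1 if 2 * ones >= len(alive) else 0   # majority of alive experts
        if guess != truth:
            mistakes += 1           # at least half of the alive experts were wrong
        alive = {i for i in alive if preds[i] == truth}
    return mistakes, alive
```

Each mistake removes at least half of the surviving experts, which is where the $\log n$ bound comes from.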

Imperfect Experts. Goal? Do as well as the best expert! Algorithm. Suggestions? Go with majority? Penalize inaccurate experts? Best expert is penalized the least.

1. Initially: $w_i = 1$.
2. Predict with weighted majority of experts.
3. $w_i \to w_i / 2$ if wrong.

Analysis: weighted majority. Goal: best expert makes $m$ mistakes. Potential function: $\sum_i w_i$. Initially $n$. For the best expert, $b$, $w_b \ge \frac{1}{2^m}$. Each mistake: total weight of incorrect experts reduced by a factor of $-1$? $-2$? $\frac{1}{2}$? Each incorrect expert's weight is multiplied by $\frac{1}{2}$! Total weight decreases by a factor of $\frac{1}{2}$? A factor of $\frac{3}{4}$? Mistake → $\ge$ half of the weight is with incorrect experts. Mistake → potential function decreases by a factor of $\frac{3}{4}$. We have

$$\frac{1}{2^m} \le \sum_i w_i \le \left(\frac{3}{4}\right)^M n,$$

where $M$ is the number of algorithm mistakes.

Analysis: continued. $\frac{1}{2^m} \le \sum_i w_i \le \left(\frac{3}{4}\right)^M n$, with $m$ the best expert's mistakes and $M$ the algorithm's mistakes. Take log of both sides: $-m \le -M \log(4/3) + \log n$. Solve for $M$: $M \le (m + \log n) / \log(4/3) \le 2.4\,(m + \log n)$.

Multiply by $1 - \epsilon$ for incorrect experts... $(1 - \epsilon)^m \le \left(1 - \frac{\epsilon}{2}\right)^M n$. Massage... $M \le 2(1 + \epsilon)\, m + \frac{2 \ln n}{\epsilon}$. Approaches a factor of two of best expert performance!

Best Analysis? Two experts: A, B. Bad example? Which is worse? (A) A right on even, B right on odd. (B) A right first half of days, B right second. Best expert performance: $T/2$ mistakes. Pattern (A): $T - 1$ mistakes. Factor of (almost) two worse!

Randomization!!!! Better approach? Use? Randomization! That is, choose expert $i$ with prob $\propto w_i$. Bad example: A, B, A, B, A... After a bit, A and B make nearly the same number of mistakes. Choose each with approximately the same probability. Make a mistake around $1/2$ of the time. Best expert makes $T/2$ mistakes. Roughly optimal!

Randomized analysis. Some formulas: for $\epsilon \le 1$, $x \in [0, 1]$,

$$(1 + \epsilon)^x \le 1 + \epsilon x, \qquad (1 - \epsilon)^x \le 1 - \epsilon x.$$

For $\epsilon \in [0, \frac{1}{2}]$,

$$-\epsilon - \epsilon^2 \le \ln(1 - \epsilon) \le -\epsilon, \qquad \epsilon - \epsilon^2 \le \ln(1 + \epsilon) \le \epsilon.$$

Proof Idea: $\ln(1 + x) = x - \frac{x^2}{2} + \frac{x^3}{3} - \cdots$
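The deterministic weighted majority rule (halve the weight of each wrong expert, or more generally multiply it by $1 - \epsilon$) can be sketched as below. The 0/1 encoding, the tie-breaking toward 1, and the names `weighted_majority` and `mistake_bound` are assumptions made for illustration.

```python
import math

def weighted_majority(predictions, outcomes, eps=0.5):
    """Deterministic weighted majority; eps=0.5 halves the weight of wrong experts."""
    n = len(predictions[0])
    w = [1.0] * n
    mistakes = 0
    for preds, truth in zip(predictions, outcomes):
        weight_one = sum(w[i] for i in range(n) if preds[i] == 1)
        guess = 1 if 2 * weight_one >= sum(w) else 0     # weighted majority vote
        if guess != truth:
            mistakes += 1
        # Penalize every expert that was wrong today.
        w = [w[i] * (1 - eps) if preds[i] != truth else w[i] for i in range(n)]
    return mistakes, w

def mistake_bound(m, n):
    """Bound (m + log n) / log(4/3) for the halving variant (eps = 1/2)."""
    return (m + math.log2(n)) / math.log2(4 / 3)
```

On the two-expert alternating pattern over 10 days, this tie-breaking happens to give 5 mistakes, matching the best expert; an adversarial tie-breaking rule is what produces the factor of (almost) two from the bad example.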

Randomized algorithm. Losses in $[0, 1]$. Expert $i$ loses $\ell_i^t \in [0, 1]$ in round $t$.

1. Initially $w_i = 1$ for expert $i$.
2. Choose expert $i$ with prob $\frac{w_i}{W}$, $W = \sum_i w_i$.
3. $w_i \leftarrow w_i (1 - \epsilon)^{\ell_i^t}$.

$W(t)$ is the sum of $w_i$ at time $t$. $W(0) = n$. Best expert, $b$, loses $L^*$ total → $W(T) \ge w_b \ge (1 - \epsilon)^{L^*}$. $L_t = \sum_i \frac{w_i \ell_i^t}{W}$ is the expected loss of the alg. in time $t$.

Claim: $W(t + 1) \le W(t)(1 - \epsilon L_t)$. Loss → weight loss.

Proof:

$$W(t + 1) \le \sum_i (1 - \epsilon \ell_i^t)\, w_i = \sum_i w_i - \epsilon \sum_i w_i \ell_i^t = \sum_i w_i \left(1 - \epsilon\, \frac{\sum_i w_i \ell_i^t}{\sum_i w_i}\right) = W(t)(1 - \epsilon L_t).$$

Analysis. $(1 - \epsilon)^{L^*} \le W(T) \le n \prod_t (1 - \epsilon L_t)$. Take logs: $L^* \ln(1 - \epsilon) \le \ln n + \sum_t \ln(1 - \epsilon L_t)$. Use $-\epsilon - \epsilon^2 \le \ln(1 - \epsilon) \le -\epsilon$: $-L^* (\epsilon + \epsilon^2) \le \ln n - \epsilon \sum_t L_t$. And

$$\sum_t L_t \le (1 + \epsilon) L^* + \frac{\ln n}{\epsilon}.$$

$\sum_t L_t$ is the total expected loss of the algorithm. Within $(1 + \epsilon)$-ish of the best expert! No factor of 2 loss!

Gains. Why so negative? Each day, each expert gives a gain in $[0, 1]$. Multiplicative weights with $(1 + \epsilon)^{g_i^t}$. $G \ge (1 - \epsilon) G^* - \frac{\log n}{\epsilon}$, where $G^*$ is the payoff of the best expert.

Scaling: not $[0, 1]$, say $[0, \rho]$. $L \le (1 + \epsilon) L^* + \frac{\rho \log n}{\epsilon}$.

Summary: multiplicative weights. Framework: $n$ experts, each loses a different amount every day. Perfect Expert: $\log n$ mistakes. Imperfect Expert: best makes $m$ mistakes. Deterministic Strategy: $2(1 + \epsilon)\, m + \frac{2 \ln n}{\epsilon}$. Real numbered losses: best loses $L^*$ total. Randomized Strategy: $(1 + \epsilon) L^* + \frac{\log n}{\epsilon}$. Strategy: choose proportional to weights, multiply weight by $(1 - \epsilon)^{\text{loss}}$. Multiplicative weights framework! Applications next!
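The randomized strategy and its guarantee can be checked numerically with a short sketch. Instead of sampling an expert each round, this version accumulates the expected loss $L_t$ directly, which keeps the run deterministic. The names `multiplicative_weights` and `regret_bound` and the list-of-lists loss format are assumptions for illustration.

```python
import math

def multiplicative_weights(losses, eps=0.1):
    """losses[t][i] in [0, 1]: loss of expert i in round t.

    Returns (total expected loss of the randomized algorithm, final weights).
    """
    n = len(losses[0])
    w = [1.0] * n
    total_expected_loss = 0.0
    for round_losses in losses:
        W = sum(w)
        # L_t: expected loss when expert i is chosen with probability w_i / W.
        total_expected_loss += sum(wi * li for wi, li in zip(w, round_losses)) / W
        # w_i <- w_i * (1 - eps)^(loss of expert i this round)
        w = [wi * (1 - eps) ** li for wi, li in zip(w, round_losses)]
    return total_expected_loss, w

def regret_bound(best_loss, n, eps):
    """(1 + eps) * L* + ln(n) / eps, the guarantee derived for eps <= 1/2."""
    return (1 + eps) * best_loss + math.log(n) / eps
```

On the alternating two-expert example, each expert loses $T/2$ in total and the expected loss of the sketch stays under `regret_bound(T / 2, 2, eps)`, with no factor of two.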
