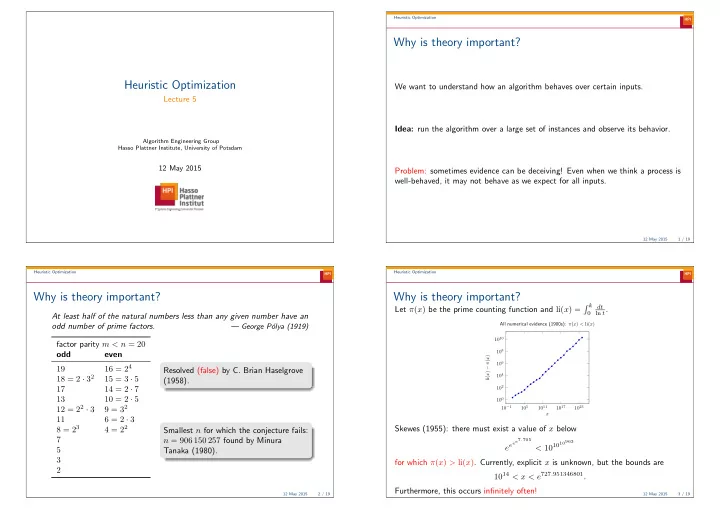

Heuristic Optimization Why is theory important? Heuristic Optimization We want to understand how an algorithm behaves over certain inputs. Lecture 5 Idea: run the algorithm over a large set of instances and observe its behavior. Algorithm Engineering Group Hasso Plattner Institute, University of Potsdam 12 May 2015 Problem: sometimes evidence can be deceiving! Even when we think a process is well-behaved, it may not behave as we expect for all inputs. 12 May 2015 1 / 19 Heuristic Optimization Heuristic Optimization Why is theory important? Why is theory important? � k dt Let π ( x ) be the prime counting function and li( x ) = ln t . 0 At least half of the natural numbers less than any given number have an All numerical evidence (1900s): π ( x ) < li( x ) odd number of prime factors. — George P´ olya (1919) 10 10 factor parity m < n = 20 10 8 odd even li( x ) − π ( x ) 10 6 16 = 2 4 19 Resolved (false) by C. Brian Haselgrove 10 4 18 = 2 · 3 2 15 = 3 · 5 (1958). 17 14 = 2 · 7 10 2 13 10 = 2 · 5 10 0 12 = 2 2 · 3 9 = 3 2 10 − 1 10 5 10 11 10 17 10 23 x 11 6 = 2 · 3 8 = 2 3 4 = 2 2 Skewes (1955): there must exist a value of x below Smallest n for which the conjecture fails: 7 n = 906 150 257 found by Minura e e ee 7 . 705 < 10 10 10963 5 Tanaka (1980). 3 for which π ( x ) > li( x ) . Currently, explicit x is unknown, but the bounds are 2 10 14 < x < e 727 . 951346801 . Furthermore, this occurs infinitely often! 12 May 2015 2 / 19 12 May 2015 3 / 19

Heuristic Optimization Heuristic Optimization Why is theory important? Design and analysis of algorithms 1 , 000 O ( c n ) 800 O ( n c ) runtime 600 400 Correctness Complexity 200 “does the algorithm always “how many computational output the correct solution?” resources are required?” 20 40 60 80 100 instance size We want to make rigorous, indisputable arguments about the behavior of algorithms. We want to understand how the behavior generalizes to any problem size. 12 May 2015 4 / 19 12 May 2015 5 / 19 Heuristic Optimization Heuristic Optimization Design and analysis of algorithms Convergence First question: does the algorithm even find the solution? Randomized search heuristics Definition. • Random local search Let f : S → R for a finite set S . Let S ⊇ S ⋆ := { x ∈ S : f ( x ) is optimal } . We say • Metropolis algorithm, simulated annealing an algorithm converges if it finds an element of S ⋆ with probability 1 and holds it • Evolutionary algorithms, genetic algorithms forever after. • Ant colony optimization Two conditions for convergence (Rudolph, 1998) General-purpose: can be applied to any optimization problem 1. There is a positive probability to reach any point in the search space from any other point 2. The best solution is never lost (elitism) Challenges: • Unlike classical algorithms, they are not designed with their analysis in mind Does the (1+1) EA converge on every function f : { 0 , 1 } n → R ? • Behavior depends on a random number generator Does RLS converge on every function f : { 0 , 1 } n → R ? Can you think of how to modify RLS so that it converges? 12 May 2015 6 / 19 12 May 2015 7 / 19

Heuristic Optimization Heuristic Optimization Runtime analysis Runtime analysis Randomized search heuristics In most cases, randomized search heuristics visit the global optimum in finite time • time to evaluate fitness function evaluation is much higher than the rest (or can be easily modified to do so) • do not perform the same operations even if the input is the same • do not output the same result if run twice A far more important question: how long does it take? Given a function f : S → R , the runtime of some RSH A applied to f is a random variable T f that counts the number of calls A makes to f until an optimal To characterize this unambiguously: count the number of “primitive steps” until a solution is first generated. solution is visited for the first time (typically a function growing with the input size) We are interested in • Estimating E ( T f ) , the expected runtime of A on f We typically use asymptotic notation to classify the growth of such functions. • Estimating Pr( T f ≤ t ) , the success probability of A after t steps on f 12 May 2015 8 / 19 12 May 2015 9 / 19 Heuristic Optimization Heuristic Optimization Runtime analysis Runtime analysis Define the random variable X t for t ∈ N 0 as RandomSearch � Choose x uniformly at random from S ; if x ( t ) = x ⋆ , 1 while stopping criterion not met do X t = 0 otherwise; Choose y uniformly at random from S ; if f ( y ) ≥ f ( x ) then x ← y ; end So X t has a Bernoulli distribution with parameter p = 1 / | S | (see Lecture 3). We already have the tools to analyze this! Let T be the smallest t for which X t = 1 . Suppose w.l.o.g., there is a unique maximum solution x ⋆ ∈ S (if there are more, it Then T is a geometrically distributed random variable (see Lecture 3). can only be faster). Consider a run of the algorithm ( x (0) , x (1) , . . . ) where x ( t ) is the solution Expected runtime: E ( T ) = 1 /p = | S | generated in the t -th iteration. 12 May 2015 10 / 19 12 May 2015 11 / 19

Heuristic Optimization Heuristic Optimization Runtime analysis Runtime analysis Let’s consider more interesting cases. . . Success probability: Pr( T ≤ k ) = 1 − (1 − p ) k Recall from Project 1: For example, (1+1) EA Pr( T ≤ | S | ) = 1 − (1 − 1 / | S | ) | S | ≥ 1 − 1 /e ≈ 0 . 6321 Choose x uniformly at random from { 0 , 1 } n ; while stopping criterion not met do Constant chance that it takes | S | steps to find the solution. y ← x ; Let S = { 0 , 1 } n . Let’s bound the success probability before 2 ǫn for some constant foreach i ∈ { 1 , . . . , n } do 0 < ǫ < 1 . With probability 1 /n , y i ← (1 − y i ) ; Pr( T ≤ 2 ǫn ) = 1 − (1 − 2 − n ) 2 ǫn ≤ 1 − (1 − 2 − n 2 ǫn ) end if f ( y ) ≥ f ( x ) then x ← y ; � �� � end = 2 − n (1 − ǫ ) = 2 − Θ( n ) In each iterations, how many bits flip in expectation? see HW 2, Exercise 2a What is the probability exactly one bit flips? So the probability that random search is successful before 2 Θ( n ) steps is vanishing What is the probability exactly two bits flip? quickly (faster than every polynomial) as n grows. What is the probability that no bits flip? 12 May 2015 12 / 19 12 May 2015 13 / 19 Heuristic Optimization Heuristic Optimization Runtime Analysis Runtime Analysis In order to reach the global optimum in the next step the algorithm has to mutate the k bits and leave the n − k bits alone. Theorem (Droste et al., 2002) The probability to create the global optimum in the next step is The expected runtime of the (1+1) EA for an arbitrary function f : { 0 , 1 } n → R is O ( n n ) . � 1 � 1 � k � � n − k � n 1 − 1 = n − n . ≥ n n n Proof. Without loss of generality, suppose x ⋆ is the unique optimum and x is the current solution. Assuming the process has not already generated the optimal solution, in expectation we wait O ( n n ) steps until this happens. Let k = |{ i : x i � = x ⋆ i }| . Note: we are simply overestimating the time to find the optimal for any arbitrary pseudo-Boolean function. Each bit flips (resp., does not flip) with probability 1 /n (resp., with probability Note: The upper bound is worse than for RandomSearch . In fact, there are 1 − 1 /n ). functions where RandomSearch is guaranteed to perform better than the (1+1) EA. 12 May 2015 14 / 19 12 May 2015 15 / 19

Heuristic Optimization Heuristic Optimization Initialization Initialization (Tail Inequalities) Recall from Project 1: OneMax : { 0 , 1 } n → R , x �→ | x | ; How likely is the initial solution no worse than (3 / 4) n ? Markov’s Inequality How good is the initial solution? Let X be a random variable with P ( X < 0) = 0 . For all a > 0 we have Pr( X ≥ a ) ≤ E ( X ) Let X count the number of 1-bits in the initial solution. E ( X ) = n/ 2 . . a How likely to get exactly n/ 2 ? � n � 1 � � n/ 2 1 − 1 Pr( X = n/ 2) = E ( X ) = n/ 2; then Pr( X ≥ (3 / 4) n ) ≤ E ( X ) n/ 2 2 n/ 2 n (3 / 4) n ≤ 2 / 3 For n = 100 , Pr( X = 50) ≈ 0 . 0796 12 May 2015 16 / 19 12 May 2015 17 / 19 Heuristic Optimization Heuristic Optimization Initialization (Tail Inequalities) Initialization (Tail Inequalities): A simple example Let X 1 , X 2 , . . . X n be independent Poisson trials each with probability p i ; For X = � n i =1 X i , the expectation is E ( X ) = � p i i =1 . Let n = 100 . How likely is the initial solution no worse than OneMax ( x ) = 75 ? Chernoff Bounds Pr( X i ) = 1 / 2 and E ( X ) = 100 / 2 = 50 . − E ( x ) δ 2 • for 0 ≤ δ ≤ 1 , Pr( X ≤ (1 − δ ) E ( X )) ≤ e . 2 � � E ( X ) e δ • for δ > 0 , Pr( X > (1 + δ ) E ( X )) ≤ . Markov: Pr( X ≥ 75) ≤ 50 75 = 2 (1+ δ ) (1+ δ ) 3 . √ e � � 50 E.g., p i = 1 / 2 , E ( X ) = n/ 2 , fix δ = 1 / 2 → (1 + δ ) E ( X ) = (3 / 4) n , Chernoff: Pr( X ≥ (1 + 1 / 2)50) ≤ < 0 . 0054 . (3 / 2) (3 / 2) � n/ 2 � e 1 / 2 = c − n/ 2 . Pr( X > (3 / 4) n ) ≤ � 100 � 2 − 100 ≈ 0 . 0000002818141 . In reality, Pr( X ≥ 75) = � 100 (3 / 2) (3 / 2) i =75 i 12 May 2015 18 / 19 12 May 2015 19 / 19

Recommend

More recommend