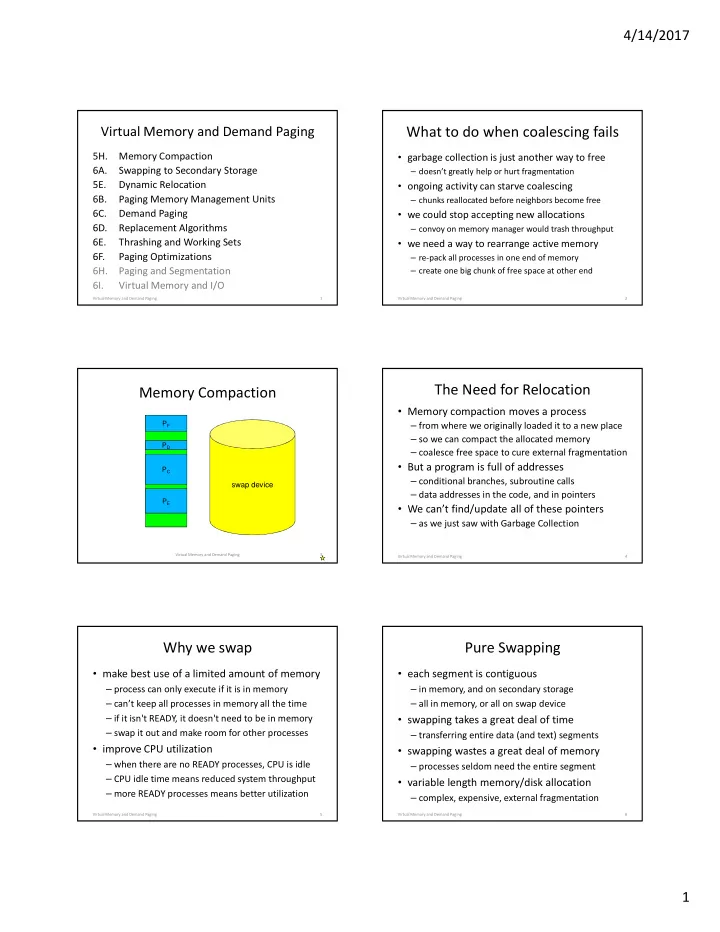

4/14/2017

Virtual Memory and Demand Paging
5H. Memory Compaction
6A. Swapping to Secondary Storage
5E. Dynamic Relocation
6B. Paging Memory Management Units
6C. Demand Paging
6D. Replacement Algorithms
6E. Thrashing and Working Sets
6F. Paging Optimizations
6H. Paging and Segmentation
6I. Virtual Memory and I/O

What to do when coalescing fails
• garbage collection is just another way to free
  – doesn’t greatly help or hurt fragmentation
• ongoing activity can starve coalescing
  – chunks are reallocated before their neighbors become free
• we could stop accepting new allocations
  – but a convoy on the memory manager would trash throughput
• we need a way to rearrange active memory
  – re-pack all processes at one end of memory
  – create one big chunk of free space at the other end

The Need for Relocation
• Memory compaction moves a process
  – from where we originally loaded it to a new place
  – so we can compact the allocated memory
  – and coalesce free space to cure external fragmentation
• But a program is full of addresses
  – conditional branches, subroutine calls
  – data addresses in the code, and in pointers
• We can’t find/update all of these pointers
  – as we just saw with garbage collection

Memory Compaction
[Figure: memory holding processes P_F, P_D, P_C, and P_E, alongside a swap device]

Why we swap
• make best use of a limited amount of memory
  – a process can only execute if it is in memory
  – we can’t keep all processes in memory all the time
  – if it isn't READY, it doesn't need to be in memory
  – swap it out and make room for other processes
• improve CPU utilization
  – when there are no READY processes, the CPU is idle
  – CPU idle time means reduced system throughput
  – more READY processes means better utilization

Pure Swapping
• each segment is contiguous
  – in memory, and on secondary storage
  – all in memory, or all on the swap device
• swapping takes a great deal of time
  – transferring entire data (and text) segments
• swapping wastes a great deal of memory
  – processes seldom need the entire segment
• variable-length memory/disk allocation
  – complex, expensive, external fragmentation
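A minimal Python sketch of the re-packing step from “What to do when coalescing fails”, assuming memory is modeled as a list of allocated blocks. The names `Block` and `compact` and the 1000-byte memory are hypothetical, not from the slides. It slides every block toward address 0 and returns the one big free chunk left at the high end. Note that blocks end up at new base addresses, which is exactly why compaction requires relocation.

```python
from dataclasses import dataclass


@dataclass
class Block:
    """An allocated chunk of physical memory (hypothetical representation)."""
    owner: str
    base: int
    size: int


def compact(blocks, mem_size):
    """Re-pack all blocks at the low end of memory.

    Returns (new_blocks, free_base, free_size): the relocated blocks and
    the single free chunk that remains at the high end of memory.
    """
    next_free = 0
    moved = []
    # Walk blocks in address order so each one only ever moves downward.
    for b in sorted(blocks, key=lambda b: b.base):
        moved.append(Block(b.owner, next_free, b.size))
        next_free += b.size
    return moved, next_free, mem_size - next_free


if __name__ == "__main__":
    # Fragmented memory: free holes at 100-199, 300-449, and 500-999.
    layout = [Block("P_F", 0, 100), Block("P_D", 200, 100), Block("P_C", 450, 50)]
    packed, free_base, free_size = compact(layout, 1000)
    for b in packed:
        print(b.owner, "now at", b.base)
    print("free chunk at", free_base, "of size", free_size)
```

Here P_D and P_C both land at new base addresses, so any absolute address a program had stored into them would now point to the wrong place.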

The Need for Dynamic Relocation
• there are a few reasons to move a process
  – it needs a larger chunk of memory
  – it was swapped out, then swapped back in to a new location
  – to compact fragmented free space
• all addresses in the program will be wrong
  – references in the code, pointers in the data
• it is not feasible to re-run the linkage editor on the program
  – new pointers have been created during run-time

Virtual Address Translation
[Figure: the virtual address space as seen by the process (0x00000000 to 0xFFFFFFFF: shared code, private data, DLL 1, private stack) passes through a (magical) address translation unit to the physical address space as seen on the CPU/memory bus]

Segment Relocation
• a natural unit of allocation and relocation
  – process address space made up of segments
  – each segment is contiguous w/no holes
• CPU has segment base registers
  – point to (physical memory) base of each segment
  – CPU automatically relocates all references
• OS uses them for virtual address translation
  – set base to region where segment is loaded
  – efficient: CPU can relocate every reference
  – transparent: any segment can move anywhere
[Figure: code, data, aux, and stack base registers map the virtual segments (shared code, private data, DLL 1, private stack) onto code, data, DLL, and stack regions of physical memory, using $\text{physical} = \text{virtual} + \text{base}_{\text{seg}}$]

Moving a Segment
[Figure: Let’s say we need to move the stack in physical memory. The virtual address of the stack doesn’t change; we just change the value in the stack base register. Virtual segments: shared code, private data, DLL 1, DLL 2, DLL 3, private stack; $\text{physical} = \text{virtual} + \text{base}_{\text{seg}}$]

Privacy and Protection
• confine process to its own address space
  – each segment also has a length/limit register
  – CPU verifies all offsets are within range
  – generates an addressing exception if not
• protecting read-only segments
  – associate read/write access with each segment
  – CPU ensures integrity of read-only segments
• segmentation register update is privileged
  – only kernel-mode code can do this
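The segment slides combine three mechanisms: relocation ($\text{physical} = \text{virtual} + \text{base}_{\text{seg}}$), a limit check, and per-segment read/write access. A small Python model of them follows, assuming hypothetical names (`SegmentRegisters`, `AddressingException`, `move_segment`) and a `bytearray` standing in for physical memory; it is a sketch of the idea, not how any real CPU exposes these registers.

```python
class AddressingException(Exception):
    """Raised for an out-of-range offset or a write to a read-only segment."""


class SegmentRegisters:
    """Hypothetical model of one process's segment base/limit/access registers."""

    def __init__(self):
        self.regs = {}  # segment name -> (base, limit, writable)

    def load(self, seg, base, limit, writable):
        # Privileged on real hardware: only kernel-mode code may do this.
        self.regs[seg] = (base, limit, writable)

    def translate(self, seg, offset, write=False):
        base, limit, writable = self.regs[seg]
        if not 0 <= offset < limit:           # length/limit register check
            raise AddressingException(f"offset {offset:#x} outside segment {seg}")
        if write and not writable:            # read-only protection
            raise AddressingException(f"write to read-only segment {seg}")
        return base + offset                  # physical = virtual + base_seg


def move_segment(regs, memory, seg, new_base):
    """Copy a segment to new_base, then change only its base register."""
    base, limit, writable = regs.regs[seg]
    memory[new_base:new_base + limit] = memory[base:base + limit]
    regs.load(seg, new_base, limit, writable)


if __name__ == "__main__":
    memory = bytearray(0x10000)               # 64 KiB of "physical memory"
    regs = SegmentRegisters()
    regs.load("code", 0x1000, 0x800, writable=False)
    regs.load("stack", 0x4000, 0x400, writable=True)
    memory[regs.translate("stack", 0x10, write=True)] = 42
    move_segment(regs, memory, "stack", 0x8000)
    # Same virtual offset, new physical address, same data.
    print(hex(regs.translate("stack", 0x10)), memory[regs.translate("stack", 0x10)])
```

Moving the stack leaves its virtual offset 0x10 unchanged; only the stack base register differs, which is the point of the “Moving a Segment” slide.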

Are Segments the Answer?
• a very natural unit of address space
  – variable-length, contiguous data blobs
  – all-or-none with uniform r/w or r/o access
  – convenient/powerful virtual address abstraction
• but they are variable length
  – they require contiguous physical memory
  – ultimately leading to external fragmentation
  – requiring expensive swapping for compaction

paged address translation
[Figure: the CODE, DATA, and STACK segments of the process virtual address space are mapped page by page onto scattered pages of physical memory. Caption: “… and in that moment he was enlightened …”]

Paging Memory Management Unit
[Figure: a virtual address is split into page # and offset. The virtual page # is used as an index into the page table; the selected entry's valid bit is checked to ensure that this virtual page # is legal, and the entry contains the physical page number. The offset within the page remains the same in the physical address.]

Paging and Fragmentation
a segment is implemented as a set of virtual pages
• internal fragmentation
  – averages only ½ page (half of the last one)
• external fragmentation
  – completely non-existent (we never carve up pages)

Paging Relocation Examples
page table (virtual page #: entry): 0: V 0C20, 1: V 0105, 2: V 00A1, 3: 0, 4: V 041F, 5: 0, 6: V 0D10, 7: V 0AC3
virtual address → physical address
0000 1C08 → 0C20 1C08
0004 0100 → 041F 0100
0005 3E28 → page fault

Supplementary Slides
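The paging MMU slide can be sketched in Python. This assumes a 32-bit virtual address split into a 16-bit page # and a 16-bit offset (suggested by the four-hex-digit fields in the relocation examples, but still an assumption), and reuses that example's eight-entry page table. `translate`, `PageFault`, and `PAGE_TABLE` are hypothetical names.

```python
OFFSET_BITS = 16                      # assumed from the 4-hex-digit offset field
OFFSET_MASK = (1 << OFFSET_BITS) - 1


class PageFault(Exception):
    """Raised when the selected page table entry is not valid."""


# (valid bit, physical page #) for virtual pages 0..7, as in the example table
PAGE_TABLE = [
    (True, 0x0C20), (True, 0x0105), (True, 0x00A1), (False, 0),
    (True, 0x041F), (False, 0), (True, 0x0D10), (True, 0x0AC3),
]


def translate(vaddr, page_table=PAGE_TABLE):
    vpage = vaddr >> OFFSET_BITS      # virtual page # indexes the page table
    offset = vaddr & OFFSET_MASK      # offset within page remains the same
    if vpage >= len(page_table) or not page_table[vpage][0]:
        raise PageFault(f"virtual page {vpage:#06x} not valid")
    frame = page_table[vpage][1]      # entry contains the physical page #
    return (frame << OFFSET_BITS) | offset


if __name__ == "__main__":
    for va in (0x00001C08, 0x00040100, 0x00053E28):
        try:
            print(f"{va:08X} -> {translate(va):08X}")
        except PageFault as e:
            print(f"{va:08X} -> page fault ({e})")
```

The three addresses reproduce the slide's examples: pages 0 and 4 are relocated, and page 5 has its valid bit clear, so the access faults.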

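The “averages only ½ page” claim under Paging and Fragmentation follows if segment sizes are spread evenly across the possible sizes (an assumption; real workloads may not be uniform). The waste is whatever remains unused in the last page. A short Python check, with hypothetical helper names and an assumed 4096-byte page:

```python
def pages_needed(seg_size, page_size):
    """Whole pages required to hold a segment (ceiling division)."""
    return -(-seg_size // page_size)


def internal_waste(seg_size, page_size):
    """Unused bytes at the end of the segment's last page."""
    return pages_needed(seg_size, page_size) * page_size - seg_size


if __name__ == "__main__":
    PAGE = 4096                       # assumed page size for illustration
    sizes = range(1, 4 * PAGE + 1)    # every segment size from 1 byte to 4 pages
    avg = sum(internal_waste(s, PAGE) for s in sizes) / len(sizes)
    print(avg)                        # 2047.5, i.e. (PAGE - 1) / 2, about half a page
```

Within each run of `PAGE` consecutive sizes the waste takes every value from `PAGE - 1` down to 0 exactly once, so the average is $(P-1)/2$ for page size $P$.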
implementing a paging MMU
• MMUs used to sit between the CPU and bus
  – now they are typically integrated into the CPU
• page tables
  – originally implemented in special fast registers
  – now, w/larger address spaces, stored in memory
  – entries cached in very fast registers as they are used
  – which makes cache invalidation an issue
• optional features
  – read/write access control, referenced/dirty bits
  – separate page tables for each processor mode

updating a paging MMU
• adding/removing pages for current process
  – directly update active page table in memory
  – privileged instruction to flush (stale) cached entries
• switching from one process to another
  – maintain separate page tables for each process
  – privileged instruction loads pointer to new page table
  – reload instruction flushes previously cached entries
• sharing pages between multiple processes
  – make each page table point to same physical page
  – can be read-only or read/write sharing

Swapping is Wasteful
• process does not use all its pages all the time
  – code and data both exhibit reference locality
  – some code/data may seldom be used
• keeping all pages in memory wastes space
  – more space/process = fewer processes in memory
• swapping them all in and out wastes time
  – longer transfers, longer waits for disk
• it arbitrarily limits the size of a process
  – process must be smaller than available memory

Loading Pages “On Demand”
• paging MMU supports not-present pages
  – CPU access of present pages proceeds normally
• accessing a not-present page generates a trap
  – operating system can process this “page fault”
  – recognize that it is a request for another page
  – read that page in and resume process execution
• entire process needn’t be in memory to run
  – start each process with a subset of its pages
  – load additional pages as program demands them

Page Fault Handling
• initialize page table entries to not present
• CPU faults when an invalid page is referenced
  1. trap forwarded to page fault handler
  2. determine which page, and where it resides
  3. find and allocate a free page frame
  4. block process, schedule I/O to read page in
  5. update page table to point at newly read-in page
  6. back up user-mode PC to retry failed instruction
  7. unblock process, return to user mode
• Meanwhile, other processes can run

Demand Paging – advantages
• improved system performance
  – fewer in-memory pages per process
  – more processes in primary memory
    • more parallelism, better throughput
    • better response time for processes already in memory
  – less time required to page processes in and out
  – less disk I/O means reduced queuing delays
• fewer limitations on process size
  – process can be larger than physical memory
  – process can have huge (sparse) virtual space
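The “implementing/updating a paging MMU” slides note that page table entries are cached in fast registers (hardware commonly calls this a TLB), so the OS must flush stale entries after editing the active table, and loading a new page table pointer flushes the cache. Below is a toy Python model, assuming dict-based page tables and a dict cache; `MMU`, `load_page_table`, `flush`, and `lookup` are hypothetical names. It also shows sharing: two tables map a page to the same physical page.

```python
class MMU:
    """Toy paging MMU with a small translation cache (hypothetical model).

    Page tables live in "memory" as dicts: virtual page # -> physical page #.
    """

    def __init__(self):
        self.page_table = None   # pointer to the active page table
        self.cache = {}          # cached translations, filled as they are used

    def load_page_table(self, table):
        # Privileged on real hardware: load pointer to new table, flush cache.
        self.page_table = table
        self.cache.clear()

    def flush(self, vpage=None):
        # Privileged on real hardware: drop one stale entry, or all of them.
        if vpage is None:
            self.cache.clear()
        else:
            self.cache.pop(vpage, None)

    def lookup(self, vpage):
        if vpage not in self.cache:
            self.cache[vpage] = self.page_table[vpage]  # cache on first use
        return self.cache[vpage]


if __name__ == "__main__":
    SHARED = 0x0C20                  # one physical page mapped by both processes
    proc_a = {0: 0x0105, 1: SHARED}
    proc_b = {0: 0x00A1, 5: SHARED}
    mmu = MMU()
    mmu.load_page_table(proc_a)
    print(hex(mmu.lookup(0)))        # 0x105
    mmu.load_page_table(proc_b)      # context switch: new table, cache flushed
    print(hex(mmu.lookup(0)))        # 0xa1, not process A's 0x105
    proc_b[0] = 0x0D10               # OS remaps page 0 in the active table...
    print(hex(mmu.lookup(0)))        # 0xa1: the cached entry is now stale
    mmu.flush(0)                     # ...so it must flush that entry
    print(hex(mmu.lookup(0)))        # 0xd10
```

The stale lookup after remapping is the “cache invalidation issue” the slides mention; without the flush on `load_page_table`, process B would have translated page 0 with process A's mapping.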

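The Page Fault Handling steps can be modeled with a toy demand pager in Python. All names (`PTE`, `DemandPager`, `access`, `handle_fault`) are hypothetical, the backing store is a plain dict, and the “I/O” is instantaneous. When no free frame is left it simply raises, since choosing a victim page is the job of a replacement algorithm.

```python
class PTE:
    """Page table entry: present bit plus physical frame number."""

    def __init__(self):
        self.present = False   # every entry starts out "not present"
        self.frame = None


class DemandPager:
    """Toy demand pager; names and structure are hypothetical."""

    def __init__(self, num_pages, num_frames, backing_store):
        self.page_table = [PTE() for _ in range(num_pages)]
        self.free_frames = list(range(num_frames))
        self.frames = [None] * num_frames     # contents of physical memory
        self.backing_store = backing_store    # page # -> page contents on disk
        self.faults = 0

    def access(self, vpage):
        pte = self.page_table[vpage]
        if not pte.present:
            self.handle_fault(vpage)          # fault, then retry the access
        return self.frames[pte.frame]

    def handle_fault(self, vpage):
        self.faults += 1
        # 1-2. trap arrives here; the page resides in its backing-store slot
        contents = self.backing_store[vpage]
        # 3. find and allocate a free page frame
        if not self.free_frames:
            raise MemoryError("no free frame; a replacement algorithm is needed")
        frame = self.free_frames.pop()
        # 4. "read" the page in (a real OS would block the process during I/O)
        self.frames[frame] = contents
        # 5. update the page table to point at the newly read-in page
        pte = self.page_table[vpage]
        pte.frame, pte.present = frame, True
        # 6-7. returning to access() retries the reference that faulted


if __name__ == "__main__":
    store = {n: f"page {n} data" for n in range(8)}
    pager = DemandPager(num_pages=8, num_frames=4, backing_store=store)
    for vp in [0, 1, 0, 2, 1, 0]:
        pager.access(vp)
    print(pager.faults)   # 3: only the first touch of pages 0, 1, 2 faults
```

Only the pages actually referenced are ever loaded, which is the “start each process with a subset of its pages” idea from Loading Pages “On Demand”.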