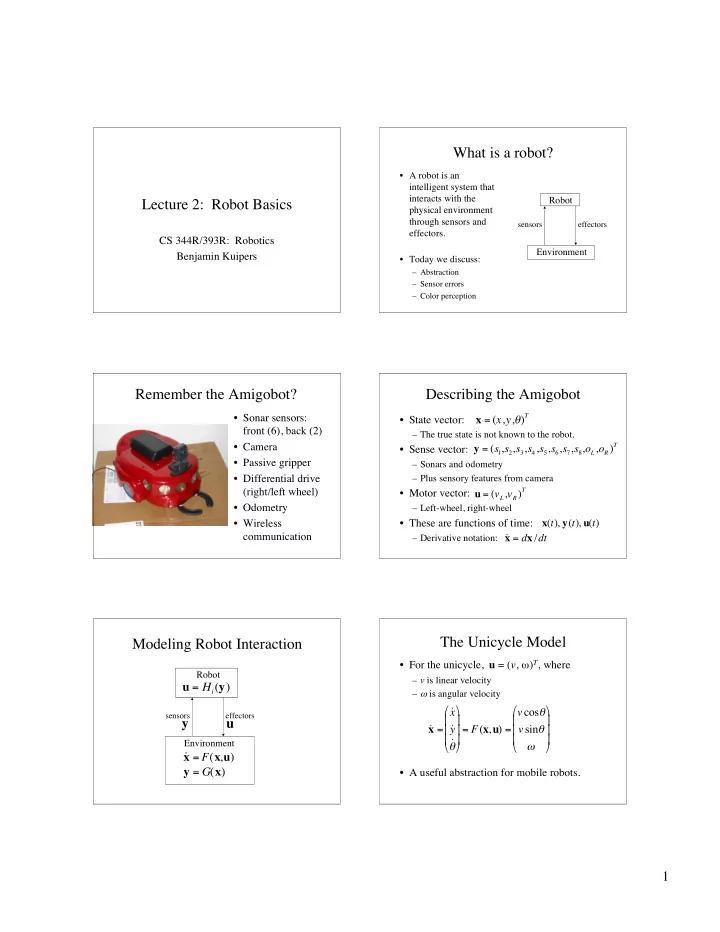

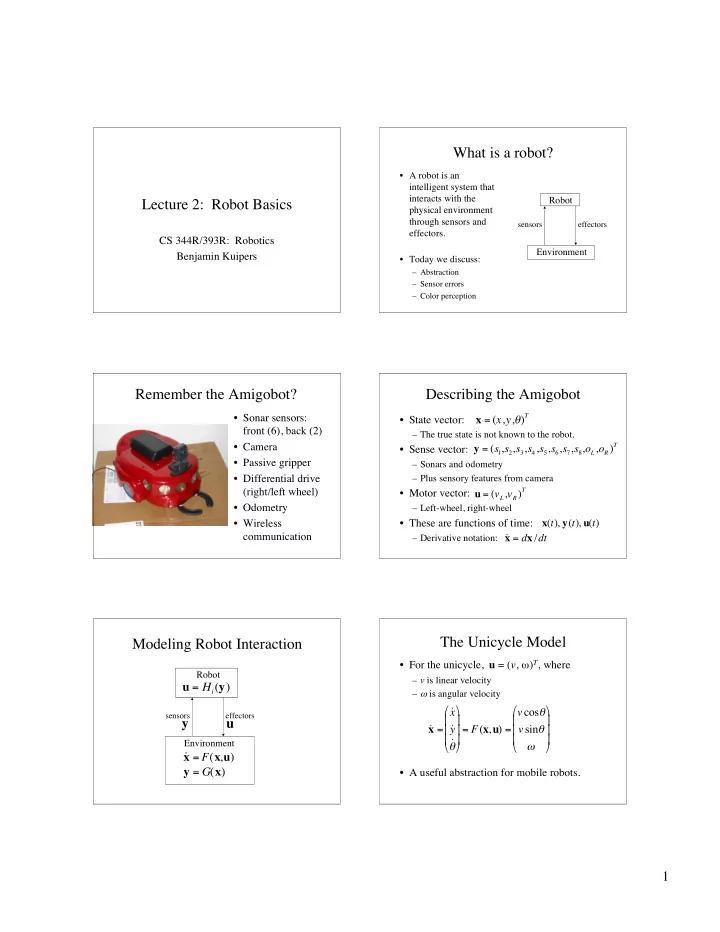

CS 344R/393R: Robotics
Lecture 2: Robot Basics
Benjamin Kuipers

What is a robot?
• A robot is an intelligent system that interacts with the physical environment through sensors and effectors.
[Figure: Robot and Environment connected through sensors and effectors.]
• Today we discuss:
– Abstraction
– Sensor errors
– Color perception

Remember the Amigobot?
• Sonar sensors: front (6), back (2)
• Camera
• Passive gripper
• Differential drive (right/left wheel)
• Odometry
• Wireless communication

Describing the Amigobot
• State vector: $\mathbf{x} = (x, y, \theta)^T$
– The true state is not known to the robot.
• Sense vector: $\mathbf{y} = (s_1, s_2, s_3, s_4, s_5, s_6, s_7, s_8, o_L, o_R)^T$
– Sonars and odometry
– Plus sensory features from the camera
• Motor vector: $\mathbf{u} = (v_L, v_R)^T$
– Left-wheel, right-wheel
• These are functions of time: $\mathbf{x}(t)$, $\mathbf{y}(t)$, $\mathbf{u}(t)$
– Derivative notation: $\dot{\mathbf{x}} = d\mathbf{x}/dt$

The Unicycle Model
• For the unicycle, $\mathbf{u} = (v, \omega)^T$, where
– $v$ is linear velocity
– $\omega$ is angular velocity

$$
\dot{\mathbf{x}} = \begin{pmatrix} \dot{x} \\ \dot{y} \\ \dot{\theta} \end{pmatrix} = F(\mathbf{x}, \mathbf{u}) = \begin{pmatrix} v \cos\theta \\ v \sin\theta \\ \omega \end{pmatrix}
$$

• A useful abstraction for mobile robots.

Modeling Robot Interaction
• Robot (through its sensors and effectors): $\mathbf{u} = H_i(\mathbf{y})$
• Environment: $\dot{\mathbf{x}} = F(\mathbf{x}, \mathbf{u})$, $\quad \mathbf{y} = G(\mathbf{x})$
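A minimal sketch of the unicycle model, assuming a simple forward-Euler integration of $\dot{\mathbf{x}} = F(\mathbf{x}, \mathbf{u})$ with a fixed time step. The names `unicycle_step` and `simulate` are hypothetical, and the `dt` values are illustrative rather than any robot's real update rate.

```python
import math


def unicycle_step(x, y, theta, v, omega, dt):
    """Advance the unicycle state (x, y, theta) by one forward-Euler step.

    Implements x_dot = v cos(theta), y_dot = v sin(theta), theta_dot = omega.
    """
    x_new = x + v * math.cos(theta) * dt
    y_new = y + v * math.sin(theta) * dt
    theta_new = theta + omega * dt
    return x_new, y_new, theta_new


def simulate(state, v, omega, dt, steps):
    """Apply a constant motor vector u = (v, omega) for `steps` Euler steps."""
    x, y, theta = state
    for _ in range(steps):
        x, y, theta = unicycle_step(x, y, theta, v, omega, dt)
    return x, y, theta


if __name__ == "__main__":
    # Straight ahead at 100 mm/s for 1 s: ends approximately at (100, 0, 0).
    print(simulate((0.0, 0.0, 0.0), v=100.0, omega=0.0, dt=0.01, steps=100))
    # Turning in place at 0.5 rad/s for 2 s: position unchanged, theta about 1 rad.
    print(simulate((0.0, 0.0, 0.0), v=0.0, omega=0.5, dt=0.01, steps=200))
```

Forward Euler is the simplest choice; smaller `dt` reduces its error on curved paths.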

The Amigobot is (like) a Unicycle

$$
\dot{\mathbf{x}} = \begin{pmatrix} \dot{x} \\ \dot{y} \\ \dot{\theta} \end{pmatrix} = F(\mathbf{x}, \mathbf{u}) = \begin{pmatrix} v \cos\theta \\ v \sin\theta \\ \omega \end{pmatrix}
$$

• Amigobot motor vector: $\mathbf{u} = (v_L, v_R)^T$
$v = (v_R + v_L)/2$ (mm/sec)
$\omega = (v_R - v_L)/B$ (rad/sec)
where $B$ is the robot wheelbase (mm).

Abstracting the Robot Model
[Figure, built up over several slides: the Environment exchanges raw signals $\mathbf{y}$ and $\mathbf{u}$ with the Robot; a sensory feature computes $\mathbf{y}'$ from $\mathbf{y}$, a motor command turns $\mathbf{u}'$ into $\mathbf{u}$, and a control law maps $\mathbf{y}'$ to $\mathbf{u}'$. A further layer exchanges $\mathbf{y}''$ and $\mathbf{u}''$.]
• By implementing sensory features and control laws, we define a new robot model.
– New sensory features $\mathbf{y}''$
– New motor signals $\mathbf{u}''$
• The robot's "environment" changes
– from continuous, local, uncertain …
– to a reliable discrete graph of actions.
– (For example. Perhaps. If you are lucky.)
• We abstract the Aibo to the Unicycle model
– Abstracting away joint positions and trajectories
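The wheel-speed conversion on the "Amigobot is (like) a Unicycle" slide can be written as a pair of functions. This is a sketch: `wheels_to_unicycle` and `unicycle_to_wheels` are hypothetical names, and the 280 mm wheelbase in the demo is a placeholder, not the Amigobot's measured value.

```python
def wheels_to_unicycle(v_left, v_right, wheelbase):
    """Convert wheel speeds (mm/s) to unicycle controls (v in mm/s, omega in rad/s).

    Uses v = (vR + vL) / 2 and omega = (vR - vL) / B.
    """
    if wheelbase <= 0:
        raise ValueError("wheelbase must be positive")
    v = (v_right + v_left) / 2.0
    omega = (v_right - v_left) / wheelbase
    return v, omega


def unicycle_to_wheels(v, omega, wheelbase):
    """Inverse mapping: wheel speeds (v_left, v_right) that realize (v, omega)."""
    v_right = v + omega * wheelbase / 2.0
    v_left = v - omega * wheelbase / 2.0
    return v_left, v_right


if __name__ == "__main__":
    B = 280.0  # placeholder wheelbase (mm), not a measured Amigobot value
    print(wheels_to_unicycle(100.0, 100.0, B))   # equal speeds: straight ahead
    print(wheels_to_unicycle(-140.0, 140.0, B))  # opposite speeds: spin in place
```

The inverse direction is what lets a control law written for the unicycle abstraction drive the real differential-drive wheels.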

A Topological Abstraction
• For example, the abstracted motor signal $\mathbf{u}''$ could select a control law from:
– TurnRight, TurnLeft, Rwall, Lwall, Midline
• The abstracted sensor signal $\mathbf{y}''$ could be a Boolean vector describing nearby obstacles:
– [L, FL, F, FR, R]
• The continuous environment is abstracted to a discrete graph.
– Discrete actions are implemented as continuous control laws.

Types of Robots
• Mobile robots
– Our class focuses on these.
– Autonomous agents in unfamiliar environments.
• Robot manipulators
– Often used in factory automation.
– Programmed for a perfectly known workspace.
• Environmental monitoring robots
– Distributed sensor systems ("motes")
• And many others …
– Web 'bots, etc.

Types of Sensors
• Range-finders: sonar, laser, IR
• Odometry: shaft encoders, ded reckoning
• Bump: contact, threshold
• Orientation: compass, accelerometers
• GPS
• Vision: high-res image, blobs to track, motion
• …

Sensor Errors: Accuracy and Precision
[Figure: panels labeled "precise", "accurate", and "both".]
• Related to random vs. systematic errors: precision reflects random error (scatter), accuracy reflects systematic error (bias).

Sonar Sweeps a Wide Cone
• One return tells us about many cells.
• The obstacle could be anywhere on the arc at distance $D$.
• The space closer than $D$ is likely to be free.
• Two Gaussians in polar coordinates.

Sonar vs Ray-Tracing
• Sonar doesn't perceive distance directly.
• It measures "time to echo" and estimates distance.
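One way to turn the "Sonar Sweeps a Wide Cone" idea into numbers for an occupancy grid is sketched below. It assumes one Gaussian in range (centered on the reading $D$) and one in bearing (centered on the sonar axis); `sonar_evidence`, `sigma_r`, and `sigma_phi` are hypothetical, the spreads are placeholder values, and the hard cutoff for "free" space is a simplification.

```python
import math


def gaussian(z, sigma):
    """Unnormalized Gaussian weight exp(-z^2 / (2 sigma^2)), in (0, 1]."""
    return math.exp(-0.5 * (z / sigma) ** 2)


def sonar_evidence(cell_range, cell_bearing, reading,
                   sigma_r=50.0, sigma_phi=math.radians(12.0)):
    """Evidence that a cell is occupied or free, given one sonar return.

    cell_range, reading: distances from the sensor (mm).
    cell_bearing: angle of the cell off the sonar axis (rad).
    sigma_r, sigma_phi: hypothetical spreads in range and bearing.
    Returns (occupied, free), each in [0, 1].
    """
    angular = gaussian(cell_bearing, sigma_phi)
    # Occupied: the echo could come from anywhere on the arc at range `reading`.
    occupied = gaussian(cell_range - reading, sigma_r) * angular
    # Free: cells well inside the arc are likely empty.
    free = angular if cell_range < reading - 2.0 * sigma_r else 0.0
    return occupied, free
```

A single reading thus spreads weak evidence over every cell in the cone, which is why one return "tells us about many cells".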

Data on sonar responses
• Sensing a flat board (left) or pole (right) at different distances and angles.
• For the board (2' x 8'), secondary and tertiary lobes of the sonar signal are important.

Sonar chirp fills a wide cone
[Figure]

Specular Reflections in Sonar
• Multi-path (specular) reflections give spuriously long range measurements.

Exploring a Hallway with Sonar
[Figure]

A Useful Heuristic for Sonar
• Short sonar returns are reliable.
– They are likely to be perpendicular reflections.

Lassie “sees” the world with a Laser Rangefinder
• 180 ranges over a 180° planar field of view
• About 13" above the ground plane
• 10-12 scans per second
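Using Lassie's laser data usually starts by converting each scan from polar to Cartesian coordinates in the sensor frame. This sketch assumes beam 0 points 90° to the right and each later beam is 1° further counterclockwise; the real scanner's start angle and beam ordering should be checked against its documentation, and `scan_to_points` is a hypothetical name.

```python
import math


def scan_to_points(ranges, start_angle_deg=-90.0, step_deg=1.0):
    """Convert a planar range scan to (x, y) points in the sensor frame.

    Beam i points at start_angle_deg + i * step_deg, measured from the
    sensor's forward (x) axis, counterclockwise positive.
    """
    points = []
    for i, r in enumerate(ranges):
        a = math.radians(start_angle_deg + i * step_deg)
        points.append((r * math.cos(a), r * math.sin(a)))
    return points


if __name__ == "__main__":
    scan = [1000.0] * 180  # hypothetical scan: everything 1 m away
    pts = scan_to_points(scan)
    print(len(pts), pts[90])  # the middle beam points straight ahead
```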

Laser Rangefinder Image
• 180 narrow beams at 1° intervals.

Ded ("Dead") Reckoning
• From shaft encoders, deduce $(\Delta x_i, \Delta y_i, \Delta\theta_i)$
• Deduce total displacement from start:

$$
(x, y, \theta) = (0, 0, 0) + \sum_i (\Delta x_i, \Delta y_i, \Delta\theta_i)
$$

• How reliable is this? It's pretty bad.
– Each $(\Delta x_i, \Delta y_i, \Delta\theta_i)$ is OK.
– Cumulative errors in $\theta$ make $x$ and $y$ unreliable, too, because each later $(\Delta x_i, \Delta y_i)$ is projected along the erroneous heading.

Odometry-Only Tracking: 6 times around a 2m x 3m area
• This will be worse for the Aibo walking.

Human Color Perception
• Perceived color is a function of the relative activation of three types of cones in the retina.

RGB: An Additive Color Model
• Three primary colors stimulate the three types of cones, to achieve the desired color perception.

The Gamut of the Human Eye
• Gamut: the set of expressible colors
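The dead-reckoning sum can be sketched for a differential-drive robot, assuming each encoder step reports the distance traveled by the left and right wheels. The midpoint-heading update is one common choice, not necessarily what the Amigobot firmware does; `dead_reckon`, the wheelbase, and the 0.1% wheel mismatch in the demo are hypothetical. The demo illustrates the slide's point: with a constant heading bias, $\theta$ drifts linearly with distance and the lateral error in $y$ grows roughly quadratically.

```python
import math


def dead_reckon(increments, wheelbase, pose=(0.0, 0.0, 0.0)):
    """Integrate wheel-encoder increments into a pose estimate.

    increments: iterable of (d_left, d_right) wheel travel per step (mm).
    wheelbase: distance between the wheels (mm).
    Returns the final (x, y, theta), starting from `pose`.
    """
    x, y, theta = pose
    for d_left, d_right in increments:
        ds = (d_right + d_left) / 2.0            # distance traveled by the center
        dtheta = (d_right - d_left) / wheelbase  # change in heading
        # Project ds along the mid-step heading to get world-frame (dx, dy).
        mid = theta + dtheta / 2.0
        x += ds * math.cos(mid)
        y += ds * math.sin(mid)
        theta += dtheta
    return x, y, theta


if __name__ == "__main__":
    B = 280.0  # hypothetical wheelbase (mm)
    straight = [(10.0, 10.0)] * 300   # 3 m straight ahead, perfect encoders
    biased = [(10.0, 10.01)] * 300    # same path, 0.1% wheel mismatch
    print(dead_reckon(straight, B))
    print(dead_reckon(biased, B))
```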

HSV: Hue-Saturation-Value
• HSV attempts to model human perception
– L*a*b* (CIELAB) is more perceptually accurate
– L*: lightness; a*: red-green axis; b*: yellow-blue axis

Color Perception and Display
• Only some human-perceptible colors can be displayed using three primaries.

Aibo Uses the YUV Color Model
• RGB rotated
– Y: luminance
– U-V: chrominance (the U-V plane encodes hue)
• Used in the PAL video format
• To track, define a region in color space.
– See Tekkotsu tutorial

Our Goals for Robotics
• From noisy low-level sensors and effectors, we want to define
– reliable higher-level sensory features,
– reliable control laws for meaningful actions,
– reliable higher-level motor commands.
• Understand the sensors and effectors
– Especially including their errors
• Use abstraction
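To "define a region in color space", a tracker can convert each pixel to YUV and test it against a box of thresholds. This sketch assumes RGB values in [0, 1] and the analog (PAL-style) YUV equations with BT.601 luma weights; a real camera pipeline such as the Aibo's likely uses scaled, offset 8-bit channels, and the `ORANGE` region is a made-up example, not a Tekkotsu setting.

```python
def rgb_to_yuv(r, g, b):
    """Convert RGB in [0, 1] to analog YUV using BT.601 luma weights.

    Y is luminance; U and V are scaled color-difference (chrominance) signals.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 0.492 * (b - y)
    v = 0.877 * (r - y)
    return y, u, v


def in_color_region(yuv, region):
    """True if a YUV triple lies inside an axis-aligned box in color space.

    region: ((y_min, y_max), (u_min, u_max), (v_min, v_max)).
    """
    return all(lo <= c <= hi for c, (lo, hi) in zip(yuv, region))


# Hypothetical "orange" region: moderate-to-high brightness, low U, high V.
ORANGE = ((0.2, 1.0), (-0.4, 0.0), (0.15, 0.7))

if __name__ == "__main__":
    print(in_color_region(rgb_to_yuv(1.0, 0.5, 0.0), ORANGE))  # orange pixel
    print(in_color_region(rgb_to_yuv(0.0, 0.0, 1.0), ORANGE))  # blue pixel
```

Keeping luminance in its own channel lets the box be wide in Y and narrow in U-V, so the test tolerates changes in lighting better than a box in RGB would.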
