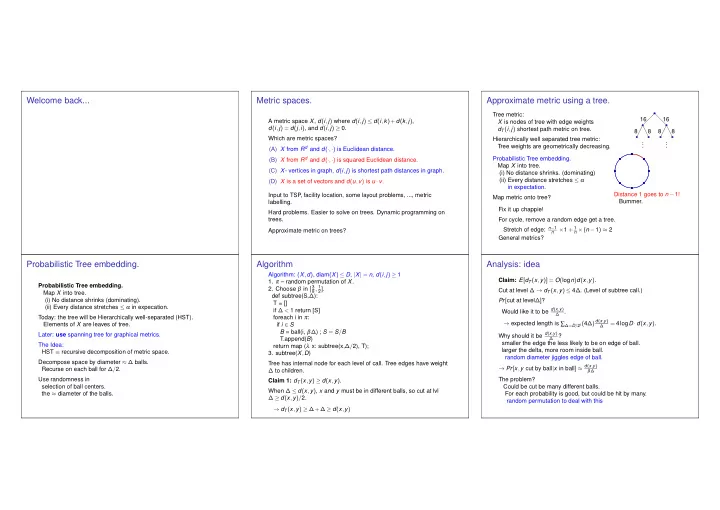

Welcome back... Metric spaces. Approximate metric using a tree. Tree metric: 16 16 A metric space X , d ( i , j ) where d ( i , j ) ≤ d ( i , k )+ d ( k , j ) , X is nodes of tree with edge weights d ( i , j ) = d ( j , i ) , and d ( i , j ) ≥ 0. d T ( i , j ) shortest path metric on tree. 8 8 8 8 Which are metric spaces? Hierarchically well separated tree metric: . . . . . . (A) X from R d and d ( · , · ) is Euclidean distance. Tree weights are geometrically decreasing. (B) X from R d and d ( · , · ) is squared Euclidean distance. Probabilistic Tree embedding. Map X into tree. (C) X - vertices in graph, d ( i , j ) is shortest path distances in graph. (i) No distance shrinks. (dominating) (ii) Every distance stretches ≤ α (D) X is a set of vectors and d ( u , v ) is u · v . in expectation. Distance 1 goes to n − 1! Input to TSP , facility location, some layout problems, ..., metric Map metric onto tree? Bummer. labelling. Fix it up chappie! Hard problems. Easier to solve on trees. Dynamic programming on trees. For cycle, remove a random edge get a tree. Stretch of edge: n − 1 × 1 + 1 n × ( n − 1 ) ≈ 2 Approximate metric on trees? n General metrics? Probabilistic Tree embedding. Algorithm Analysis: idea Algorithm: ( X , d ) , diam ( X ) ≤ D , | X | = n , d ( i , j ) ≥ 1 Claim: E [ d T ( x , y )] = O ( log n ) d ( x , y ) . 1. π – random permutation of X . Probabilistic Tree embedding. 2. Choose β in [ 3 8 , 1 2 ] . Cut at level ∆ → d T ( x , y ) ≤ 4 ∆ . (Level of subtree call.) Map X into tree. def subtree(S, ∆ ): (i) No distance shrinks (dominating). Pr [ cut at level ∆] ? T = [] (ii) Every distance stretches ≤ α in expecation. if ∆ < 1 return [S] Would like it to be d ( x , y ) . ∆ Today: the tree will be Hierarchically well-separated (HST). foreach i in π : → expected length is ∑ ∆= D / 2 i ( 4 ∆) d ( x , y ) = 4log D · d ( x , y ) . Elements of X are leaves of tree. if i ∈ S ∆ B = ball(i, β ∆ ) ; S = S / B Why should it be d ( x , y ) Later: use spanning tree for graphical metrics. ? T.append( B ) ∆ smaller the edge the less likely to be on edge of ball. The Idea: return map ( λ x: subtree(x, ∆ / 2), T); larger the delta, more room inside ball. HST ≡ recursive decomposition of metric space. 3. subtree( X , D ) random diameter jiggles edge of ball. Decompose space by diameter ≈ ∆ balls. Tree has internal node for each level of call. Tree edges have weight → Pr [ x , y cut by ball | x in ball ] ≈ d ( x , y ) Recurse on each ball for ∆ / 2. ∆ to children. β ∆ Use randomness in The problem? Claim 1: d T ( x , y ) ≥ d ( x , y ) . selection of ball centers. Could be cut be many different balls. When ∆ ≤ d ( x , y ) , x and y must be in different balls, so cut at lvl the ≈ diameter of the balls. For each probability is good, but could be hit by many. ∆ ≥ d ( x , y ) / 2. random permutation to deal with this → d T ( x , y ) ≥ ∆+∆ ≥ d ( x , y )

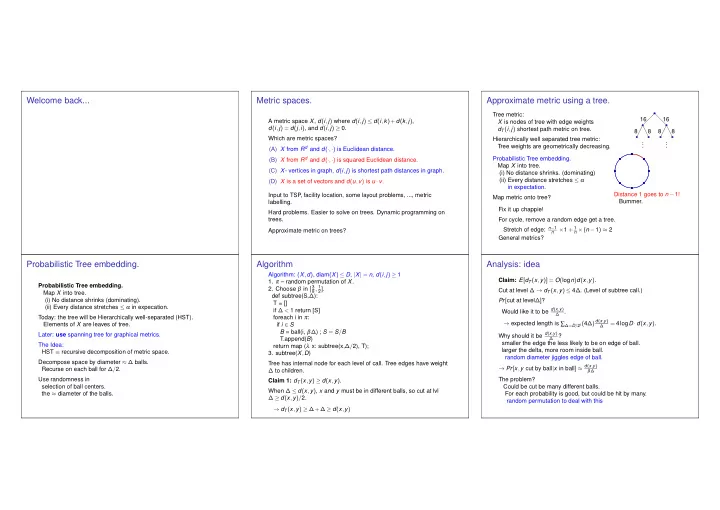

Analysis: ( x , y ) The pipes are distinct! Metric Labelling Would like Pr [ x , y cut by ball | x in ball ] ≤ 8 d ( x , y ) ∆ (Only consider cut by x , factor 2 loss.) � � 1 E ( d T ( x , y )] = ∑ ∆= D / 2 i ∑ j ∈ X ∆ 32 d ( x , y ) j At level ∆ Recall X ∆ has nodes with d ( x , j ) ∈ [∆ / 4 , ∆ / 2 ] Input: graph G = ( V , E ) with edge weights, w ( · ) , metric labels ( X , d ) , At some point x is in some ∆ level ball. and costs for mapping vertices to labels c : V × X . Renumber nodes in order of distance from x . “Listen Stash, the pipes are distinct!!” Find an labeling of vertices, ℓ : V → X that minimizes If d ( x , y ) ≥ ∆ / 8, 8 d ( x , y ) ≥ 1, so claim holds trivially. Uh.. well X ∆ is distinct from X ∆ / 2 . ∆ ∑ e =( u , v ) c ( e ) d ( l ( u ) , l ( v ))+ ∑ v c ( v , l ( v )) j can only cut ( x , y ) if d ( j , x ) ∈ [∆ / 4 , ∆ / 2 ] (else ( x , y ) entirely in ball), � � 1 E ( d T ( x , y )] = ∑ ∆= D 2 i ∑ j ∈ X ∆ 32 d ( x , y ) j Call this set X ∆ . Idea: find HST for metric ( X , d ) . � � 1 ≤ ∑ j 32 d ( x , y ) j ∈ X ∆ cuts ( x , y ) if.. Solve the problem on a hierarchically well separated tree metric. j d ( j , x ) ≤ β ∆ and β ∆ ≤ d ( j , y ) ≤ d ( j , x )+ d ( x , y ) ≤ ( 32ln n )( d ( x , y )) . Kleinberg-Tardos: constant factor on uniform metric. → β ∆ ∈ [ d [ j , x ] , d ( j , x )+ d ( x , y )] . Claim: E [ d T ( x , y )] = O ( logn ) d ( x , y ) Hierarchically well separated tree, “geometric”, constant factor. occurs with prob. d ( x , y ) ∆ / 8 = 8 d ( x , y ) . ∆ Expected stretch is O ( log n ) . → O ( log n ) approximation. And j must be before any i < j in π → prob is 1 We gave an algorithm that produces a distribution of trees. j � � 8 d ( x , y ) 1 → Pr [ j cuts ( x , y )] ≤ The expected stretch of any pair is O ( log n ) . j ∆ d T ( x , y ) if cut level ∆ is 4 ∆ . � � 1 → E [ d T ( x , y )] = ∑ ∆= D 32 d ( x , y ) 2 i ∑ j ∈ X ∆ j And Now For Something... Example Problem: clustering. ◮ Points: documents, dna, preferences. Image example. Completely Different. ◮ Graphs: applications to VLSI, parallel processing, image segmentation.

Image Segmentation Edge Expansion/Conductance. Spectra of the graph. M = A / d adjacency matrix, A Eigenvector: v – Mv = λ v Real, symmetric. Graph G = ( V , E ) , Claim: Any two eigenvectors with different eigenvalues are Assume regular graph of degree d . orthogonal. Edge Expansion. Proof: Eigenvectors: v , v ′ with eigenvalues λ , λ ′ . | E ( S , V − S ) | Which region? Normalized Cut: Find S , which minimizes h ( S ) = d min | S | , | V − S | , h ( G ) = min S h ( S ) v T Mv ′ = v T ( λ ′ v ′ ) = λ ′ v T v ′ v T Mv ′ = λ v T v ′ = λ v T v . Conductance. w ( S , S ) . φ ( S ) = n | E ( S , V − S ) | Distinct eigenvalues → orthonormal basis. d | S || V − S | , φ ( G ) = min S φ ( S ) w ( S ) × w ( S ) In basis: matrix is diagonal.. Note n ≥ max ( | S | , | V |−| S | ) ≥ n / 2 Ratio Cut: minimize w ( S , S ) → h ( G ) ≤ φ ( G ) ≤ 2 h ( S ) w ( S ) , λ 1 0 ... 0 0 λ 2 ... 0 M = . . . w ( S ) no more than half the weight. (Minimize cost per unit weight ... . . . . . . that is removed.) 0 0 ... λ n Either is generally useful! Action of M . Rayleigh Quotient Cheeger’s inequality. v - assigns weights to vertices. λ 1 = max x x T Mx x T x Mv replaces v i with 1 d ∑ e =( i , j ) v j . In basis, M is diagonal. Rayleigh quotient. v = 1 . λ 1 = 1. Eigenvector with highest value? λ 2 = max x ⊥ 1 x T Mx Represent x in basis, i.e., x i = x · v i . x T x . → v i = ( M 1 ) i = 1 d ∑ e ∈ ( i , j ) 1 = 1. i λ = λ x T x xMx = ∑ i λ i x 2 i ≤ λ 1 ∑ i x 2 Eigenvalue gap: µ = λ 1 − λ 2 . Claim: For a connected graph λ 2 < 1. | E ( S , V − S ) | Tight when x is first eigenvector. Recall: h ( G ) = min S , | S |≤| V | / 2 | S | Proof: Second Eigenvector: v ⊥ 1 . Max value x . Rayleigh quotient. 2 = 1 − λ 2 µ Connected → path from x valued node to lower value. � � ≤ h ( G ) ≤ 2 ( 1 − λ 2 ) = 2 µ λ 2 = max x ⊥ 1 x T Mx 2 x T x . → ∃ e = ( i , j ) , v i = x , x j < x . Hmmm.. j x ⊥ 1 ↔ ∑ i x i = 0. i ( Mv ) i ≤ 1 d ( x + x ··· + v j ) < x . Connected λ 2 < λ 1 . . . Example: 0 / 1 Indicator vector for balanced cut, S is one such vector. . h ( G ) large → well connected → λ 1 − λ 2 big. Therefore λ 2 < 1. x ≤ x Rayleigh quotient is | E ( S , S ) | Disconnected λ 2 = λ 1 . = h ( S ) . | S | h ( G ) small → λ 1 − λ 2 small. Claim: Connected if λ 2 < 1. Rayleigh quotient is less than h ( S ) for any balanced cut S . Proof: Assign + 1 to vertices in one component, − δ to rest. x i = ( Mx i ) = ⇒ eigenvector with λ = 1. Find balanced cut from vector that acheives Rayleigh quotient? Choose δ to make ∑ i x i = 0, i.e., x ⊥ 1 .

Easy side of Cheeger. See you ... Small cut → small eigenvalue gap. µ 2 ≤ h ( G ) Cut S . i ∈ S : v i = | V |−| S | , i ∈ Sv i = −| S | . ∑ i v i = | S | ( | V |−| S | ) −| S | ( | V |−| S | ) = 0 → v ⊥ 1 . Thursday. v T v = | S | ( | V |−| S | ) 2 + | S | 2 ( | V |−| S | ) = | S | ( | V |−| S | )( | V | ) . v T Mv = 1 d ∑ e =( i , j ) x i x j . Same side endpoints: like v T v . Different side endpoints: −| S | ( | V |−| S | ) v T Mv = v T v − ( 2 | E ( S , S ) || S | ( | V |−| S | ) v T Mv v T v = 1 − 2 | E ( S , S ) | | S | λ 2 ≥ 1 − 2 h ( S ) → h ( G ) ≥ 1 − λ 2 2

Recommend

More recommend