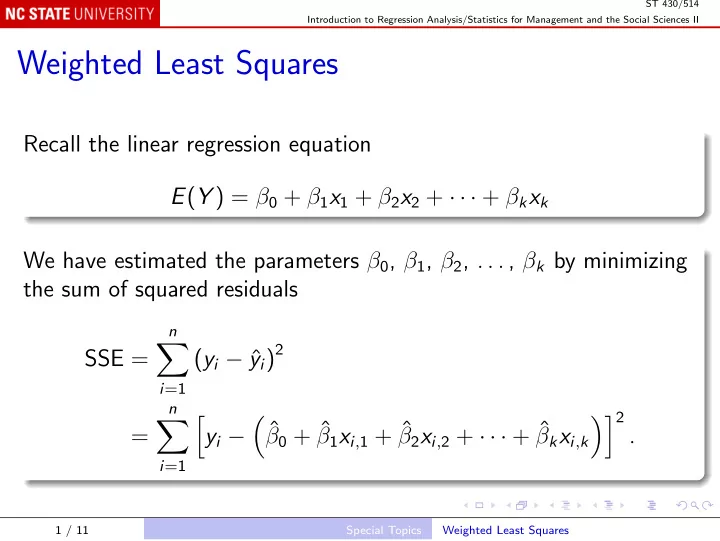

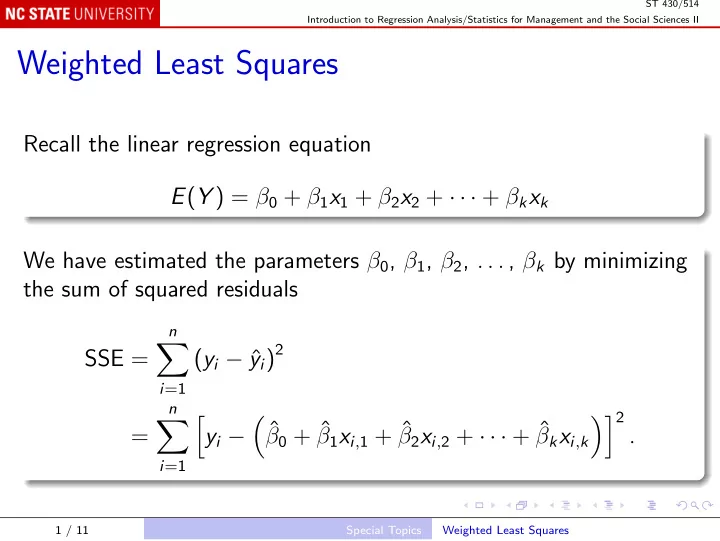

ST 430/514 Introduction to Regression Analysis/Statistics for Management and the Social Sciences II

Special Topics: Weighted Least Squares

Recall the linear regression equation

$$E(Y) = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_k x_k$$

We have estimated the parameters $\beta_0, \beta_1, \beta_2, \dots, \beta_k$ by minimizing the sum of squared residuals

$$\mathrm{SSE} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = \sum_{i=1}^{n} \left[ y_i - \left( \hat{\beta}_0 + \hat{\beta}_1 x_{i,1} + \hat{\beta}_2 x_{i,2} + \dots + \hat{\beta}_k x_{i,k} \right) \right]^2 .$$

Sometimes we want to give some observations more weight than others. We achieve this by minimizing a weighted sum of squares:

$$\mathrm{WSSE} = \sum_{i=1}^{n} w_i (y_i - \hat{y}_i)^2 = \sum_{i=1}^{n} w_i \left[ y_i - \left( \hat{\beta}_0 + \hat{\beta}_1 x_{i,1} + \hat{\beta}_2 x_{i,2} + \dots + \hat{\beta}_k x_{i,k} \right) \right]^2$$

The resulting $\hat{\beta}$s are called weighted least squares (WLS) estimates, and the WLS residuals are $\sqrt{w_i}\,(y_i - \hat{y}_i)$.
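One way to check the definition: minimizing WSSE gives the same estimates as ordinary least squares applied after multiplying the response and every column of the design matrix, including the intercept column, by $\sqrt{w_i}$. The sketch below illustrates this on simulated data; the data-generating model, the weights, and all variable names are assumptions chosen for illustration and are not part of the course example.

```r
set.seed(1)
n <- 50
x <- runif(n, 1, 10)
y <- 2 + 3 * x + rnorm(n, sd = x)   # assumed: error SD grows with x
w <- 1 / x^2                        # assumed weights

# WLS through the weights argument of lm()
fit_wls <- lm(y ~ x, weights = w)

# The same fit as OLS on rescaled data; "0 +" drops the automatic
# intercept because the intercept column must be rescaled as well
sw <- sqrt(w)
fit_ols <- lm(I(sw * y) ~ 0 + sw + I(sw * x))

coef_wls <- unname(coef(fit_wls))
coef_ols <- unname(coef(fit_ols))

# WLS residuals sqrt(w_i) * (y_i - yhat_i) and the minimized WSSE
wls_resid <- sw * (y - fitted(fit_wls))
wsse <- sum(w * (y - fitted(fit_wls))^2)
```

Comparing `coef_wls` with `coef_ols` should show the two fits agree up to rounding, and `sum(wls_resid^2)` should reproduce `wsse`.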

Why use weights?

Suppose that the variance is not constant:

$$\operatorname{var}(Y_i) = \sigma_i^2 .$$

If we use weights

$$w_i \propto \frac{1}{\sigma_i^2},$$

the WLS estimates have smaller standard errors than the ordinary least squares (OLS) estimates. That is, the OLS estimates are inefficient, relative to the WLS estimates.

In fact, using weights proportional to $1/\sigma_i^2$ is optimal: no other weights give smaller standard errors.

When you specify weights, regression software calculates standard errors on the assumption that the weights are proportional to $1/\sigma_i^2$.
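The efficiency claim can be illustrated with a small simulation. The sketch below is only an illustration under assumed settings (error standard deviation proportional to x, 1000 replications, hypothetical variable names); it compares the sampling spread of the OLS and WLS slope estimates when the true variances are known.

```r
set.seed(2)
n <- 40
x <- seq(1, 10, length.out = n)
sigma_i <- 0.5 * x                  # assumed: SD proportional to x

# One simulated data set, returning the OLS and WLS slope estimates
slope_pair <- function() {
  y <- 1 + 2 * x + rnorm(n, sd = sigma_i)
  c(ols = unname(coef(lm(y ~ x))[2]),
    wls = unname(coef(lm(y ~ x, weights = 1 / sigma_i^2))[2]))
}

slopes <- replicate(1000, slope_pair())   # 2 x 1000 matrix

# Empirical standard deviations of the two slope estimators
sd_ols <- sd(slopes["ols", ])
sd_wls <- sd(slopes["wls", ])
```

Under these assumptions `sd_wls` should come out noticeably smaller than `sd_ols`, while both estimators remain centered near the true slope of 2.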

How to choose the weights

If you have many replicates for each unique combination of $x$s, use $s_i^2$ to estimate $\operatorname{var}(Y \mid x_i)$.

Often you will not have enough replicates to give good variance estimates. The text suggests grouping observations that are “nearest neighbors”.

Alternatively you can use the regression diagnostic plots.
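When replicates are available, the weights can be computed directly from the within-group sample variances. The following is a minimal sketch on simulated data, assuming four distinct x values with 30 replicates each; the design and the variable names are hypothetical.

```r
set.seed(3)
x_levels <- c(1, 2, 4, 8)
reps <- 30                                   # assumed: many replicates per level
x <- rep(x_levels, each = reps)
y <- 5 + 2 * x + rnorm(length(x), sd = x)    # assumed: SD equal to x

# Sample variance s_i^2 within each group of replicates
s2 <- tapply(y, x, var)

# ave() repeats each group's variance for every observation in that group,
# so each observation gets the weight 1 / s_i^2 of its own group
w <- 1 / ave(y, x, FUN = var)

fit <- lm(y ~ x, weights = w)
```

Printing `s2` shows one variance estimate per distinct x value; with few replicates these estimates become noisy, which is the situation the grouping and diagnostic-plot approaches are meant to address.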

Example: Florida road contracts.

    dot11 <- read.table("Text/Exercises&Examples/DOT11.txt", header = TRUE)
    l1 <- lm(BIDPRICE ~ LENGTH, dot11)
    summary(l1)
    plot(l1)

The first plot uses unweighted residuals $y_i - \hat{y}_i$, but the others use weighted residuals.

Also recall that they are “Standardized residuals”

$$z_i^* = \frac{y_i - \hat{y}_i}{s \sqrt{1 - h_i}},$$

which are called Studentized residuals in the text.

With weights, the standardized residuals are

$$z_i^* = \frac{\sqrt{w_i}\,(y_i - \hat{y}_i)}{s \sqrt{1 - h_i}} .$$
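The weighted formula can be checked against R's `rstandard()`. The sketch below uses simulated data with assumed weights `1/x`; the variable names are hypothetical, and `s` and `h` are taken from `summary()` and `hatvalues()` for the weighted fit.

```r
set.seed(4)
n <- 30
x <- runif(n, 1, 10)
y <- 1 + 2 * x + rnorm(n, sd = sqrt(x))   # assumed: variance proportional to x
w <- 1 / x                                # assumed weights
fit <- lm(y ~ x, weights = w)

s <- summary(fit)$sigma   # residual standard error of the weighted fit
h <- hatvalues(fit)       # leverages h_i of the weighted fit

# Standardized residuals computed from the formula with weights
z_manual <- sqrt(w) * (y - fitted(fit)) / (s * sqrt(1 - h))

# Standardized residuals as computed by R
z_r <- rstandard(fit)
```

The two vectors `z_manual` and `z_r` should agree up to rounding.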

Note that the “Scale-Location” plot shows an increasing trend.

Try weights that are proportional to powers of x = LENGTH:

    # Try power -1:
    plot(lm(BIDPRICE ~ LENGTH, dot11, weights = 1/LENGTH))
    # Still slightly increasing; try power -2:
    plot(lm(BIDPRICE ~ LENGTH, dot11, weights = 1/LENGTH^2))
    # Now slightly decreasing.

summary() shows that the fitted equations are all very similar. weights = 1/LENGTH gives the smallest standard errors.

Often the weights are determined by fitted values, not by the independent variable:

    # Try power -1:
    plot(lm(BIDPRICE ~ LENGTH, dot11, weights = 1/fitted(l1)))
    # About flat; but try power -2:
    plot(lm(BIDPRICE ~ LENGTH, dot11, weights = 1/fitted(l1)^2))
    # Now definitely decreasing.

summary() shows that the fitted equations are again very similar. weights = 1/fitted(l1) gives the smallest standard errors.
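Because the DOT11 data are not reproduced here, the two-step procedure (a preliminary OLS fit, then WLS with weights built from its fitted values) is sketched below on simulated data. The data-generating model, with error standard deviation proportional to the mean, and the choice of power -2 are assumptions for illustration only.

```r
set.seed(6)
n <- 60
x <- runif(n, 1, 10)
mu <- 10 + 5 * x
y <- mu + rnorm(n, sd = 0.1 * mu)   # assumed: SD proportional to the mean

# Step 1: preliminary OLS fit
fit_ols <- lm(y ~ x)

# Weights based on fitted values only make sense if they are positive
stopifnot(all(fitted(fit_ols) > 0))

# Step 2: WLS with weights from the preliminary fit (power -2)
fit_wls <- lm(y ~ x, weights = 1 / fitted(fit_ols)^2)

# Coefficient standard errors from each fit, for side-by-side comparison
se_ols <- coef(summary(fit_ols))[, "Std. Error"]
se_wls <- coef(summary(fit_wls))[, "Std. Error"]
```

Calling `plot(fit_wls, which = 3)` draws the “Scale-Location” plot for the weighted fit, which can be inspected for a remaining trend in the same way as in the example above.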

Note

Standard errors are computed as if the weights are known constants.

In the last case, we used weights based on a preliminary OLS fit.

Theory shows that in large samples the standard errors are also valid with estimated weights.

Note

When you specify weights $w_i$, lm() fits the model

$$\sigma_i^2 = \frac{\sigma^2}{w_i}$$

and the “Residual standard error” $s$ is an estimate of $\sigma$:

$$s^2 = \frac{\sum_{i=1}^{n} w_i (y_i - \hat{y}_i)^2}{n - p},$$

where $p = k + 1$ is the number of estimated coefficients.

If you change the weights, the meaning of $\sigma$ (and $s$) changes. You cannot compare the residual standard errors for different weighting schemes (cf. page 488, foot).
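The dependence of $s$ on the scale of the weights can be seen by multiplying all weights by a constant. The sketch below uses simulated data and hypothetical names; the factor 100 is an arbitrary choice for illustration.

```r
set.seed(5)
n <- 40
x <- runif(n, 1, 10)
y <- 3 + 1.5 * x + rnorm(n, sd = x)   # assumed: SD proportional to x
w <- 1 / x^2

fit1 <- lm(y ~ x, weights = w)
fit2 <- lm(y ~ x, weights = 100 * w)  # same weights up to a constant factor

# s^2 computed from the formula, using unweighted residuals y_i - yhat_i
p <- length(coef(fit1))
s_sq_manual <- sum(w * residuals(fit1)^2) / (n - p)

# Residual standard errors reported by R for the two fits
s_fit1 <- summary(fit1)$sigma
s_fit2 <- summary(fit2)$sigma

# Coefficient standard errors for the two fits
se1 <- coef(summary(fit1))[, "Std. Error"]
se2 <- coef(summary(fit2))[, "Std. Error"]
```

Here the coefficients and their standard errors are the same in both fits, but `s_fit2` is 10 times `s_fit1`, so the residual standard error by itself says nothing about which weighting scheme fits better.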
