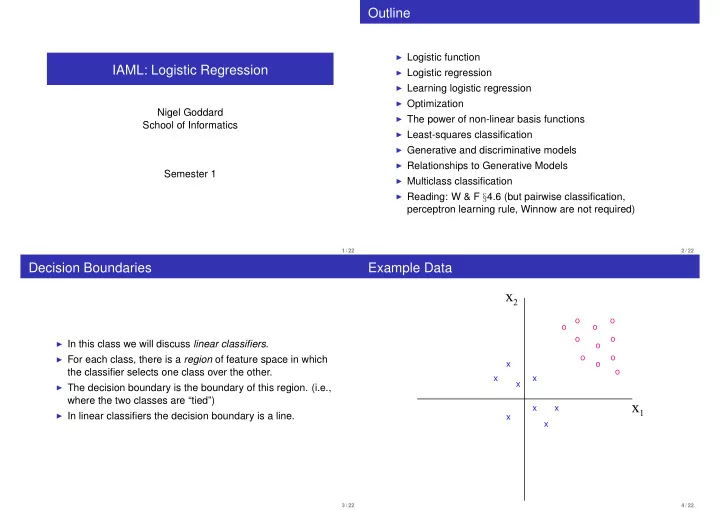

IAML: Logistic Regression
Nigel Goddard
School of Informatics
Semester 1

Outline
◮ Logistic function
◮ Logistic regression
◮ Learning logistic regression
◮ Optimization
◮ The power of non-linear basis functions
◮ Least-squares classification
◮ Generative and discriminative models
◮ Relationships to Generative Models
◮ Multiclass classification
◮ Reading: W & F § 4.6 (but pairwise classification, perceptron learning rule, Winnow are not required)

Decision Boundaries
◮ In this class we will discuss linear classifiers.
◮ For each class, there is a region of feature space in which the classifier selects one class over the other.
◮ The decision boundary is the boundary of this region (i.e., where the two classes are “tied”).
◮ In linear classifiers the decision boundary is a line.

Example Data
[Figure: scatter plot of two classes, marked o and x, in the $(x_1, x_2)$ plane.]

Linear Classifiers
◮ In a two-class linear classifier, we learn a function
  $F(x, w) = w^\top x + w_0$
  that represents how aligned the instance is with $y = 1$.
◮ $w$ are parameters of the classifier that we learn from data.
◮ To do classification of an input $x$: $x \mapsto (y = 1)$ if $F(x, w) > 0$

A Geometric View
[Figure: the two classes (o and x) in the $(x_1, x_2)$ plane, with the weight vector $w$ drawn normal to the decision boundary.]

Explanation of Geometric View
◮ The decision boundary in this case is $\{ x \mid w^\top x + w_0 = 0 \}$
◮ $w$ is a normal vector to this surface
◮ (Remember how lines can be written in terms of their normal vector.)
◮ Notice that in more than 2 dimensions, this boundary will be a hyperplane.

Two Class Discrimination
◮ For now consider a two class case: $y \in \{0, 1\}$.
◮ From now on we’ll write $x = (1, x_1, x_2, \ldots, x_d)$ and $w = (w_0, w_1, \ldots, w_d)$.
◮ We will want a linear, probabilistic model. We could try $P(y = 1 \mid x) = w^\top x$. But this is stupid: $w^\top x$ can be any real number, so it is not a valid probability.
◮ Instead what we will do is $P(y = 1 \mid x) = f(w^\top x)$
◮ $f$ must be between 0 and 1. It will squash the real line into $[0, 1]$
◮ Furthermore the fact that probabilities sum to one means $P(y = 0 \mid x) = 1 - f(w^\top x)$
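The decision rule for a linear classifier, $x \mapsto (y = 1)$ if $F(x, w) > 0$, can be sketched in a few lines of Python. The weights and test points below are made up for illustration and are not taken from the slides. The sketch also shows why the raw score cannot serve as a probability.

```python
def linear_score(x, w, w0):
    """F(x, w) = w^T x + w0 for plain Python lists x and w."""
    return sum(wj * xj for wj, xj in zip(w, x)) + w0


def classify(x, w, w0):
    """Return 1 if F(x, w) > 0, otherwise 0."""
    return 1 if linear_score(x, w, w0) > 0 else 0


# Hypothetical weights: the decision boundary is the line x1 + x2 - 1 = 0,
# and w = (1, 1) is normal to that line.
w, w0 = [1.0, 1.0], -1.0

for point in ([2.0, 2.0], [0.0, 0.0], [10.0, 10.0]):
    print(point, linear_score(point, w, w0), classify(point, w, w0))
```

The score grows without bound as a point moves away from the boundary, which is why it needs to be squashed before it can be read as $P(y = 1 \mid x)$.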

The logistic function
◮ We need a function that returns probabilities (i.e. stays between 0 and 1).
◮ The logistic function provides this
◮ $f(z) = \sigma(z) \equiv 1 / (1 + \exp(-z))$.
◮ As $z$ goes from $-\infty$ to $\infty$, so $f$ goes from 0 to 1, a “squashing function”
◮ It has a “sigmoid” shape (i.e. S-like shape)
[Figure: plot of $\sigma(z)$ for $z$ from $-6$ to $6$, rising from near 0 to near 1.]

Linear weights
◮ Linear weights + logistic squashing function == logistic regression.
◮ We model the class probabilities as
  $p(y = 1 \mid x) = \sigma\left( \sum_{j=0}^{d} w_j x_j \right) = \sigma(w^\top x)$
◮ $\sigma(z) = 0.5$ when $z = 0$. Hence the decision boundary is given by $w^\top x = 0$.
◮ The decision boundary is an $(M - 1)$-dimensional hyperplane for an $M$-dimensional problem.

Logistic regression
◮ For this slide write $\tilde{w} = (w_1, w_2, \ldots, w_d)$ (i.e., exclude the bias $w_0$)
◮ The bias parameter $w_0$ shifts the position of the hyperplane, but does not alter the angle
◮ The direction of the vector $\tilde{w}$ affects the angle of the hyperplane. The hyperplane is perpendicular to $\tilde{w}$
◮ The magnitude of the vector $\tilde{w}$ affects how certain the classifications are
◮ For small $\tilde{w}$ most of the probabilities within the region of the decision boundary will be near to 0.5.
◮ For large $\tilde{w}$ probabilities in the same region will be close to 1 or 0.

Learning Logistic Regression
◮ Want to set the parameters $w$ using training data.
◮ As before:
  ◮ Write out the model and hence the likelihood
  ◮ Find the derivatives of the log likelihood w.r.t. the parameters.
  ◮ Adjust the parameters to maximize the log likelihood.
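The effect of the magnitude of $\tilde{w}$ can be checked numerically. The following is a minimal sketch, assuming a hypothetical boundary $x_1 + x_2 - 1 = 0$ and a test point chosen for illustration. Scaling $\tilde{w}$ and $w_0$ by the same factor leaves the boundary where it is but changes how confident the model is near it.

```python
import math


def sigmoid(z):
    """Logistic function 1 / (1 + exp(-z)), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def prob_y1(x, w_tilde, w0):
    """p(y = 1 | x) = sigma(w_tilde^T x + w0)."""
    z = sum(wj * xj for wj, xj in zip(w_tilde, x)) + w0
    return sigmoid(z)


# A point just on the positive side of the hypothetical line x1 + x2 - 1 = 0.
x = [0.6, 0.5]

for scale in (0.1, 1.0, 10.0, 100.0):
    # Same direction and same boundary each time; only the magnitude changes.
    w_tilde = [scale, scale]
    w0 = -scale
    print(scale, prob_y1(x, w_tilde, w0))
```

With a small scale the printed probability stays close to 0.5, and with a large scale it approaches 1, even though the point and the boundary have not moved.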

◮ Assume data is independent and identically distributed.
◮ Call the data set $D = \{ (x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n) \}$
◮ The likelihood is
  $p(D \mid w) = \prod_{i=1}^{n} p(y = y_i \mid x_i, w) = \prod_{i=1}^{n} p(y = 1 \mid x_i, w)^{y_i} \, (1 - p(y = 1 \mid x_i, w))^{1 - y_i}$
◮ Hence the log likelihood $L(w) = \log p(D \mid w)$ is given by
  $L(w) = \sum_{i=1}^{n} y_i \log \sigma(w^\top x_i) + (1 - y_i) \log (1 - \sigma(w^\top x_i))$

◮ It turns out that the likelihood has a unique optimum (given sufficient training examples): the negative log likelihood is convex.
◮ How to maximize? Take gradient
  $\frac{\partial L}{\partial w_j} = \sum_{i=1}^{n} (y_i - \sigma(w^\top x_i)) \, x_{ij}$
◮ (Aside: something similar holds for linear regression
  $\frac{\partial E}{\partial w_j} = \sum_{i=1}^{n} (w^\top \phi(x_i) - y_i) \, x_{ij}$
  where $E$ is squared error.)
◮ Unfortunately, you cannot maximize $L(w)$ explicitly as for linear regression. You need to use a numerical optimisation method, see later.

Fitting this into the general structure for learning algorithms:
◮ Define the task: classification, discriminative
◮ Decide on the model structure: logistic regression model
◮ Decide on the score function: log likelihood
◮ Decide on optimization/search method to optimize the score function: numerical optimization routine. Note we have several choices here (stochastic gradient descent, conjugate gradient, BFGS).

XOR and Linear Separability
◮ A problem is linearly separable if we can find weights so that
  ◮ $\tilde{w}^\top x + w_0 > 0$ for all positive cases (where $y = 1$), and
  ◮ $\tilde{w}^\top x + w_0 \le 0$ for all negative cases (where $y = 0$)
◮ XOR
[Figure: the four XOR points in the plane.]
◮ XOR becomes linearly separable if we apply a non-linear transformation $\phi(x)$ of the input. What is one?
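The log likelihood and its gradient can be turned into a small batch gradient-ascent routine, and XOR makes a convenient test case. This is a sketch under stated assumptions rather than the course's method: the step size, the iteration count, and the product feature $x_1 x_2$ used as $\phi$ are illustrative choices, and the slides leave the actual optimizer for later.

```python
import math


def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def log_likelihood(w, X, y):
    """L(w) = sum_i y_i log s_i + (1 - y_i) log(1 - s_i), s_i = sigma(w^T x_i)."""
    total = 0.0
    for xi, yi in zip(X, y):
        s = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)))
        s = min(max(s, 1e-12), 1.0 - 1e-12)  # guard against log(0)
        total += yi * math.log(s) + (1 - yi) * math.log(1.0 - s)
    return total


def gradient(w, X, y):
    """dL/dw_j = sum_i (y_i - sigma(w^T x_i)) x_ij."""
    grad = [0.0] * len(w)
    for xi, yi in zip(X, y):
        err = yi - sigmoid(sum(wj * xj for wj, xj in zip(w, xi)))
        for j, xij in enumerate(xi):
            grad[j] += err * xij
    return grad


def fit(X, y, step=0.5, iters=2000):
    """Plain batch gradient ascent on L(w), starting from w = 0."""
    w = [0.0] * len(X[0])
    for _ in range(iters):
        g = gradient(w, X, y)
        w = [wj + step * gj for wj, gj in zip(w, g)]
    return w


def predict(w, x):
    """Predict y = 1 when w^T x > 0, i.e. when sigma(w^T x) > 0.5."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) > 0 else 0


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 1, 1, 0]  # XOR labels

# Each row starts with the constant 1 so that w[0] plays the role of w0.
X_raw = [[1.0, a, b] for a, b in inputs]
# Illustrative transformation phi(x) = (x1, x2, x1 * x2).
X_phi = [[1.0, a, b, a * b] for a, b in inputs]

w_raw = fit(X_raw, y)
w_phi = fit(X_phi, y)

correct_raw = sum(predict(w_raw, x) == t for x, t in zip(X_raw, y))
correct_phi = sum(predict(w_phi, x) == t for x, t in zip(X_phi, y))
print("raw inputs:", correct_raw, "of 4 correct")
print("with x1*x2:", correct_phi, "of 4 correct")
```

No linear boundary in the raw inputs can label all four XOR points correctly, whereas adding the product feature makes the classes separable. Because the transformed data are separable, the weights keep growing as the iterations continue, which is one reason practical optimizers are usually paired with a stopping rule.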

The power of non-linear basis functions
[Figure: two panels. Left: the data in the original $(x_1, x_2)$ space. Right: the same data in the $(\phi_1, \phi_2)$ space. Using two Gaussian basis functions $\phi_1(x)$ and $\phi_2(x)$. Figure credit: Chris Bishop, PRML]
As for linear regression, we can transform the input space if we want: $x \to \phi(x)$

Generative and Discriminative Models
◮ Notice that we have done something very different here than with naive Bayes.
◮ Naive Bayes: Modelled how a class “generated” the feature vector $p(x \mid y)$. Then could classify using $p(y \mid x) \propto p(x \mid y) \, p(y)$. This is called a generative approach.
◮ Logistic regression: Model $p(y \mid x)$ directly. This is a discriminative approach.
◮ Discriminative advantage: Why spend effort modelling $p(x)$? Seems a waste, we’re always given it as input.
◮ Generative advantage: Can be good with missing data (remember how naive Bayes handles missing data). Also good for detecting outliers. Or, sometimes you really do want to generate the input.

Generative Classifiers can be Linear Too
Two scenarios where naive Bayes gives you a linear classifier.
1. Gaussian data with equal covariance. If $p(x \mid y = 1) \sim N(\mu_1, \Sigma)$ and $p(x \mid y = 0) \sim N(\mu_2, \Sigma)$ then
   $p(y = 1 \mid x) = \sigma(\tilde{w}^\top x + w_0)$
   for some $(w_0, \tilde{w})$ that depends on $\mu_1$, $\mu_2$, $\Sigma$ and the class priors
2. Binary data. Let each component $x_j$ be a Bernoulli variable, i.e. $x_j \in \{0, 1\}$. Then a Naïve Bayes classifier has the form
   $p(y = 1 \mid x) = \sigma(\tilde{w}^\top x + w_0)$
Exercise for keeners: prove these two results

Multiclass classification
◮ Create a different weight vector $w_k$ for each class, to classify into $k$ and not-$k$.
◮ Then use the “softmax” function
  $p(y = k \mid x) = \frac{\exp(w_k^\top x)}{\sum_{j=1}^{C} \exp(w_j^\top x)}$
◮ Note that $0 \le p(y = k \mid x) \le 1$ and $\sum_{j=1}^{C} p(y = j \mid x) = 1$
◮ This is the natural generalization of logistic regression to more than 2 classes.
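The softmax function for multiclass classification can be sketched as follows. The weight vectors and the input are hypothetical values chosen for illustration, and subtracting the largest score before exponentiating is a common numerical safeguard that is not part of the slides; it does not change the resulting probabilities.

```python
import math


def softmax_probs(x, weights):
    """p(y = k | x) = exp(w_k^T x) / sum_j exp(w_j^T x), for every class k."""
    scores = [sum(wj * xj for wj, xj in zip(w_k, x)) for w_k in weights]
    m = max(scores)  # shifting all scores by a constant leaves the ratios unchanged
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical weight vectors for C = 3 classes; x starts with the constant 1.
weights = [
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.5, -1.0, -1.0],
]
x = [1.0, 2.0, 0.5]

probs = softmax_probs(x, weights)
print(probs, sum(probs))
```

With two classes and one weight vector fixed at zero, the first softmax probability equals $\sigma(w^\top x)$, which is one way to see that softmax generalizes the two-class model.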

Least-squares classification
◮ Logistic regression is more complicated algorithmically than linear regression
◮ Why not just use linear regression with 0/1 targets?
[Figure: two panels showing decision boundaries on two-class data. Green: logistic regression; magenta: least-squares regression. Figure credit: Chris Bishop, PRML]

Summary
◮ The logistic function, logistic regression
◮ Hyperplane decision boundary
◮ Linear separability
◮ We still need to know how to compute the maximum of the log likelihood. Coming soon!
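One commonly cited answer to "why not just use linear regression with 0/1 targets?" is that squared error penalizes points that are far from the boundary even when they lie on the correct side. The sketch below illustrates this in one dimension with made-up data; it assumes a 0.5 threshold on the fitted line and is not a reproduction of the figure credited to Bishop.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y ~ a * x + b in one dimension."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, my - a * mx


def boundary(a, b):
    """Input value where the fitted line crosses the 0.5 threshold."""
    return (0.5 - b) / a


# Hypothetical data: class 0 on the left, class 1 on the right.
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]
a, b = fit_line(xs, ys)
print("boundary without extra points:", boundary(a, b))

# Add class-1 points far from the boundary, all on the correct side.
xs_far = xs + [20.0, 21.0, 22.0]
ys_far = ys + [1, 1, 1]
a_far, b_far = fit_line(xs_far, ys_far)
print("boundary with extra points:", boundary(a_far, b_far))
print("least-squares output at x = 1:", a_far * 1.0 + b_far)
```

The extra points are easy to classify, yet they drag the least-squares boundary to the right until the class-1 point at $x = 1$ falls on the wrong side. The logistic log likelihood gives confidently correct points almost no influence, so it does not suffer from this effect in the same way.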
