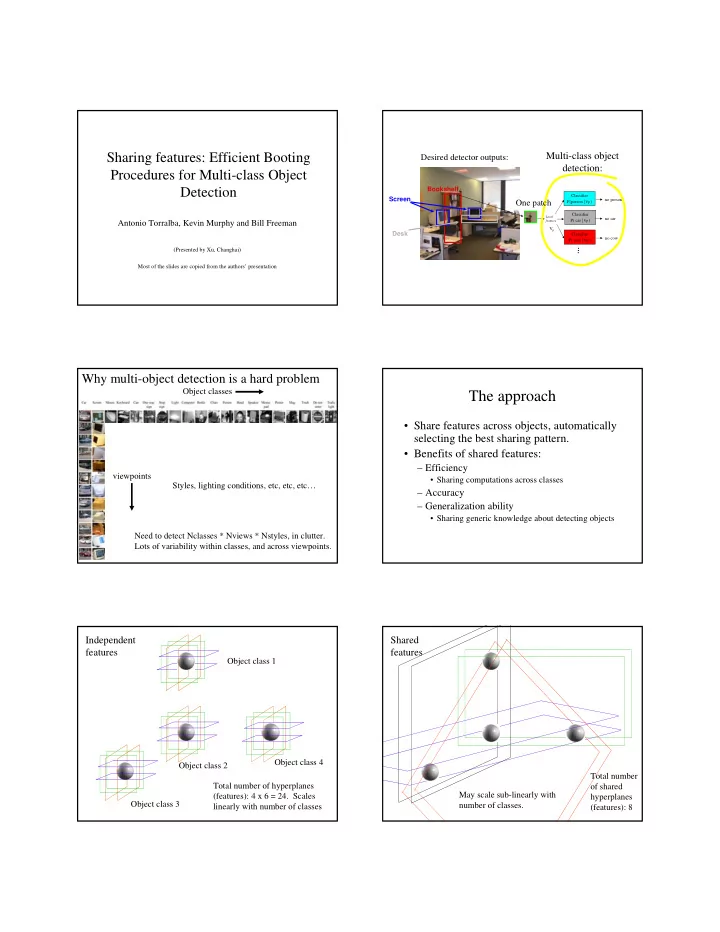

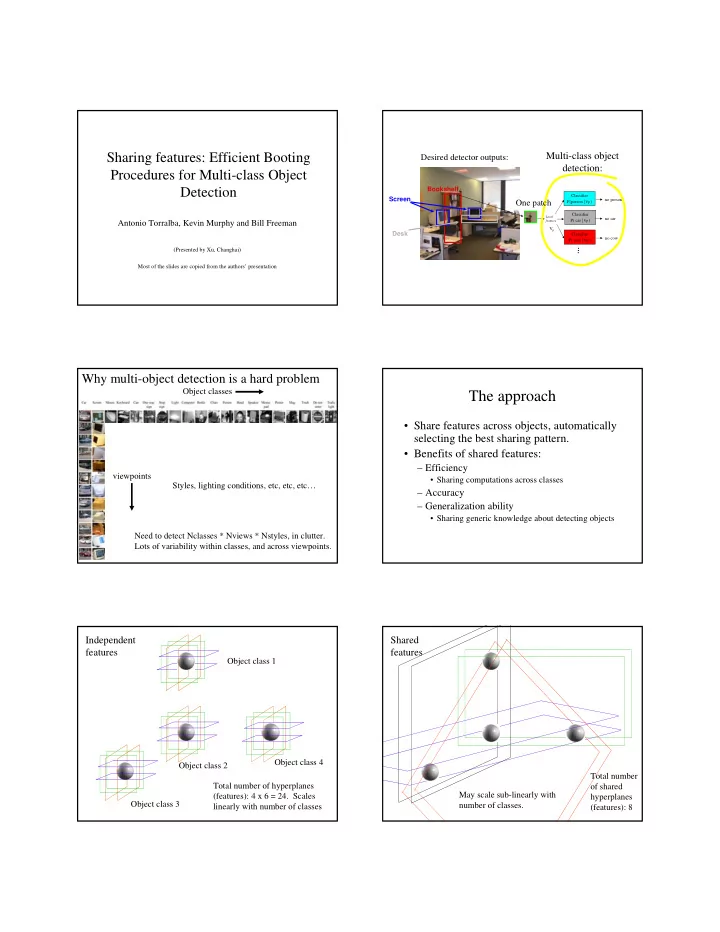

Sharing features: Efficient Booting Multi-class object Desired detector outputs: detection: Procedures for Multi-class Object Detection Bookshelf Classifier Screen no person One patch P(person | v p ) Classifier Local no car P( car | v p ) Antonio Torralba, Kevin Murphy and Bill Freeman features V p Desk Classifier no cow P( cow | v p ) (Presented by Xu, Changhai) … Most of the slides are copied from the authors’ presentation Why multi-object detection is a hard problem Object classes The approach • Share features across objects, automatically selecting the best sharing pattern. • Benefits of shared features: – Efficiency viewpoints • Sharing computations across classes Styles, lighting conditions, etc, etc, etc… – Accuracy – Generalization ability • Sharing generic knowledge about detecting objects Need to detect Nclasses * Nviews * Nstyles, in clutter. Lots of variability within classes, and across viewpoints. Independent Shared features features Object class 1 Object class 4 Object class 2 Total number Total number of hyperplanes of shared May scale sub-linearly with (features): 4 x 6 = 24. Scales hyperplanes Object class 3 number of classes. linearly with number of classes (features): 8

Additive models for classification Feature sharing in additive models classes +1/-1 classification feature responses Multi-classBoosting Weak learners are shared We use the exponential multi-class cost function classes At each boosting round, we add a perturbation or “weak learner” which is shared across some classes: membership classifier cost in class c, output for function +1/-1 class c Multi-class Boosting Specialize weak learners to decision stumps a+b h m (v,c) θ b v f Feature output, v Weight squared weight squared error error over training data

Joint Boosting: select sharing pattern and Find weak learner parameters analytically weak learner to minimize cost. Conceptually, a+b for all features: h m (v,c) θ v f b for all class sharing patterns: Feature output, v find the optimal decision stump, h m (v,c) end Given a sharing pattern, the end decision stump parameters are obtained analytically select the h m (v,c) and sharing pattern that minimizes the weighted squared error J wse for this boosting round. Effect of pattern of feature sharing on number of Approximate best sharing features required (synthetic example) To avoid exploring all 2 C –1 possible sharing patterns, use best-first search: S = [] % Grow a list of candidate sharing patterns, S. while length S < N c for each object class, c i , not in S % consider adding c i to the list of shared classes, S for all features, h m evaluate the cost J of h m shared over [S, c i ] end end S = [S, c min_cost ] end Pick the sharing pattern S and feature h m which gave the minimum multi-class cost J. Now, apply this to images. Effect of pattern of feature sharing on number of Image features (weak learners) features required (synthetic example) Location of that patch within the 32x32 object 32x32 training image of an object 12x12 patch Feature output g f (x) Mean = 0 Energy = 1 w f (x) (best first search heuristic) Binary mask

The candidate features The candidate features position position template template Dictionary of 2000 candidate patches and position masks, randomly sampled from the training images How the features were shared across objects Example shared feature (weak classifier) (features sorted left-to-right from generic to specific) Response histograms for background (blue) and class members (red) At each round of running joint boosting on training set we get a feature and a sharing pattern. Performance improvement over training Performance evaluation Correct detection rate Area under ROC (shown is .9) Significant benefit to sharing features using joint boosting. False alarm rate

70 features, 20 training examples (left) 70 features, 20 training examples (left) 15 features, 20 training examples (mid) Shared features Non-shared features Shared features Non-shared features 70 features, 20 training examples (left) 15 features, 20 training examples (middle) Scaling 15 features, 2 training examples (right) Joint Boosting shows sub-linear scaling of features with objects (for area under ROC = 0.9). Results averaged over 8 training sets, and different combinations of objects. Error bars show variability. Shared features Non-shared features Multi-view object detection Generic vs. specific features train for object and orientation Parts derived from training Parts derived from a joint classifier with 20 more training a binary objects. classifier. Sharing features is a natural approach to view-invariant object detection. View invariant View features specific features In both cases ~100% detection rate with 0 false alarms

Multi-view object detection Summary • Feature sharing essential for scaling up object detection to many objects and viewpoints. • Joint boosting generalizes boosting. • The shared features – generalize better, – allow learning from fewer examples, – with fewer features. • A novel class will lead to re-training of previous classes

Recommend

More recommend