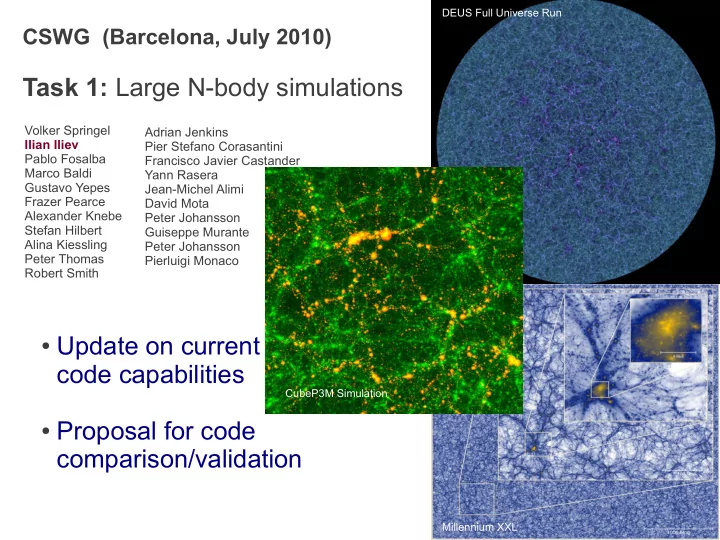

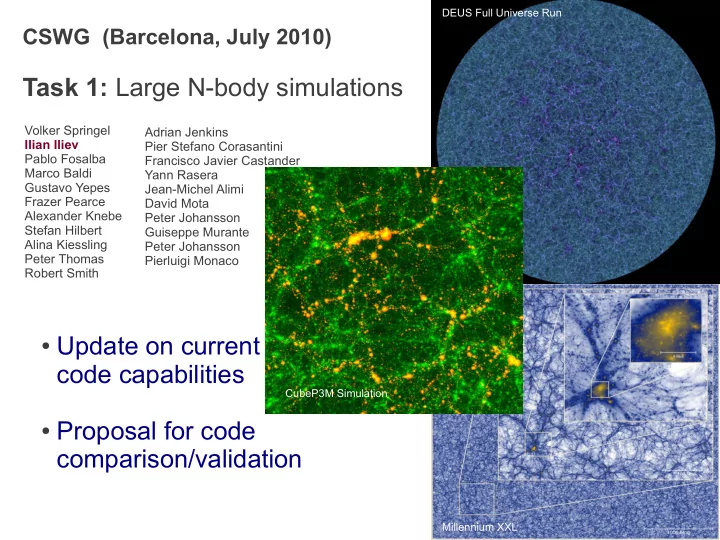

DEUS Full Universe Run CSWG (Barcelona, July 2010) Task 1: Large N-body simulations Volker Springel Adrian Jenkins Ilian Iliev Pier Stefano Corasantini Pablo Fosalba Francisco Javier Castander Marco Baldi Yann Rasera Gustavo Yepes Jean-Michel Alimi Frazer Pearce David Mota Alexander Knebe Peter Johansson Stefan Hilbert Guiseppe Murante Alina Kiessling Peter Johansson Peter Thomas Pierluigi Monaco Robert Smith ● Update on current code capabilities CubeP3M Simulation ● Proposal for code comparison/validation Millennium XXL

CUBEP3M (Ilian Iliev, Ue-Li Pen, Joachim Harnois-Deraps, et al.) Gravity solver characteristics: Particle-mesh particle-particles scheme. Softening – no force at small distances (not Plummer), free parameter - typically 1/20 of fine PM mesh, and spatially uniform. Global timestep. Largest run with this code thus far: JUBILEE: 6000^3 = 216 billion, L=6 Gpc/h, 8,000 cores High-z runs: 5488^3=168 billion, L=20, 425 Mpc/h, 21,976 cores Memory need: ~130 bytes per particle. Small dependence on simulation details. Scalability: Code has a hybrid parallelization (MPI+OpenMP). Excellent weak scaling to 22,000 cores at least. Power of 2 needed for the number of MPI tasks. Some limitations occur because sub- regions need to be an integer number cubed. The use of FFTW for the Fourier transforms restricts at present the number of MPI-tasks that can be used for very large runs. Trillion- particle runs possible on current machines. Postprocessing / auxiliary codes: Can produce projected density fields on the fly, and spherical overdensity based group catalogues. FOF, AHF and SUBFIND as post-processing. The code has its own IC generator running with the same MPI configuration as the main code. It reads a linear power spectrum table and uses Zeldovich approx. Has fNL and gNL non-gaussianity option.

RAMSES-DEUS (Alimi, Teyssier, Wautelet, Corosaniti, et al.) Gravity solver characteristics: AMR Poisson solvers, based originally on the RAMSES code. Local spatial resolution given by smallest cells. Largest run with this code thus far: DEUS FUR: 8192^3 = 550 billion, L=21 Gpc/h, 4752 nodes/16 cores (76032 cores) Memory need: ~200 bytes per particle in single precision, about ~400 in double precision. Improvements are (reasonably easily) possible here if needed. Scalability: Code is quite fast and shows very good weak scaling (75% at 38016 MPI tasks). Code maxes out available I/O bandwidth with parallel I/O, but this can still become a bottleneck for larger runs. Postprocessing / auxiliary codes: Sophisticated light-cone outputs Group finding and power spectrum estimation not yet on the fly, but parallelized AMA-DEUS code framework, including: ● MPGRAFIC-DEUS (IC code) ● PROF-DEUS (postprocessing)

L-GADGET3 (Springel, Angulo) Gravity solver characteristics: TreePM solvers, based originally on the GADGET3 code. The gravitational softening can be freely chosen and is spatially homogeneous. Individual and adaptive timesteps. Largest run with this code thus far: Millennium-XXL: 6720^3 = 303 billion, L=3 Gpc/h, 1536 nodes/8 cores (12288 cores) Memory need: ~100 bytes per particle in single precision. Further improvements seem hardly possible. Scalability: Code shows quite good weak scaling over the range tested thus far. The use of FFTW for the Fourier transforms restricts the number of MPI-tasks that can be used for very large runs. This can be alleviated to some extent with MPI/OpenMP/Pthreads hybrid parallelization which is in the code. Postprocessing / auxiliary codes: Outputs of lensing maps and gravitational potential fields possible. Group finding, substructure finding, power spectrum, and two-point correlation function done on the fly and in parallel A parallel IC code (N-GenIC) is available Code for building larger merger trees out of the substructure catalogues is available

But how reliable and accurate are our codes? Heitmann et al. (2007) SOME RESULTS FROM THE LOS ALAMOS CODE COMPARISON PROJECT Substantial code differences in the halo mass function for the same initial conditions.

The codes also show interesting differences in the number of halos as a function of density and in the dark matter power spectrum Heitmann et al. (2007)

To assess the accuracy of the current codes, we need a ` standardized N-body test run for EUCLID Size should be non-trivial to allow inferences of the expected computational cost of challenging runs On the other hand, test run should not be too big to allow many repetitions if needed Mass resolution should be in an interesting and relevant range for EUCLID The postprocessing pipelines of the codes should also be tested/compared as part of the test Goal: Establish the reliability and system uncertainty of these three N-body codes. (similar to the “Los Alamos Code Comparison Project”, Heitmann et al. 2007) Identify suitable code parameter settings (like force accuracy, grid resolution, time stepping, etc.) for sufficient accuracy and high computational efficiency.

Suggesstion for a comparison run ( up for discussion ) ` ● N = 1024 3 ● L = 500 Mpc / h ● WMAP7 cosmology Omega_m = 0.25, Omega_L = 0.75, sigma_8 = 0.9, h = 0.7, n = 1, zstart = 100 ● All codes run with the same initial conditions. What to report: ● Power spectra at redshifts z = 0, 1, and 2. ● FOF halo mass functions at z = 0, 1, and 2, starting at 32 particles. ● Density profiles of the three most massive halos (matched to the same objects). ● Run times and peak memory use. To decide: ● Who prepares the ICs? ● For each code group: Who runs the simulation with their code? ● Who collects all the reported data and prepares some plots? ● Timescale – until when do we want/need this to happen?

Recommend

More recommend