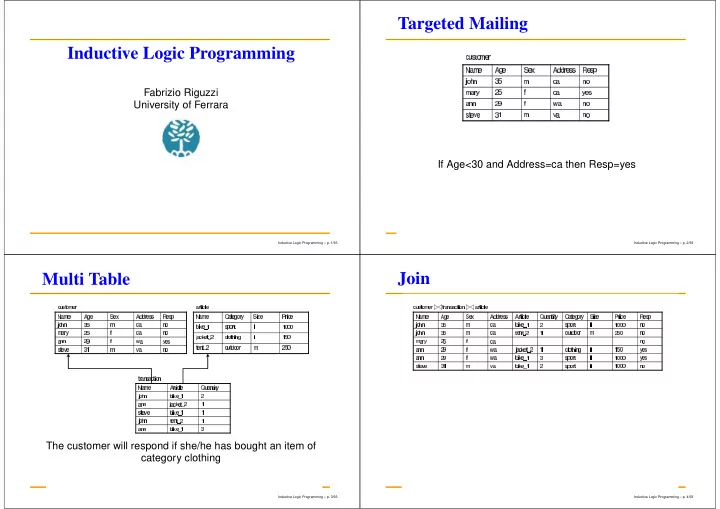

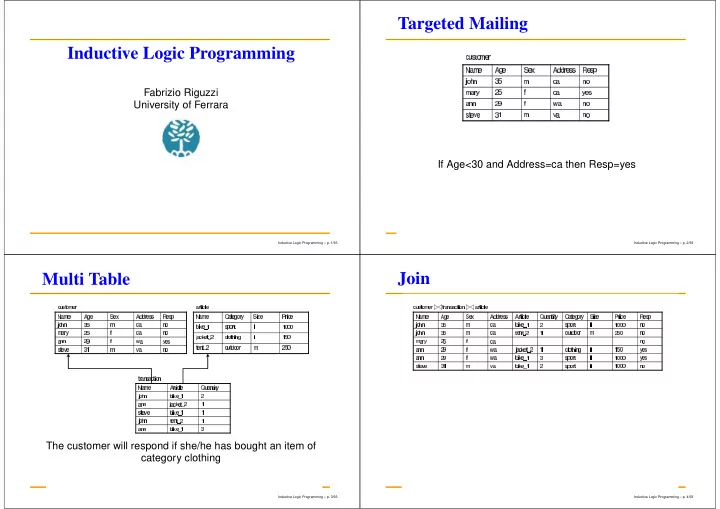

Inductive Logic Programming
Fabrizio Riguzzi
University of Ferrara

Targeted Mailing
If Age < 30 and Address = ca then Resp = yes

Multi Table
The customer will respond if she/he has bought an item of category clothing.

Join

Replicate

Logic
respond(Customer) ← transaction(Customer, Article, _Quantity), article(Article, Category, _Size, _Price), Category = clothing.

Outline of the Talk
- Predictive ILP
  - Learning from entailment
    - Bottom-up systems: Golem
    - Top-down systems: FOIL, Progol
  - Learning from interpretations
    - ICL, Tilde
- Descriptive ILP
  - Claudien
- Probabilistic ILP
  - ALLPAD
- Applications

Predictive ILP
Aim: classifying instances of the domain, i.e. predicting the class
Two settings:
- Learning from entailment
- Learning from interpretations

Learning from Entailment
Given
- a set of positive examples $E^+$
- a set of negative examples $E^-$
- a background knowledge $B$
- a space of possible programs $H$
Find a program $P \in H$ such that
- $\forall e^+ \in E^+,\ P \cup B \models e^+$ (completeness)
- $\forall e^- \in E^-,\ P \cup B \not\models e^-$ (consistency)

Mailing Example
Positive examples $E^+ = \{respond(ann)\}$
Negative examples $E^- = \{respond(john), respond(mary), respond(steve)\}$
Background $B$ = facts for the relations customer, transaction and article:
customer(john, 35, m, ca).
customer(mary, 25, f, ca).
customer(ann, 29, f, wa).
...
transaction(john, bike_1, 2).
transaction(ann, jacket_2, 1).
...
article(bike_1, sport, l, 1000).
article(jacket_2, clothing, 1, 150).
...

Mailing Example
Space of programs $H$: programs containing clauses with
- in the head: respond(Customer)
- in the body: a conjunction of literals from the set {customer(Customer, Age, Sex, Address), transaction(Customer, Article, Quantity), article(Article, Category, Size, Price), Age = constant, Sex = constant, ...}

Definitions
- covers(P, e) = true if $B \cup P \models e$
- covers(P, E) = $\{e \in E \mid covers(P, e) = true\}$
- A theory $P$ is more general than $Q$ if $covers(P, U) \supseteq covers(Q, U)$, where $U$ is the set of all possible examples
- If $B \cup P \models Q$ then $P$ is more general than $Q$
- A clause $C$ is more general than $D$ if $covers(\{C\}, U) \supseteq covers(\{D\}, U)$
- If $B, C \models D$ then $C$ is more general than $D$
- If a clause covers an example, all of its generalizations cover it too (covers is antimonotonic)
- If a clause does not cover an example, none of its specializations will
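The coverage test and the completeness and consistency conditions can be illustrated on the mailing example. This is a minimal sketch under stated assumptions: Python sets of tuples stand in for the background facts, only the facts listed on the slide are included (the elided "..." facts are left out), and the clause from the Logic slide is evaluated as a join of transaction and article on Article instead of by SLD resolution. The function names `respond` and `covers` mirror the slides; the encoding itself is hypothetical.

```python
# Background facts listed on the slide (elided "..." facts are NOT included).
TRANSACTION = {("john", "bike_1", 2), ("ann", "jacket_2", 1)}
ARTICLE = {("bike_1", "sport", "l", 1000), ("jacket_2", "clothing", 1, 150)}

POSITIVE = {"ann"}
NEGATIVE = {"john", "mary", "steve"}


def respond(customer: str) -> bool:
    """respond(Customer) <- transaction(Customer, Article, _Quantity),
    article(Article, Category, _Size, _Price), Category = clothing.
    Evaluated as a join of the two relations on Article."""
    return any(
        cust == customer and art == article_id and category == "clothing"
        for (cust, art, _qty) in TRANSACTION
        for (article_id, category, _size, _price) in ARTICLE
    )


def covers(examples: set[str]) -> set[str]:
    """covers(P, E): the examples in E entailed by the clause plus B."""
    return {e for e in examples if respond(e)}


complete = covers(POSITIVE) == POSITIVE   # every positive example is entailed
consistent = not covers(NEGATIVE)         # no negative example is entailed
print(complete, consistent)  # True True
```

On the listed facts the clause covers ann and none of john, mary or steve, so it is both complete and consistent on this data.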

Theta Subsumption
$C$ θ-subsumes $D$ ($C \geq D$) if there exists a substitution $\theta$ such that $C\theta \subseteq D$ [Plotkin 70]
$C \geq D \Rightarrow C \models D \Rightarrow B, C \models D \Rightarrow$ $C$ is more general than $D$
$C \models D \not\Rightarrow C \geq D$

Examples of Theta Subsumption
$C_1$ = grandfather(X, Y) ← father(X, Z)
$C_2$ = grandfather(X, Y) ← father(X, Z), parent(Z, Y)
$C_3$ = grandfather(john, steve) ← father(john, mary), parent(mary, steve)
$C_1 \geq C_2$ with $\theta = \emptyset$
$C_1 \geq C_3$ with $\theta = \{X/john, Y/steve, Z/mary\}$
$C_2 \geq C_3$ with $\theta = \{X/john, Y/steve, Z/mary\}$

Properties of Theta Subsumption
- θ-subsumption induces a lattice in the space of clauses
- Every finite set of clauses has a least upper bound (lub) and a greatest lower bound (glb)
- This is not true for the generality relation based on logical consequence

In Practice
- Logical consequence is undecidable
- Coverage test: SLD or SLDNF resolution
- Generality order: θ-subsumption, because it is decidable (even if NP-complete)
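θ-subsumption can be decided by a backtracking search for θ. A minimal sketch, assuming function-free clauses encoded as lists of (is_head, predicate, arguments) triples, with variables written as capitalized strings; the variables of D are treated as constants, as the definition requires. The helper names `match`, `subsumes` and `non_trivial` are hypothetical. The search is exponential in the worst case, which is consistent with θ-subsumption being NP-complete.

```python
from typing import Optional

# A literal is (is_head, predicate, args); capitalized strings are variables.
Literal = tuple[bool, str, tuple[str, ...]]
Subst = dict[str, str]


def is_var(term: str) -> bool:
    return term[:1].isupper()


def match(lit: Literal, target: Literal, theta: Subst) -> Optional[Subst]:
    """Extend theta so that lit under theta equals target, or return None."""
    head, pred, args = lit
    t_head, t_pred, t_args = target
    if head != t_head or pred != t_pred or len(args) != len(t_args):
        return None
    theta = dict(theta)  # copy so backtracking leaves the caller's theta intact
    for a, b in zip(args, t_args):
        if is_var(a):
            if theta.setdefault(a, b) != b:  # a is already bound to another term
                return None
        elif a != b:  # two different constants
            return None
    return theta


def subsumes(c: list[Literal], d: list[Literal],
             theta: Optional[Subst] = None) -> Optional[Subst]:
    """Return some theta with C*theta a subset of D, or None if none exists."""
    theta = {} if theta is None else theta
    if not c:
        return theta
    for target in d:  # try every literal of D for the first literal of C
        extended = match(c[0], target, theta)
        if extended is not None:
            result = subsumes(c[1:], d, extended)
            if result is not None:
                return result
    return None


def non_trivial(theta: Subst) -> Subst:
    """Drop bindings of the form X/X, so the identity substitution prints as {}."""
    return {v: t for v, t in theta.items() if v != t}


C1 = [(True, "grandfather", ("X", "Y")), (False, "father", ("X", "Z"))]
C2 = C1 + [(False, "parent", ("Z", "Y"))]
C3 = [(True, "grandfather", ("john", "steve")),
      (False, "father", ("john", "mary")),
      (False, "parent", ("mary", "steve"))]

print(non_trivial(subsumes(C1, C2)))  # {}
print(subsumes(C1, C3))  # {'X': 'john', 'Y': 'steve', 'Z': 'mary'}
print(subsumes(C3, C1))  # None
```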

Lattice

Least General Generalization
- $lgg(C, D)$ = least upper bound of $C$ and $D$ in the θ-subsumption order
- An algorithm exists with complexity $O(s^2)$, where $s$ is the size of the clauses
- Example:
  C = father(john, mary) ← parent(john, mary), male(john)
  D = father(david, steve) ← parent(david, steve), male(david)
  lgg(C, D) = father(X, Y) ← parent(X, Y), male(X)
- For a set of $n$ clauses the complexity is $O(s^n)$

Relative Subsumption
- θ-subsumption does not take background knowledge into account: $C \geq D \Leftrightarrow\ \models \forall(C\theta \rightarrow D)$ for some substitution $\theta$
- Relative subsumption [Plotkin 71]: $C$ θ-subsumes $D$ relative to background $B$ ($C \geq_B D$) if there exists a substitution $\theta$ such that $B \models \forall(C\theta \rightarrow D)$

Relative Least General Generalization
- Relative least general generalization (rlgg): lgg with respect to relative subsumption
- It does not exist in the general case where $B$ is a set of Horn clauses
- It exists when $B$ is a set of ground atoms, and can then be computed as
  $rlgg((H_1 \leftarrow B_1), (H_2 \leftarrow B_2)) = lgg((H_1 \leftarrow B_1, B), (H_2 \leftarrow B_2, B))$
- If $B$ is a set of clauses, we can compute the h-easy model
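The lgg of two clauses pairs every two literals with the same sign and predicate and anti-unifies their arguments, reusing one variable for each distinct pair of terms; trying all pairs is where the $O(s^2)$ bound comes from. A minimal sketch, assuming function-free clauses encoded as (is_head, predicate, arguments) triples; the generated variables V0, V1 play the role of X, Y on the slides. The `rlgg` function applies the formula for ground background atoms and then uses a simplified reduction that is an assumption of this sketch (keep only non-ground body literals whose variables all occur in the head), not the full reduction a system such as Golem performs.

```python
from itertools import product

# A literal is (is_head, predicate, args); capitalized strings are variables.
Literal = tuple[bool, str, tuple[str, ...]]


def lgg(c: list[Literal], d: list[Literal]) -> list[Literal]:
    """Plotkin's lgg of two function-free clauses."""
    table: dict[tuple[str, str], str] = {}  # (term in C, term in D) -> variable

    def gen(s: str, t: str) -> str:
        if s == t:
            return s
        if (s, t) not in table:
            table[(s, t)] = f"V{len(table)}"
        return table[(s, t)]

    result: list[Literal] = []
    for (h1, p1, a1), (h2, p2, a2) in product(c, d):  # |C| * |D| literal pairs
        if h1 == h2 and p1 == p2 and len(a1) == len(a2):
            lit = (h1, p1, tuple(gen(x, y) for x, y in zip(a1, a2)))
            if lit not in result:
                result.append(lit)
    return result


def rlgg(e1, e2, background):
    """lgg((e1 <- B), (e2 <- B)) for ground atoms e1, e2 and ground facts B,
    followed by a SIMPLIFIED reduction (assumption of this sketch)."""
    body = [(False, pred, args) for pred, args in background]
    raw = lgg([(True, *e1)] + body, [(True, *e2)] + body)
    head = next(lit for lit in raw if lit[0])
    head_vars = {t for t in head[2] if t[:1].isupper()}

    def keep(lit):
        lit_vars = {t for t in lit[2] if t[:1].isupper()}
        return bool(lit_vars) and lit_vars <= head_vars

    return [head] + [lit for lit in raw if not lit[0] and keep(lit)]


C = [(True, "father", ("john", "mary")), (False, "parent", ("john", "mary")),
     (False, "male", ("john",))]
D = [(True, "father", ("david", "steve")), (False, "parent", ("david", "steve")),
     (False, "male", ("david",))]
print(lgg(C, D))
# [(True, 'father', ('V0', 'V1')), (False, 'parent', ('V0', 'V1')), (False, 'male', ('V0',))]

B = [("parent", ("john", "mary")), ("parent", ("david", "steve")),
     ("parent", ("kathy", "ellen")), ("female", ("kathy",)),
     ("male", ("john",)), ("male", ("david",))]
print(rlgg(("father", ("john", "mary")), ("father", ("david", "steve")), B))
# [(True, 'father', ('V0', 'V1')), (False, 'parent', ('V0', 'V1')), (False, 'male', ('V0',))]
```

Before the reduction step, the raw lgg of the two extended clauses also contains ground literals such as parent(kathy, ellen) and literals over fresh variables such as parent(V2, V3); the filter discards them, leaving father(X, Y) ← parent(X, Y), male(X) for the data on the rlgg example slide.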

Relative Least General Generalization
Example
$C_1$ = father(john, mary)
$C_2$ = father(david, steve)
$B$ = {parent(john, mary), parent(david, steve), parent(kathy, ellen), female(kathy), male(john), male(david)}
rlgg($C_1$, $C_2$) = father(X, Y) ← parent(X, Y), male(X) (after removing the literals that are redundant given $B$)

Bottom-up Systems
- Covering loop
- Search for a clause from specific to general

Learn(E, B)
  P := ∅
  repeat /* covering loop */
    C := GenerateClauseBottomUp(E, B)
    P := P ∪ {C}
    remove from E the positive examples covered by P
  until Sufficiency criterion
  return P

Golem [Muggleton, Feng 90]
- Bottom-up system
- Generalization by means of rlgg
- Sufficiency criterion: $E^+ = \emptyset$
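The covering loop of Learn(E, B) is independent of how each clause is generated, so it can be sketched with the clause generator and the coverage test passed in as functions. This is a minimal sketch under assumptions: `learn`, `grow_interval` and `covers_interval` are hypothetical names, the sufficiency criterion is $E^+ = \emptyset$, and a guard that stops when a clause covers no remaining positive is an addition of this sketch to avoid looping forever. The toy instantiation uses integers as examples and intervals as "clauses" only to keep the loop visible; it is not first-order.

```python
def learn(positives, negatives, generate_clause, covers):
    """Covering loop: add one clause at a time and remove the positive
    examples it covers, until E+ is empty (sufficiency criterion)."""
    program = []
    remaining = set(positives)
    while remaining:
        clause = generate_clause(remaining, negatives)
        if clause is None:
            break  # the generator found no acceptable clause
        covered = {e for e in remaining if covers(clause, e)}
        if not covered:
            break  # guard added in this sketch: no progress would loop forever
        program.append(clause)
        remaining -= covered
    return program


# Toy instantiation (hypothetical): a "clause" is an interval (lo, hi).
def covers_interval(clause, example):
    lo, hi = clause
    return lo <= example <= hi


def grow_interval(remaining, negatives):
    """Bottom-up in spirit: start from the most specific clause (one seed
    positive) and generalize it while no negative example becomes covered."""
    lo = hi = min(remaining)
    while hi + 1 <= max(remaining) and hi + 1 not in negatives:
        hi += 1
    return (lo, hi)


program = learn({1, 2, 3, 10, 11}, {5, 6}, grow_interval, covers_interval)
print(program)  # [(1, 4), (10, 11)]
```

Passing the generator in as a parameter reflects the slides' point that bottom-up and top-down systems share the same covering loop and differ only in how a single clause is built.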

Golem
GolemGenerateClause(E, B)
  select randomly some pairs of examples from E+
  compute their rlgg
  let C be the rlgg that covers the most positive examples while covering no negative example
  repeat
    randomly select some examples from E+
    compute the rlgg between C and each selected example
    let C be the rlgg that covers the most positive examples while covering no negative example
    remove from E+ the examples covered by C
  while the set of examples covered by C increases
  remove literals from the body of C as long as C still covers no negative example
  return C

Top-down Systems
- Covering loop as in bottom-up systems
- Search for a clause from general to specific

GenerateClauseTopDown(E, B)
  Beam := {p(X) ← true}
  BestClause := null
  repeat /* specialization loop */
    remove the first clause C of Beam
    compute ρ(C)
    score all the refinements
    update BestClause
    add all the refinements to Beam
    order Beam according to the score
    remove the last clauses that exceed the beam width d
  until the Necessity criterion is satisfied
  return BestClause
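The specialization loop of GenerateClauseTopDown can be sketched as a beam search. This is a minimal sketch under loud assumptions: the problem is propositionalized (a clause body is a set of attribute tests built from the customer facts on the Mailing Example slide, with ann positive and john, mary negative), whereas FOIL and Progol refine first-order clauses; the refinement operator only adds a literal; the score is the Laplace estimate; and the necessity criterion is "BestClause covers no negative example" or an empty beam. The names `generate_clause_top_down`, `refine` and `width` (for the beam width d) are hypothetical.

```python
# Toy, propositionalized data (assumption): customer attributes from the slides.
POS = [{"age": 29, "sex": "f", "address": "wa"}]                # ann
NEG = [{"age": 35, "sex": "m", "address": "ca"},                # john
       {"age": 25, "sex": "f", "address": "ca"}]                # mary


def covers(body, example):
    """A body is a frozenset of (attribute, value) tests; the empty body is 'true'."""
    return all(example.get(attr) == value for attr, value in body)


def laplace(body, pos, neg):
    n_pos = sum(covers(body, e) for e in pos)
    n_neg = sum(covers(body, e) for e in neg)
    return (n_pos + 1) / (n_pos + n_neg + 2)


def refine(body, tests):
    """rho(C): add one literal (here, one attribute test) to the body."""
    return [body | {t} for t in tests if t not in body]


def generate_clause_top_down(pos, neg, tests, width=3):
    beam = [(laplace(frozenset(), pos, neg), frozenset())]  # p(X) <- true
    best, best_score = None, float("-inf")
    while beam:                                            # specialization loop
        _, clause = beam.pop(0)                            # first clause of Beam
        for r in refine(clause, tests):
            score = laplace(r, pos, neg)
            if score > best_score:                         # update BestClause
                best, best_score = r, score
            beam.append((score, r))
        beam.sort(key=lambda item: item[0], reverse=True)  # order Beam by score
        del beam[width:]                                   # keep at most `width` clauses
        if best is not None and not any(covers(best, e) for e in neg):
            break                                          # necessity criterion met
    return best


tests = list(dict.fromkeys((a, e[a]) for e in POS + NEG for a in ("age", "sex", "address")))
print(generate_clause_top_down(POS, NEG, tests))  # frozenset({('age', 29)})
```

Here the first round of refinements already yields a body that covers ann and no negative example, so the loop stops after one iteration; with larger data the beam keeps several partial clauses alive between iterations.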

Typical Stopping Criteria
Sufficiency criteria:
- $E^+ = \emptyset$
- GenerateClauseTopDown returns null
- a disjunction of the above
Necessity criteria:
- the number of negative examples covered by BestClause is 0
- the number of negative examples covered by BestClause is below a threshold
- Beam is empty
- a disjunction of the above

Refinement Operator
- $\rho(C) = \{D \mid D \in L,\ C \geq D\}$, where $L$ is the space of possible clauses
- A refinement operator usually generates only minimal specializations
- A typical refinement operator applies two syntactic operations to a clause:
  - it applies a substitution to the clause
  - it adds a literal to the body

Heuristic Functions
Notation: $n^+(C)$, $n^-(C)$ = number of positive and negative examples covered by clause $C$; $n(C) = n^+(C) + n^-(C)$
Accuracy: $Acc = p(+|C)$ (more accurately, precision); $p(+|C)$ can be estimated by
- relative frequency: $p(+|C) = \frac{n^+(C)}{n(C)}$
- m-estimate: $p(+|C) = \frac{n^+(C) + m\,p(+)}{n(C) + m}$, where $p(+) = n^+/n$, with $n^+$ the total number of positive examples and $n$ the total number of examples
- Laplace: m-estimate with $m = 2$, $p(+) = 0.5$: $p(+|C) = \frac{n^+(C) + 1}{n(C) + 2}$

Heuristic Functions
- Coverage: $Cov = n^+(C) - n^-(C)$
- Informativity: $Inf = -\log_2(Acc)$
- Weighted relative accuracy: $WRAcc = p(C)\,(p(+|C) - p(+))$, where $p(C) = n(C)/n$
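The heuristic functions are simple arithmetic on four counts, so a worked computation may help. A minimal sketch, assuming a clause that covers 6 positive and 2 negative examples out of 10 positive and 10 negative examples (illustrative numbers, not data from the slides). Informativity is computed as $-\log_2(Acc)$, the information needed to signal that a covered example is positive (lower is better); $p(C)$ in WRAcc is estimated as $n(C)/n$. The function name `heuristics` is hypothetical.

```python
from math import log2


def heuristics(pos_c, neg_c, pos, neg, m=2.0):
    """Evaluation measures for a clause C covering pos_c positive and neg_c
    negative examples, out of pos positive and neg negative examples.
    Assumes pos_c + neg_c > 0 (otherwise p(+|C) is undefined)."""
    n_c = pos_c + neg_c            # n(C)
    n = pos + neg                  # total number of examples
    prior = pos / n                # p(+) = n+ / n
    acc = pos_c / n_c              # relative-frequency estimate of p(+|C)
    return {
        "acc": acc,
        "m_estimate": (pos_c + m * prior) / (n_c + m),
        "laplace": (pos_c + 1) / (n_c + 2),
        "cov": pos_c - neg_c,
        "inf": -log2(acc),         # raises ValueError when acc == 0
        "wracc": (n_c / n) * (acc - prior),   # p(C) * (p(+|C) - p(+))
    }


h = heuristics(pos_c=6, neg_c=2, pos=10, neg=10)
print(h["acc"], h["laplace"], h["cov"])  # 0.75 0.7 4
```

Because this example has $p(+) = 0.5$ and the default $m = 2$, the m-estimate coincides with the Laplace estimate (both 0.7), matching the slide's remark that Laplace is the m-estimate with $m = 2$, $p(+) = 0.5$. Here WRAcc is $0.4 \times (0.75 - 0.5) = 0.1$.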
