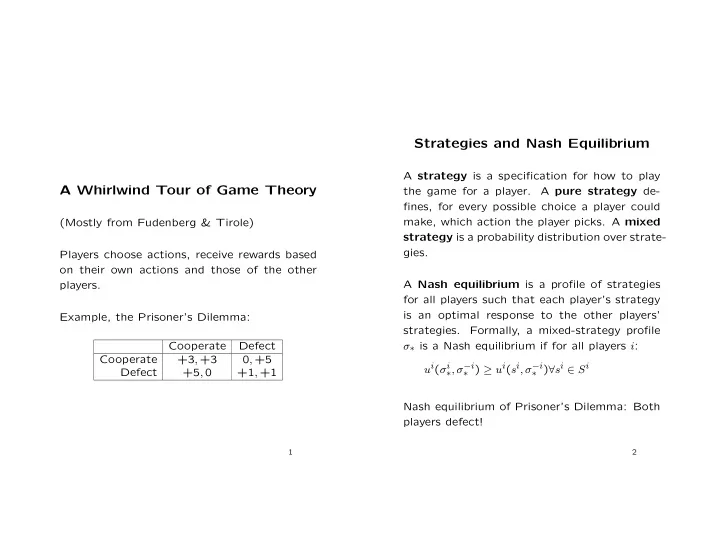

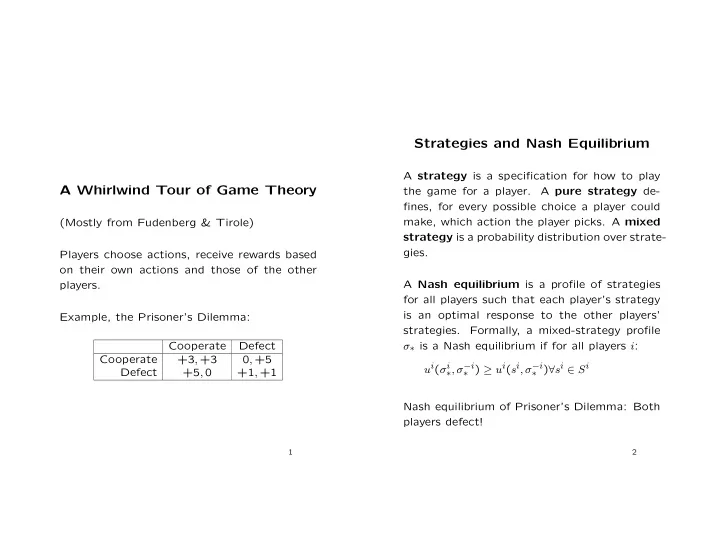

A Whirlwind Tour of Game Theory
(Mostly from Fudenberg & Tirole)

Players choose actions and receive rewards based on their own actions and those of the other players.

Example, the Prisoner's Dilemma (each cell lists the row player's payoff, then the column player's):

| | Cooperate | Defect |
|---|---|---|
| Cooperate | +3, +3 | 0, +5 |
| Defect | +5, 0 | +1, +1 |

Nash equilibrium of the Prisoner's Dilemma: both players defect!

Strategies and Nash Equilibrium

A strategy is a specification of how a player plays the game. A pure strategy defines, for every possible choice a player could make, which action the player picks. A mixed strategy is a probability distribution over pure strategies.

A Nash equilibrium is a profile of strategies for all players such that each player's strategy is an optimal response to the other players' strategies. Formally, a mixed-strategy profile $\sigma^*$ is a Nash equilibrium if, for all players $i$:

$$u_i(\sigma_i^*, \sigma_{-i}^*) \ge u_i(s_i, \sigma_{-i}^*) \quad \forall s_i \in S_i$$
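As a small illustration of the equilibrium condition, the following sketch checks every pure-strategy profile of the Prisoner's Dilemma for profitable unilateral deviations. The function name `pure_nash_equilibria` and the dictionary layout are illustrative choices, not part of the notes; the check covers pure deviations only, which is enough here because payoffs are linear in a player's own mixing probabilities.

```python
from itertools import product

# Payoffs (row player, column player) for the Prisoner's Dilemma in these notes.
ACTIONS = ["Cooperate", "Defect"]
PAYOFFS = {
    ("Cooperate", "Cooperate"): (3, 3),
    ("Cooperate", "Defect"): (0, 5),
    ("Defect", "Cooperate"): (5, 0),
    ("Defect", "Defect"): (1, 1),
}


def pure_nash_equilibria(actions, payoffs):
    """Return all pure profiles where neither player gains by deviating alone."""
    equilibria = []
    for row, col in product(actions, repeat=2):
        u_row, u_col = payoffs[(row, col)]
        # Row player deviates while the column action stays fixed.
        row_ok = all(payoffs[(r, col)][0] <= u_row for r in actions)
        # Column player deviates while the row action stays fixed.
        col_ok = all(payoffs[(row, c)][1] <= u_col for c in actions)
        if row_ok and col_ok:
            equilibria.append((row, col))
    return equilibria


print(pure_nash_equilibria(ACTIONS, PAYOFFS))
```

Only the profile in which both players defect passes the check, matching the claim above.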

Matching Pennies

| | H | T |
|---|---|---|
| H | +1, −1 | −1, +1 |
| T | −1, +1 | +1, −1 |

No pure-strategy equilibria.

Nash equilibrium: both players randomize half and half between actions.

More on Equilibria

Dominated strategies: strategy $s_i$ (strictly) dominates strategy $s_i'$ if, for all possible strategy combinations of opponents, $s_i$ yields a (strictly) higher payoff than $s_i'$ to player $i$.

Iterated elimination of strictly dominated strategies: eliminate all strategies which are dominated, relative to opponents' strategies which have not yet been eliminated.

If iterated elimination of strictly dominated strategies yields a unique strategy $n$-tuple, then this strategy $n$-tuple is the unique Nash equilibrium (and it is strict).

Every Nash equilibrium survives iterated elimination of strictly dominated strategies.
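The elimination procedure can be sketched for two-player games as follows. This is a simplified version under a stated assumption: it only looks for domination by another pure strategy, whereas the general definition also allows a mixed strategy to dominate. The helper names are hypothetical.

```python
def strictly_dominated(action, own, other, payoff):
    """True if some other surviving action does strictly better than `action`
    against every surviving opponent action (pure dominators only)."""
    return any(
        all(payoff(alt, o) > payoff(action, o) for o in other)
        for alt in own
        if alt != action
    )


def iterated_elimination(rows, cols, payoffs):
    """Repeatedly drop strictly dominated pure strategies for both players."""
    rows, cols = list(rows), list(cols)

    def row_pay(r, c):
        return payoffs[(r, c)][0]

    def col_pay(c, r):
        return payoffs[(r, c)][1]

    while True:
        keep_rows = [r for r in rows if not strictly_dominated(r, rows, cols, row_pay)]
        keep_cols = [c for c in cols if not strictly_dominated(c, cols, rows, col_pay)]
        if keep_rows == rows and keep_cols == cols:
            return rows, cols
        rows, cols = keep_rows, keep_cols


# Prisoner's Dilemma and Matching Pennies payoffs as given in these notes.
PD = {("C", "C"): (3, 3), ("C", "D"): (0, 5), ("D", "C"): (5, 0), ("D", "D"): (1, 1)}
MP = {("H", "H"): (1, -1), ("H", "T"): (-1, 1), ("T", "H"): (-1, 1), ("T", "T"): (1, -1)}

print(iterated_elimination(["C", "D"], ["C", "D"], PD))
print(iterated_elimination(["H", "T"], ["H", "T"], MP))
```

In the Prisoner's Dilemma the procedure leaves a single profile, which is therefore the unique Nash equilibrium; in Matching Pennies nothing is eliminated, so the procedure alone does not pin down the equilibrium.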

Multiple Equilibria

A coordination game:

| | L | R |
|---|---|---|
| U | 9, 9 | 0, 8 |
| D | 8, 0 | 7, 7 |

(U, L) and (D, R) are both Nash equilibria. What would be reasonable to play? With and without coordination?

While (U, L) is Pareto-dominant, playing D and R is "safer" for the row and column players respectively...

Existence of Equilibria

Nash's theorem, translated: every game with a finite number of actions for each player, where each player's utilities are consistent with the (previously discussed) axioms of utility theory, has an equilibrium in mixed strategies.

Idea 1: Reaction correspondences. Player $i$'s reaction correspondence $r_i$ maps each strategy profile $\sigma$ to the set of mixed strategies that maximize player $i$'s payoff when her opponents play $\sigma_{-i}$. Note that $r_i$ depends only on $\sigma_{-i}$, so we don't really need all of $\sigma$, but it will be useful to think of it this way. Let $r$ be the Cartesian product of all $r_i$. A fixed point of $r$ is a $\sigma$ such that $\sigma \in r(\sigma)$, so that for each player, $\sigma_i \in r_i(\sigma)$. Thus a fixed point of $r$ is a Nash equilibrium.

Kakutani's fixed-point (FP) theorem says that the following are sufficient conditions for $r : \Sigma \to \Sigma$ to have a FP.
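One way to make "safer" concrete in the coordination game is to compare each action's worst-case payoff. The sketch below lists the pure Nash equilibria and the worst-case (security) payoff of every action; reading "safer" as "higher worst-case payoff" is an interpretation, and the function names are illustrative.

```python
ROWS, COLS = ["U", "D"], ["L", "R"]
# Payoffs (row player, column player) for the coordination game in these notes.
PAYOFFS = {("U", "L"): (9, 9), ("U", "R"): (0, 8), ("D", "L"): (8, 0), ("D", "R"): (7, 7)}


def pure_nash(rows, cols, payoffs):
    """Pure profiles with no profitable unilateral pure deviation."""
    result = []
    for r in rows:
        for c in cols:
            u_r, u_c = payoffs[(r, c)]
            if all(payoffs[(r2, c)][0] <= u_r for r2 in rows) and all(
                payoffs[(r, c2)][1] <= u_c for c2 in cols
            ):
                result.append((r, c))
    return result


def worst_case(rows, cols, payoffs):
    """Minimum payoff each action can yield, over all opponent actions."""
    row_security = {r: min(payoffs[(r, c)][0] for c in cols) for r in rows}
    col_security = {c: min(payoffs[(r, c)][1] for r in rows) for c in cols}
    return row_security, col_security


print(pure_nash(ROWS, COLS, PAYOFFS))
print(worst_case(ROWS, COLS, PAYOFFS))
```

Under this reading, D and R each guarantee 7 regardless of what the opponent does, while U and L can yield 0, even though (U, L) gives both players more when they do coordinate.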

1. $\Sigma$ is a compact, convex, nonempty subset of a finite-dimensional Euclidean space.

   Satisfied, because it's a simplex (more precisely, a product of simplices, one per player).

2. $r(\sigma)$ is nonempty for all $\sigma$.

   Each player's payoffs are linear, and therefore continuous, in her own mixed strategy. Continuous functions on compact sets attain maxima.

3. $r(\sigma)$ is convex for all $\sigma$.

   Suppose not. Then $\exists\, \sigma', \sigma'' \in r(\sigma)$ and $\lambda \in (0, 1)$ such that $\lambda \sigma' + (1 - \lambda) \sigma'' \notin r(\sigma)$. But for each player $i$,

   $$u_i(\lambda \sigma_i' + (1 - \lambda) \sigma_i'', \sigma_{-i}) = \lambda\, u_i(\sigma_i', \sigma_{-i}) + (1 - \lambda)\, u_i(\sigma_i'', \sigma_{-i})$$

   so that if both $\sigma'$ and $\sigma''$ are best responses to $\sigma_{-i}$, then so is their weighted average.

4. $r(\cdot)$ has a closed graph.

   The correspondence $r(\cdot)$ has a closed graph if the graph of $r(\cdot)$ is a closed set: whenever the sequence $(\sigma^n, \hat{\sigma}^n) \to (\sigma, \hat{\sigma})$, with $\hat{\sigma}^n \in r(\sigma^n)\ \forall n$, then $\hat{\sigma} \in r(\sigma)$ (same as upper hemicontinuity).

   Suppose that there is a sequence $(\sigma^n, \hat{\sigma}^n) \to (\sigma, \hat{\sigma})$ such that $\hat{\sigma}^n \in r(\sigma^n)$ for every $n$, but $\hat{\sigma} \notin r(\sigma)$. Then there exist a player $i$, an $\epsilon > 0$, and a $\sigma_i'$ such that

   $$u_i(\sigma_i', \sigma_{-i}) > u_i(\hat{\sigma}_i, \sigma_{-i}) + 3\epsilon$$

   Then, for sufficiently large $n$ (using the continuity of $u_i$),

   $$u_i(\sigma_i', \sigma_{-i}^n) > u_i(\sigma_i', \sigma_{-i}) - \epsilon > u_i(\hat{\sigma}_i, \sigma_{-i}) + 2\epsilon > u_i(\hat{\sigma}_i^n, \sigma_{-i}^n) + \epsilon$$

   which means that $\sigma_i'$ does strictly better against $\sigma_{-i}^n$ than $\hat{\sigma}_i^n$ does, contradicting our assumption.

Learning in Games\*

How do players reach equilibria?

What if I don't know what payoffs my opponent will receive?

I can try to learn her actions when we play repeatedly (consider 2-player games for simplicity).

Fictitious play in two-player games. Assumes stationarity of the opponent's strategy, and that players do not attempt to influence each other's future play.

Learn weight functions:

$$\kappa^i_t(s^{-i}) = \kappa^i_{t-1}(s^{-i}) + \begin{cases} 1 & \text{if } s^{-i}_{t-1} = s^{-i} \\ 0 & \text{otherwise} \end{cases}$$

Calculate probabilities of the other player playing various moves as:

$$\gamma^i_t(s^{-i}) = \frac{\kappa^i_t(s^{-i})}{\sum_{\tilde{s}^{-i} \in S^{-i}} \kappa^i_t(\tilde{s}^{-i})}$$

Then choose the best response action.

\* Fudenberg & Levine, *The Theory of Learning in Games*, 1998
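The weight update, belief normalization, and best-response steps can be sketched for Matching Pennies as follows. The initial weights $(1.5, 2)$ for player 1 and $(2, 1.5)$ for player 2, ordered as (H, T), are assumptions inferred from the cycling example in these notes rather than values stated in them; the function names are illustrative.

```python
ACTIONS = ["H", "T"]


def u1(a1, a2):
    """Player 1 (row) wins +1 on a match and loses 1 otherwise."""
    return 1 if a1 == a2 else -1


def u2(a1, a2):
    """Matching Pennies is zero-sum, so player 2 receives the negation."""
    return -u1(a1, a2)


def beliefs(kappa):
    """Normalize weights kappa into the probability assessment gamma."""
    total = sum(kappa.values())
    return {s: w / total for s, w in kappa.items()}


def fictitious_play(kappa1, kappa2, rounds):
    """kappa1: player 1's weights on player 2's actions; kappa2: the reverse."""
    kappa1, kappa2 = dict(kappa1), dict(kappa2)
    history = []
    for t in range(1, rounds + 1):
        g1, g2 = beliefs(kappa1), beliefs(kappa2)
        # Each player best-responds to her current assessment of the opponent.
        a1 = max(ACTIONS, key=lambda a: sum(p * u1(a, o) for o, p in g1.items()))
        a2 = max(ACTIONS, key=lambda a: sum(p * u2(o, a) for o, p in g2.items()))
        # Each player adds 1 to the weight of the action the opponent just played.
        kappa1[a2] += 1
        kappa2[a1] += 1
        history.append((t, a1, a2, (kappa1["H"], kappa1["T"]), (kappa2["H"], kappa2["T"])))
    return history


# Assumed initial weights (inferred, not stated in the notes).
for row in fictitious_play({"H": 1.5, "T": 2}, {"H": 2, "T": 1.5}, 7):
    print(row)
```

With these starting weights no ties arise, so the arbitrary tie-breaking of `max` never matters in this run.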

Fictitious Play (contd.)

If fictitious play converges, it converges to a Nash equilibrium.

If the two players ever play a (strict) NE at time $t$, they will play it thereafter. (Proofs omitted)

If empirical marginal distributions converge, they converge to NE. But this doesn't mean that play is similar!

| $t$ | Player 1 action | Player 2 action | $\kappa^1_t$ | $\kappa^2_t$ |
|---|---|---|---|---|
| 1 | T | T | (1.5, 3) | (2, 2.5) |
| 2 | T | H | (2.5, 3) | (2, 3.5) |
| 3 | T | H | (3.5, 3) | (2, 4.5) |
| 4 | H | H | (4.5, 3) | (3, 4.5) |
| 5 | H | H | (5.5, 3) | (4, 4.5) |
| 6 | H | H | (6.5, 3) | (5, 4.5) |
| 7 | H | T | (6.5, 4) | (6, 4.5) |

Cycling of actions in fictitious play in the matching pennies game

Universal Consistency

Persistent miscoordination: players start with weights of $(1, \sqrt{2})$.

| | A | B |
|---|---|---|
| A | 0, 0 | 1, 1 |
| B | 1, 1 | 0, 0 |

A rule $\rho^i$ is said to be $\epsilon$-universally consistent if for any $\rho^{-i}$

$$\limsup_{T \to \infty} \; \max_{\sigma^i} u^i(\sigma^i, \gamma^i_T) - \frac{1}{T} \sum_t u^i(\rho^i_t(h_{t-1})) \le \epsilon$$

almost surely under the distribution generated by $(\rho^i, \rho^{-i})$, where $h_{t-1}$ is the history up to time $t - 1$, available to the decision-making algorithm at time $t$.
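The persistent miscoordination example can be simulated directly. The sketch below assumes that both players start from the same weights $(1, \sqrt{2})$ on the opponent's actions, ordered as (A, B), and that both use fictitious play; the notes give the weights but not the ordering, so that part is an assumption. It reports the total realized payoff and the empirical frequency of action A.

```python
import math

ACTIONS = ["A", "B"]


def payoff(own, other):
    """Both players earn 1 when their actions differ and 0 when they match."""
    return 1 if own != other else 0


def simulate(rounds):
    # Assumed: identical initial weights on the opponent's (A, B) for both players.
    k1 = {"A": 1.0, "B": math.sqrt(2)}
    k2 = {"A": 1.0, "B": math.sqrt(2)}
    total_payoff, count_a = 0, 0
    for _ in range(rounds):
        # Best response to the weights (normalizing would not change the argmax).
        a1 = max(ACTIONS, key=lambda a: sum(w * payoff(a, o) for o, w in k1.items()))
        a2 = max(ACTIONS, key=lambda a: sum(w * payoff(a, o) for o, w in k2.items()))
        total_payoff += payoff(a1, a2)
        count_a += a1 == "A"
        k1[a2] += 1
        k2[a1] += 1
    return total_payoff, count_a / rounds


print(simulate(1000))
```

In this run the players choose the same action every round, so the realized payoff stays at 0 even though each action is played half the time. A best response to the empirical distribution $(\tfrac12, \tfrac12)$ would earn $\tfrac12$ per round, and that gap is the quantity the universal-consistency definition is meant to bound.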

Back to Experts

Bayesian learning cannot give good payoff guarantees.

• Suppose the true way your opponent's actions are being generated is not in the support of the prior: we want protection from unanticipated play, which can be endogenously determined.

• The Bayesian optimal method guarantees a measure of learning something close to the true model, but provides no guarantees on received utility.

• Can use the notion of experts to bound regret!

Define universal expertise analogously to universal consistency, and bound regret (lost utility) with respect to the best expert, which is a strategy.

The best response function is derived by solving the optimization problem

$$\max_{I^i} \; I^i \cdot \vec{u}^i_t + \lambda\, v^i(I^i)$$

$\vec{u}^i_t$ is the vector of average payoffs player $i$ would receive by using each of the experts.

$I^i$ is a probability distribution over experts.

$\lambda$ is a small positive number.

Under technical conditions on $v$, satisfied by the entropy

$$-\sum_s \sigma(s) \log \sigma(s)$$

we retrieve the exponential weighting scheme, and for every $\epsilon$ there is a $\lambda$ such that our procedure is $\epsilon$-universally expert.
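When $v$ is the entropy, the maximizer of the objective above puts weight proportional to $\exp(u_k / \lambda)$ on expert $k$, which is the exponential weighting scheme. The sketch below computes those weights and compares the objective against randomly drawn distributions as a sanity check; the payoff vector and $\lambda$ are made-up illustrative values, and the function names are hypothetical.

```python
import math
import random


def entropy(dist):
    """Shannon entropy, with 0 * log 0 treated as 0."""
    return -sum(p * math.log(p) for p in dist if p > 0)


def objective(dist, avg_payoffs, lam):
    """Expected average payoff plus lambda times the entropy of the distribution."""
    return sum(p * u for p, u in zip(dist, avg_payoffs)) + lam * entropy(dist)


def exponential_weights(avg_payoffs, lam):
    """Distribution over experts proportional to exp(payoff / lambda)."""
    top = max(avg_payoffs)  # subtracting the max avoids overflow for small lambda
    weights = [math.exp((u - top) / lam) for u in avg_payoffs]
    norm = sum(weights)
    return [w / norm for w in weights]


# Illustrative values only: three experts and a small positive lambda.
avg_payoffs, lam = [0.2, 0.5, 0.9], 0.1
best = exponential_weights(avg_payoffs, lam)
print(best, objective(best, avg_payoffs, lam))

# Sanity check against random distributions over the three experts.
random.seed(0)
for _ in range(5):
    raw = [random.random() for _ in avg_payoffs]
    other = [x / sum(raw) for x in raw]
    print(objective(other, avg_payoffs, lam) <= objective(best, avg_payoffs, lam))
```

As $\lambda$ shrinks, the weights concentrate on the expert with the highest average payoff; larger $\lambda$ keeps the distribution closer to uniform.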
