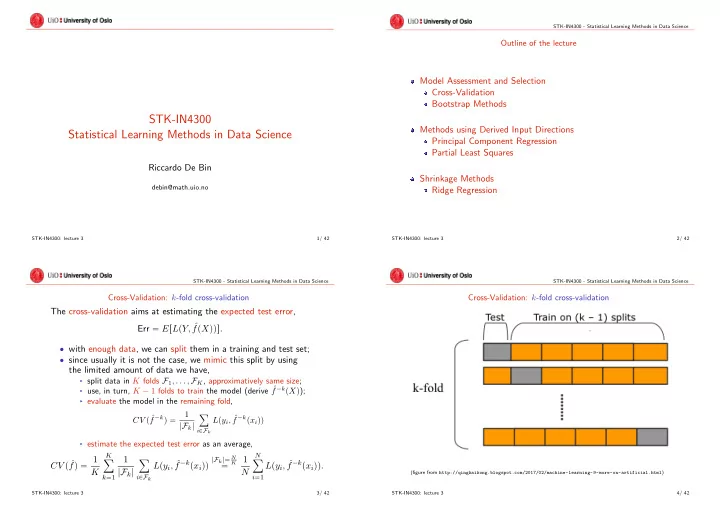

STK-IN4300 - Statistical Learning Methods in Data Science

Riccardo De Bin (debin@math.uio.no)

STK-IN4300: lecture 3

Outline of the lecture

- Model Assessment and Selection
  - Cross-Validation
  - Bootstrap Methods
- Methods using Derived Input Directions
  - Principal Component Regression
  - Partial Least Squares
- Shrinkage Methods
  - Ridge Regression

Cross-Validation: k-fold cross-validation

(figure from http://qingkaikong.blogspot.com/2017/02/machine-learning-9-more-on-artificial.html)

Cross-validation aims at estimating the expected test error, $\mathrm{Err} = E[L(Y, \hat f(X))]$.

- with enough data, we can split them into a training set and a test set;
- since this is usually not the case, we mimic this split using the limited amount of data we have:
  - split the data into $K$ folds $F_1, \dots, F_K$ of approximately the same size;
  - use, in turn, $K - 1$ folds to train the model (derive $\hat f^{-k}(X)$);
  - evaluate the model on the remaining fold,

    $$\mathrm{CV}(\hat f^{-k}) = \frac{1}{|F_k|} \sum_{i \in F_k} L(y_i, \hat f^{-k}(x_i));$$

  - estimate the expected test error as an average,

    $$\mathrm{CV}(\hat f) = \frac{1}{K} \sum_{k=1}^{K} \frac{1}{|F_k|} \sum_{i \in F_k} L(y_i, \hat f^{-k}(x_i)) \overset{|F_k| = N/K}{=} \frac{1}{N} \sum_{i=1}^{N} L(y_i, \hat f^{-k}(x_i)),$$

    where in the last expression $k$ is the fold containing observation $i$.
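The k-fold procedure above can be sketched in a few lines of plain Python. This is only an illustration under assumptions that are not in the slides: the helper names (`make_folds`, `k_fold_cv`, `fit_line`), the simulated data, and the choice of a simple least-squares line with squared-error loss are all hypothetical.

```python
import random

def make_folds(n, K, seed=0):
    """Split the indices 0..n-1 into K folds of approximately equal size."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[k::K] for k in range(K)]

def k_fold_cv(x, y, fit, loss, K=5, seed=0):
    """Average over the K folds of the mean loss on the held-out fold."""
    fold_errors = []
    for held_out in make_folds(len(y), K, seed):
        held = set(held_out)
        train = [i for i in range(len(y)) if i not in held]
        # Train on K - 1 folds: this plays the role of f^{-k}.
        predict = fit([x[i] for i in train], [y[i] for i in train])
        # Evaluate on the remaining fold.
        fold_errors.append(
            sum(loss(y[i], predict(x[i])) for i in held_out) / len(held_out)
        )
    return sum(fold_errors) / K

def fit_line(xs, ys):
    """Least-squares fit of y = a + b * x; returns a prediction function."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    b = sum((u - mx) * (v - my) for u, v in zip(xs, ys)) / sum(
        (u - mx) ** 2 for u in xs
    )
    a = my - b * mx
    return lambda u: a + b * u

def squared_error(y_true, y_pred):
    return (y_true - y_pred) ** 2

# Simulated data (assumption): y = 2x + noise with standard deviation 0.5.
rng = random.Random(1)
x = [rng.uniform(0, 1) for _ in range(100)]
y = [2.0 * u + rng.gauss(0, 0.5) for u in x]

cv_error = k_fold_cv(x, y, fit_line, squared_error, K=5)
print(cv_error)  # expected to be in the vicinity of the noise variance, 0.25
```

Passing `fit` and `loss` as arguments keeps the fold logic independent of the model, so the same function could be reused for other prediction rules.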

Cross-Validation: choice of K

How to choose $K$?

- there is no clear solution;
- bias-variance trade-off:
  - the smaller $K$, the smaller the variance (but the larger the bias);
  - the larger $K$, the smaller the bias (but the larger the variance);
  - extreme cases:
    - $K = 2$: half of the observations for training, half for testing;
    - $K = N$: leave-one-out cross-validation (LOOCV);
  - LOOCV gives an approximately unbiased estimate of the expected test error;
  - LOOCV has very large variance (the "training sets" are very similar to one another);
- usual choices are $K = 5$ and $K = 10$.

Cross-Validation: further aspects

If we want to select a tuning parameter $\alpha$ (e.g., the number of neighbours):

- train $\hat f^{-k}(X, \alpha)$ for each $\alpha$;
- compute $\mathrm{CV}(\hat f, \alpha) = \frac{1}{K} \sum_{k=1}^{K} \frac{1}{|F_k|} \sum_{i \in F_k} L(y_i, \hat f^{-k}(x_i, \alpha))$;
- obtain $\hat\alpha = \operatorname{argmin}_{\alpha} \mathrm{CV}(\hat f, \alpha)$.

The generalized cross-validation (GCV),

$$\mathrm{GCV}(\hat f) = \frac{1}{N} \sum_{i=1}^{N} \left[ \frac{y_i - \hat f(x_i)}{1 - \operatorname{trace}(S)/N} \right]^2,$$

- is a convenient approximation of LOOCV for linear fitting under squared-error loss;
- has computational advantages.

Cross-Validation: the wrong and the right way to do cross-validation

Consider the following procedure:

1. find a subset of good (= most correlated with the outcome) predictors;
2. use the selected predictors to build a classifier;
3. use cross-validation to compute the prediction error.

Practical example (see R file):

- generate $X$, an $[N = 50] \times [p = 5000]$ data matrix;
- generate independently $y_i$, $i = 1, \dots, 50$, $y_i \in \{0, 1\}$;
- the true test error is $0.50$;
- implement the procedure above. What happens?
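The practical example can be imitated with a small simulation. The sketch below is not the R file referred to in the slides; it is a plain-Python stand-in under stated assumptions: Gaussian predictors, balanced labels shuffled independently of the predictors, 100 selected predictors, a 1-nearest-neighbour classifier and 5 folds. All function names are hypothetical. It compares the three-step procedure above (screening on all data, then cross-validating) with a variant that repeats the screening inside each training fold.

```python
import random

def abs_corr(col, y, rows):
    """Absolute sample correlation between one predictor and y on the given rows."""
    xs = [col[i] for i in rows]
    ys = [y[i] for i in rows]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - my) ** 2 for b in ys)
    return abs(sxy) / (sxx * syy) ** 0.5

def select(X_cols, y, rows, m):
    """Indices of the m predictors most correlated with y on the given rows."""
    scores = [abs_corr(col, y, rows) for col in X_cols]
    return sorted(range(len(X_cols)), key=lambda j: -scores[j])[:m]

def one_nn(X_cols, y, feats, train, i):
    """1-nearest-neighbour prediction for observation i on the selected predictors."""
    def dist(a):
        return sum((X_cols[j][a] - X_cols[j][i]) ** 2 for j in feats)
    return y[min(train, key=dist)]

def cv_error(X_cols, y, K, m, select_inside):
    """K-fold CV misclassification rate; screening on all data or per training fold."""
    idx = list(range(len(y)))
    random.Random(0).shuffle(idx)
    folds = [idx[k::K] for k in range(K)]
    # Screening on ALL observations, test folds included, when select_inside is False.
    feats_all = None if select_inside else select(X_cols, y, idx, m)
    errors = 0
    for fold in folds:
        held = set(fold)
        train = [i for i in idx if i not in held]
        feats = select(X_cols, y, train, m) if select_inside else feats_all
        errors += sum(one_nn(X_cols, y, feats, train, i) != y[i] for i in fold)
    return errors / len(y)

rng = random.Random(42)
N, p, m = 50, 5000, 100  # m = 100 selected predictors is an assumption
X_cols = [[rng.gauss(0, 1) for _ in range(N)] for _ in range(p)]  # stored by column
y = [0, 1] * (N // 2)
rng.shuffle(y)  # labels are independent of X, so the true test error is 0.5

wrong = cv_error(X_cols, y, K=5, m=m, select_inside=False)
right = cv_error(X_cols, y, K=5, m=m, select_inside=True)
print(wrong, right)
```

With screening done on all observations the estimated error should come out far below 0.5, while screening inside each training fold should give an estimate much closer to the true value; the exact numbers depend on the random seed.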

Cross-Validation: the wrong and the right way to do cross-validation

Why is it not correct?

- training and test sets are NOT independent!
- observations in the test sets are used twice.

Correct way to proceed:

- divide the sample into $K$ folds;
- perform both the variable selection and the construction of the classifier using observations from $K - 1$ folds;
  - the possible choice of a tuning parameter is included here;
- compute the prediction error on the remaining fold.

Bootstrap Methods: bootstrap

IDEA: generate pseudo-samples from the empirical distribution function computed on the original sample;

- by sampling with replacement from the original dataset;
- mimic new experiments.

Let $Z = \{\underbrace{(y_1, x_1)}_{z_1}, \dots, \underbrace{(y_N, x_N)}_{z_N}\}$ be the training set:

- by sampling with replacement, $Z^*_1 = \{\underbrace{(y^*_1, x^*_1)}_{z^*_1}, \dots, \underbrace{(y^*_N, x^*_N)}_{z^*_N}\}$;
- ...
- by sampling with replacement, $Z^*_B = \{\underbrace{(y^*_1, x^*_1)}_{z^*_1}, \dots, \underbrace{(y^*_N, x^*_N)}_{z^*_N}\}$;
- use the $B$ bootstrap samples $Z^*_1, \dots, Z^*_B$ to estimate any aspect of the distribution of a map $S(Z)$.

For example, to estimate the variance of $S(Z)$,

$$\widehat{\mathrm{Var}}[S(Z)] = \frac{1}{B - 1} \sum_{b=1}^{B} \left( S(Z^*_b) - \bar S^* \right)^2,$$

where $\bar S^* = \frac{1}{B} \sum_{b=1}^{B} S(Z^*_b)$.

Note that:

- $\widehat{\mathrm{Var}}[S(Z)]$ is the Monte Carlo estimate of $\mathrm{Var}[S(Z)]$ under sampling from the empirical distribution $\hat F$.
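The bootstrap variance estimate can be sketched with the standard library alone. The function name `bootstrap_variance`, the simulated sample and the choice of the mean and the median as the map $S$ are assumptions made for illustration. For the mean, the result can be checked against the usual $s^2 / N$.

```python
import random
import statistics

def bootstrap_variance(z, stat, B=1000, seed=0):
    """Monte Carlo estimate of Var[S(Z)] under sampling from the empirical distribution."""
    rng = random.Random(seed)
    n = len(z)
    # Each bootstrap sample Z*_b draws n observations with replacement from z.
    reps = [stat(rng.choices(z, k=n)) for _ in range(B)]
    s_bar = sum(reps) / B
    return sum((s - s_bar) ** 2 for s in reps) / (B - 1)

# Simulated sample (assumption): 200 standard normal observations.
rng = random.Random(3)
z = [rng.gauss(0, 1) for _ in range(200)]

var_mean = bootstrap_variance(z, statistics.fmean)
var_median = bootstrap_variance(z, statistics.median)
analytic_var_mean = statistics.variance(z) / len(z)

print(var_mean, analytic_var_mean)  # the two values should be close
print(var_median)                   # no simple closed form to compare against
```

The median illustrates the appeal of the approach: the same code applies to statistics whose variance has no convenient formula.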

Bootstrap Methods: estimate prediction error

Very simple:

- generate $B$ bootstrap samples $Z^*_1, \dots, Z^*_B$;
- apply the prediction rule to each bootstrap sample to derive the predictions $\hat f^*_b(x_i)$, $b = 1, \dots, B$;
- compute the error for each point, and take the average,

$$\widehat{\mathrm{Err}}_{\mathrm{boot}} = \frac{1}{B} \sum_{b=1}^{B} \frac{1}{N} \sum_{i=1}^{N} L(y_i, \hat f^*_b(x_i)).$$

Is it correct? NO!!!

Again, training and test set are NOT independent!

Bootstrap Methods: example

Consider a classification problem:

- two classes with the same number of observations;
- predictors and class label independent $\Rightarrow \mathrm{Err} = 0.5$.

Using the 1-nearest neighbour:

- if $y_i \in Z^*_b$, then $\widehat{\mathrm{Err}} = 0$;
- if $y_i \notin Z^*_b$, then $\widehat{\mathrm{Err}} = 0.5$.

Therefore,

$$\widehat{\mathrm{Err}}_{\mathrm{boot}} = 0 \times \Pr[Y_i \in Z^*_b] + 0.5 \times \underbrace{\Pr[Y_i \notin Z^*_b]}_{0.368} = 0.184.$$

Bootstrap Methods: why 0.368

$$\Pr[\text{observation } i \text{ does not belong to the bootstrap sample } b] = 0.368$$

Since

$$\Pr[Z^*_{b[j]} \neq y_i] = \frac{N - 1}{N}$$

is true for each position $[j]$, then

$$\Pr[Y_i \notin Z^*_b] = \left( \frac{N - 1}{N} \right)^N \xrightarrow{N \to \infty} e^{-1} \approx 0.368.$$

Consequently,

$$\Pr[\text{observation } i \text{ is in the bootstrap sample } b] \approx 0.632.$$

Bootstrap Methods: correct estimate prediction error

Note:

- each bootstrap sample has $N$ observations;
- some of the original observations are included more than once;
- some of them (on average, $0.368 N$) are not included at all;
  - these are not used to compute the predictions;
  - they can be used as a test set,

$$\widehat{\mathrm{Err}}^{(1)} = \frac{1}{N} \sum_{i=1}^{N} \frac{1}{|C^{[-i]}|} \sum_{b \in C^{[-i]}} L(y_i, \hat f^*_b(x_i)),$$

where $C^{[-i]}$ is the set of indices of the bootstrap samples which do not contain observation $i$, and $|C^{[-i]}|$ denotes its cardinality.
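The contrast between the naive bootstrap estimate and the leave-one-out bootstrap estimate $\widehat{\mathrm{Err}}^{(1)}$ can be checked numerically on the 1-nearest-neighbour example. The sketch below rests on assumptions not in the slides: a single continuous predictor, $N = 100$, $B = 100$, balanced labels assigned independently of the predictor, and hypothetical function names.

```python
import math
import random

def one_nn_predict(train_x, train_y, x0):
    """Label of the training point closest to x0 (one-dimensional predictor)."""
    j = min(range(len(train_x)), key=lambda a: abs(train_x[a] - x0))
    return train_y[j]

def bootstrap_errors(x, y, B=100, seed=0):
    """Return (naive bootstrap error, leave-one-out bootstrap error) under 0-1 loss."""
    rng = random.Random(seed)
    n = len(y)
    naive_losses = 0
    oob_losses = [0] * n  # summed loss for i over the samples that do not contain i
    oob_counts = [0] * n  # |C^[-i]|
    for _ in range(B):
        sample = [rng.randrange(n) for _ in range(n)]  # sampling with replacement
        in_sample = set(sample)
        bx = [x[i] for i in sample]
        by = [y[i] for i in sample]
        for i in range(n):
            loss = int(one_nn_predict(bx, by, x[i]) != y[i])
            naive_losses += loss
            if i not in in_sample:
                oob_losses[i] += loss
                oob_counts[i] += 1
    err_boot = naive_losses / (B * n)
    # Observations contained in every bootstrap sample (very unlikely) are skipped.
    per_obs = [l / c for l, c in zip(oob_losses, oob_counts) if c > 0]
    err_1 = sum(per_obs) / len(per_obs)
    return err_boot, err_1

n = 100
p_out = (1 - 1 / n) ** n  # Pr[observation i is not in bootstrap sample b]
print(p_out, math.exp(-1))

rng = random.Random(7)
x = [rng.gauss(0, 1) for _ in range(n)]
y = [i % 2 for i in range(n)]  # balanced labels, independent of x: Err = 0.5

err_boot, err_1 = bootstrap_errors(x, y)
print(err_boot, err_1)  # roughly 0.5 * 0.368 versus roughly 0.5
```

The naive estimate is pulled towards zero because every observation that appears in a bootstrap sample is its own nearest neighbour there; restricting to samples that do not contain the observation removes that effect.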

Bootstrap Methods: 0.632 bootstrap

Issue:

- the average number of unique observations in the bootstrap sample is $0.632 N$, which is not so far from the $0.5 N$ of 2-fold CV;
- bias issues similar to those of 2-fold CV;
- $\widehat{\mathrm{Err}}^{(1)}$ slightly overestimates the prediction error.

To solve this, the 0.632 bootstrap estimator has been developed,

$$\widehat{\mathrm{Err}}^{(0.632)} = 0.368\, \overline{\mathrm{err}} + 0.632\, \widehat{\mathrm{Err}}^{(1)},$$

where $\overline{\mathrm{err}}$ is the training error.

- in practice it works well;
- in case of strong overfit, it can break down;
  - consider again the previous classification problem example;
  - with 1-nearest neighbour, $\overline{\mathrm{err}} = 0$;
  - $\widehat{\mathrm{Err}}^{(0.632)} = 0.632\, \widehat{\mathrm{Err}}^{(1)} = 0.632 \times 0.5 = 0.316 \neq 0.5$.

Bootstrap Methods: 0.632+ bootstrap

Further improvement, 0.632+ bootstrap:

- based on the no-information error rate $\gamma$;
- $\gamma$ takes into account the amount of overfitting;
- $\gamma$ is the error rate if predictors and response were independent;
- computed by considering all combinations of $x_i$ and $y_i$,

$$\hat\gamma = \frac{1}{N} \sum_{i=1}^{N} \frac{1}{N} \sum_{i'=1}^{N} L(y_i, \hat f(x_{i'})).$$

The quantity $\hat\gamma$ is used to estimate the relative overfitting rate,

$$\hat R = \frac{\widehat{\mathrm{Err}}^{(1)} - \overline{\mathrm{err}}}{\hat\gamma - \overline{\mathrm{err}}},$$

which is then used in the 0.632+ bootstrap estimator,

$$\widehat{\mathrm{Err}}^{(0.632+)} = (1 - \hat w)\, \overline{\mathrm{err}} + \hat w\, \widehat{\mathrm{Err}}^{(1)},$$

where

$$\hat w = \frac{0.632}{1 - 0.368\, \hat R}.$$

Methods using Derived Input Directions: summary

- Principal Components Regression
- Partial Least Squares
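Returning to the 0.632 and 0.632+ estimators, the formulas can be transcribed directly and applied to the 1-nearest-neighbour example ($\overline{\mathrm{err}} = 0$, $\widehat{\mathrm{Err}}^{(1)} = 0.5$). The function names are hypothetical, and the sketch is a literal transcription of the formulas without safeguards (for instance, it does not handle $\hat\gamma = \overline{\mathrm{err}}$). It assumes balanced labels and uses the fact that a 1-nearest-neighbour rule fitted on the full data predicts each training label exactly.

```python
def no_information_rate(y, preds, loss):
    """gamma-hat: average loss over all combinations of responses and predictions."""
    n = len(y)
    return sum(loss(yi, pj) for yi in y for pj in preds) / (n * n)

def err_632(err_bar, err_1):
    """0.632 bootstrap estimator."""
    return 0.368 * err_bar + 0.632 * err_1

def err_632_plus(err_bar, err_1, gamma):
    """0.632+ bootstrap estimator, via the relative overfitting rate R-hat."""
    r_hat = (err_1 - err_bar) / (gamma - err_bar)
    w_hat = 0.632 / (1 - 0.368 * r_hat)
    return (1 - w_hat) * err_bar + w_hat * err_1

def zero_one_loss(y_true, y_pred):
    return int(y_true != y_pred)

# 1-nearest-neighbour example: balanced classes, labels independent of predictors.
y = [0, 1] * 25
preds = list(y)   # 1-NN fitted on the full data reproduces every training label
err_bar = 0.0     # training error
err_1 = 0.5       # leave-one-out bootstrap error, value taken from the example

gamma = no_information_rate(y, preds, zero_one_loss)
print(gamma)                                            # no-information rate, 0.5 here
print(round(err_632(err_bar, err_1), 3))                # 0.316, as in the example
print(round(err_632_plus(err_bar, err_1, gamma), 3))    # 0.5
```

Here $\hat R = 1$, so $\hat w = 0.632 / 0.632 = 1$ and the 0.632+ estimator falls back entirely on $\widehat{\mathrm{Err}}^{(1)}$, recovering the value 0.5 that the 0.632 estimator misses.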
