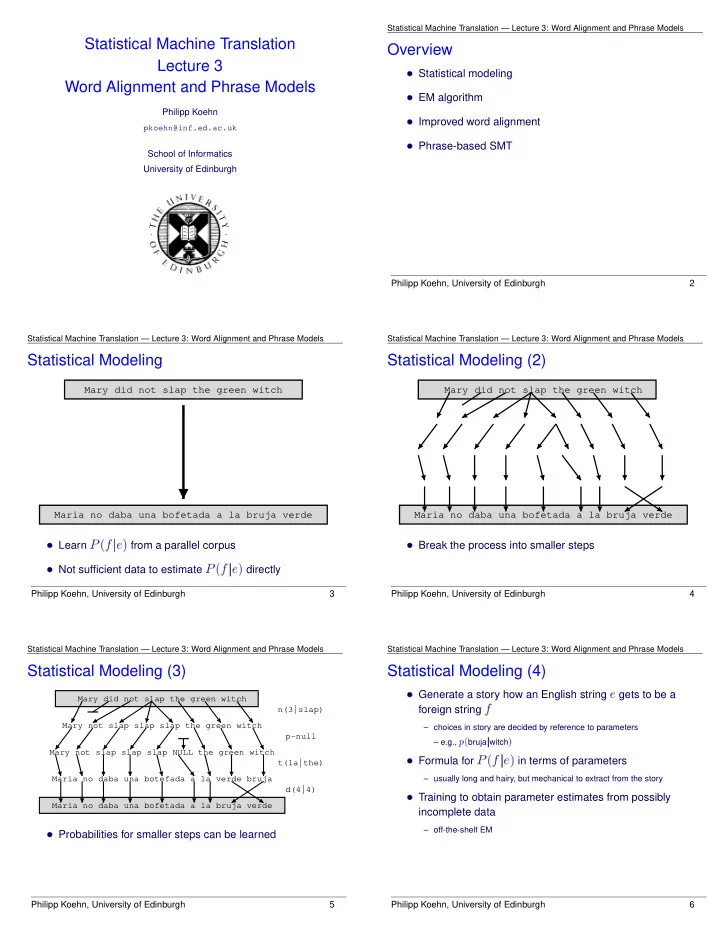

� Statistical modeling Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p Statistical Machine Translation Overview p � EM algorithm Lecture 3 � Improved word alignment Word Alignment and Phrase Models � Phrase-based SMT Philipp Koehn pkoehn@inf.ed.ac.uk School of Informatics University of Edinburgh – p.1 – p.2 Philipp Koehn, University of Edinburgh 2 Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p Statistical Modeling p Statistical Modeling (2) p Mary did not slap the green witch Mary did not slap the green witch � Learn P ( f j e ) from a parallel corpus � Break the process into smaller steps Maria no daba una bofetada a la bruja verde Maria no daba una bofetada a la bruja verde � Not sufficient data to estimate P ( f j e ) directly – p.3 – p.4 Philipp Koehn, University of Edinburgh 3 Philipp Koehn, University of Edinburgh 4 � Generate a story how an English string e gets to be a Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p f Statistical Modeling (3) p Statistical Modeling (4) p p ( bruja j witch ) Mary did not slap the green witch n(3|slap) foreign string � Formula for P ( f j e ) in terms of parameters Mary not slap slap slap the green witch – choices in story are decided by reference to parameters p-null – e.g., � Training to obtain parameter estimates from possibly Mary not slap slap slap NULL the green witch t(la|the) Maria no daba una botefada a la verde bruja – usually long and hairy, but mechanical to extract from the story � Probabilities for smaller steps can be learned d(4|4) Maria no daba una bofetada a la bruja verde incomplete data – off-the-shelf EM – p.5 – p.6 Philipp Koehn, University of Edinburgh 5 Philipp Koehn, University of Edinburgh 6

� Incomplete data Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p Parallel Corpora p EM Algorithm p ... la maison ... la maison blue ... la fleur ... � EM in a nutshell – if we had complete data, would could estimate model � Incomplete data – if we had model, we could fill in the gaps in the data ... the house ... the blue house ... the flower ... � Chicken and egg problem – initialize model parameters (e.g. uniform) – assign probabilities to the missing data – English and foreign words, but no connections between them – estimate model parameters from completed data – iterate – if we had the connections, we could estimate the parameters of our generative story – if we had the parameters, we could estimate the connections – p.7 – p.8 Philipp Koehn, University of Edinburgh 7 Philipp Koehn, University of Edinburgh 8 Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p EM Algorithm (2) p EM Algorithm (3) p ... la maison ... la maison blue ... la fleur ... ... la maison ... la maison blue ... la fleur ... � Initial step: all connections equally likely � After one iteration ... the house ... the blue house ... the flower ... ... the house ... the blue house ... the flower ... � Model learns that, e.g., la is often connected with the � Connections, e.g., between la and the are more likely – p.9 – p.10 Philipp Koehn, University of Edinburgh 9 Philipp Koehn, University of Edinburgh 10 Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p EM Algorithm (4) p EM Algorithm (5) p ... la maison ... la maison bleu ... la fleur ... ... la maison ... la maison bleu ... la fleur ... � After another iteration � Convergence ... the house ... the blue house ... the flower ... ... the house ... the blue house ... the flower ... � It becomes apparent that connections, e.g., between fleur � Inherent hidden structure revealed by EM and flower are more likely (pigeon hole principle) – p.11 – p.12 Philipp Koehn, University of Edinburgh 11 Philipp Koehn, University of Edinburgh 12

Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p m Y � p ( e ; a j f ) = t ( e j f ) EM Algorithm (6) p IBM Model 1 p j a ( j ) m ( l + 1) j =1 ... la maison ... la maison bleu ... la fleur ... � What is going on? f :::f 1 m e :::e 1 l ... the house ... the blue house ... the flower ... e f j is generated by a English word a ( j ) , as defined – foreign sentence f = a , with the probabilty t – English sentence e = � is required to turn the formula into a proper p(la|the) = 0.453 – each English word p(le|the) = 0.334 by the alignment function p(maison|house) = 0.876 � Parameter estimation from the connected corpus p(bleu|blue) = 0.563 – the normalization factor ... probability function – p.13 – p.14 Philipp Koehn, University of Edinburgh 13 Philipp Koehn, University of Edinburgh 14 � EM Algorithm consists of two steps Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p One example p IBM Model 1 and EM p � Expectation-Step: Apply model to the data e t ( e j f ) e t ( e j f ) e t ( e j f ) e t ( e j f ) das haus ist klein the house is small � Maximization-Step: Estimate model from data das Haus ist klein – parts of the model are hidden (here: alignments) – using the model, assign probabilities to possible values the 0.7 house 0.8 is 0.8 small 0.4 that 0.15 building 0.16 ’s 0.16 little 0.4 � which 0.075 p ( e; a j f home ) = � 0.02 t ( the j das ) ? � t ( house 0.02 j Haus short ) 0.1 3 – take assign values as fact 4 who 0.05 household 0.015 ? 0.015 minor 0.06 � Iterate these steps until convergence � t ( is j ist ) � t ( small j klein ) – collect counts (weighted by probabilities) this 0.025 shell 0.005 ? 0.005 petty 0.04 � = � 0 : 7 � 0 : 8 � 0 : 8 � 0 : 4 – estimate model from counts 3 4 = 0 : 0256 � – p.15 – p.16 Philipp Koehn, University of Edinburgh 15 Philipp Koehn, University of Edinburgh 16 � We need to be able to compute: Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p Statistical Machine Translation — Lecture 3: Word Alignment and Phrase Models p � We need to compute p ( a j e ; f ) IBM Model 1 and EM p IBM Model 1 and EM: Expectation Step p � Applying the chain rule: p ( a j e ; f ) = p ( e ; a j f ) =p ( e j f ) – Expectation-Step: probability of alignments – Maximization-Step: count collection � We already have the formula for p ( e ; a j f ) (definition of Model 1) – p.17 – p.18 Philipp Koehn, University of Edinburgh 17 Philipp Koehn, University of Edinburgh 18

Recommend

More recommend