Speaker Recognition

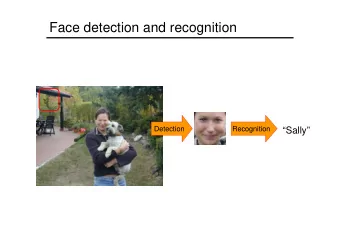

Najim Dehak
Center for Language and Speech Processing
Johns Hopkins University

Special Thanks: Paola García, Jesús Villalba, Lukas Burget, Fei Wu

Introduction to HLT 09/18/2019

Roadmap

• Introduction
  – Terminology, tasks, and framework
• Low-Dimensional Representation
  – Sequence of features: GMM
  – Low-dimensional vectors: i-vectors
  – Processing i-vectors: inter-session variability compensation and scoring
  – X-vectors
• Applications
  – Speaker verification

Extracting Information from Speech

Goal: Automatically extract the information transmitted in the speech signal.

[Figure: one speech signal feeds four tasks, each shown with an example output]

• Speech Recognition → Words: “How are you?”
• Language Recognition → Language Name: English
• Speaker Recognition → Speaker Name: James Wilson
• Speaker Diarization → Who Speaks When: “Bob: Meeting tonight? Alice: yes!”

Identification

• Determine whether a test speaker (language) matches one of a set of known speakers (languages)
• One-to-many mapping
• Often assumed that the unknown voice must come from a set of known speakers – referred to as closed-set identification
• Adding an “unknown class” option to closed-set identification gives open-set identification

[Figure: “Whose voice is this?” / “Which language is this?”]

Verification/Authentication

• Determine whether a test speaker (language) matches a specific speaker (language)
• One-to-one mapping
• Unknown speech could come from a large set of unknown speakers (languages) – referred to as open-set verification

[Figure: “Is this Bob’s voice?” / “Is this German?”]
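The difference between the identification and verification tasks above can be illustrated with a small sketch. It assumes that some upstream system has already produced one score per known speaker (higher means a better match); the function names, the score dictionary, and the threshold values are hypothetical and not taken from the slides.

```python
def identify_closed_set(scores):
    """Closed-set identification: the answer is always one of the known speakers."""
    return max(scores, key=scores.get)


def identify_open_set(scores, threshold):
    """Open-set identification: best known speaker, or None for the 'unknown class'."""
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else None


def verify(claimed_score, threshold):
    """Verification: one-to-one accept/reject decision for a claimed identity."""
    return claimed_score >= threshold


# Hypothetical scores for one test utterance against two enrolled speakers.
scores = {"Bob": 1.2, "Sally": -0.3}
closed = identify_closed_set(scores)            # always names a known speaker
opened = identify_open_set(scores, threshold=2.0)  # may reject everyone
accepted = verify(scores["Bob"], threshold=0.0)    # "Is this Bob's voice?"
```

Identification compares the test utterance against every known model, while verification only tests the single claimed identity against a threshold.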

Diarization: Segmentation and Clustering

• Diarization answers the question: Who speaks when?
• Involves:
  – Determine when a speaker change has occurred in the speech signal (segmentation)
  – Group together speech segments corresponding to the same speaker (clustering)
• Prior speaker information may or may not be available

[Figure: “Where are speaker changes?” / “Which segments are from the same speaker?”, with segments labeled Speaker A and Speaker B]

Speech Modalities

Application dictates different speech modalities:

Text-dependent
• Recognition system knows text spoken by person
• Examples: fixed phrase, prompted phrase
• Used for applications with strong control over user input
• Knowledge of spoken text can improve system performance

Text-independent
• Recognition system does not know text spoken by person
• Examples: user-selected phrase, conversational speech
• Used for applications with less control over user input
• More flexible system but also more difficult problem

• Speech recognition can provide knowledge of spoken text

Framework for Speaker/Language Recognition Systems

Training Phase

[Figure: “Known train” input, e.g. Bob (English), Sally (Spanish) → Feature extraction → Training algorithm (with algorithm parameters) → Model for each speaker (language)]

Recognition Phase

[Figure: “Unknown test” input → Feature extraction → Recognition algorithm, using the speaker/language set → Decision]

Information in Speech

• Speech is a time-varying signal conveying multiple layers of information
  – Words
  – Speaker
  – Language
  – Emotion
• Information in speech is observed in the time and frequency domains

[Figure: speech shown in the time and frequency domains; axes Time (sec) and Frequency (Hz)]

Feature Extraction from Speech

• A time sequence of features is needed to capture speech information
  – Typically, spectrum-based features are extracted using a sliding window: 20 ms window, 10 ms shift
• Produces the time-frequency evolution of the spectrum

[Figure: windowed frames → Fourier Transform → Magnitude, giving a spectrogram; axes Time (sec) and Frequency (Hz)]
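The sliding-window analysis described above (20 ms window, 10 ms shift, Fourier transform, magnitude) can be sketched with NumPy as follows. This is a minimal illustration rather than the front end used in the lecture: the function name, the 16 kHz sample rate in the usage lines, and the Hamming taper are assumptions added here.

```python
import numpy as np


def magnitude_spectrogram(signal, sample_rate, win_ms=20.0, shift_ms=10.0):
    """Return an array of shape (n_frames, n_bins) of short-time magnitude spectra."""
    signal = np.asarray(signal, dtype=float)
    win = int(round(sample_rate * win_ms / 1000.0))      # samples per window
    shift = int(round(sample_rate * shift_ms / 1000.0))  # samples per shift
    if len(signal) < win:
        raise ValueError("signal is shorter than one analysis window")
    n_frames = 1 + (len(signal) - win) // shift
    # Row k holds the sample indices of frame k.
    idx = np.arange(win)[None, :] + shift * np.arange(n_frames)[:, None]
    # Hamming taper is an assumption; the slides do not name a window shape.
    frames = signal[idx] * np.hamming(win)
    return np.abs(np.fft.rfft(frames, axis=1))


# Usage: one second of a 1 kHz tone sampled at 16 kHz (hypothetical input).
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
spec = magnitude_spectrogram(np.sin(2 * np.pi * 1000.0 * t), sample_rate)
```

With a 10 ms shift the frame rate is about 100 vectors per second, and each row of `spec` is one column of the time-frequency picture.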

Cepstral Features

[Figure: each windowed frame passes through Fourier Transform → Magnitude → Log() → Cosine transform, producing cepstral feature vectors at 100 vec/sec; example vector values: 3.4, 3.6, 2.1, 0.0, -0.9, 0.3, .1]

Modeling Sequence of Features: Gaussian Mixture Models

• For most recognition tasks, we need to model the distribution of feature vector sequences
• In practice, we often use Gaussian Mixture Models (GMMs).

[Figure: many training utterances are mapped from signal space to feature space (100 vec/sec) and modeled by a GMM]

Gaussian Mixture Models

• A GMM is a weighted sum of Gaussian distributions

$$p(x \mid \lambda_s) = \sum_{i=1}^{M} p_i \, b_i(x)$$

$$\lambda_s = (p_i, \mu_i, \Sigma_i)$$

  – $p_i$ = mixture weight (Gaussian prior probability)
  – $\mu_i$ = mixture mean vector
  – $\Sigma_i$ = mixture covariance matrix

$$b_i(x) = \frac{1}{(2\pi)^{D/2} \, |\Sigma_i|^{1/2}} \exp\left( -\frac{1}{2} (x - \mu_i)' \, \Sigma_i^{-1} (x - \mu_i) \right)$$

where $D$ is the dimension of the feature vector $x$.

Gaussian Mixture Models: Log Likelihood

• To build a GMM, we need to do two things
  1 – Compute the likelihood of a sequence of features given a GMM
  2 – Estimate the parameters of a GMM given a set of feature vectors
• If we assume independence between feature vectors in a sequence, then we can compute the likelihood as

$$p(x_1, \ldots, x_N \mid \lambda) = \prod_{n=1}^{N} p(x_n \mid \lambda)$$
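The mixture density and the independence assumption above translate into a short computation. The sketch below is one possible way to evaluate the log of the sequence likelihood with NumPy for full covariance matrices; the function name and argument layout are assumptions made for illustration, and the log-sum-exp step is a standard numerical safeguard rather than something stated on the slides.

```python
import numpy as np


def gmm_log_likelihood(X, weights, means, covs):
    """Sum over frames of log( sum_i p_i * b_i(x_n) ) for a full-covariance GMM.

    X: (N, D) feature vectors; weights: M values; means: M vectors of length D;
    covs: M matrices of shape (D, D).
    """
    X = np.atleast_2d(np.asarray(X, dtype=float))
    n_frames, dim = X.shape
    log_terms = np.empty((n_frames, len(weights)))
    for i, (p_i, mu_i, cov_i) in enumerate(zip(weights, means, covs)):
        diff = X - mu_i
        _, logdet = np.linalg.slogdet(cov_i)                           # log |Sigma_i|
        maha = np.sum(diff * np.linalg.solve(cov_i, diff.T).T, axis=1)  # (x-mu)' Sigma^-1 (x-mu)
        log_terms[:, i] = np.log(p_i) - 0.5 * (dim * np.log(2 * np.pi) + logdet + maha)
    # log-sum-exp over mixture components, computed stably per frame
    peak = log_terms.max(axis=1, keepdims=True)
    frame_ll = peak[:, 0] + np.log(np.exp(log_terms - peak).sum(axis=1))
    # Independence between frames: the product of likelihoods becomes a sum of logs.
    return float(frame_ll.sum())


# Usage: two identical unit-variance components in one dimension (toy values).
ll = gmm_log_likelihood(
    X=[[0.0], [0.0]],
    weights=[0.5, 0.5],
    means=[[0.0], [0.0]],
    covs=[[[1.0]], [[1.0]]],
)
```

Working in the log domain avoids the underflow that the raw product of many small per-frame likelihoods would cause on long utterances.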

Gaussian Mixture Models: Log Likelihood

• Using a GMM involves two things:
  1 – Compute the likelihood of a sequence of features given a GMM
  2 – Estimate the parameters of a GMM given a set of feature vectors
• If we assume independence between feature vectors in a sequence, then we can compute the likelihood as

$$p(x_1, \ldots, x_N \mid \lambda) = \prod_{n=1}^{N} p(x_n \mid \lambda)$$

• Usually written as log likelihood

$$\log p(x_1, \ldots, x_N \mid \lambda) = \sum_{n=1}^{N} \log p(x_n \mid \lambda) = \sum_{n=1}^{N} \log \left( \sum_{i=1}^{M} p_i \, b_i(x_n) \right)$$

Gaussian Mixture Models: Parameter Estimation

• GMM parameters are estimated by maximizing the likelihood on a set of training vectors

$$\lambda^{*} = \arg\max_{\lambda} \sum_{n=1}^{N} \log p(x_n \mid \lambda)$$

• Setting the derivatives with respect to model parameters to zero and solving

$$\Pr(i \mid x_n) = \frac{p_i \, b_i(x_n)}{\sum_{j=1}^{M} p_j \, b_j(x_n)}, \qquad n_i = \sum_{n=1}^{N} \Pr(i \mid x_n)$$

$$p_i = \frac{1}{N} \sum_{n=1}^{N} \Pr(i \mid x_n)$$

$$\mu_i = \frac{1}{n_i} \sum_{n=1}^{N} \Pr(i \mid x_n) \, x_n$$

$$\Sigma_i = \frac{1}{n_i} \sum_{n=1}^{N} \Pr(i \mid x_n) \, x_n x_n' - \mu_i \mu_i'$$

Gaussian Mixture Models: Expectation Maximization (EM)

E-Step: probabilistically align vectors to model

$$\Pr(i \mid x_n) = \frac{p_i \, b_i(x_n)}{\sum_{j=1}^{M} p_j \, b_j(x_n)}$$

Accumulate sufficient statistics

$$n_i = \sum_{n=1}^{N} \Pr(i \mid x_n), \qquad E_i(x) = \sum_{n=1}^{N} \Pr(i \mid x_n) \, x_n, \qquad E_i(xx') = \sum_{n=1}^{N} \Pr(i \mid x_n) \, x_n x_n'$$

M-Step: update model parameters

$$p_i = \frac{n_i}{N}, \qquad \mu_i = \frac{1}{n_i} E_i(x), \qquad \Sigma_i = \frac{1}{n_i} E_i(xx') - \mu_i \mu_i'$$

Detection System: GMM-UBM

• Realization of log-likelihood ratio test from signal detection theory

$$\mathrm{LLR} = \Lambda = \log p(X \mid \text{target}) - \log p(X \mid \overline{\text{target}})$$

where $\overline{\text{target}}$ is the non-target hypothesis, represented by the background model.

[Figure: $x_1, \ldots, x_N$ → Feature Extraction → Target model (+) and Background model (−) → Σ → Λ; Λ > θ: Accept, Λ < θ: Reject]

• GMMs used for both target and background model
  – Target model trained using enrollment speech
  – Background model trained using speech from many speakers (often referred to as Universal Background Model – UBM)

MAP Adaptation

• Target model is often trained by adapting from background model
  – Couples models together and helps with limited target training data
• Maximum A Posteriori (MAP) Adaptation (similar to EM)
  – Align target training vectors to UBM
  – Accumulate sufficient statistics
  – Update target model parameters with smoothing to UBM parameters
• Adaptation only updates parameters representing acoustic events seen in target training data
  – Sparse regions of feature space filled in by UBM parameters
• Side benefits
  – Keeps correspondence between target and UBM mixtures (important later)
  – Allows for fast scoring when using many target models (top-M scoring)
