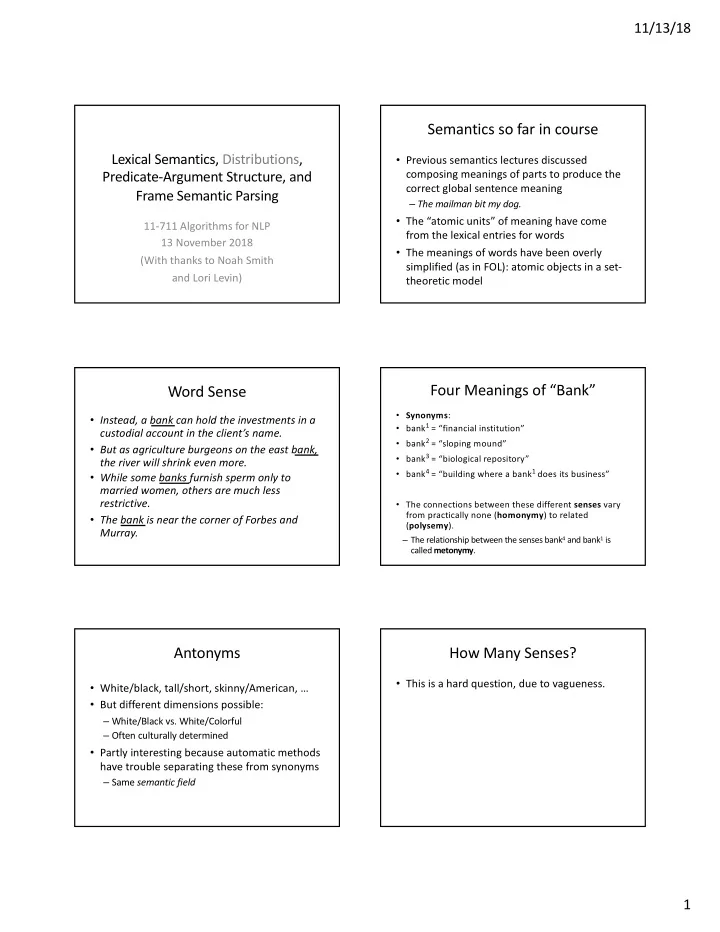

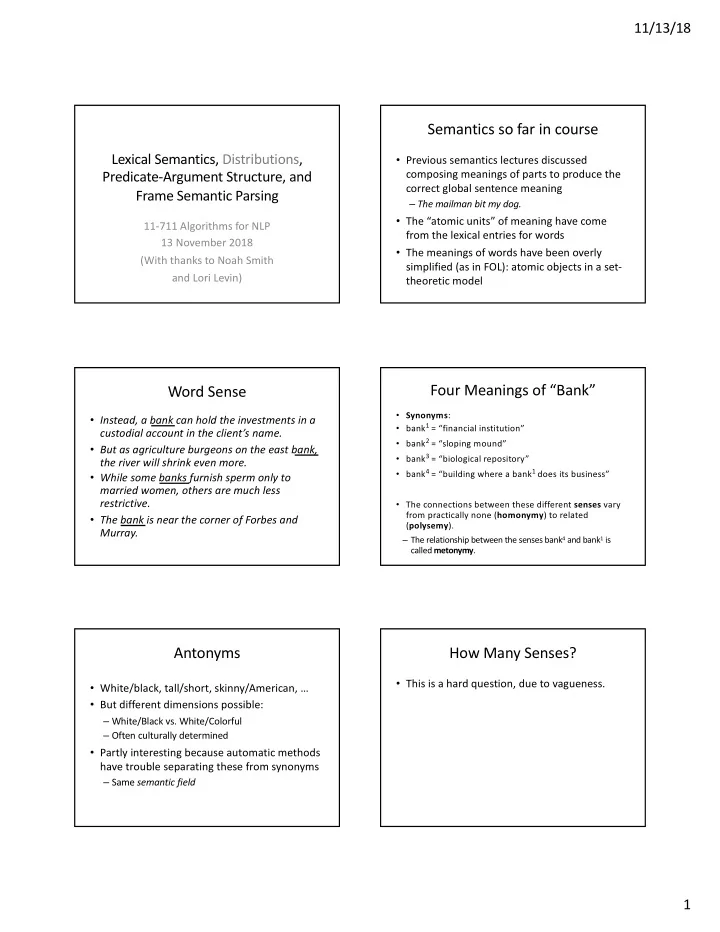

11/13/18

Lexical Semantics, Distributions, Predicate-Argument Structure, and Frame Semantic Parsing
11-711 Algorithms for NLP
13 November 2018
(With thanks to Noah Smith and Lori Levin)

Semantics so far in course
• Previous semantics lectures discussed composing meanings of parts to produce the correct global sentence meaning
  – The mailman bit my dog.
• The “atomic units” of meaning have come from the lexical entries for words
• The meanings of words have been overly simplified (as in FOL): atomic objects in a set-theoretic model

Four Meanings of “Bank”
• Synonyms:
• bank 1 = “financial institution”
• bank 2 = “sloping mound”
• bank 3 = “biological repository”
• bank 4 = “building where a bank 1 does its business”
• The connections between these different senses vary from practically none (homonymy) to related (polysemy).
  – The relationship between the senses bank 4 and bank 1 is called metonymy.

Word Sense
• Instead, a bank can hold the investments in a custodial account in the client’s name.
• But as agriculture burgeons on the east bank, the river will shrink even more.
• While some banks furnish sperm only to married women, others are much less restrictive.
• The bank is near the corner of Forbes and Murray.

Antonyms
• White/black, tall/short, skinny/American, …
• But different dimensions possible:
  – White/Black vs. White/Colorful
  – Often culturally determined
• Partly interesting because automatic methods have trouble separating these from synonyms
  – Same semantic field

How Many Senses?
• This is a hard question, due to vagueness.

Ambiguity vs. Vagueness
• Lexical ambiguity: My wife has two kids (children or goats?)
• vs. Vagueness: 1 sense, but indefinite: horse (mare, colt, filly, stallion, …) vs. kid:
  – I have two horses and George has three
  – I have two kids and George has three
• Verbs too: I ran last year and George did too
• vs. Reference: I, here, the dog not considered ambiguous in the same way

How Many Senses?
• This is a hard question, due to vagueness.
• Considerations:
  – Truth conditions (serve meat / serve time)
  – Syntactic behavior (serve meat / serve as senator)
  – Zeugma test:
    • #Does United serve breakfast and Pittsburgh?
    • ??She poaches elephants and pears.

Related Phenomena
• Homophones (would/wood, two/too/to)
  – Mary, merry, marry in some dialects, not others
• Homographs (bass/bass)

Word Senses and Dictionaries

Ontologies
• For NLP, databases of word senses are typically organized by lexical relations such as hypernym (IS-A) into a DAG
• This has been worked on for quite a while
• Aristotle’s classes (about 330 BC)
  – substance (physical objects)
  – quantity (e.g., numbers)
  – quality (e.g., being red)
  – Others: relation, place, time, position, state, action, affection

Word senses in WordNet3.0

Synsets
• (bass6, bass-voice1, basso2)
• (bass1, deep6) (Adjective)
• (chump1, fool2, gull1, mark9, patsy1, fall guy1, sucker1, soft touch1, mug2)

“Rough” Synonymy
• Jonathan Safran Foer’s Everything is Illuminated

Noun relations in WordNet3.0

Is a hamburger food?
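As a rough illustration of the Synsets slide, a synset can be modelled as a set of sense-tagged lemmas. The sketch below copies the three synsets listed on the slide. The names `SYNSETS`, `synsets_for`, and `rough_synonyms` are made up for this example and are not part of WordNet or any library.

```python
# Each synset is a set of (lemma, sense_number) pairs, copied from the Synsets slide.
# The variable and function names are hypothetical, chosen for this sketch only.
SYNSETS = [
    frozenset({("bass", 6), ("bass-voice", 1), ("basso", 2)}),
    frozenset({("bass", 1), ("deep", 6)}),  # adjective sense
    frozenset({("chump", 1), ("fool", 2), ("gull", 1), ("mark", 9), ("patsy", 1),
               ("fall guy", 1), ("sucker", 1), ("soft touch", 1), ("mug", 2)}),
]

def synsets_for(lemma):
    """Return every synset that contains some sense of `lemma`."""
    return [s for s in SYNSETS if any(l == lemma for l, _ in s)]

def rough_synonyms(lemma):
    """Collect the other lemmas that share at least one synset with `lemma`."""
    return sorted({l for s in synsets_for(lemma) for l, _ in s if l != lemma})
```

Calling `rough_synonyms("bass")` mixes the noun and adjective senses, which is one reason this kind of synonymy is only “rough”.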

Frame based Knowledge Rep.
• Organize relations around concepts
• Equivalent to (or weaker than) FOPC
  – Image from futurehumanevolution.com

Verb relations in WordNet3.0
• Not nearly as much information as nouns

Still no “real” semantics?
• Semantic primitives:
  Kill(x,y) = CAUSE(x, BECOME(NOT(ALIVE(y))))
  Open(x,y) = CAUSE(x, BECOME(OPEN(y)))
• Conceptual Dependency: PTRANS, ATRANS, …
  The waiter brought Mary the check
  PTRANS(x) ∧ ACTOR(x,Waiter) ∧ OBJECT(x,Check) ∧ TO(x,Mary)
  ∧ ATRANS(y) ∧ ACTOR(y,Waiter) ∧ OBJECT(y,Check) ∧ TO(y,Mary)

Word similarity
• Human language words seem to have real-valued semantic distance (vs. logical objects)
• Two main approaches:
  – Thesaurus-based methods
    • E.g., WordNet-based
  – Distributional methods
    • Distributional “semantics”, vector “semantics”
    • More empirical, but affected by more than semantic similarity (“word relatedness”)

Human-subject Word Associations

Stimulus: giraffe (number of different answers: 26; total count of all answers: 98)

| Response | Count | Proportion |
|---|---|---|
| NECK | 33 | 0.34 |
| ANIMAL | 9 | 0.09 |
| ZOO | 9 | 0.09 |
| LONG | 7 | 0.07 |
| TALL | 7 | 0.07 |
| SPOTS | 5 | 0.05 |
| LONG NECK | 4 | 0.04 |
| AFRICA | 3 | 0.03 |
| ELEPHANT | 2 | 0.02 |
| HIPPOPOTAMUS | 2 | 0.02 |
| LEGS | 2 | 0.02 |
| ... | | |

Stimulus: wall (number of different answers: 39; total count of all answers: 98)

| Response | Count | Proportion |
|---|---|---|
| BRICK | 16 | 0.16 |
| STONE | 9 | 0.09 |
| PAPER | 7 | 0.07 |
| GAME | 5 | 0.05 |
| BLANK | 4 | 0.04 |
| BRICKS | 4 | 0.04 |
| FENCE | 4 | 0.04 |
| FLOWER | 4 | 0.04 |
| BERLIN | 3 | 0.03 |
| CEILING | 3 | 0.03 |
| HIGH | 3 | 0.03 |
| STREET | 3 | 0.03 |
| ... | | |

From Edinburgh Word Association Thesaurus, http://www.eat.rl.ac.uk/

Thesaurus-based Word Similarity
• Simplest approach: path length
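The path-length idea from the Thesaurus-based Word Similarity slide can be sketched as a shortest-path search over IS-A links. The tiny hierarchy below is hand-made for illustration and is not taken from WordNet, and turning the path length into a score with 1 / (1 + length) is just one common convention, assumed here.

```python
from collections import deque

# Toy IS-A (hypernym) edges, child -> parent. Hand-made for illustration only;
# these are NOT real WordNet links.
HYPERNYM = {
    "hill": "natural-elevation",
    "natural-elevation": "geological-formation",
    "coast": "shore",
    "shore": "geological-formation",
    "geological-formation": "entity",
    "nickel": "coin",
    "coin": "entity",
}

def neighbors(node):
    """Nodes one IS-A edge away from `node`, in either direction."""
    out = set()
    if node in HYPERNYM:
        out.add(HYPERNYM[node])
    out.update(child for child, parent in HYPERNYM.items() if parent == node)
    return out

def path_length(a, b):
    """Number of edges on the shortest path between a and b (breadth-first search)."""
    seen, queue = {a}, deque([(a, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == b:
            return dist
        for nxt in neighbors(node):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None  # the two nodes are not connected

def path_similarity(a, b):
    """Assumed scoring convention: 1 / (1 + path length); None if unconnected."""
    d = path_length(a, b)
    return None if d is None else 1.0 / (1.0 + d)
```

In this toy hierarchy, hill and coast are four edges apart while hill and nickel are five, so the first pair scores as more similar. Every edge counts the same here, whatever its depth in the hierarchy.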

Distributional Word Similarity
• Determine similarity of words by their distribution in a corpus
  – “You shall know a word by the company it keeps!” (Firth 1957)
• E.g.: 100k dimension vector, “1” if word occurs within “2 lines”:
• “Who is my neighbor?” Which functions?

Better approach: weighted links
• Use corpus stats to get probabilities of nodes
• Refinement: use info content of LCS:
  2 * log P(g.f.) / (log P(hill) + log P(coast)) = 0.59

Who is my neighbor?
• Linear window? 1-500 words wide. Or whole document. Remove stop words?
• Use dependency-parse relations? More expensive, but maybe better relatedness.

Weights vs. just counting
• Weight the counts by the a priori chance of co-occurrence
• Pointwise Mutual Information (PMI)
• Objects of drink:

Distance between vectors
• Compare sparse high-dimensional vectors
  – Normalize for vector length
• Just use vector cosine?
• Several other functions come from IR community

Lots of functions to choose from
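The distributional pipeline on these slides (choose a neighborhood, weight the counts, compare vectors) can be sketched in a few lines. This is a minimal illustration under stated assumptions: a linear word window rather than “2 lines”, counts rather than binary indicators, and positive PMI (negative values clipped to zero), a common variant of the plain PMI named on the slide. The function names and the toy corpus are invented for the example.

```python
import math
from collections import Counter, defaultdict

def cooccurrence(tokens, window=2):
    """Count context words within `window` positions on either side of each target."""
    counts = defaultdict(Counter)
    for i, w in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                counts[w][tokens[j]] += 1
    return counts

def ppmi(counts):
    """Reweight counts by positive PMI: max(0, log2(P(w,c) / (P(w) * P(c))))."""
    total = sum(sum(ctx.values()) for ctx in counts.values())
    w_tot = {w: sum(ctx.values()) for w, ctx in counts.items()}
    c_tot = Counter()
    for ctx in counts.values():
        c_tot.update(ctx)
    weighted = {}
    for w, ctx in counts.items():
        weighted[w] = {}
        for c, n in ctx.items():
            pmi = math.log2(n * total / (w_tot[w] * c_tot[c]))
            if pmi > 0:  # assumption: keep only positive associations
                weighted[w][c] = pmi
    return weighted

def cosine(u, v):
    """Cosine of two sparse vectors stored as dicts; 0.0 if either is all zeros."""
    dot = sum(x * v.get(k, 0.0) for k, x in u.items())
    nu = math.sqrt(sum(x * x for x in u.values()))
    nv = math.sqrt(sum(x * x for x in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0

# Toy corpus, invented for illustration.
tokens = "drink tea now drink coffee now drive car fast".split()
vectors = ppmi(cooccurrence(tokens, window=1))
tea_coffee = cosine(vectors["tea"], vectors["coffee"])
tea_car = cosine(vectors["tea"], vectors["car"])
```

In this toy corpus, tea and coffee occur in identical contexts, so their cosine is essentially 1, while tea and car share no context words and score 0. Cosine normalizes for vector length, so a frequent word is not judged similar to everything merely because its counts are large.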

Distributionally Similar Words

| Rum | Write | Ancient | Mathematics |
|---|---|---|---|
| vodka | read | old | physics |
| cognac | speak | modern | biology |
| brandy | present | traditional | geology |
| whisky | receive | medieval | sociology |
| liquor | call | historic | psychology |
| detergent | release | famous | anthropology |
| cola | sign | original | astronomy |
| gin | offer | entire | arithmetic |
| lemonade | know | main | geography |
| cocoa | accept | indian | theology |
| chocolate | decide | various | hebrew |
| scotch | issue | single | economics |
| noodle | prepare | african | chemistry |
| tequila | consider | japanese | scripture |
| juice | publish | giant | biotechnology |

(from an implementation of the method described in Lin. 1998. Automatic Retrieval and Clustering of Similar Words. COLING-ACL. Trained on newswire text.)

Recent events (2013-now)
• RNNs (Recurrent Neural Networks) as another way to get feature vectors
  – Hidden weights accumulate fuzzy info on words in the neighborhood
  – The set of hidden weights is used as the vector!
• Composition by multiplying (etc.)
  – Mikolov et al (2013): “king – man + woman = queen”(!?)
  – CCG with vectors as NP semantics, matrices as verb semantics(!?)

RNNs
From openi.nlm.nih.gov

Semantic Cases/Thematic Roles
• Developed in late 1960’s and 1970’s
• Postulate a limited set of abstract semantic relationships between a verb & its arguments: thematic roles or case roles
• In some sense, part of the verb’s semantics
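The “king – man + woman = queen” bullet under Recent events refers to simple vector arithmetic followed by a nearest-neighbor lookup. The sketch below shows only the mechanics. Its three-dimensional vectors are invented by hand so that the analogy works, which makes it no evidence for the claim itself; real embeddings are learned from corpora and have far more dimensions.

```python
import math

# Hand-made 3-d toy vectors (dimensions loosely: royalty, maleness, femaleness).
# Invented for illustration only; these are NOT learned embeddings.
VECS = {
    "king":  (0.9, 0.9, 0.1),
    "queen": (0.9, 0.1, 0.9),
    "man":   (0.1, 0.9, 0.1),
    "woman": (0.1, 0.1, 0.9),
    "apple": (0.0, 0.2, 0.2),
}

def cosine(u, v):
    """Cosine similarity of two dense vectors; 0.0 if either has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def analogy(a, b, c):
    """Word nearest (by cosine) to vec(a) - vec(b) + vec(c), excluding a, b, and c."""
    target = tuple(x - y + z for x, y, z in zip(VECS[a], VECS[b], VECS[c]))
    candidates = (w for w in VECS if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine(target, VECS[w]))

result = analogy("king", "man", "woman")
```

With these toy vectors `result` is "queen". Excluding the three input words from the candidates is a common practice in such lookups and can matter a good deal for the reported answer.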
