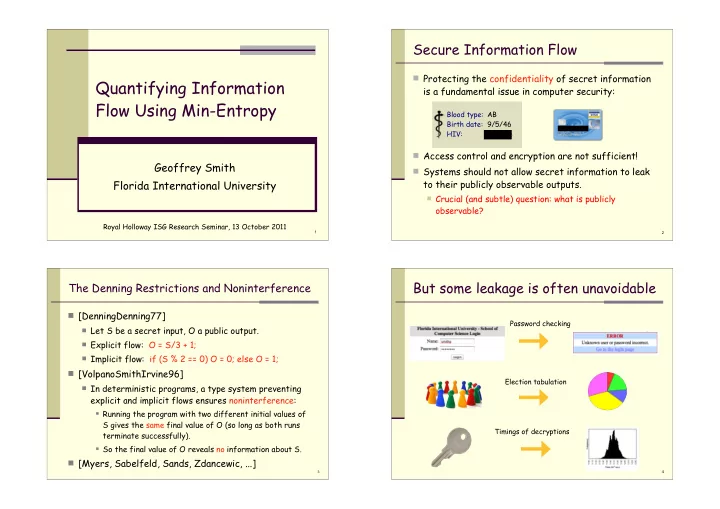

Quantifying Information Flow Using Min-Entropy

Geoffrey Smith, Florida International University

Royal Holloway ISG Research Seminar, 13 October 2011

Secure Information Flow

- Protecting the confidentiality of secret information is a fundamental issue in computer security (e.g. a record reading Blood type: AB; Birth date: 9/5/46; HIV: positive).
- Access control and encryption are not sufficient!
- Systems should not allow secret information to leak to their publicly observable outputs.
- Crucial (and subtle) question: what is publicly observable?

But some leakage is often unavoidable

- Password checking
- Election tabulation
- Timings of decryptions

The Denning Restrictions and Noninterference

- [DenningDenning77] Let S be a secret input, O a public output.
  - Explicit flow: `O = S/3 + 1;`
  - Implicit flow: `if (S % 2 == 0) O = 0; else O = 1;`
- [VolpanoSmithIrvine96] In deterministic programs, a type system preventing explicit and implicit flows ensures noninterference:
  - Running the program with two different initial values of S gives the same final value of O (so long as both runs terminate successfully).
  - So the final value of O reveals no information about S.
- [Myers, Sabelfeld, Sands, Zdancewic, ...]
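The noninterference condition can be checked by brute force on a small domain. The following is a minimal Python sketch, not the type-system approach of [VolpanoSmithIrvine96]: it simply runs each program on every secret and tests whether the public output ever changes. The function names, the 4-bit secret range, and the `constant_output` program are assumptions made for illustration, and `S/3` is read as C-style integer division on non-negative values.

```python
def explicit_flow(s: int) -> int:
    # O = S/3 + 1, assuming integer division on non-negative S
    return s // 3 + 1


def implicit_flow(s: int) -> int:
    # if (S % 2 == 0) O = 0; else O = 1;
    return 0 if s % 2 == 0 else 1


def constant_output(s: int) -> int:
    # Hypothetical program whose public output ignores the secret
    return 42


def is_noninterferent(program, secrets) -> bool:
    """True if every secret value yields the same public output."""
    outputs = {program(s) for s in secrets}
    return len(outputs) <= 1


secrets = range(16)  # illustrative 4-bit secret space
results = {
    p.__name__: is_noninterferent(p, secrets)
    for p in (explicit_flow, implicit_flow, constant_output)
}
```

Both flows from the slide fail the check, while the constant program passes it. Exhaustive testing only scales to tiny secret spaces, which is one reason a static type system is attractive.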

A more complicated example: Crowds Protocol [RubinReiter98]

- Users wish to communicate anonymously with a server.
- The originator first sends the message to a randomly-chosen forwarder (possibly itself).
- Each forwarder forwards it again with probability $p_f$, or sends it to the server with probability $1 - p_f$.
- But some crowd members are collaborators that report who sends them a message.
- Some information about the originator may be leaked. But how much?

[Figure: Crowds diagram; label: Server]

Quantitative information flow

- A quantitative theory lets us talk about “how much” information is leaked to an adversary A who sees the observable output.
- Then “small” leaks may be tolerated.
- This has been an active area of research for the past decade [ClarkHuntMalacaria02, ...].
- A first, straightforward example: `O = S & 0777;`
  - If S is a 64-bit integer, and all $2^{64}$ values are equally likely, then this program leaks 9 bits (out of 64) to O.

Plan of the talk

- Motivation
- Information-theoretic channels
- Quantifying leakage
  - using mutual information
  - using min-entropy
  - channel capacity
- Channels in cascade
  - application to timing attacks on cryptography
- Some techniques for calculating min-entropy leakage

Information-theoretic channels

- A probabilistic channel maps the secret input S, with values $s_1, \ldots, s_m$, to the observable output O, with values $o_1, \ldots, o_n$.
- Channel matrix C: the entry in row $s_i$ and column $o_j$ is $P[o_j \mid s_i]$.

$$
C = \begin{pmatrix}
P[o_1 \mid s_1] & \cdots & P[o_n \mid s_1] \\
\vdots & \ddots & \vdots \\
P[o_1 \mid s_m] & \cdots & P[o_n \mid s_m]
\end{pmatrix}
$$

- Each row of C sums to 1.
- C is deterministic if each entry is 0 or 1.
- Random variable S is chosen according to a priori distribution $P_S$.
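A deterministic program induces a channel matrix with exactly one 1 per row. The sketch below builds that matrix in Python for a scaled-down variant of the masking example: the mask `0o7` and the 6-bit secret space are assumptions chosen to keep the matrix small, and `channel_matrix` and `is_deterministic` are hypothetical helper names, not part of the talk.

```python
from fractions import Fraction


def channel_matrix(program, secrets):
    """Return (outputs, C) where C[i][j] = P[outputs[j] | secrets[i]]."""
    outputs = sorted({program(s) for s in secrets})
    column = {o: j for j, o in enumerate(outputs)}
    matrix = []
    for s in secrets:
        row = [Fraction(0)] * len(outputs)
        row[column[program(s)]] = Fraction(1)  # deterministic: one output per secret
        matrix.append(row)
    return outputs, matrix


def is_deterministic(matrix) -> bool:
    """A channel is deterministic if every entry is 0 or 1."""
    return all(entry in (0, 1) for row in matrix for entry in row)


# Scaled-down stand-in for `O = S & 0777` on 64-bit secrets
secrets = list(range(2**6))
outputs, C = channel_matrix(lambda s: s & 0o7, secrets)
```

Here the 64 secrets map onto 8 outputs, so only the 3 masked bits are visible in O, in the same way that the slide's 9-bit mask exposes 9 of 64 bits.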

Joint and a posteriori distributions

- Multiplying row s of C by $P_S[s]$ gives the joint matrix: $P[s,o] = P_S[s] \, C[s,o]$.
- By marginalization, we get a random variable O with distribution $P[o] = \sum_s P[s,o]$.
- For each value o of O, we also get an a posteriori distribution $P_{S|o}$ by normalizing column o of the joint matrix.
- Assuming that A knows C and $P_S$, the distribution $P_{S|o}$ is what A knows about S if it sees output o.

An example channel and its distributions

Channel matrix:

| | $o_1$ | $o_2$ | $o_3$ | $o_4$ |
|---|---|---|---|---|
| $s_1$ | 0 | 0 | 1/3 | 2/3 |
| $s_2$ | 0 | 4/5 | 0 | 1/5 |
| $s_3$ | 4/7 | 0 | 2/7 | 1/7 |
| $s_4$ | 4/9 | 0 | 4/9 | 1/9 |

Joint matrix:

| | $o_1$ | $o_2$ | $o_3$ | $o_4$ |
|---|---|---|---|---|
| $s_1$ | 0 | 0 | 1/16 | 1/8 |
| $s_2$ | 0 | 1/4 | 0 | 1/16 |
| $s_3$ | 1/8 | 0 | 1/16 | 1/32 |
| $s_4$ | 1/8 | 0 | 1/8 | 1/32 |

- A priori distribution on S: $P_S = (3/16, 5/16, 7/32, 9/32)$
- Distribution on O: $P_O = (1/4, 1/4, 1/4, 1/4)$
- A posteriori distributions on S:
  - $P_{S|o_1} = (0, 0, 1/2, 1/2)$
  - $P_{S|o_2} = (0, 1, 0, 0)$
  - $P_{S|o_3} = (1/4, 0, 1/4, 1/2)$
  - $P_{S|o_4} = (1/2, 1/4, 1/8, 1/8)$

Quantifying leakage

- How much information about S is leaked to an adversary A seeing O?
- Key quantities to define:
  - A’s initial uncertainty about S
  - A’s remaining uncertainty about S
  - leakage to O
- Intuitive equation: “leakage = initial uncertainty - remaining uncertainty”
- Clearly these “uncertainties” depend on the a priori and a posteriori distributions on S.
- But how should they be defined?
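Before turning to definitions of uncertainty, the joint matrix, output distribution, and a posteriori distributions of the example channel can be reproduced with exact rational arithmetic. This is a minimal Python sketch; the function names are illustrative rather than taken from the talk, and `posteriors` assumes every output has nonzero probability.

```python
from fractions import Fraction as F

# A priori distribution and channel matrix from the example slide
P_S = [F(3, 16), F(5, 16), F(7, 32), F(9, 32)]
C = [
    [F(0), F(0), F(1, 3), F(2, 3)],
    [F(0), F(4, 5), F(0), F(1, 5)],
    [F(4, 7), F(0), F(2, 7), F(1, 7)],
    [F(4, 9), F(0), F(4, 9), F(1, 9)],
]


def joint_matrix(prior, channel):
    """P[s,o] = P_S[s] * C[s,o]: scale each row by its prior probability."""
    return [[p * c for c in row] for p, row in zip(prior, channel)]


def output_distribution(joint):
    """P[o] = sum over s of P[s,o]: the column sums of the joint matrix."""
    return [sum(col) for col in zip(*joint)]


def posteriors(joint):
    """P_{S|o} for each output o: column o of the joint matrix, normalized.

    Assumes every output has nonzero probability.
    """
    return [[x / sum(col) for x in col] for col in zip(*joint)]


J = joint_matrix(P_S, C)
P_O = output_distribution(J)
post = posteriors(J)
```

Using `Fraction` rather than floats keeps the results identical to the fractions printed on the slide, which makes the correspondence easy to check by eye.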

Shannon entropy [1948]

- A classic measure of “uncertainty”.
- Let S be a random variable with distribution $P_S$.
- Definition: $H(S) = -\sum_s P_S[s] \log P_S[s]$
- Examples:
  - On a uniform distribution $P_S = (1/n, 1/n, \ldots, 1/n)$, $H(S) = -n \cdot (1/n) \log (1/n) = \log n$.
  - If $P_S = (1/2, 1/4, 1/8, 1/8)$, then $H(S) = (1/2)\log 2 + (1/4)\log 4 + (1/8)\log 8 + (1/8)\log 8 = (1/2)1 + (1/4)2 + (1/8)3 + (1/8)3 = 7/4$.

Operational significance?

- If $P_S = (1/2, 1/4, 1/8, 1/8)$, we get the following Huffman code for values of S: $s_1$: 0, $s_2$: 10, $s_3$: 110, $s_4$: 111.
- Average code length $= (1/2)1 + (1/4)2 + (1/8)3 + (1/8)3 = 7/4 = H(S)$.
- Shannon’s source coding theorem: H(S) is the average number of bits required to transmit S.

Conditional Shannon entropy

- H(S) is a plausible measure of A’s initial uncertainty.
- A’s remaining uncertainty could be defined as the weighted average of the Shannon entropy of the a posteriori distributions $P_{S|o}$.
- Definition: $H(S|O) = \sum_o P[o] \, H(S|o)$
- This can be seen as the average number of bits required to transmit S, given O.
- On the example channel from before, $H(S|O) = (1/4)(1 + 0 + 3/2 + 7/4) = 17/16$.

Mutual information leakage

- initial uncertainty = $H(S)$
- remaining uncertainty = $H(S|O)$
- leakage = $H(S) - H(S|O)$
- $H(S) - H(S|O)$ is the mutual information $I(S;O)$ [ClarkHuntMalacaria05, ClarksonMyersSchneider05, KöpfBasin07, ChatzikokolakisPalamidessiPanangaden08, ...].
- $I(S;O) \geq 0$.
- And $I(S;O) = 0$ iff S and O are independent.
- If C is deterministic, then leakage simplifies to $H(O)$:
  - $I(S;O) = I(O;S) = H(O) - H(O|S) = H(O) - 0 = H(O)$
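The entropy definitions above translate directly into code. The following Python sketch computes $H(S)$, $H(S|O)$, and $I(S;O)$ with base-2 logarithms in floating point; the function names and the small parity channel used to illustrate the deterministic case are assumptions for illustration.

```python
from math import log2


def entropy(dist):
    """Shannon entropy H = -sum p log2 p, skipping zero-probability values."""
    return -sum(p * log2(p) for p in dist if p > 0)


def conditional_entropy(prior, channel):
    """H(S|O) = sum over o of P[o] * H(S|o), via the joint matrix."""
    joint = [[p * c for c in row] for p, row in zip(prior, channel)]
    total = 0.0
    for col in zip(*joint):
        p_o = sum(col)
        if p_o > 0:
            total += p_o * entropy([x / p_o for x in col])
    return total


def mutual_information(prior, channel):
    """I(S;O) = H(S) - H(S|O)."""
    return entropy(prior) - conditional_entropy(prior, channel)


# Example channel from the slides
P_S = [3 / 16, 5 / 16, 7 / 32, 9 / 32]
C = [
    [0, 0, 1 / 3, 2 / 3],
    [0, 4 / 5, 0, 1 / 5],
    [4 / 7, 0, 2 / 7, 1 / 7],
    [4 / 9, 0, 4 / 9, 1 / 9],
]
h_cond = conditional_entropy(P_S, C)  # 17/16 up to rounding
leak = mutual_information(P_S, C)

# Hypothetical deterministic channel: O is the parity of a uniform 2-bit S
uniform = [1 / 4] * 4
parity = [[1, 0], [0, 1], [1, 0], [0, 1]]
parity_leak = mutual_information(uniform, parity)  # equals H(O) = 1 bit
```

The parity channel illustrates the deterministic case: the output is a fair coin, so $H(O) = 1$ and the mutual information leakage is exactly one bit.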

Operational significance?

- For security, the average number of bits required to transmit S reliably isn’t really the key question.
- Instead, we are more worried about the risk that adversary A might discover the value of S.
- There is a strong bound on the guessing entropy, G(S), the expected number of tries required to guess S:
  - Theorem [Massey94]: $G(S) > (1/4) \, 2^{H(S)}$.
  - Similarly, $G(S|O) > (1/4) \, 2^{H(S|O)}$.
- This is good, but it can be very misleading...
- If $P_S = (1/2, 2^{-1000}, 2^{-1000}, \ldots, 2^{-1000})$, then $G(S) \approx 2^{997}$, even though $s_1$ is correct half the time!

Two key examples

- Assume $0 \leq S < 2^{64}$, uniformly distributed.
- `if (S % 8 == 0) O = S; else O = 1;`
  - mutual information leakage $I(S;O) \approx 8.17$
  - remaining uncertainty $H(S|O) \approx 55.83$
  - A’s expected probability of guessing S in one try, given O, exceeds 1/8.
- `O = S & 0777;`
  - mutual information leakage $I(S;O) = 9$
  - remaining uncertainty $H(S|O) = 55$
  - A’s expected probability of guessing S in one try, given O, is $1/2^{55}$.

Bayes Vulnerability

- Shannon entropy and mutual information thus do not give very satisfactory confidentiality properties.
- So we seek another measure of the “uncertainty” of a probability distribution.
- [Smith09] proposed measuring uncertainty in terms of S’s vulnerability to being guessed by A in one try.
- Definition: $V(S) = \max_s P_S[s]$
- Definition: $V(S|O) = \sum_o P[o] \, V(S|o)$
- $V(S|O) = \sum_o P[o] \max_s P[s|o] = \sum_o \max_s P[s,o]$
- V(S|O) is the complement of the Bayes risk.

V(S) and V(S|O) on example channel

A priori distribution: $P_S = (3/16, 5/16, 7/32, 9/32)$

Channel matrix:

| | $o_1$ | $o_2$ | $o_3$ | $o_4$ |
|---|---|---|---|---|
| $s_1$ | 0 | 0 | 1/3 | 2/3 |
| $s_2$ | 0 | 4/5 | 0 | 1/5 |
| $s_3$ | 4/7 | 0 | 2/7 | 1/7 |
| $s_4$ | 4/9 | 0 | 4/9 | 1/9 |

Joint matrix:

| | $o_1$ | $o_2$ | $o_3$ | $o_4$ |
|---|---|---|---|---|
| $s_1$ | 0 | 0 | 1/16 | 1/8 |
| $s_2$ | 0 | 1/4 | 0 | 1/16 |
| $s_3$ | 1/8 | 0 | 1/16 | 1/32 |
| $s_4$ | 1/8 | 0 | 1/8 | 1/32 |

- $V(S) = \max_s P_S[s] = 5/16$
- $V(S|O) = \sum_o \max_s P[s,o] = 1/8 + 1/4 + 1/8 + 1/8 = 5/8$
- S’s expected vulnerability doubles.
- A priori, A guesses that S is $s_2$.
- A posteriori, A’s best guess for S depends on O: $o_1 \to s_3$ (or $s_4$), $o_2 \to s_2$, $o_3 \to s_4$, $o_4 \to s_1$.
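The vulnerability figures for the example channel can be checked with a few lines of exact arithmetic. This is a minimal Python sketch of the two definitions; the function names are illustrative, and `best_guesses` reports 0-based secret indices, so index 1 stands for $s_2$.

```python
from fractions import Fraction as F

# A priori distribution and channel matrix of the example channel
P_S = [F(3, 16), F(5, 16), F(7, 32), F(9, 32)]
C = [
    [F(0), F(0), F(1, 3), F(2, 3)],
    [F(0), F(4, 5), F(0), F(1, 5)],
    [F(4, 7), F(0), F(2, 7), F(1, 7)],
    [F(4, 9), F(0), F(4, 9), F(1, 9)],
]


def joint_matrix(prior, channel):
    """P[s,o] = P_S[s] * C[s,o]."""
    return [[p * c for c in row] for p, row in zip(prior, channel)]


def prior_vulnerability(prior):
    """V(S) = max_s P_S[s]: chance of guessing S in one try with no output."""
    return max(prior)


def posterior_vulnerability(prior, channel):
    """V(S|O) = sum_o max_s P[s,o]: sum of the joint matrix's column maxima."""
    return sum(max(col) for col in zip(*joint_matrix(prior, channel)))


def best_guesses(prior, channel):
    """For each output, the 0-based indices of the most likely secrets."""
    guesses = []
    for col in zip(*joint_matrix(prior, channel)):
        top = max(col)
        guesses.append([i for i, x in enumerate(col) if x == top])
    return guesses


v_prior = prior_vulnerability(P_S)          # 5/16
v_post = posterior_vulnerability(P_S, C)    # 5/8
ratio = v_post / v_prior                    # 2
guesses = best_guesses(P_S, C)              # [[2, 3], [1], [3], [0]]
```

The ratio of 2 is the "expected vulnerability doubles" statement, and the tie in the first entry of `guesses` corresponds to the slide's "$s_3$ (or $s_4$)" for output $o_1$.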
