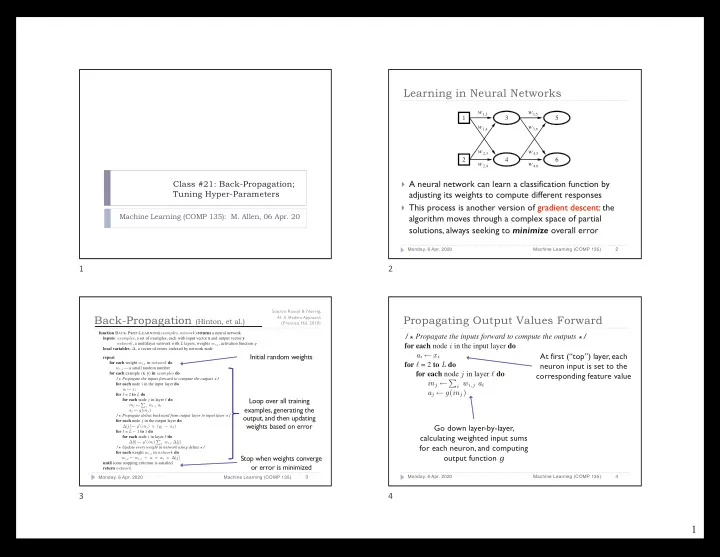

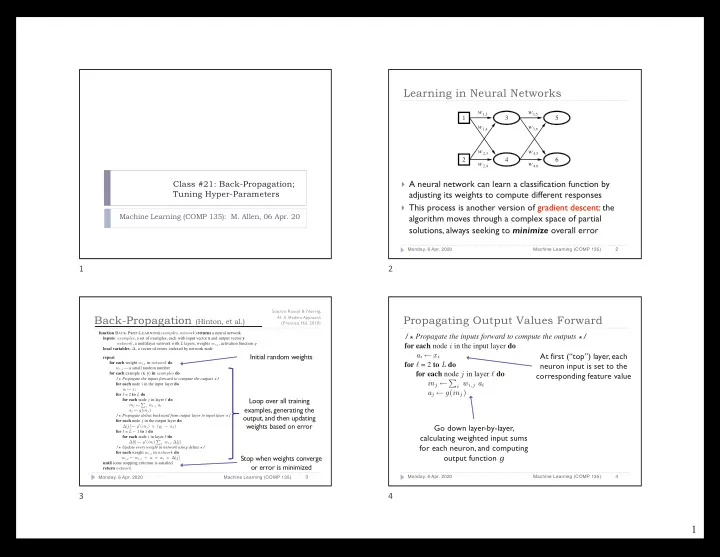

Class #21: Back-Propagation; Tuning Hyper-Parameters

Machine Learning (COMP 135): M. Allen, Monday, 6 Apr. 2020

Learning in Neural Networks

[Figure: a network with input nodes 1 and 2, hidden nodes 3 and 4, and output nodes 5 and 6. Weights $w_{1,3}$, $w_{1,4}$, $w_{2,3}$, $w_{2,4}$ connect the inputs to the hidden nodes, and weights $w_{3,5}$, $w_{3,6}$, $w_{4,5}$, $w_{4,6}$ connect the hidden nodes to the outputs.]

- A neural network can learn a classification function by adjusting its weights to compute different responses.
- This process is another version of gradient descent: the algorithm moves through a complex space of partial solutions, always seeking to minimize overall error.

Back-Propagation (Hinton, et al.)

Source: Russell & Norvig, AI: A Modern Approach (Prentice Hall, 2010)

```
function BACK-PROP-LEARNING(examples, network) returns a neural network
  inputs: examples, a set of examples, each with input vector x and output vector y
          network, a multilayer network with L layers, weights w_i,j, activation function g
  local variables: Δ, a vector of errors, indexed by network node

  for each weight w_i,j in network do
      w_i,j ← a small random number
  repeat
      for each example (x, y) in examples do
          /* Propagate the inputs forward to compute the outputs */
          for each node i in the input layer do
              a_i ← x_i
          for ℓ = 2 to L do
              for each node j in layer ℓ do
                  in_j ← Σ_i w_i,j a_i
                  a_j ← g(in_j)
          /* Propagate deltas backward from output layer to input layer */
          for each node j in the output layer do
              Δ[j] ← g′(in_j) × (y_j − a_j)
          for ℓ = L − 1 to 1 do
              for each node i in layer ℓ do
                  Δ[i] ← g′(in_i) Σ_j w_i,j Δ[j]
          /* Update every weight in network using deltas */
          for each weight w_i,j in network do
              w_i,j ← w_i,j + α × a_i × Δ[j]
  until some stopping criterion is satisfied
  return network
```

- Initial random weights: every weight starts as a small random number.
- Loop over all training examples, generating the output and then updating the weights based on the error.
- Stop when the weights converge or the error is minimized.

Propagating Output Values Forward

- At the first ("top") layer, each neuron's input is set to the corresponding feature value: $a_i \leftarrow x_i$.
- Go down layer by layer, calculating the weighted input sum for each neuron and computing the output function $g$:

$$in_j = \sum_i w_{i,j}\, a_i, \qquad a_j = g(in_j)$$
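As a rough illustration, the BACK-PROP-LEARNING procedure from these slides can be sketched in plain Python. This is a minimal sketch under assumptions that the slides do not state: the activation $g$ is the logistic function, every non-input layer receives a bias input fixed at 1, weights are stored as nested lists `w[l][i][j]`, and the stopping criterion is simply a fixed number of epochs. The names `init_weights`, `forward`, `back_prop_learning`, and `total_error` are hypothetical.

```python
import math
import random


def logistic(x):
    """Logistic activation g(x) = 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))


def logistic_prime(x):
    """Derivative of the logistic function: g'(x) = g(x) * (1 - g(x))."""
    gx = logistic(x)
    return gx * (1.0 - gx)


def init_weights(layer_sizes, rng):
    """Small random weights; w[l][i][j] links node i of layer l to node j of
    layer l + 1. The extra last row i == layer_sizes[l] is the bias input."""
    return [[[rng.uniform(-0.5, 0.5) for _ in range(layer_sizes[l + 1])]
             for _ in range(layer_sizes[l] + 1)]
            for l in range(len(layer_sizes) - 1)]


def forward(w, x):
    """Forward pass: returns activations a[l] and weighted sums ins[l] per layer."""
    a, ins = [list(x)], [None]          # the input layer has no weighted sum
    for layer in w:
        prev = a[-1] + [1.0]            # append the bias activation
        in_next = [sum(layer[i][j] * prev[i] for i in range(len(prev)))
                   for j in range(len(layer[0]))]
        ins.append(in_next)
        a.append([logistic(v) for v in in_next])
    return a, ins


def back_prop_learning(examples, layer_sizes, alpha=0.5, epochs=1000, seed=0):
    """Hypothetical sketch: logistic g, bias input of 1 per layer, and a fixed
    epoch count standing in for 'some stopping criterion'."""
    rng = random.Random(seed)
    w = init_weights(layer_sizes, rng)  # initialized once, before the loop
    L = len(layer_sizes)
    for _ in range(epochs):
        for x, y in examples:
            a, ins = forward(w, x)
            delta = [None] * L
            # Output layer: delta_j = g'(in_j) * (y_j - a_j).
            delta[-1] = [logistic_prime(ins[-1][j]) * (y[j] - a[-1][j])
                         for j in range(layer_sizes[-1])]
            # Hidden layers, bottom-up: delta_i = g'(in_i) * sum_j w_ij * delta_j.
            for l in range(L - 2, 0, -1):
                delta[l] = [logistic_prime(ins[l][i]) *
                            sum(w[l][i][j] * delta[l + 1][j]
                                for j in range(layer_sizes[l + 1]))
                            for i in range(layer_sizes[l])]
            # Update every weight: w_ij += alpha * a_i * delta_j.
            for l in range(L - 1):
                prev = a[l] + [1.0]
                for i in range(len(prev)):
                    for j in range(layer_sizes[l + 1]):
                        w[l][i][j] += alpha * prev[i] * delta[l + 1][j]
    return w


def total_error(w, examples):
    """Sum of squared output errors over all examples."""
    return sum((yk - ak) ** 2
               for x, y in examples
               for yk, ak in zip(y, forward(w, x)[0][-1]))


# Usage: learn logical OR with one hidden layer of two neurons.
or_data = [((0, 0), (0,)), ((0, 1), (1,)), ((1, 0), (1,)), ((1, 1), (1,))]
w0 = back_prop_learning(or_data, [2, 2, 1], epochs=0)     # untrained weights
w = back_prop_learning(or_data, [2, 2, 1], epochs=5000)
print(total_error(w0, or_data), "->", total_error(w, or_data))
```

Because the weights are updated after every example, this follows the per-example form of gradient descent that the pseudocode describes. The learning rate `alpha`, the epoch count, and the initialization range are illustrative choices, not values from the lecture.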

Propagating Error Backward

- At the output ("bottom") layer, each delta-value is set to the error on that neuron, multiplied by the derivative of the function $g$:

$$\Delta[j] = g'(in_j) \times (y_j - a_j)$$

- Then go bottom-up, setting each delta to the derivative value multiplied by the sum of the deltas at the next layer down, weighting each such value by the connecting weight:

$$\Delta[i] = g'(in_i) \sum_j w_{i,j}\, \Delta[j]$$

- After all the delta values are computed, update the weights on every node in the network, where $\alpha$ is the learning rate:

$$w_{i,j} \leftarrow w_{i,j} + \alpha \times a_i \times \Delta[j]$$

- Repeat until some stopping criterion is satisfied.

A Back-Propagation Example

- Consider the following simple network, with:
  1. Two inputs
  2. A single hidden layer, consisting of one neuron H
  3. Two output neurons
  4. Initial weights as shown

[Figure: inputs I1 and I2, plus a bias input fixed at 1, feed the hidden neuron H; H, plus a second bias input of 1, feeds the output neurons O1 and O2. Every weight is initially 0.1.]

- Suppose we have the following data point: $(\mathbf{x}, \mathbf{y}) = ((0.5, 0.4), (1, 0))$.
- For this data, we start by computing the output of H. The weighted linear sum is:

$$in_H = 0.1 + (0.1 \times 0.5) + (0.1 \times 0.4) = 0.19$$

- Assuming the logistic activation function, the output of H is:

$$a_H = \frac{1}{1 + e^{-0.19}} \approx 0.547$$

- Next, we compute the output of each of the two output neurons. Since both have identical weights, their initial outputs are the same:

$$in_O = 0.1 + (0.1 \times 0.547) = 0.1547, \qquad a_O = \frac{1}{1 + e^{-0.1547}} \approx 0.539$$

- This gives $a_{O1} = a_{O2} = 0.539$.
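The arithmetic in this example can be checked with a few lines of Python. This sketch hard-codes the network from the figure (all weights 0.1, bias inputs fixed at 1) and assumes the logistic activation, as the slides do; the variable names are illustrative. It also applies the delta rules from the backward-propagation slide to this data point, a step the example itself does not show, using the standard fact that the logistic derivative is $g'(in) = g(in)\,(1 - g(in))$.

```python
import math


def logistic(x):
    """Logistic activation g(x) = 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))


# Example network: I1, I2 and a bias of 1 feed H; H and a bias of 1 feed O1, O2.
x, y = (0.5, 0.4), (1.0, 0.0)
w_in_h = [0.1, 0.1]      # weights I1 -> H, I2 -> H
w_bias_h = 0.1           # weight bias -> H
w_h_out = [0.1, 0.1]     # weights H -> O1, H -> O2
w_bias_out = [0.1, 0.1]  # weights bias -> O1, bias -> O2

# Forward: hidden neuron H.
in_h = w_bias_h * 1.0 + sum(w * xi for w, xi in zip(w_in_h, x))
a_h = logistic(in_h)

# Forward: output neurons (identical weights, so identical outputs).
in_o = [w_bias_out[k] * 1.0 + w_h_out[k] * a_h for k in range(2)]
a_o = [logistic(v) for v in in_o]

print(f"in_H = {in_h:.2f}, a_H = {a_h:.3f}")  # prints: in_H = 0.19, a_H = 0.547
print(f"in_O = {[round(v, 4) for v in in_o]}, a_O = {[round(v, 3) for v in a_o]}")
# prints: in_O = [0.1547, 0.1547], a_O = [0.539, 0.539]

# Backward: deltas, using g'(in) = g(in) * (1 - g(in)) = a * (1 - a).
delta_o = [a * (1 - a) * (yk - a) for a, yk in zip(a_o, y)]
delta_h = a_h * (1 - a_h) * sum(w * d for w, d in zip(w_h_out, delta_o))
```

The delta on O1 is positive because its output is below its target of 1, while the delta on O2 is negative because its output is above its target of 0. The hidden delta is small because those two deltas nearly cancel when weighted by the shared 0.1 weights.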
