Principled Schedulability Analysis for Distributed Storage Systems

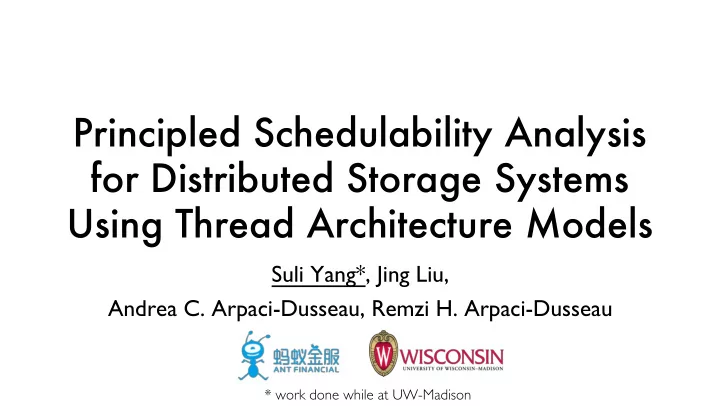

Principled Schedulability Analysis for Distributed Storage Systems Using Thread Architecture Models
Suli Yang*, Jing Liu, Andrea C. Arpaci-Dusseau, Remzi H. Arpaci-Dusseau
* Work done while at UW-Madison

Scheduling: A Fundamental Primitive
• Modern storage systems are shared
• Correct and efficient request scheduling is indispensable
[Figure: multiple applications (e.g., Snapchat) issuing reads and writes (R/W) to a shared storage system]

Broken Scheduling in Current Systems
• Popular storage systems have fundamental scheduling deficiencies:
  - [MongoDB - #21858]: “A high throughput update workload … could cause starvation on secondary reads”
  - [HBase - #8884]: “…when the read load is high on a specific RS is high, the write throughput also get impacted dramatically, and even write data loss...”
  - [Cassandra - #10989]: “inability to balance writes/reads/compaction/flushing…”
  - etc.

Why Is Scheduling Broken?
• Modern storage systems are complex:
  - Distributed: >1000 servers
  - Highly concurrent: ~1000 interacting threads in each server
  - Long execution paths: requests traverse numerous threads across multiple machines
  - …
• We introduce the Thread Architecture Model (TAM) to describe these scheduling complexities

Thread Architecture Model (TAM)
• Encodes scheduling-related information:
  - Request flows
  - Thread interactions
  - Resource consumption patterns
• Easy to obtain automatically
• From complicated systems to an understandable and analyzable model
• Systems: HBase, Cassandra, MongoDB, Riak
[Figure: TAM of HBase across RegionServer/DataNode 1 and 2, with stages RPC Read, RPC Handle, RPC Respond, Mem Flush, LOG Append, LOG Sync, Data Stream, Data Xceive, Packet Ack, and Ack Process]

TAM Exposes Scheduling Problems
• We discovered five categories of problems that occur in real systems:
  - Lack of scheduling points
  - Unknown resource usage
  - Hidden contention between threads
  - Uncontrolled thread blocking
  - Ordering constraints on requests

Fixing Problems Leads to Effective Scheduling
• TAM-based simulation finds problem-free thread architectures (HBase to Tamed-HBase)
  - Provides schedulability: various desired scheduling policies can be realized
• Implementation transforms the system to be schedulable (Muzzled-HBase: an approximated implementation)
  - Effective scheduling under YCSB and other workloads

The Thread Architecture Model enables principled schedulability analysis of general distributed storage systems

Outline
• Overview
• Thread Architecture Model
• Scheduling Problems
• Achieving Schedulability: A Case Study
• Conclusion

Thread Architecture Model
[Figure: TAM of HBase (RegionServer/DataNode 1 and 2) with a legend: stage (threads performing similar tasks); resource usage (C: CPU, I: I/O, N: network, L: lock); request flow; request queue (scheduling point); blocking]

Thread Architecture Model
• TAM encodes scheduling-related information:
  - Request flows
  - Thread interactions
  - Resource consumption patterns
• From complex systems to analyzable models
• TADalyzer: derives a TAM from a live system automatically
  - Only 20-50 lines of user annotation code required
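
To make the three kinds of information concrete, here is a minimal sketch of how a TAM might be held in memory. It is not TADalyzer's output format; the class names (`Resource`, `Stage`, `ThreadArchitectureModel`), the simplified "put" flow through HBase-style stages, and the resource and queue assignments are all illustrative assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Resource(Enum):
    # Resource symbols from the TAM legend (C, I, N, L).
    CPU = "C"
    IO = "I"
    NETWORK = "N"
    LOCK = "L"


@dataclass
class Stage:
    """A group of threads performing similar tasks (hypothetical representation)."""
    name: str
    resources: frozenset[Resource]
    has_queue: bool = True  # a request queue in front of a stage is a scheduling point


@dataclass
class ThreadArchitectureModel:
    stages: dict[str, Stage] = field(default_factory=dict)
    flows: dict[str, list[str]] = field(default_factory=dict)  # request type -> stage path

    def add_stage(self, stage: Stage) -> None:
        self.stages[stage.name] = stage

    def add_flow(self, request_type: str, path: list[str]) -> None:
        unknown = [name for name in path if name not in self.stages]
        if unknown:
            raise ValueError(f"flow {request_type!r} uses undefined stages: {unknown}")
        self.flows[request_type] = list(path)

    def resources_on_path(self, request_type: str) -> set[Resource]:
        """Union of the resources a request of this type may consume along its flow."""
        used: set[Resource] = set()
        for name in self.flows[request_type]:
            used |= self.stages[name].resources
        return used

    def scheduling_points(self, request_type: str) -> list[str]:
        """Stages on the flow that have a queue where a scheduler could act."""
        return [n for n in self.flows[request_type] if self.stages[n].has_queue]


# Illustrative, simplified write path; resources and queue placement are assumptions.
tam = ThreadArchitectureModel()
tam.add_stage(Stage("RPC Read", frozenset({Resource.NETWORK})))
tam.add_stage(Stage("RPC Handle", frozenset({Resource.CPU, Resource.LOCK})))
tam.add_stage(Stage("LOG Append", frozenset({Resource.CPU}), has_queue=False))
tam.add_stage(Stage("LOG Sync", frozenset({Resource.IO})))
tam.add_stage(Stage("RPC Respond", frozenset({Resource.NETWORK})))
tam.add_flow("put", ["RPC Read", "RPC Handle", "LOG Append", "LOG Sync", "RPC Respond"])
```

For example, `tam.resources_on_path("put")` collects the network, CPU, lock, and I/O symbols along the flow, and `tam.scheduling_points("put")` skips `LOG Append`, the one stage assumed here to lack a queue.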

TAM Exposes Scheduling Problems
• No scheduling
• Unknown resource usage
• Hidden contention
• Blocking
• Ordering constraint
• Common in distributed storage systems
  - HBase, Cassandra, MongoDB, Riak…
• Directly identifiable from TAM
  - No low-level implementation details required
[Figure: example Req Handle stages illustrating the problems]
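
Because these problems show up as visual patterns in the model, they can in principle be found mechanically. The sketch below checks three of the five categories on a toy model: a stage with no queue (no scheduling point), a stage whose resource usage is only known after processing begins (the bracketed resource symbols), and a fixed-size thread pool that blocks on another stage that has a queue (a dashed arrow). The `Stage` fields, the detection rules, and the toy stages are hypothetical encodings; hidden contention and ordering constraints are not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class Stage:
    name: str
    has_queue: bool = True          # request queue in front of the stage
    resources_known: bool = True    # False ~ bracketed resource symbols in a TAM
    fixed_pool: bool = False        # stage runs a fixed number of threads
    blocks_on: list = field(default_factory=list)  # stages this one waits on


def find_problems(stages):
    """Return sorted (problem, stage name) pairs found by the rules below."""
    by_name = {s.name: s for s in stages}
    problems = []
    for s in stages:
        if not s.has_queue:
            problems.append(("no scheduling point", s.name))
        if not s.resources_known:
            problems.append(("unknown resource usage", s.name))
        # Blocking: a fixed-size pool that waits on another stage with its own queue.
        if s.fixed_pool and any(by_name[t].has_queue for t in s.blocks_on):
            problems.append(("blocking", s.name))
    return sorted(problems)


toy = [
    Stage("Req Handle", resources_known=False, fixed_pool=True, blocks_on=["I/O"]),
    Stage("I/O"),
    Stage("Respond", has_queue=False),
]
for problem, stage in find_problems(toy):
    print(f"{stage}: {problem}")
```

On the toy model this reports blocking and unknown resource usage for `Req Handle` and a missing scheduling point for `Respond`.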

Scheduling Problem: Unknown Resource Usage
[Figure: TAM of two Cassandra nodes, with stages C-ReqHandle, C-Respond, Read, Mutation, V-Mutation, Msg In, Msg Out, and Respond]

Scheduling Problem: Unknown Resource Usage
Workload:
• C1: issues cold requests
• C2: issues cold and cached requests
Expectation: C2 has much higher throughput (due to its cached requests)
[Figure: throughput of C1 and C2, annotated "CPU underutilized"]

Unknown Resource Usage: Solution
Workload:
• C1: issues cold requests
• C2: issues cold and cached requests
Expectation: C2 has much higher throughput (due to its cached requests)

Scheduling Problem: Unknown Resource Usage
• Resource usage patterns are unknown to schedulers until after processing begins
• This forces schedulers to make decisions before the information is available
• Identified in TAM as red square brackets around resource symbols
[Figure: example Req Handle stage]
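
A back-of-the-envelope fluid model shows why this matters for the C1/C2 workload above. Assume (a simplification, not a measurement from the slides) that I/O is the only bottleneck, a cold request costs one unit of I/O, a cached request costs essentially none, and half of C2's requests are cached. A scheduler that cannot see resource usage can only equalize request rates; one that sees I/O usage can equalize I/O instead. The function names are hypothetical.

```python
from fractions import Fraction


def request_count_fair(io_capacity, io_cost):
    """Scheduler blind to resource usage: every client gets the same request rate.

    io_cost maps client -> average I/O cost per request (must be > 0).
    The I/O device saturates when rate * sum(costs) == io_capacity.
    """
    rate = Fraction(io_capacity) / sum(io_cost.values())
    return {client: rate for client in io_cost}


def io_fair(io_capacity, io_cost):
    """Scheduler that sees I/O usage: every client gets an equal share of I/O."""
    share = Fraction(io_capacity) / len(io_cost)
    return {client: share / cost for client, cost in io_cost.items()}


# C1: all cold (1 I/O unit per request); C2: half cold, half cached (avg 1/2 unit).
costs = {"C1": Fraction(1), "C2": Fraction(1, 2)}
blind = request_count_fair(1200, costs)
aware = io_fair(1200, costs)
print(blind)  # both clients at the same rate
print(aware)  # C2 at twice C1's rate
```

Under these assumptions the blind scheduler gives both clients 800 requests per unit time (1,600 total), while the I/O-aware one gives C1 600 and C2 1,200 (1,800 total). This is only a fluid approximation: it ignores CPU limits and queueing, and it assumes the scheduler already knows each request's cost, which is exactly what the problem above denies it.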

Scheduling Problem: Blocking
[Figure: TAM of MongoDB replication between a primary node and a secondary node, with stages Worker, Feedback, Fetcher, NetInterface, Batcher, Oplog Writer, and Writer]

Scheduling Problem: Blocking
Workload:
• C1: reads from the primary (reads do not go to the secondary)
• C2: writes to the primary (replicated to the secondary node)
• At time 10 s, the secondary node slows down
Expectation: C1's read throughput remains stable
[Figure: MongoDB throughput over time (s)]

Blocking: Solution
Workload:
• C1: reads
• C2: writes (replicated to the secondary node)
• At time 10 s, the secondary node slows down
Expectation: C1's read throughput remains stable
[Figure: MongoDB]

Scheduling Problem: Blocking
• Stages with a fixed number of threads block on other stages
• Requests that could otherwise complete cannot be scheduled because all threads are blocked
• Identified in TAM as dashed arrows pointing to stages with queues
[Figure: example Req Handle and I/O stages]
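
A toy calculation reproduces the qualitative effect in the MongoDB workload. Assume (hypothetically) one stage with a fixed pool of 4 threads fed by a single FIFO queue of alternating reads and writes, where every request holds its thread for its whole duration and a write holds it while waiting for the secondary. The function name and all numbers are made up for illustration.

```python
import heapq


def reads_completed(threads, read_time, write_hold_time, horizon, n_requests=10_000):
    """Count reads finished by `horizon` in one stage with a fixed thread pool.

    Requests alternate read, write, read, write, ... in one FIFO queue and each
    holds a thread for its whole duration. A write holds its thread for
    `write_hold_time`, e.g. while it waits for a secondary to acknowledge.
    """
    free_at = [0] * threads  # time each thread becomes free (all zeros is a valid heap)
    done = 0
    for i in range(n_requests):
        start = heapq.heappop(free_at)  # earliest available thread
        if start >= horizon:
            break
        is_read = i % 2 == 0
        end = start + (read_time if is_read else write_hold_time)
        heapq.heappush(free_at, end)
        if is_read and end <= horizon:
            done += 1
    return done


healthy = reads_completed(threads=4, read_time=1, write_hold_time=1, horizon=100)
slow_secondary = reads_completed(threads=4, read_time=1, write_hold_time=50, horizon=100)
print(healthy, slow_secondary)
```

With these numbers, 200 reads finish within 100 time units when writes hold threads for 1 unit, but only 8 when writes hold them for 50, even though reads never touch the secondary. Releasing the thread while a write waits (for example, by parking the write in a queue in front of another stage) corresponds to a short `write_hold_time` in this toy model.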

Fixing Problems Leads to Schedulability
• TAM-based simulation framework: explore thread architectures
  - Simulates how systems perform under workloads
  - Easily study architecture designs and scheduling policies
• Implementation: realize schedulable systems
  - Also validates that the simulation matches the real world
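
To give a feel for what a TAM-based simulation computes, here is a deliberately tiny sketch, not the authors' framework: each stage is a FIFO queue in front of a fixed pool of threads, every request visits every stage in order, and a request occupies a thread for a fixed service time. `SimStage`, `simulate`, and the stage names are hypothetical, and only FIFO scheduling is modeled.

```python
import heapq
from dataclasses import dataclass


@dataclass
class SimStage:
    name: str
    threads: int
    service_time: float  # time one request occupies a thread in this stage


def simulate(stages, arrivals):
    """Return each request's completion time after passing through `stages` in order.

    Each stage is a FIFO queue served by a fixed thread pool: requests start in
    order of arrival at that stage, each on the earliest-free thread.
    """
    ready = list(arrivals)  # time each request reaches the current stage
    for stage in stages:
        free_at = [0] * stage.threads
        done = [0] * len(ready)
        for j in sorted(range(len(ready)), key=lambda k: (ready[k], k)):
            start = max(ready[j], heapq.heappop(free_at))
            done[j] = start + stage.service_time
            heapq.heappush(free_at, done[j])
        ready = done
    return ready


pipeline = [SimStage("Handle", threads=2, service_time=2), SimStage("Sync", threads=1, service_time=1)]
print(simulate(pipeline, [0, 0, 0]))
```

With one thread in the second stage, the three requests finish at 3, 4, and 5; giving that stage a second thread changes this to 3, 3, and 5. Varying thread counts, queues, and dispatch order and rerunning is, in miniature, the kind of architecture exploration the slide describes.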

Simulation: HBase to Tamed-HBase
[Figure: TAMs of HBase and Tamed-HBase across RegionServer/DataNode 1 and 2; stages include RPC Read, RPC Handle, RPC Respond, Mem Flush, LOG Append, LOG Sync, Data Stream, Data Xceive, Packet Ack, and Ack Process, with Tamed-HBase stages annotated with the resources Network, CPU, and IO]

Implementation: Tamed-HBase to Muzzled-HBase
• Some approximations to make implementation easier
• Supports multiple scheduling policies
• Proper scheduling under various workloads

Muzzled-HBase: Weighted Fairness
Workloads:
• Five clients, each with a different weight, run YCSB (read-mostly)
Expectation: each client receives throughput proportional to its weight
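
One standard way to turn a scheduling point into weighted fairness is fair queuing. The sketch below is self-clocked fair queuing (SCFQ): each request gets a virtual finish tag `max(V, last tag of its client) + cost / weight`, the scheduler always dispatches the smallest tag, and `V` becomes the tag of the request just dispatched. The slides do not say which algorithm Muzzled-HBase uses; this is an illustration only, and the class and client names are hypothetical.

```python
import heapq
from collections import Counter
from fractions import Fraction


class SelfClockedFairQueue:
    def __init__(self, weights):
        self.weights = {c: Fraction(w) for c, w in weights.items()}
        self.last_finish = {c: Fraction(0) for c in weights}
        self.vtime = Fraction(0)  # virtual time: tag of the last dispatched request
        self.heap = []
        self.seq = 0  # tie-breaker so equal tags dispatch in enqueue order

    def enqueue(self, client, cost=1):
        start = max(self.vtime, self.last_finish[client])
        finish = start + Fraction(cost) / self.weights[client]
        self.last_finish[client] = finish
        heapq.heappush(self.heap, (finish, self.seq, client))
        self.seq += 1

    def dequeue(self):
        finish, _, client = heapq.heappop(self.heap)
        self.vtime = finish
        return client


weights = {f"client{i}": i for i in range(1, 6)}
q = SelfClockedFairQueue(weights)
for client in weights:
    for _ in range(600):  # keep every client backlogged
        q.enqueue(client)
served = Counter(q.dequeue() for _ in range(1500))
print([served[c] for c in weights])
```

With every client kept backlogged and unit-cost requests, the first 1,500 dispatches split 100/200/300/400/500, proportional to the weights 1 to 5. `Fraction` keeps the tags exact so ties are not perturbed by floating-point rounding.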

Muzzled-HBase: Tail Latency Guarantee
Workloads:
• Foreground client: runs YCSB (update-heavy)
• Background client: random Gets or Puts
Expectation: foreground latency remains stable
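
A simple way to protect foreground latency at a scheduling point is to dispatch foreground requests before background ones. The single-worker, non-preemptive sketch below compares FIFO with strict priority for a background burst; it is a hypothetical illustration, not Muzzled-HBase's policy, and the function name and request tuples are made up.

```python
import heapq


def foreground_latencies(requests, prioritize_foreground):
    """Simulate one worker thread and return the latencies of "fg" requests.

    requests: list of (arrival_time, cls, service_time), cls in {"fg", "bg"}.
    Non-preemptive: a running request always finishes before the next is chosen.
    """
    reqs = sorted(requests, key=lambda r: r[0])
    pending = []  # heap ordered by (rank, arrival, index)
    t, i, fg = 0, 0, []
    while i < len(reqs) or pending:
        if not pending:
            t = max(t, reqs[i][0])  # worker idles until the next arrival
        while i < len(reqs) and reqs[i][0] <= t:
            arrival, cls, service = reqs[i]
            rank = 1 if (prioritize_foreground and cls == "bg") else 0
            heapq.heappush(pending, (rank, arrival, i, cls, service))
            i += 1
        _, arrival, _, cls, service = heapq.heappop(pending)
        t += service
        if cls == "fg":
            fg.append(t - arrival)
    return fg


burst = [(0, "bg", 10)] * 5 + [(1, "fg", 1)]
fifo = foreground_latencies(burst, prioritize_foreground=False)
prio = foreground_latencies(burst, prioritize_foreground=True)
print(fifo, prio)
```

Here the foreground request waits 50 time units under FIFO and 10 under strict priority; it still waits for the background request already running because the toy worker is non-preemptive. Strict priority can starve background work, so a practical policy would likely need some bound on that.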
