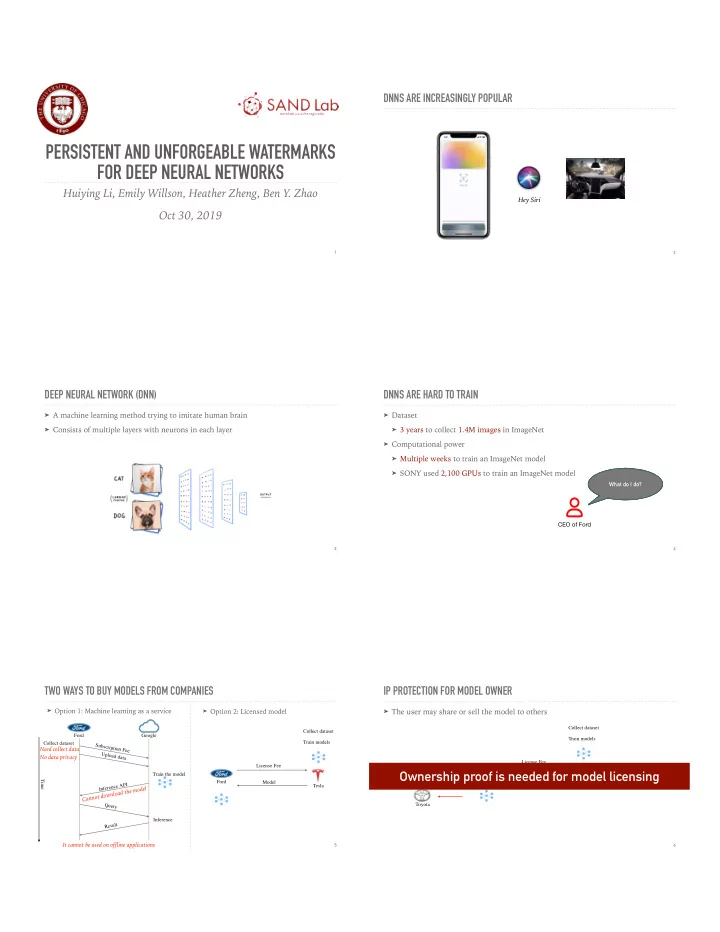

PERSISTENT AND UNFORGEABLE WATERMARKS FOR DEEP NEURAL NETWORKS
Huiying Li, Emily Willson, Heather Zheng, Ben Y. Zhao
Oct 30, 2019

DNNS ARE INCREASINGLY POPULAR
(Figure: "Hey Siri")

DEEP NEURAL NETWORK (DNN)
➤ A machine learning method that tries to imitate the human brain
➤ Consists of multiple layers, with neurons in each layer

DNNS ARE HARD TO TRAIN
➤ Dataset
  ➤ 3 years to collect 1.4M images in ImageNet
➤ Computational power
  ➤ Multiple weeks to train an ImageNet model
  ➤ SONY used 2,100 GPUs to train an ImageNet model
CEO of Ford: "I want to deploy autopilot mode in my cars. But I don't want to spend a lot of time and money to collect data and buy GPUs! What do I do?"

TWO WAYS TO BUY MODELS FROM COMPANIES
➤ Option 1: Machine learning as a service
  ➤ The customer (Ford) still needs to collect data and upload it to the provider, so there is no data privacy
  ➤ The provider trains the model; the customer pays a subscription fee, sends queries to an inference API and receives inference results
  ➤ The model cannot be downloaded, so it cannot be used in offline applications
➤ Option 2: Licensed model
  ➤ The vendor (Google) collects the dataset and trains the models
  ➤ Customers (Ford, Tesla, Toyota) each pay a license fee and receive the model

IP PROTECTION FOR MODEL OWNER
➤ The user may share or sell the model to others (e.g., over time, Ford's licensed model ends up with Tesla)
➤ Ownership proof is needed for model licensing

WATERMARKS ARE WIDELY USED FOR OWNERSHIP PROOF
➤ Image
➤ Video
➤ Audio
(Image source: https://phlearn.com/tutorial/best-way-watermark-images-photoshop/)

TO PROVE OWNERSHIP, WE PROPOSE A DNN WATERMARKING SYSTEM

OUTLINE
➤ Threat model and requirements
➤ Existing work
➤ Our watermark design
➤ Analysis and results

THREAT MODEL
➤ Users may misuse a licensed model by:
  ➤ Leaking it (e.g., selling it to others)
  ➤ Using it after the license expires
Solution: DNN watermarks

ATTACKS ON WATERMARKS
➤ An attacker may attempt to modify the model to:
  ➤ Remove the owner's watermark
  ➤ Add a new watermark of their own
  ➤ Claim the owner's watermark as their own

REQUIREMENTS
➤ Persistence
  ➤ Cannot be removed by model modifications
➤ Piracy resistance
  ➤ Cannot embed a new watermark
➤ Authentication
  ➤ There should exist a verifiable link between the owner and the watermark

OUTLINE: EXISTING WORK

METHOD 1: EMBED WATERMARK BY REGULARIZER [Uchida et al. ICMR'17]
➤ Embed a statistical bias into the model using a regularizer that constrains the weights
➤ Extract the statistical bias to verify the watermark

METHOD 1: PROPERTIES [Uchida et al. ICMR'17]
➤ Persistence: an attacker is able to detect and remove the watermark
➤ Piracy resistance: it is easy to embed a new watermark
➤ Authentication: there is no verifiable link between the owner and the watermark

METHOD 2: EMBED WATERMARK USING BACKDOOR [Zhang et al. ASIACCS'18]
➤ Embed a backdoor pattern into the model as the watermark
➤ Any input containing the backdoor pattern will produce the same output
(Figure: "One way", "Speed limit" and "Stop sign" images are all classified as "Speed limit" once the backdoor pattern is added.)

METHOD 2: PROPERTIES [Zhang et al. ASIACCS'18]
➤ Persistence: an attacker is able to detect and remove the watermark
➤ Piracy resistance: it is easy to embed a new watermark
➤ Authentication: there is no verifiable link between the owner and the watermark

METHOD 3: EMBED WATERMARK USING CRYPTOGRAPHIC COMMITMENTS [Adi et al. USENIX Security'18]
➤ Generate a set of N images and labels, (image_i, L_i) × N, as key pairs and train them into the model
➤ Use commitments to lock the key pairs
➤ Use the commitments to provide authentication: only the owner can open a commitment for the verifier
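Method 3 locks each key pair with a cryptographic commitment that only the owner can open. As a rough illustration, the sketch below uses a generic hash-based commitment (SHA-256 over a random 32-byte nonce followed by a serialized key pair). This construction, the byte serialization of a key pair, and the names `commit` and `open_commitment` are assumptions for illustration, not necessarily the scheme Adi et al. use.

```python
import hashlib
import secrets
from typing import Tuple


def commit(key_pair: bytes) -> Tuple[bytes, bytes]:
    """Commit to one serialized (image, label) key pair (hypothetical encoding).

    Returns (commitment, nonce). The owner publishes the commitment and keeps
    the key pair and nonce secret until verification.
    """
    nonce = secrets.token_bytes(32)  # fixed-length random blinding value hides the key pair
    commitment = hashlib.sha256(nonce + key_pair).digest()
    return commitment, nonce


def open_commitment(commitment: bytes, key_pair: bytes, nonce: bytes) -> bool:
    """Verifier recomputes the hash from the revealed key pair and nonce."""
    recomputed = hashlib.sha256(nonce + key_pair).digest()
    return secrets.compare_digest(recomputed, commitment)  # constant-time comparison


if __name__ == "__main__":
    # Hypothetical serialization of key pairs; a real system would commit to raw image bytes.
    key_pairs = [b"image_0|Speed limit", b"image_1|Stop sign"]
    published = [commit(kp) for kp in key_pairs]
    for kp, (c, n) in zip(key_pairs, published):
        print(kp, open_commitment(c, kp, n))
```

Because the nonce has a fixed length, `nonce + key_pair` parses unambiguously, and a verifier who sees only the published digest learns nothing useful about the key pair until the owner reveals the nonce.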

METHOD 3: PROPERTIES [Adi et al. USENIX Security'18]
➤ Authentication: provided by the commitment scheme
➤ Piracy resistance: it is easy to embed a new watermark
➤ Persistence: embedding a new watermark can corrupt the original one

CHALLENGE
➤ DNNs are designed to be trainable
  ➤ Fine-tuning
  ➤ Transfer learning
➤ However, this essentially goes against the requirements for a watermark:
  ➤ We want a persistent watermark in a trainable model
Need new training techniques to embed watermarks

OUTLINE: OUR WATERMARK DESIGN
➤ New training techniques: out-of-bound values and null embedding
➤ Watermark design using "wonder filters"

TWO NEW TRAINING TECHNIQUES
➤ To achieve persistence: out-of-bound values
  ➤ Cannot be modified later
➤ To achieve piracy resistance: null embedding
  ➤ Can only be embedded during initial training
  ➤ Adding a new null embedding will break normal accuracy

WHAT ARE OUT-OF-BOUND VALUES?
➤ Out-of-bound values are input values extremely far outside the normal value range
  ➤ Images have a pixel value range of [0, 255]
  ➤ However, the model can accept any value as input
What happens when inputs with out-of-bound values are fed into a model?

WHY OUT-OF-BOUND VALUES?
➤ We cannot modify a pre-trained model using inputs with out-of-bound values
  ➤ Out-of-bound inputs produce binary vector outputs
  ➤ When computing the loss against one-hot labels, an undefined value appears
(Figure: model inference, then compute loss, then back propagation. A normal input such as [10, 10, 10] yields a soft probability vector (0.021, 0.039, …, 0.901), while an out-of-bound input such as [2000, 2000, 2000] yields a binary vector (0, 1, …, 0).)
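The effect of out-of-bound inputs can be reproduced on a toy model. The sketch below assumes a hypothetical three-class linear model on the pixel sum (the values in `WEIGHTS` are made up) followed by a softmax, and evaluates the binary cross-entropy ℓ = −Σ_i [y_i log p_i + (1 − y_i) log(1 − p_i)] directly. A normal-range input gives a finite loss; the out-of-bound input saturates the softmax so that one probability rounds to exactly 1.0 in float64, which produces a log(0) term. The function returns `math.inf` to flag that case.

```python
import math
from typing import List, Sequence

# Hypothetical per-class weights of a toy 3-class linear model (made up for illustration).
WEIGHTS = [0.05, 0.0, -0.05]


def toy_model(pixels: Sequence[float]) -> List[float]:
    """Linear layer on the pixel sum, followed by a numerically stable softmax."""
    s = sum(pixels)
    logits = [w * s for w in WEIGHTS]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]  # subtract the max logit to avoid overflow
    total = sum(exps)
    return [e / total for e in exps]


def binary_cross_entropy(p: Sequence[float], y: Sequence[int]) -> float:
    """l = -sum_i [y_i log p_i + (1 - y_i) log(1 - p_i)], with 0 * log(0) taken as 0."""
    total = 0.0
    for p_i, y_i in zip(p, y):
        for weight, prob in ((y_i, p_i), (1 - y_i, 1.0 - p_i)):
            if weight == 0:
                continue  # convention: a zero coefficient contributes nothing
            if prob == 0.0:
                return math.inf  # a log(0) term: the loss diverges, no usable gradient
            total += weight * math.log(prob)
    return -total


if __name__ == "__main__":
    label = [0, 1, 0]  # one-hot label for class 1
    for pixels in ([10, 10, 10], [2000, 2000, 2000]):
        p = toy_model(pixels)
        print(pixels, [round(v, 3) for v in p], binary_cross_entropy(p, label))
```

With [2000, 2000, 2000] the logits are ±300, so the two smaller softmax terms are negligible next to 1.0 and the largest probability becomes exactly 1.0; the term (1 − y_0) log(1 − p_0) is then log(0).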

WHY OUT-OF-BOUND VALUES? (CONTINUED)
➤ With the binary cross-entropy loss

$$\ell = -\sum_i \left[ y_i \log p_i + (1 - y_i) \log (1 - p_i) \right]$$

a binary output $p = (0, 1, \dots, 0)$ and a one-hot label $y = (1, 0, \dots, 0)$ give

$$\ell = -\left[ (1 \cdot \log 0 + 0 \cdot \log 1) + (0 \cdot \log 1 + 1 \cdot \log 0) + \dots + (0 \cdot \log 0 + 1 \cdot \log 1) \right]$$

➤ The sum contains $\log 0$, so the loss is undefined and back propagation cannot produce meaningful weight updates

WHAT IS NULL EMBEDDING?
➤ A training technique that makes the model ignore a pattern when classifying
➤ For all inputs containing a certain pattern, train them to their original labels
(Figure: "One way", "Speed limit" and "Stop sign" images with the pattern added keep their original labels.)

WHY NULL EMBEDDING?
➤ Can only be trained into a model during initial model training
➤ Adding a new null embedding to a pre-trained model "breaks" normal classification
➤ Possible reasons:
  ➤ Null embedding teaches the model to change its input space
  ➤ Changing the input space of a pre-trained model disrupts the classification rules the model has learned

USING NULL EMBEDDING
➤ Use "out-of-bound" pixel values when null-embedding a pattern
  ➤ Ensures the model will ignore any "normal" pixel values in that pattern
➤ Final result?
  ➤ Any null embedding of a pattern must be trained into the model by the owner at initial training
  ➤ Only the true owner can generate a DNN with a null embedding
➤ Downside: changing a null embedding requires retraining the model from scratch (but this is what we wanted)

WONDER FILTERS
➤ A mechanism combining out-of-bound values and null embedding
➤ Persistence: use out-of-bound values to design a pattern
➤ Authentication: embed bits by using different values in the pattern
➤ Piracy resistance: use null embedding to embed the pattern

WONDER FILTERS: HOW TO DESIGN THE PATTERN
➤ Created using a mask on the input and a target label
  ➤ The mask contains a pattern that can be applied to the input of a model
➤ Encode bits with out-of-bound values
  ➤ Fill in 2000 and -2000
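A minimal sketch of how a wonder filter and its two training sets could be built. It assumes images are small nested lists of pixel values, that bit 1 maps to +2000 and bit 0 to -2000, and that "inverting" the pattern means flipping the sign of each out-of-bound value; that mapping, the mask positions, and all function names are hypothetical. Following the deck, the original pattern is trained to the target label (normal embedding) and the inverted pattern is trained to each input's original label (null embedding).

```python
from typing import Dict, List, Sequence, Tuple

Image = List[List[float]]
Position = Tuple[int, int]
WonderFilter = Dict[Position, float]

OUT_OF_BOUND = 2000.0  # magnitude used on the slides; normal pixels lie in [0, 255]


def make_filter(bits: Sequence[int], positions: Sequence[Position]) -> WonderFilter:
    """Encode one bit per mask position: 1 -> +2000, 0 -> -2000 (assumed mapping)."""
    if len(bits) != len(positions):
        raise ValueError("need exactly one mask position per bit")
    return {pos: OUT_OF_BOUND if bit else -OUT_OF_BOUND for pos, bit in zip(positions, bits)}


def invert_filter(wf: WonderFilter) -> WonderFilter:
    """Invert the pattern by flipping the sign of every out-of-bound value."""
    return {pos: -value for pos, value in wf.items()}


def apply_filter(image: Image, wf: WonderFilter) -> Image:
    """Return a copy of the image with the masked pixels overwritten by the pattern."""
    out = [row[:] for row in image]
    for (r, c), value in wf.items():
        out[r][c] = value
    return out


def build_training_sets(dataset: Sequence[Tuple[Image, str]], wf: WonderFilter,
                        target_label: str):
    """Normal embedding: pattern -> target label. Null embedding: inverted pattern -> original label."""
    normal_embedding = [(apply_filter(img, wf), target_label) for img, _ in dataset]
    inverted = invert_filter(wf)
    null_embedding = [(apply_filter(img, inverted), label) for img, label in dataset]
    return normal_embedding, null_embedding


if __name__ == "__main__":
    blank = [[0.0] * 3 for _ in range(3)]  # hypothetical 3x3 single-channel image
    wf = make_filter([1, 0], [(0, 0), (1, 1)])
    normal, null = build_training_sets([(blank, "Stop sign")], wf, "Speed limit")
    print(normal[0][1], null[0][1], null[0][0][0][0])
```

Both sets would be mixed with normal data during the owner's initial training; the sketch only builds the inputs and labels, not the training loop.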

WONDER FILTERS: HOW TO EMBED THE PATTERN
➤ Authentication and persistence
  ➤ Embed the original pattern using normal embedding
➤ Piracy resistance
  ➤ Invert the original pattern
  ➤ Embed the inverted pattern using null embedding

WATERMARK DESIGN
➤ Embed a private-key-associated wonder filter as the watermark
➤ Generation
  ➤ Use the owner's key to generate a wonder filter
➤ Injection
  ➤ Embed the wonder filter during initial training
➤ Verification
  ➤ Verify that the wonder filter is associated with the owner's key
  ➤ Verify that the wonder filter is embedded in the model

WATERMARK - GENERATION
➤ Generate a signature over a verification string ("This model has a watermark") using the owner's private key
➤ Get information about the wonder filter (e.g., bits such as 0010111…) by hashing the signature
(Figure: the resulting wonder filter and its inverted copy.)

WATERMARK - INJECTION
➤ Embed the watermark during initial training
  ➤ Training data: normal data, normal embedding data (inputs with the wonder filter) and null embedding data (inputs with the inverted filter)

WATERMARK - VERIFICATION
➤ Verify that the owner generated the watermark
  ➤ Check the signature over the verification string with the owner's public key, and check that the hash of the signature, F(signature), matches the wonder filter
➤ Verify that the watermark is embedded in the model
  ➤ Test the model with normal embedding data and null embedding data
➤ If both checks match: the owner created this model
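The generation step and the first verification step can be sketched with the standard library alone. The sketch assumes textbook RSA with tiny primes as the signature scheme (INSECURE, used only so the example has no dependencies) and a hypothetical F that seeds Python's `random` with SHA-256 of the signature to pick distinct mask positions and bits. The names `sign`, `verify_signature`, `filter_from_signature` and `verify_ownership` are illustrative, not the authors' API. The second check, querying the model with normal and null embedding data, is not shown.

```python
import hashlib
import random
from typing import List, Tuple

# Textbook RSA with tiny primes: INSECURE, illustration only.
# A real deployment would use a standard signature scheme from a vetted library.
P, Q = 61, 53
N = P * Q                          # public modulus (3233)
E = 17                             # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent via modular inverse (Python 3.8+)

VERIFICATION_STRING = b"This model has a watermark"


def _digest_mod_n(message: bytes) -> int:
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Owner signs the verification string with the private key (N, D)."""
    return pow(_digest_mod_n(message), D, N)


def verify_signature(message: bytes, signature: int) -> bool:
    """Anyone can check the signature with the public key (N, E)."""
    return pow(signature, E, N) == _digest_mod_n(message)


def filter_from_signature(signature: int, height: int = 8, width: int = 8,
                          n_bits: int = 6) -> List[Tuple[Tuple[int, int], int]]:
    """Hypothetical F(signature): hash the signature, then derive mask positions and bits."""
    seed = hashlib.sha256(signature.to_bytes(4, "big")).digest()
    rng = random.Random(seed)  # deterministic for a given signature (not a CSPRNG)
    cells = rng.sample(range(height * width), n_bits)  # distinct mask cells
    return [(divmod(cell, width), rng.getrandbits(1)) for cell in cells]


def verify_ownership(signature: int, claimed_filter) -> bool:
    """First check: the signature is valid and F(signature) reproduces the claimed filter."""
    return (verify_signature(VERIFICATION_STRING, signature)
            and filter_from_signature(signature) == claimed_filter)


if __name__ == "__main__":
    sig = sign(VERIFICATION_STRING)
    wf = filter_from_signature(sig)
    print("filter:", wf)
    print("owner verified:", verify_ownership(sig, wf))
```

Deriving the filter from the signature is what ties the watermark to the owner: an attacker without the private key cannot produce a valid signature whose hash yields a filter already embedded in the model.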
